Shuoyi Chen

Adaptive High-Frequency Transformer for Diverse Wildlife Re-Identification

Oct 09, 2024

Abstract: Wildlife ReID involves utilizing visual technology to identify specific individuals of wild animals in different scenarios, holding significant importance for wildlife conservation, ecological research, and environmental monitoring. Existing wildlife ReID methods are predominantly tailored to specific species, exhibiting limited applicability. Although some approaches leverage extensively studied person ReID techniques, they struggle to address the unique challenges posed by wildlife. Therefore, in this paper, we present a unified, multi-species general framework for wildlife ReID. Given that high-frequency information is a consistent representation of unique features in various species, significantly aiding in identifying contours and details such as fur textures, we propose the Adaptive High-Frequency Transformer model with the goal of enhancing high-frequency information learning. To mitigate the inevitable high-frequency interference in the wilderness environment, we introduce an object-aware high-frequency selection strategy to adaptively capture more valuable high-frequency components. Notably, we unify the experimental settings of multiple wildlife datasets for ReID, achieving superior performance over state-of-the-art ReID methods. In domain generalization scenarios, our approach demonstrates robust generalization to unknown species.
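To make "high-frequency information" concrete, the following is a minimal sketch of a generic FFT high-pass filter on a grayscale image. The abstract does not say how the model extracts high-frequency components, so the function name `high_pass`, the `cutoff` parameter, and the hard radial mask are all assumptions for illustration, not the paper's method.

```python
import numpy as np

def high_pass(image: np.ndarray, cutoff: float = 0.1) -> np.ndarray:
    """Keep only spatial frequencies whose radius exceeds `cutoff`.

    `cutoff` is in cycles per pixel (hypothetical parameter); the
    highest representable frequency along one axis is 0.5.
    """
    h, w = image.shape
    fy = np.fft.fftfreq(h)[:, None]   # vertical frequencies, cycles/pixel
    fx = np.fft.fftfreq(w)[None, :]   # horizontal frequencies, cycles/pixel
    radius = np.sqrt(fx ** 2 + fy ** 2)
    mask = radius > cutoff            # hard mask: drops DC and slow variations
    spectrum = np.fft.fft2(image)
    return np.real(np.fft.ifft2(spectrum * mask))

# Toy input: a flat background plus a fine checkerboard "texture".
flat = np.full((8, 8), 3.0)
checker = np.indices((8, 8)).sum(axis=0) % 2 * 2.0 - 1.0
texture_only = high_pass(flat + checker)  # background removed, texture kept
```

On this toy input the flat background is removed and the checkerboard survives, which is the sense in which fine details such as fur texture live in the high-frequency band. Background clutter such as foliage also lands in that band, which is why a selection step is needed on top of plain filtering.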

Transformer for Object Re-Identification: A Survey

Jan 13, 2024

Abstract: Object Re-Identification (Re-ID) aims to identify and retrieve specific objects from varying viewpoints. For a prolonged period, this field has been predominantly driven by deep convolutional neural networks. In recent years, the Transformer has witnessed remarkable advancements in computer vision, prompting an increasing body of research to delve into the application of Transformers in Re-ID. This paper provides a comprehensive review and in-depth analysis of Transformer-based Re-ID. In categorizing existing works into Image/Video-Based Re-ID, Re-ID with limited data/annotations, Cross-Modal Re-ID, and Special Re-ID Scenarios, we thoroughly elucidate the advantages demonstrated by the Transformer in addressing a multitude of challenges across these domains. Considering the trending unsupervised Re-ID, we propose a new Transformer baseline, UntransReID, achieving state-of-the-art performance on both single-modal and cross-modal tasks. Besides, this survey also covers a wide range of Re-ID research objects, including progress in animal Re-ID. Given the diversity of species in animal Re-ID, we devise a standardized experimental benchmark and conduct extensive experiments to explore the applicability of the Transformer for this task to facilitate future research. Finally, we discuss some important yet under-investigated open issues in the big foundation model era. We believe this survey will serve as a new handbook for researchers in this field.
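The retrieval task described at the start of this abstract can be sketched as ranking a gallery of feature vectors by similarity to a query. The code below is an illustrative sketch only: the feature vectors are toy values, cosine similarity is an assumed choice of metric, and `rank_gallery` and `rank1_accuracy` are hypothetical helper names, not part of any surveyed method.

```python
import numpy as np

def rank_gallery(query: np.ndarray, gallery: np.ndarray) -> np.ndarray:
    """Return gallery indices sorted from most to least similar (cosine)."""
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    return np.argsort(-(g @ q))

def rank1_accuracy(queries, query_ids, gallery, gallery_ids) -> float:
    """Fraction of queries whose top-ranked gallery item has the same identity."""
    hits = [
        gallery_ids[rank_gallery(q, gallery)[0]] == qid
        for q, qid in zip(queries, query_ids)
    ]
    return float(np.mean(hits))

# Toy features: three gallery items with identities 0, 1, 2.
gallery = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
gallery_ids = np.array([0, 1, 2])
queries = np.array([[0.9, 0.1], [0.1, 0.9]])
query_ids = np.array([0, 1])

order = rank_gallery(queries[0], gallery)
score = rank1_accuracy(queries, query_ids, gallery, gallery_ids)
```

In a real system the features would come from a trained backbone (convolutional or Transformer), and the gallery would typically exclude images from the same camera as the query; both details are omitted here.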

Rotation Invariant Transformer for Recognizing Object in UAVs

Nov 05, 2023

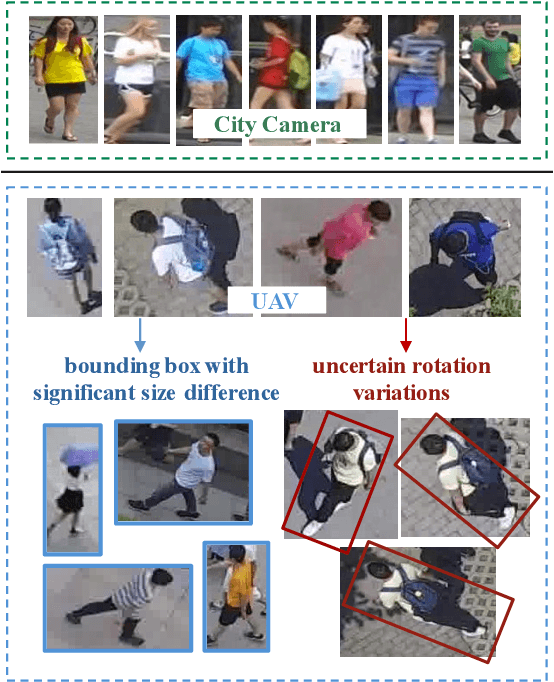

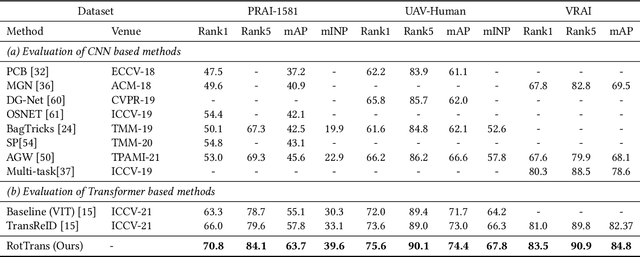

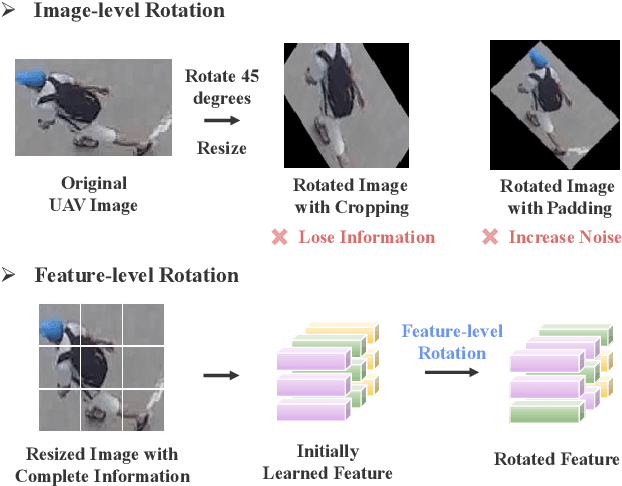

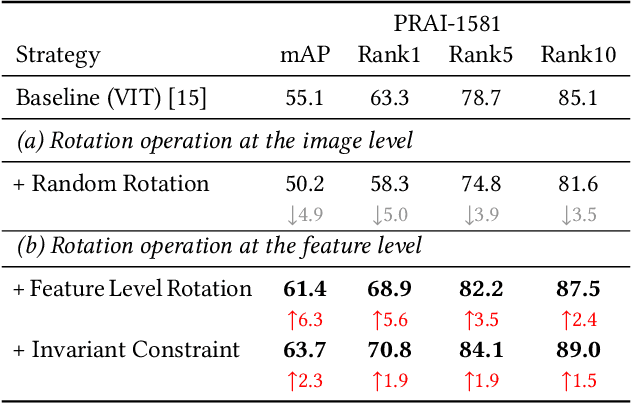

Abstract: Recognizing a target of interest from UAVs is much more challenging than existing object re-identification tasks across multiple city cameras. Images taken by UAVs usually suffer from significant size differences in the generated object bounding boxes and from uncertain rotation variations. Existing methods are usually designed for city cameras and are incapable of handling the rotation issue in UAV scenarios. A straightforward solution is to perform image-level rotation augmentation, but this causes a loss of useful information when the image is fed to the vision transformer as patches. This motivates us to simulate the rotation operation at the patch feature level, proposing a novel rotation invariant vision transformer (RotTrans). This strategy builds on high-level features and exploits the specific structure of the vision transformer, which enhances robustness against large rotation differences. In addition, we design an invariance constraint to establish the relationship between the original feature and the rotated features, achieving stronger rotation invariance. Our proposed transformer, tested on the latest UAV datasets, greatly outperforms the current state of the art, exceeding the previous highest mAP and Rank-1 by 5.9% and 4.8%, respectively. Notably, our model also performs competitively on the person re-identification task with traditional city cameras. In particular, our solution won first place in the UAV-based person re-recognition track of the Multi-Modal Video Reasoning and Analyzing Competition held at ICCV 2021. Code is available at https://github.com/whucsy/RotTrans.
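As a rough illustration of rotating at the patch feature level rather than the image level, the sketch below rearranges a grid of patch tokens instead of resampling pixels. It is a simplification under stated assumptions: it handles only multiples of 90 degrees, it ignores any class token, and `rotate_patch_tokens` is a hypothetical name. The abstract does not specify how RotTrans implements the operation; the linked repository is the authoritative source.

```python
import numpy as np

def rotate_patch_tokens(tokens: np.ndarray, grid_h: int, grid_w: int, k: int = 1) -> np.ndarray:
    """Rotate a row-major grid of patch tokens by k * 90 degrees counter-clockwise.

    tokens: array of shape (grid_h * grid_w, dim), one feature vector per patch.
    Each feature vector is moved to a new grid position but is itself unchanged,
    so no pixel content is interpolated or cropped away.
    """
    n, dim = tokens.shape
    if n != grid_h * grid_w:
        raise ValueError("token count does not match the patch grid")
    grid = tokens.reshape(grid_h, grid_w, dim)
    rotated = np.rot90(grid, k=k, axes=(0, 1))
    return rotated.reshape(-1, dim)

# Toy example: a 2 x 3 grid of patches with 4-dimensional features.
tokens = np.arange(24, dtype=float).reshape(6, 4)
rotated_once = rotate_patch_tokens(tokens, grid_h=2, grid_w=3, k=1)
rotated_twice = rotate_patch_tokens(tokens, grid_h=2, grid_w=3, k=2)
```

After a 90-degree rotation the grid becomes 3 x 2, so any positional information tied to the original layout has to be handled separately. An invariance constraint of the kind the abstract mentions would then compare features derived from `tokens` with those derived from the rotated copies; its exact form is not given in the abstract and is not sketched here.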
