Shunyao Li

A self-adapting super-resolution structures framework for automatic design of GAN

Jun 10, 2021

Abstract: With the development of deep learning, single-image super-resolution reconstruction network models are becoming more and more complex. Small changes in a model's hyperparameters can have a large impact on its performance. In existing work, experts have gradually arrived at a set of optimal model parameters based on empirical values or brute-force search. In this paper, we introduce a new generative adversarial network framework for super-resolution image reconstruction, together with a Bayesian optimization method for optimizing the hyperparameters of the generator and discriminator. The generator is built from self-calibrated convolutions, and the discriminator is built from convolutional layers. We define hyperparameters such as the number of network layers and the number of neurons. Our method adopts Bayesian optimization as the optimization policy for the GAN in our model. It not only finds the optimal hyperparameter solution automatically but also constructs a super-resolution image reconstruction network, reducing the manual workload. Experiments show that Bayesian optimization finds the optimal solution earlier than the other two optimization algorithms.
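To make the idea of Bayesian hyperparameter search concrete, here is a minimal sketch. It is not the paper's implementation: the Gaussian-process surrogate with an RBF kernel, the upper-confidence-bound acquisition rule, the single hyperparameter (`num_layers`) and the `toy_score` objective are all assumptions chosen for illustration. In practice the objective would be a validation metric obtained by training the GAN.

```python
import math

def rbf(a, b, length=2.0):
    """Squared-exponential kernel between two scalar inputs."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)

def cholesky(A):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(A[i][i] - s)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L

def forward_sub(L, b):
    """Solve L y = b for lower-triangular L."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y

def backward_sub(L, y):
    """Solve L^T x = y for lower-triangular L."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def gp_posterior(xs, ys, x_new, noise=1e-4):
    """Posterior mean and variance of a zero-mean GP at x_new."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    L = cholesky(K)
    alpha = backward_sub(L, forward_sub(L, ys))
    k_star = [rbf(a, x_new) for a in xs]
    mean = sum(k * a for k, a in zip(k_star, alpha))
    v = forward_sub(L, k_star)
    var = max(rbf(x_new, x_new) - sum(t * t for t in v), 0.0)
    return mean, var

def bayes_opt(objective, candidates, init, budget, kappa=2.0):
    """Maximize objective over candidates using a GP surrogate and UCB."""
    xs = list(init)
    ys = [objective(x) for x in xs]
    while len(xs) < budget:
        # Standardize observed scores so the zero-mean, unit-variance prior fits.
        mu = sum(ys) / len(ys)
        sd = math.sqrt(sum((y - mu) ** 2 for y in ys) / len(ys)) or 1.0
        zs = [(y - mu) / sd for y in ys]

        def ucb(x):
            m, v = gp_posterior(xs, zs, x)
            return m + kappa * math.sqrt(v)

        # Evaluate the untried candidate with the highest acquisition value.
        nxt = max((c for c in candidates if c not in xs), key=ucb)
        xs.append(nxt)
        ys.append(objective(nxt))
    best = max(range(len(xs)), key=lambda i: ys[i])
    return xs[best], ys[best], list(zip(xs, ys))

def toy_score(num_layers):
    """Hypothetical stand-in for a validation score; peaks at 12 layers."""
    return -float((num_layers - 12) ** 2)

best_layers, best_score, history = bayes_opt(
    toy_score, range(1, 21), init=[1, 20], budget=8
)
```

The loop spends its whole budget on distinct candidates, so `history` records every configuration tried. A brute-force search would instead evaluate all 20 candidates.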

Super-Resolution Image Reconstruction Based on Self-Calibrated Convolutional GAN

Jun 10, 2021

Abstract: With the effective application of deep learning in computer vision, breakthroughs have been made in research on super-resolution image reconstruction. However, many studies have pointed out that insufficient extraction of image features by the neural network may degrade the reconstructed image. In addition, the generated images are sometimes too artificial because of over-smoothing. To solve these problems, we propose a novel self-calibrated convolutional generative adversarial network. The generator consists of feature extraction and image reconstruction. Feature extraction uses self-calibrated convolutions, which contain four parts, each with a specific function. They not only expand the range of the receptive fields but also capture long-range spatial and inter-channel dependencies. Image reconstruction is then performed to produce the final super-resolution image. We have conducted thorough experiments on different datasets, including Set5, Set14 and BSD100, under the SSIM evaluation method. The experimental results demonstrate the effectiveness of the proposed network.
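For readers unfamiliar with the SSIM metric mentioned above, the following sketch computes a simplified, single-window SSIM over two flattened grayscale images. It assumes 8-bit pixel values and the commonly used constants K1 = 0.01 and K2 = 0.03. Standard evaluations average SSIM over local sliding windows, so this global version illustrates the formula only and is not the evaluation code used in the paper.

```python
def ssim_global(x, y, max_val=255.0):
    """Single-window SSIM between two equal-length pixel sequences."""
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    # Stabilizing constants for the luminance and contrast/structure terms.
    c1 = (0.01 * max_val) ** 2
    c2 = (0.03 * max_val) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x * mu_x + mu_y * mu_y + c1) * (var_x + var_y + c2)
    return numerator / denominator

# Hypothetical pixel data: a gradient and an over-smoothed (constant) version.
reference = [0.0, 64.0, 128.0, 192.0, 255.0, 192.0, 128.0, 64.0]
flat = [sum(reference) / len(reference)] * len(reference)

score_same = ssim_global(reference, reference)
score_flat = ssim_global(reference, flat)
```

An image compared with itself scores 1, while the constant image scores lower because it has lost all of the reference's contrast and structure.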

Pun-GAN: Generative Adversarial Network for Pun Generation

Oct 24, 2019

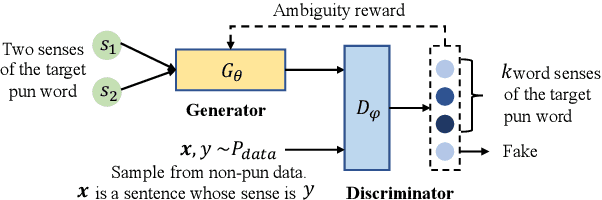

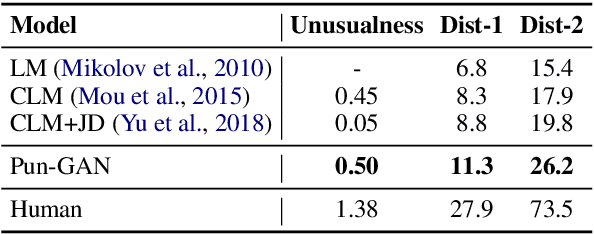

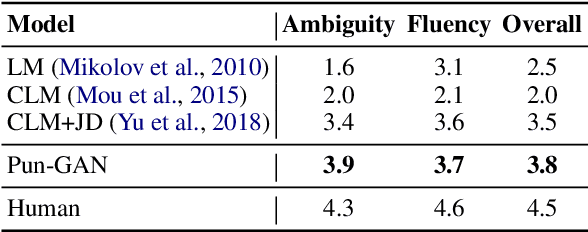

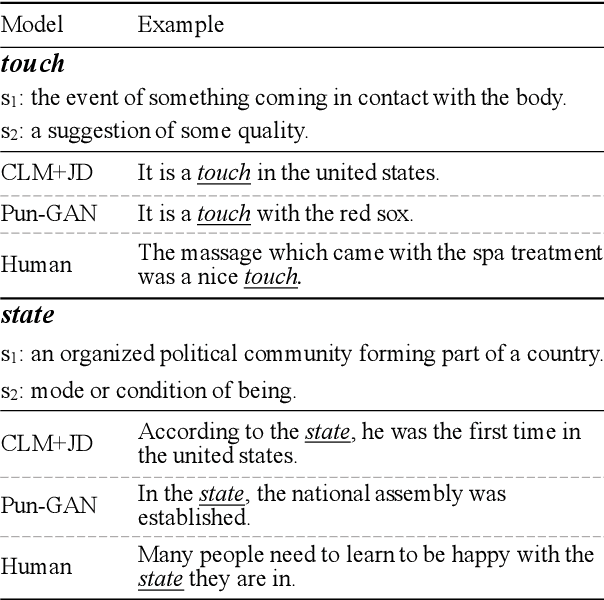

Abstract: In this paper, we focus on the task of generating a pun sentence given a pair of word senses. A major challenge for pun generation is the lack of a large-scale pun corpus to guide supervised learning. To remedy this, we propose an adversarial generative network for pun generation (Pun-GAN), which does not require any pun corpus. It consists of a generator that produces pun sentences and a discriminator that distinguishes between the generated pun sentences and real sentences with specific word senses. The output of the discriminator is then used as a reward to train the generator via reinforcement learning, encouraging it to produce pun sentences that can support two word senses simultaneously. Experiments show that the proposed Pun-GAN can generate sentences that are more ambiguous and diverse in both automatic and human evaluation.
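The sketch below shows one way a discriminator's output can serve as a reinforcement-learning reward. It is a toy under stated assumptions: the abstract does not name the algorithm, so a REINFORCE-style policy-gradient update is assumed here, the "generator" is a softmax over a four-word vocabulary rather than a sentence model, and `toy_discriminator` is a hypothetical reward function standing in for a trained discriminator.

```python
import math
import random

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def toy_discriminator(token):
    """Hypothetical reward: 1.0 for the ambiguous word 'bank', else 0.0."""
    return 1.0 if token == "bank" else 0.0

def train_generator(vocab, reward_fn, steps=500, lr=0.5, seed=0):
    """Train a one-token softmax policy with a REINFORCE-style update."""
    rng = random.Random(seed)
    logits = [0.0] * len(vocab)
    for _ in range(steps):
        probs = softmax(logits)
        # Sample an action (a token) from the current policy.
        idx = rng.choices(range(len(vocab)), weights=probs)[0]
        reward = reward_fn(vocab[idx])
        # Gradient of log p(idx) w.r.t. the logits is one_hot(idx) - probs.
        for j in range(len(logits)):
            grad = (1.0 if j == idx else 0.0) - probs[j]
            logits[j] += lr * reward * grad
    return softmax(logits)

vocab = ["river", "bank", "money", "tree"]
final_probs = train_generator(vocab, toy_discriminator)
bank_prob = final_probs[vocab.index("bank")]
```

Because only the rewarded token ever produces a non-zero update, its probability rises from the uniform starting value of 0.25 as training proceeds.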
