Shucheng Yu

Over-the-Air Federated Learning with Enhanced Privacy

Dec 22, 2022

Abstract: Federated learning (FL) has emerged as a promising learning paradigm in which only local model parameters (gradients) are shared. Private user data never leaves the local devices, thus preserving data privacy. However, recent research has shown that even when a user never shares local data, exchanging unprotected model parameters can still leak private information. Moreover, in wireless systems, the frequent transmission of model parameters can consume tremendous bandwidth and cause network congestion when the model is large. To address these problems, we propose a new FL framework with efficient over-the-air parameter aggregation and strong privacy protection of both user data and models. We achieve this by introducing pairwise cancellable random artificial noises (PCR-ANs) on end devices. Compared to existing over-the-air computation (AirComp) based FL schemes, our design provides stronger privacy protection. We analytically show the secrecy capacity and the convergence rate of the proposed wireless FL aggregation algorithm.
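The idea of pairwise cancellable noise can be illustrated with a minimal sketch. It is not the paper's PCR-AN construction: it assumes every pair of users shares one random vector that one user adds and the other subtracts, and it models over-the-air aggregation as a plain sum with no fading or channel noise. The function names `pairwise_cancellable_noise` and `aggregate` are hypothetical.

```python
import random


def pairwise_cancellable_noise(num_users, dim, seed=0):
    """Return one noise vector per user; all vectors together sum to zero.

    Assumption: each pair (i, j) shares a random vector that user i adds
    and user j subtracts, so every pairwise term cancels in the sum.
    """
    rng = random.Random(seed)
    noise = [[0.0] * dim for _ in range(num_users)]
    for i in range(num_users):
        for j in range(i + 1, num_users):
            shared = [rng.gauss(0.0, 1.0) for _ in range(dim)]
            for k in range(dim):
                noise[i][k] += shared[k]
                noise[j][k] -= shared[k]
    return noise


def aggregate(vectors):
    """Element-wise sum, standing in for superposition on the wireless channel."""
    return [sum(column) for column in zip(*vectors)]


# Toy local updates for three users (illustrative values only).
updates = [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.6]]
noise = pairwise_cancellable_noise(len(updates), dim=2)

# Each user transmits its update plus its own noise vector.
masked = [[u + n for u, n in zip(upd, nz)] for upd, nz in zip(updates, noise)]

plain_sum = aggregate(updates)
masked_sum = aggregate(masked)  # matches plain_sum up to floating-point error
```

In this toy model each transmitted vector looks random on its own, while the aggregate is unchanged because the noise terms cancel pairwise.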

BO-DBA: Query-Efficient Decision-Based Adversarial Attacks via Bayesian Optimization

Jun 04, 2021

Abstract: Decision-based attacks (DBA), wherein attackers perturb inputs to spoof learning algorithms by observing solely the output labels, are a severe type of adversarial attack against Deep Neural Networks (DNNs) because they require minimal knowledge on the attacker's side. State-of-the-art DBAs that rely on zeroth-order gradient estimation require an excessive number of queries. Recently, Bayesian optimization (BO) has shown promise in reducing the number of queries in score-based attacks (SBA), in which attackers need to observe real-valued probability scores as outputs. However, extending BO to the DBA setting is nontrivial because only output labels are available to attackers, not the real-valued scores that BO needs. In this paper, we close this gap by proposing an efficient DBA, namely BO-DBA. Unlike existing approaches, BO-DBA generates adversarial examples by searching so-called directions of perturbations. It then formulates the problem as a BO problem that minimizes the real-valued distortion of perturbations. With the optimized perturbation generation process, BO-DBA converges much faster than state-of-the-art DBA techniques. Experimental results on pre-trained ImageNet classifiers show that BO-DBA converges within 200 queries, while state-of-the-art DBA techniques need over 15,000 queries to achieve the same level of perturbation distortion. BO-DBA also achieves attack success rates similar to those of BO-based SBAs, but with less distortion.
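To see how label-only feedback can yield a real-valued objective, consider a minimal sketch that measures the distortion needed along one perturbation direction. It is an assumption about the general idea, not the BO-DBA implementation: the classifier `hard_label` is a toy stand-in, `boundary_distance` is a hypothetical helper that uses binary search on the perturbation radius, and the Bayesian optimizer that would choose directions is omitted.

```python
import math


def hard_label(x):
    """Toy label-only classifier (hypothetical): class 1 if x[0] + x[1] > 1."""
    return 1 if x[0] + x[1] > 1.0 else 0


def boundary_distance(x0, direction, label_fn, tol=1e-6, max_radius=1e3):
    """Smallest radius along `direction` at which the predicted label flips.

    Only hard labels are queried; the returned real-valued radius is the
    kind of quantity an optimizer over directions could then minimize.
    """
    norm = math.sqrt(sum(d * d for d in direction))
    unit = [d / norm for d in direction]
    original = label_fn(x0)

    def flipped(radius):
        point = [x + radius * u for x, u in zip(x0, unit)]
        return label_fn(point) != original

    # Grow the radius until the label changes, or give up.
    hi = 1.0
    while not flipped(hi):
        hi *= 2.0
        if hi > max_radius:
            return math.inf

    # Binary search between a non-adversarial and an adversarial radius.
    lo = 0.0
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if flipped(mid):
            hi = mid
        else:
            lo = mid
    return hi


x0 = [0.0, 0.0]
d_diag = boundary_distance(x0, [1.0, 1.0], hard_label)   # straight at the boundary
d_axis = boundary_distance(x0, [1.0, 0.0], hard_label)   # oblique direction
d_away = boundary_distance(x0, [-1.0, 0.0], hard_label)  # never crosses the boundary
```

In this toy setting the diagonal direction reaches the decision boundary with less distortion than the axis-aligned one, so a search over directions would prefer it.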

SAFELearning: Enable Backdoor Detectability In Federated Learning With Secure Aggregation

Feb 04, 2021

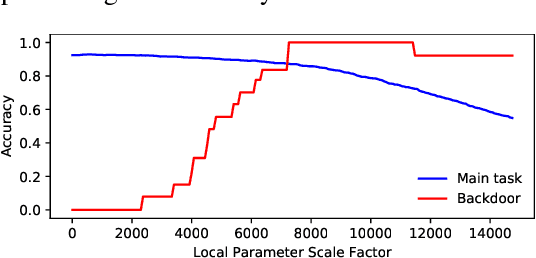

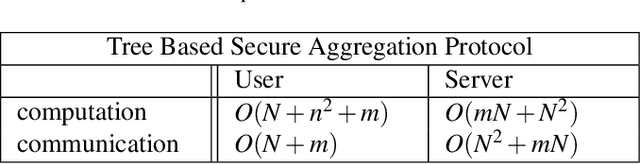

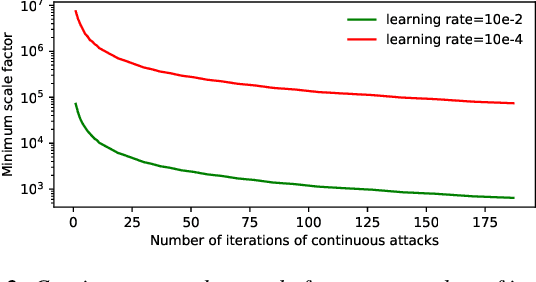

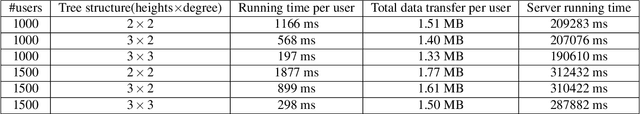

Abstract: For model privacy, local model parameters in federated learning should be obfuscated before being sent to the remote aggregator. This technique is referred to as secure aggregation. However, secure aggregation makes model poisoning attacks, e.g., backdoor insertion, easier because most existing anomaly detection methods require access to plaintext local models. This paper proposes SAFELearning, which supports backdoor detection for secure aggregation. We achieve this through two new primitives: oblivious random grouping (ORG) and partial parameter disclosure (PPD). ORG partitions participants into one-time random subgroups whose configurations are oblivious to participants; PPD allows secure partial disclosure of aggregated subgroup models for anomaly detection without leaking the privacy of individual models. SAFELearning significantly reduces backdoor model accuracy without jeopardizing main task accuracy under common backdoor strategies. Extensive experiments show that SAFELearning reduces backdoor accuracy from $100\%$ to $8.2\%$ for ResNet-18 over CIFAR-10 when $10\%$ of participants are malicious.
