SAFELearning: Enable Backdoor Detectability In Federated Learning With Secure Aggregation


Feb 04, 2021

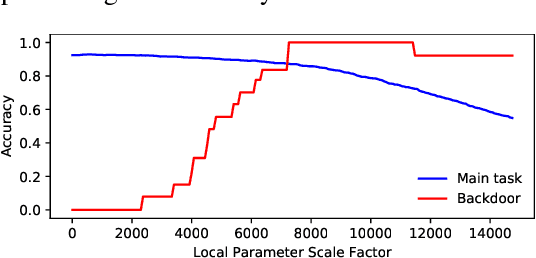

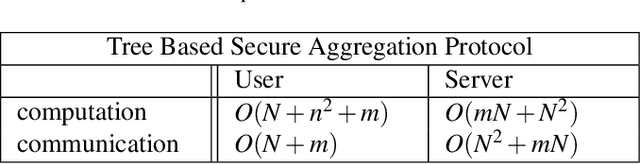

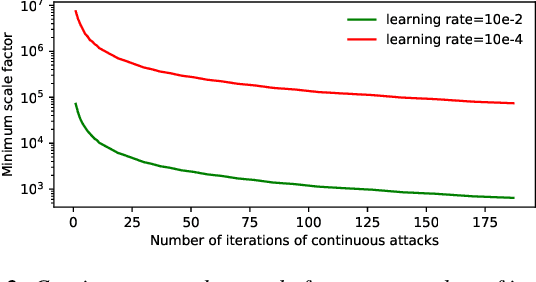

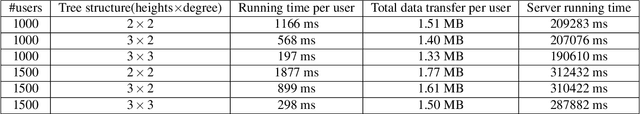

To preserve model privacy, local model parameters in federated learning are obfuscated before being sent to the remote aggregator, a technique referred to as *secure aggregation*. However, secure aggregation makes model poisoning attacks, such as backdoor insertion, easier to mount, because most existing anomaly detection methods require access to plaintext local models. This paper proposes SAFELearning, which supports backdoor detection under secure aggregation. We achieve this through two new primitives: *oblivious random grouping (ORG)* and *partial parameter disclosure (PPD)*. ORG partitions participants into one-time random subgroups whose configurations are oblivious to the participants; PPD allows secure partial disclosure of aggregated subgroup models for anomaly detection without compromising the privacy of individual models. SAFELearning significantly reduces backdoor accuracy without jeopardizing main task accuracy under common backdoor strategies. Extensive experiments show that SAFELearning reduces backdoor accuracy from 100% to 8.2% for ResNet-18 on CIFAR-10 when 10% of participants are malicious.
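To make the setting concrete, the following is a minimal sketch of additive secure aggregation with random subgroups. It is not the paper's protocol. It assumes a generic pairwise-masking scheme in which the masks cancel when updates are summed, and it uses a plain shuffle in place of ORG. It does not implement obliviousness or PPD. All names (`MODULUS`, `pairwise_masks`, `mask_update`, `aggregate`, `random_subgroups`) are hypothetical and chosen for illustration.

```python
import random

# Hypothetical modulus for masked integer arithmetic (an assumption of this sketch).
MODULUS = 2**31 - 1


def pairwise_masks(num_clients, dim, seed=0):
    """Build one mask vector per client so that all masks sum to zero mod MODULUS.

    Each pair (i, j) shares a random vector; client i adds it, client j subtracts it.
    """
    rng = random.Random(seed)
    masks = [[0] * dim for _ in range(num_clients)]
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            for k in range(dim):
                masks[i][k] = (masks[i][k] + shared[k]) % MODULUS
                masks[j][k] = (masks[j][k] - shared[k]) % MODULUS
    return masks


def mask_update(update, mask):
    """Obfuscate one client's (integer-encoded) update with its mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def aggregate(vectors):
    """Sum vectors coordinate-wise mod MODULUS; pairwise masks cancel in the sum."""
    dim = len(vectors[0])
    return [sum(v[k] for v in vectors) % MODULUS for k in range(dim)]


def random_subgroups(client_ids, group_size, seed=0):
    """Partition clients into random subgroups (plain shuffle, NOT oblivious)."""
    rng = random.Random(seed)
    ids = list(client_ids)
    rng.shuffle(ids)
    return [ids[i:i + group_size] for i in range(0, len(ids), group_size)]


if __name__ == "__main__":
    updates = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
    masks = pairwise_masks(len(updates), dim=3, seed=1)
    masked = [mask_update(u, m) for u, m in zip(updates, masks)]
    # The aggregator sees only masked vectors, yet recovers the exact sum.
    print(aggregate(masked))
    print(random_subgroups(range(len(updates)), group_size=2, seed=1))
```

In this sketch the aggregator never sees an individual plaintext update, which illustrates why anomaly detectors that inspect individual models cannot be applied directly. In a subgroup-based design, masks would be generated within each subgroup so that only per-subgroup sums become visible.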
