Shuailiang Zhang

Semantics-Aware Inferential Network for Natural Language Understanding

Apr 28, 2020

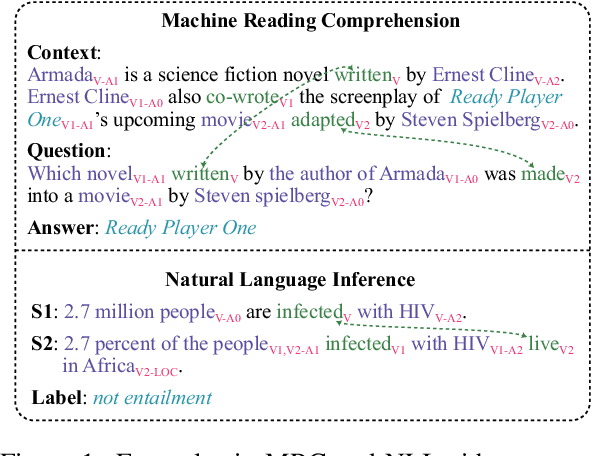

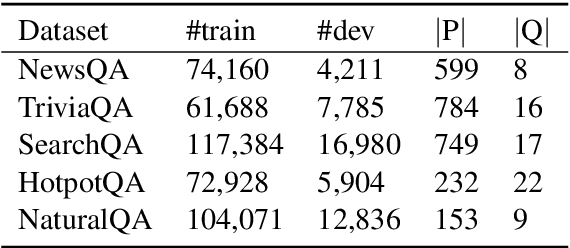

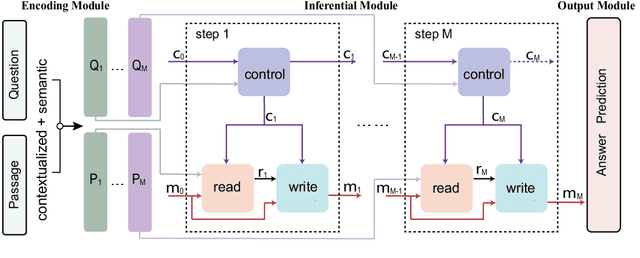

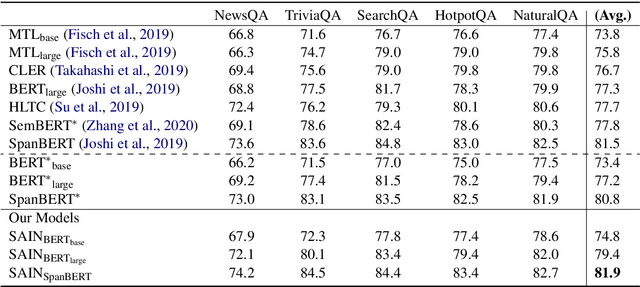

Abstract: For natural language understanding tasks, whether machine reading comprehension or natural language inference, both semantic awareness and inference are desirable features of a model. We therefore propose a Semantics-Aware Inferential Network (SAIN). Taking explicit contextualized semantics as a complementary input, the inferential module of SAIN enables a series of reasoning steps over semantic clues through an attention mechanism. By chaining these steps, the inferential network learns to perform iterative reasoning that incorporates both explicit semantics and contextualized representations. With well pre-trained language models as the front-end encoder, our model achieves significant improvement on 11 tasks including machine reading comprehension and natural language inference.

Semantics-aware BERT for Language Understanding

Sep 05, 2019

Abstract: The latest work on language representations carefully integrates contextualized features into language model training, which has enabled a series of successes, especially in machine reading comprehension and natural language inference tasks. However, existing language representation models, including ELMo, GPT and BERT, only exploit plain context-sensitive features such as character or word embeddings. They rarely consider incorporating structured semantic information, which can provide rich semantics for language representation. To promote natural language understanding, we propose to incorporate explicit contextual semantics from pre-trained semantic role labeling, and introduce an improved language representation model, Semantics-aware BERT (SemBERT), which is capable of explicitly absorbing contextual semantics over a BERT backbone. SemBERT keeps the convenient usability of its BERT precursor through light fine-tuning, without substantial task-specific modifications. Compared with BERT, semantics-aware BERT is as simple in concept but more powerful. It obtains new state-of-the-art results or substantially improves results on ten reading comprehension and language inference tasks.

DCMN+: Dual Co-Matching Network for Multi-choice Reading Comprehension

Aug 30, 2019

Abstract: Multi-choice reading comprehension is a challenging task in which an answer must be selected from a set of candidate options, given a passage and a question. Previous approaches usually calculate only a question-aware passage representation and ignore the passage-aware question representation when modeling the relationship between passage and question, and so cannot make the best use of the information shared between them. In this work, we propose the dual co-matching network (DCMN), which models the relationship among passage, question and answer options bidirectionally. In addition, inspired by how humans solve multi-choice questions, we integrate two reading strategies into our model: (i) passage sentence selection, which finds the most salient supporting sentences for answering the question, and (ii) answer option interaction, which encodes comparison information between answer options. DCMN integrated with the two strategies (DCMN+) obtains state-of-the-art results on five multi-choice reading comprehension datasets from different domains: RACE, SemEval-2018 Task 11, ROCStories, COIN, and MCTest.

Concurrent Parsing of Constituency and Dependency

Aug 18, 2019

Abstract: Constituent and dependency representations of syntactic structure share many linguistic and computational characteristics. This paper therefore makes the first attempt to introduce a model capable of parsing constituents and dependencies at the same time, so that the two parsers can enhance each other. In particular, we evaluate the effect of different shared network components and empirically verify that dependency parsing may benefit much more from constituent parsing structure. The proposed parser achieves new state-of-the-art performance on both parsing tasks, constituent and dependency, on the PTB and CTB benchmarks.

Dual Co-Matching Network for Multi-choice Reading Comprehension

Jan 27, 2019

Abstract: Multi-choice reading comprehension is a challenging task that requires a complex reasoning procedure. Given a passage and a question, a correct answer needs to be selected from a set of candidate answers. In this paper, we propose the Dual Co-Matching Network (DCMN), which models the relationship among passage, question and answer bidirectionally. Unlike existing approaches, which calculate only a question-aware or option-aware passage representation, we calculate the passage-aware question representation and the passage-aware answer representation at the same time. To demonstrate the effectiveness of our model, we evaluate it on a large-scale multiple-choice machine reading comprehension dataset (i.e., RACE). Experimental results show that our proposed model achieves new state-of-the-art results.
