Shiyi Lin

Target-Prompt Online Graph Collaborative Learning for Temporal QoS Prediction

Aug 20, 2024

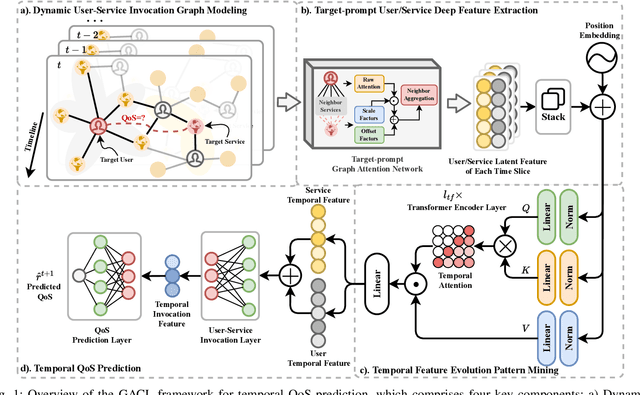

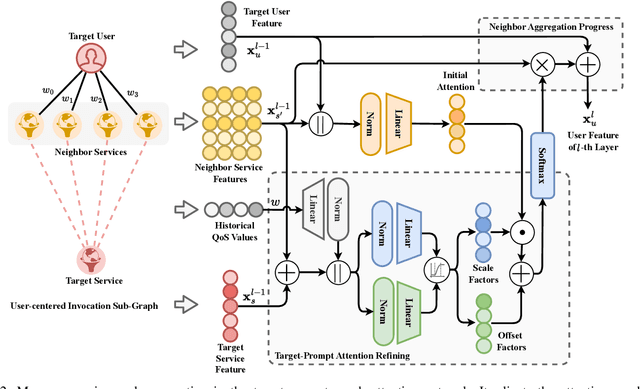

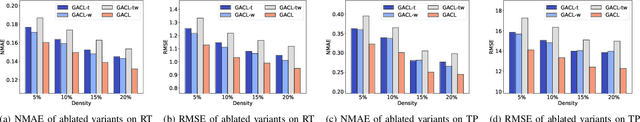

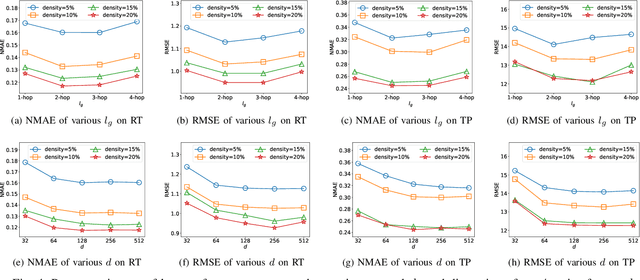

Abstract: In service-oriented architecture, accurately predicting the Quality of Service (QoS) is vital for maintaining reliability and enhancing user satisfaction. However, current methods often neglect high-order latent collaborative relationships and fail to dynamically adjust feature learning for specific user-service invocations, both of which are critical for precise feature extraction. Moreover, relying on RNNs to capture QoS evolution limits the ability to detect long-term trends, because RNNs have difficulty managing long-range dependencies. To address these issues, we propose the Target-Prompt Online Graph Collaborative Learning (TOGCL) framework for temporal QoS prediction. It leverages a dynamic user-service invocation graph to comprehensively model historical interactions. Building on this graph, it develops a target-prompt graph attention network to extract online deep latent features of users and services at each time slice, considering implicit target-neighboring collaborative relationships and historical QoS values. Additionally, a multi-layer Transformer encoder is employed to uncover temporal feature evolution patterns, enhancing temporal QoS prediction. Extensive experiments on the WS-DREAM dataset demonstrate that TOGCL significantly outperforms state-of-the-art methods across multiple metrics, achieving improvements of up to 38.80%. These results underscore the effectiveness of TOGCL for temporal QoS prediction.
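
The abstract's point about long-range dependencies can be illustrated with the attention operation at the core of a Transformer encoder: every time slice is compared with every other slice directly, rather than through a chain of recurrent steps. The following is a minimal sketch, not the TOGCL implementation. It assumes a single head with no learned projections (queries, keys, and values are all the raw inputs), and the function names and feature values are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(slices):
    """Single-head scaled dot-product self-attention without learned projections.

    slices: list of T feature vectors (one per time slice), each of length d.
    Returns (outputs, weights); weights[t][s] is how much slice t attends to slice s.
    """
    d = len(slices[0])
    outputs, weights = [], []
    for query in slices:
        # Compare this slice with every slice, however far apart in time.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
                  for key in slices]
        row = softmax(scores)
        weights.append(row)
        # Each output is the attention-weighted average of all slice vectors.
        outputs.append([sum(w * value[i] for w, value in zip(row, slices))
                        for i in range(d)])
    return outputs, weights

# Hypothetical user-service features at four time slices (made-up numbers).
features = [[0.9, 0.1], [0.2, 0.8], [0.3, 0.7], [0.8, 0.2]]
outputs, weights = self_attention(features)
```

In this toy input, the first slice resembles the last slice more than its immediate neighbor, so it assigns the last slice a larger attention weight despite the distance in time. A real encoder would add learned query, key, and value projections, multiple heads, positional information, and feed-forward layers.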

Recurrent Transformer for Dynamic Graph Representation Learning with Edge Temporal States

Apr 20, 2023

Abstract: Dynamic graph representation learning is a growing yet challenging research area, owing to the widespread demand for graph data analysis in real-world applications. Despite the encouraging performance of many recent works that build upon recurrent neural networks (RNNs) and graph neural networks (GNNs), they fail to explicitly model the impact of edge temporal states on node features over time slices. Additionally, they struggle to extract global structural features because of the inherent over-smoothing of GNNs, which further restricts performance. In this paper, we propose a recurrent difference graph transformer (RDGT) framework, which first assigns types and weights to the edges in each snapshot to represent their temporal states explicitly, and then employs a structure-reinforced graph transformer to capture temporal node representations through a recurrent learning paradigm. Experimental results on four real-world datasets demonstrate the superiority of RDGT for discrete dynamic graph representation learning, as it consistently outperforms competing methods in dynamic link prediction tasks.
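
To make the idea of an explicit edge temporal state concrete, one simple possibility is to compare consecutive snapshots and label each edge by how it changed. The abstract does not specify how RDGT defines its edge types or weights, so the sketch below is purely illustrative: the labels `emerging`, `persisting`, and `disappearing`, and the function `edge_states`, are assumptions and not the paper's scheme.

```python
def edge_states(prev_edges, curr_edges):
    """Label edges by differencing two consecutive graph snapshots.

    prev_edges, curr_edges: iterables of (source, target) tuples.
    Returns a dict mapping each edge to a hypothetical temporal state label.
    """
    prev, curr = set(prev_edges), set(curr_edges)
    states = {}
    for edge in curr - prev:
        states[edge] = "emerging"      # present now, absent before
    for edge in curr & prev:
        states[edge] = "persisting"    # present in both snapshots
    for edge in prev - curr:
        states[edge] = "disappearing"  # present before, absent now
    return states

# Two made-up snapshots of a small directed graph.
snapshot_t0 = [("a", "b"), ("b", "c")]
snapshot_t1 = [("b", "c"), ("c", "d")]
states = edge_states(snapshot_t0, snapshot_t1)
```

Labels like these could then be turned into edge types or weights that a graph model consumes alongside node features.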
