Shinya Suzuki

Multi-Objective Bayesian Optimization with Active Preference Learning

Nov 22, 2023

Abstract: Many real-world black-box optimization problems require optimizing multiple criteria simultaneously. However, in a multi-objective optimization (MOO) problem, identifying the whole Pareto front incurs a prohibitive search cost, while in many practical scenarios the decision maker (DM) only needs a specific solution from the set of Pareto-optimal solutions. We propose a Bayesian optimization (BO) approach to identifying the most preferred solution in MOO with expensive objective functions, in which a Bayesian preference model of the DM is adaptively estimated in an interactive manner from two types of supervision, called the pairwise preference and the improvement request. To explore the most preferred solution, we define an acquisition function that incorporates the uncertainty in both the objective functions and the DM's preference. Further, to minimize the cost of interacting with the DM, we also propose an active learning strategy for the preference estimation. We empirically demonstrate the effectiveness of the proposed method on benchmark function optimization and on hyper-parameter optimization problems for machine learning models.
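To make the idea of estimating a DM preference model from pairwise preferences concrete, here is a minimal sketch. It is not the paper's model: the linear utility, the logistic likelihood, the `scale` parameter, the uniform prior over weights, and all function names are assumptions chosen for illustration, and the posterior is approximated by simple importance weighting.

```python
import math
import random


def utility(y, w):
    # Assumed linear utility over objective values (larger is better).
    return sum(wi * yi for wi, yi in zip(w, y))


def preference_likelihood(y_preferred, y_other, w, scale=0.1):
    # Assumed logistic probability that the DM prefers y_preferred under weights w.
    diff = (utility(y_preferred, w) - utility(y_other, w)) / scale
    return 1.0 / (1.0 + math.exp(-diff))


def posterior_mean_weights(comparisons, dim, n_samples=5000, scale=0.1, seed=0):
    # Draw weights uniformly from the simplex (the assumed prior) and
    # weight each draw by the likelihood of the observed comparisons.
    rng = random.Random(seed)
    mean = [0.0] * dim
    total = 0.0
    for _ in range(n_samples):
        raw = [rng.expovariate(1.0) for _ in range(dim)]
        s = sum(raw)
        w = [r / s for r in raw]  # normalized exponentials are uniform on the simplex
        weight = 1.0
        for y_preferred, y_other in comparisons:
            weight *= preference_likelihood(y_preferred, y_other, w, scale)
        total += weight
        mean = [m + weight * wi for m, wi in zip(mean, w)]
    return [m / total for m in mean]


# Hypothetical data: the DM repeatedly prefers the point that is better in objective 1.
comparisons = [((1.0, 0.0), (0.0, 1.0))] * 5
w_hat = posterior_mean_weights(comparisons, dim=2)
```

With these comparisons the estimated weight on the first objective exceeds the weight on the second, which is the kind of information an acquisition function could use to focus the search on the preferred region of the Pareto front.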

Multi-objective Bayesian Optimization using Pareto-frontier Entropy

Jun 01, 2019

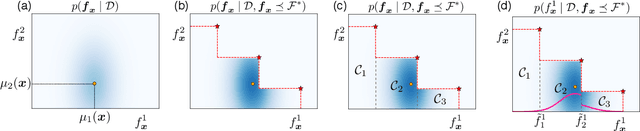

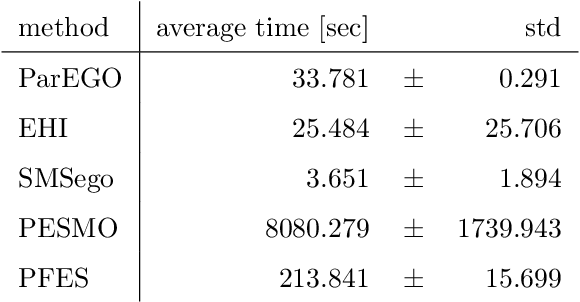

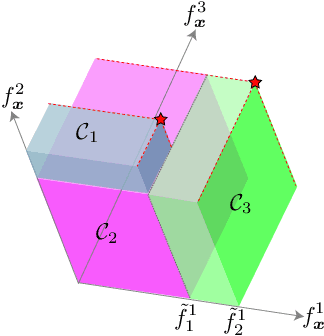

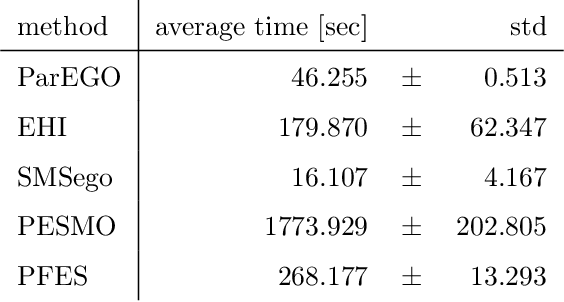

Abstract: We propose Pareto-frontier entropy search (PFES) for multi-objective Bayesian optimization (MBO). Unlike the existing entropy search for MBO, which considers the entropy of the input space, we define the entropy of the Pareto-frontier in the output space. By using a Pareto-frontier sampled from the current model, PFES provides a simple formula for directly evaluating the entropy. Besides the usual MBO setting, in which all the objectives are observed simultaneously, we also consider the "decoupled" setting, in which the objective functions can be observed separately. PFES can easily derive an acquisition function for the decoupled setting through the entropy of the marginal density for each output variable. In both settings, conditioning on the sampled Pareto-frontier induces dependence among the different objectives in the entropy evaluation. PFES can incorporate this dependency into the acquisition function, whereas the existing information-based MBO employs an independent Gaussian approximation. Our numerical experiments show the effectiveness of PFES on synthetic functions and real-world datasets from materials science.
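The abstract above relies on a Pareto-frontier in the output space. As a small illustration of that notion only (not of PFES or its entropy formula), the sketch below extracts the non-dominated set from a list of objective vectors. It assumes maximization in every objective; the function names and the sample points are hypothetical.

```python
def dominates(a, b):
    # a dominates b if a is at least as good in every objective
    # and strictly better in at least one (maximization assumed).
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(points):
    # Keep the points that no other point dominates (O(n^2) comparison).
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical objective vectors, e.g. values drawn from a surrogate model.
samples = [(1, 5), (2, 4), (3, 3), (2, 2), (0, 6), (1, 1)]
front = pareto_front(samples)
```

Here `(2, 2)` and `(1, 1)` are dropped because `(2, 4)` is at least as good in both objectives and strictly better in one; the remaining four points are mutually non-dominated.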
