Shinya Sumikura

Privacy Preserving Visual SLAM

Jul 27, 2020

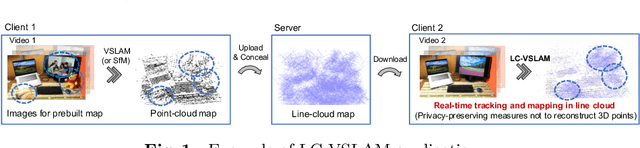

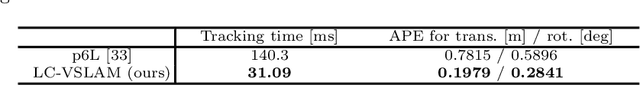

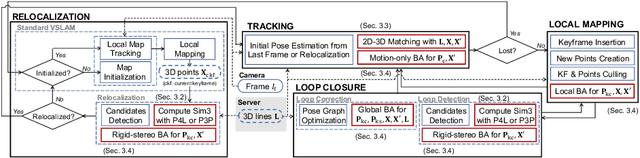

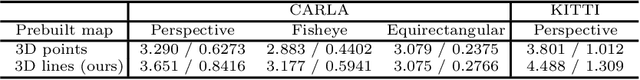

Abstract: This study proposes a privacy-preserving Visual SLAM framework for estimating camera poses and performing bundle adjustment with mixed line and point clouds in real time. Previous studies have proposed localization methods to estimate a camera pose using a line-cloud map for a single image or a reconstructed point cloud. By converting a point cloud to a line cloud, these methods protect scene privacy against inversion attacks, which reconstruct scene images from the point cloud. However, they are not directly applicable to a video sequence because they do not address computational efficiency. This is a critical issue to solve for estimating camera poses and performing bundle adjustment with mixed line and point clouds in real time. Moreover, there has been no study on a method to optimize a server's line-cloud map with a point cloud reconstructed from a client video, because the observation points in image coordinates are not made available in order to prevent inversion attacks, namely the reversibility of the 3D lines. The experimental results with synthetic and real data show that our Visual SLAM framework achieves the intended privacy-preserving formation and real-time performance using a line-cloud map.

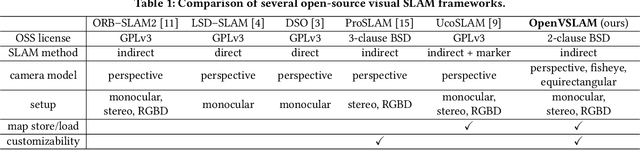

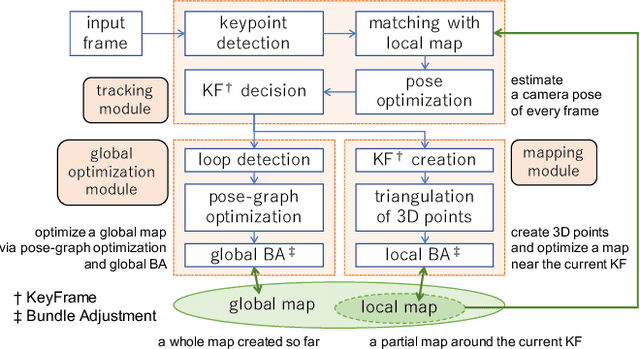

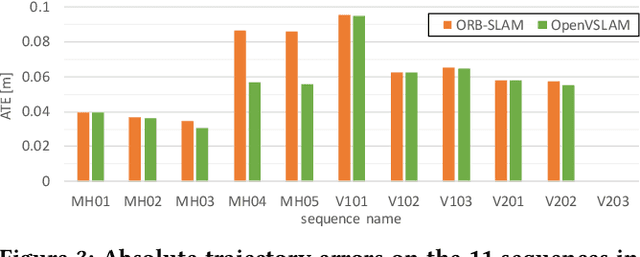

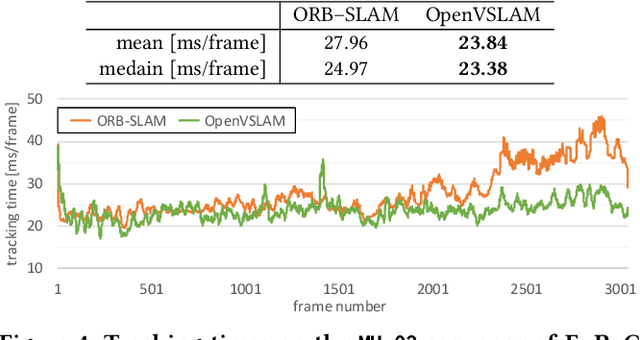

OpenVSLAM: A Versatile Visual SLAM Framework

Oct 10, 2019

Abstract: In this paper, we introduce OpenVSLAM, a visual SLAM framework with high usability and extensibility. Visual SLAM systems are essential for AR devices, autonomous control of robots and drones, etc. However, conventional open-source visual SLAM frameworks are not appropriately designed as libraries called from third-party programs. To overcome this situation, we have developed a novel visual SLAM framework. This software is designed to be easily used and extended. It incorporates several useful features and functions for research and development. OpenVSLAM is released at https://github.com/xdspacelab/openvslam under the 2-clause BSD license.

Scale Estimation of Monocular SfM for a Multi-modal Stereo Camera

Oct 28, 2018

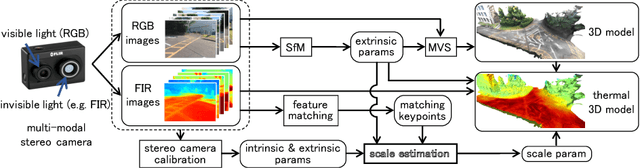

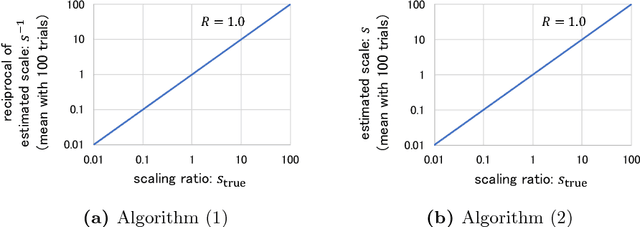

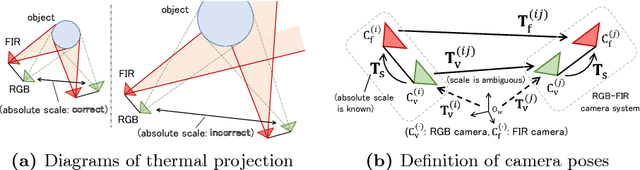

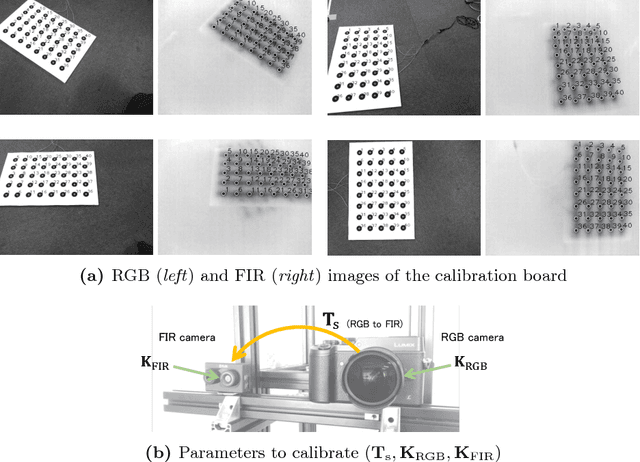

Abstract: This paper proposes a novel method of estimating the absolute scale of monocular SfM for a multi-modal stereo camera. In the fields of computer vision and robotics, scale estimation for monocular SfM has been widely investigated in order to simplify systems. This paper addresses the scale estimation problem for a stereo camera system in which two cameras capture different spectral images (e.g., RGB and FIR), whose feature points are difficult to directly match using descriptors. Furthermore, the number of matching points between FIR images can be comparatively small, owing to the low resolution and lack of thermal scene texture. To cope with these difficulties, the proposed method estimates the scale parameter using batch optimization, based on the epipolar constraint of a small number of feature correspondences between the invisible light images. The accuracy and numerical stability of the proposed method are verified by synthetic and real image experiments.
