Shihua Ma

Sluggish and Chemically-Biased Interstitial Diffusion in Concentrated Solid Solution Alloys: Mechanisms and Methods

Nov 28, 2023

Abstract: Interstitial diffusion is a pivotal process that governs the phase stability and irradiation response of materials under non-equilibrium conditions. In this work, we study sluggish and chemically-biased interstitial diffusion in Fe-Ni concentrated solid solution alloys (CSAs) by combining machine learning (ML) and kinetic Monte Carlo (kMC), where ML is used to predict the migration energy barriers accurately and efficiently on-the-fly. The ML-kMC reproduces the diffusivities reported from molecular dynamics simulations at high temperatures. With this tool, we find that the observed sluggish diffusion and the "Ni-Ni-Ni"-biased diffusion in Fe-Ni alloys are ascribed to a unique "Barrier Lock" mechanism, whereas the "Fe-Fe-Fe"-biased diffusion is influenced by a "Component Dominance" mechanism. Inspired by these mechanisms, we propose a practical AvgS-kMC method that determines interstitial-mediated diffusivity conveniently and swiftly by relying only on the mean energy barriers of the migration patterns. Combining AvgS-kMC with a differential evolution algorithm, we apply an inverse design strategy for optimizing sluggish diffusion properties, which emphasizes the crucial role of favorable migration patterns.
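As a rough illustration of how a kMC step turns migration energy barriers into a chosen jump and a time increment, here is a minimal sketch of the standard residence-time algorithm with Arrhenius rates. It is not the authors' ML-kMC or AvgS-kMC code: the function names, the attempt frequency of 1e13 Hz, and the barrier values in the usage note are all assumptions made for this example. In the paper, the barriers come from an ML model or from mean values per migration pattern.

```python
import math
import random

K_B = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_rates(barriers_ev, temperature_k, attempt_freq_hz=1e13):
    """Convert migration barriers (eV) into jump rates (1/s).

    attempt_freq_hz is an assumed, illustrative prefactor.
    """
    return [attempt_freq_hz * math.exp(-e / (K_B * temperature_k))
            for e in barriers_ev]


def kmc_step(barriers_ev, temperature_k, rng, attempt_freq_hz=1e13):
    """One residence-time kMC step: pick a jump and a time increment."""
    rates = arrhenius_rates(barriers_ev, temperature_k, attempt_freq_hz)
    total = sum(rates)

    # Select an event with probability proportional to its rate.
    threshold = rng.random() * total
    cumulative = 0.0
    chosen = len(rates) - 1  # fallback guards against rounding at the end
    for i, rate in enumerate(rates):
        cumulative += rate
        if threshold < cumulative:
            chosen = i
            break

    # Advance the clock by an exponentially distributed waiting time.
    dt = -math.log(1.0 - rng.random()) / total
    return chosen, dt
```

For example, `kmc_step([0.2, 0.4], 1000.0, random.Random(0))` returns the index of the selected jump and the elapsed time in seconds for two hypothetical barriers of 0.2 eV and 0.4 eV at 1000 K. The lower barrier is selected far more often, which is why a few low-barrier patterns can dominate the trajectory.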

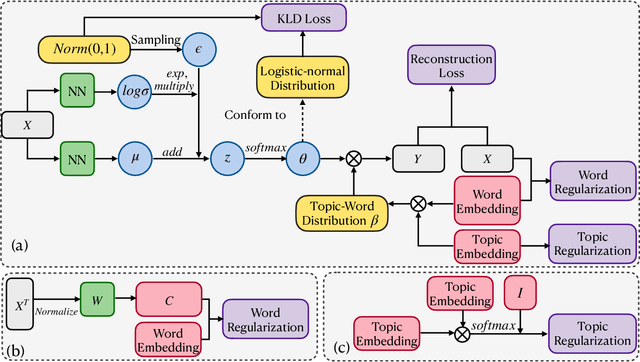

Towards Better Understanding with Uniformity and Explicit Regularization of Embeddings in Embedding-based Neural Topic Models

Jun 16, 2022

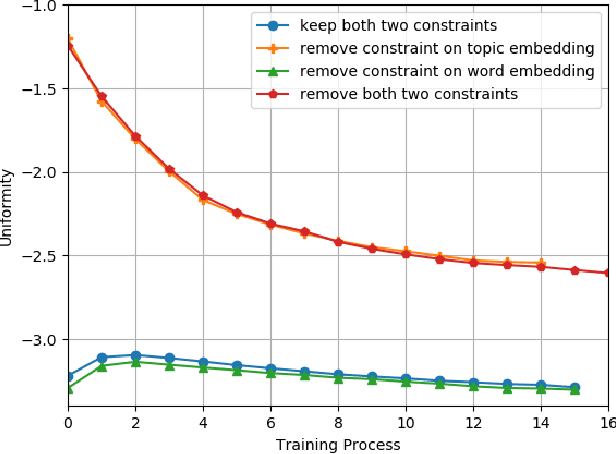

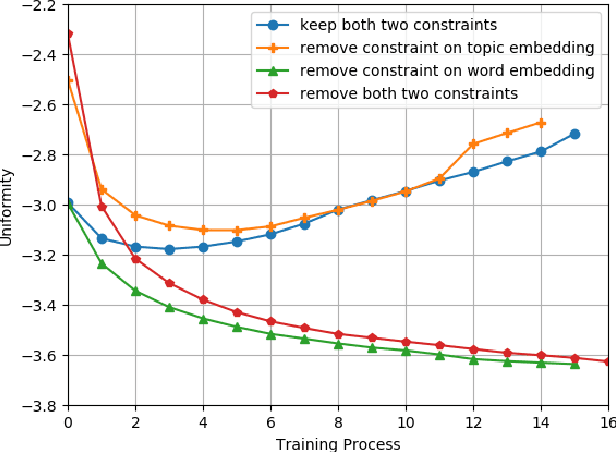

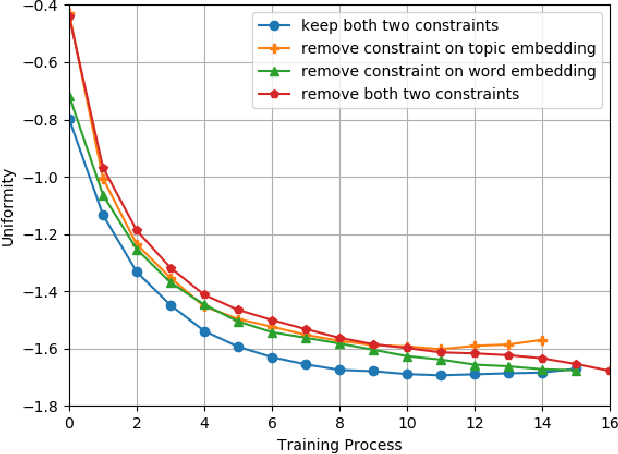

Abstract: Embedding-based neural topic models explicitly represent words and topics by embedding them in a homogeneous feature space, which improves interpretability. However, the training of the embeddings is not explicitly constrained, which leads to a larger optimization space. A clear description of how the embeddings change and how those changes affect model performance is also still lacking. In this paper, we propose an embedding-regularized neural topic model, which applies specially designed training constraints to the word embeddings and topic embeddings to reduce the optimization space of the parameters. To reveal the changes and roles of the embeddings, we introduce uniformity into the embedding-based neural topic model as the evaluation metric of the embedding space. On this basis, we describe how the embeddings tend to change during training via the changes in their uniformity. Furthermore, we demonstrate the impact of changes in the embeddings through ablation studies. Experimental results on two mainstream datasets indicate that our model significantly outperforms baseline models in terms of the harmony between topic quality and document modeling. To the best of our knowledge, this work is the first attempt to use uniformity to explore the changes in embeddings of embedding-based neural topic models and their impact on model performance.
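The abstract does not give a formula for uniformity. The sketch below assumes the definition commonly used in representation learning (Wang and Isola, 2020): the logarithm of the mean Gaussian potential over all pairs of L2-normalized embeddings, with t = 2. The paper's exact variant may differ, and the function names are hypothetical.

```python
import math
from itertools import combinations


def normalize(vector):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(c * c for c in vector))
    return [c / norm for c in vector]


def uniformity(embeddings, t=2.0):
    """Log of the mean pairwise Gaussian potential on the unit sphere.

    Lower (more negative) values mean the embeddings are spread more
    evenly; 0.0 means every embedding points in the same direction.
    """
    unit = [normalize(v) for v in embeddings]
    potentials = [
        math.exp(-t * sum((a - b) ** 2 for a, b in zip(u, w)))
        for u, w in combinations(unit, 2)
    ]
    return math.log(sum(potentials) / len(potentials))
```

Under this assumed definition, tracking `uniformity(word_embeddings)` across training epochs gives a single number that falls as the embeddings spread over the sphere and rises as they collapse toward a common direction.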
