Shibei Meng

OmniDiff: A Comprehensive Benchmark for Fine-grained Image Difference Captioning

Mar 14, 2025

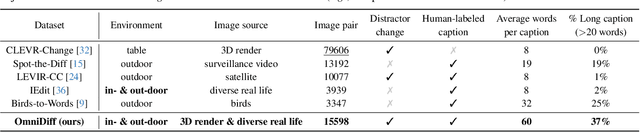

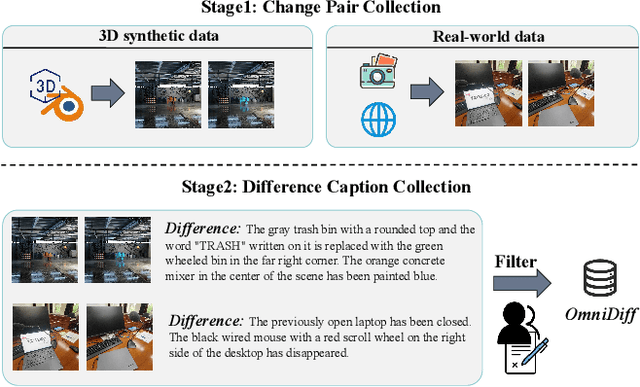

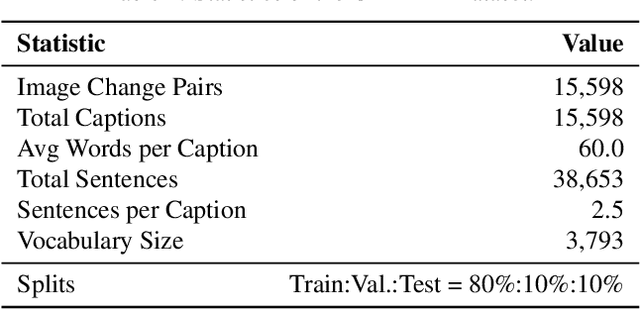

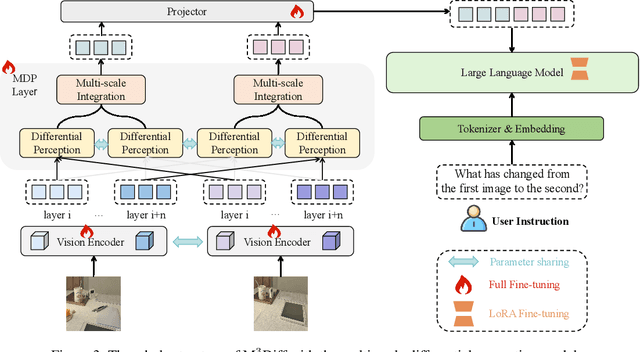

Abstract: Image Difference Captioning (IDC) aims to generate natural language descriptions of subtle differences between image pairs, requiring both precise visual change localization and coherent semantic expression. Despite recent advancements, existing datasets often lack breadth and depth, limiting their applicability in complex and dynamic environments: (1) from a breadth perspective, current datasets are constrained to limited variations of objects in specific scenes, and (2) from a depth perspective, prior benchmarks often provide overly simplistic descriptions. To address these challenges, we introduce OmniDiff, a comprehensive dataset comprising 324 diverse scenarios, spanning real-world complex environments and 3D synthetic settings, with fine-grained human annotations averaging 60 words in length and covering 12 distinct change types. Building on this foundation, we propose M$^3$Diff, a MultiModal large language model enhanced by a plug-and-play Multi-scale Differential Perception (MDP) module. This module improves the model's ability to accurately identify and describe inter-image differences while maintaining the foundational model's generalization capabilities. With the addition of the OmniDiff dataset, M$^3$Diff achieves state-of-the-art performance across multiple benchmarks, including Spot-the-Diff, IEdit, CLEVR-Change, CLEVR-DC, and OmniDiff, demonstrating significant improvements in cross-scenario difference recognition accuracy compared to existing methods. The dataset, code, and models will be made publicly available to support further research.

FastPoseGait: A Toolbox and Benchmark for Efficient Pose-based Gait Recognition

Sep 02, 2023

Abstract: We present FastPoseGait, an open-source toolbox for pose-based gait recognition based on PyTorch. Our toolbox supports a set of cutting-edge pose-based gait recognition algorithms and a variety of related benchmarks. Unlike other pose-based projects that focus on a single algorithm, FastPoseGait integrates several state-of-the-art (SOTA) algorithms under a unified framework, incorporating both the latest advancements and best practices to ease the comparison of effectiveness and efficiency. In addition, to promote future research on pose-based gait recognition, we provide numerous pre-trained models and detailed benchmark results, which offer valuable insights and serve as a reference for further investigations. By leveraging the highly modular structure and diverse methods offered by FastPoseGait, researchers can quickly delve into pose-based gait recognition and promote development in the field. In this paper, we outline various features of this toolbox, with the aim that our toolbox and benchmarks can further foster collaboration, facilitate reproducibility, and encourage the development of innovative algorithms for pose-based gait recognition. FastPoseGait is available at https://github.com//BNU-IVC/FastPoseGait and is actively maintained. We will continue updating this report as we add new features.

GPGait: Generalized Pose-based Gait Recognition

Mar 09, 2023

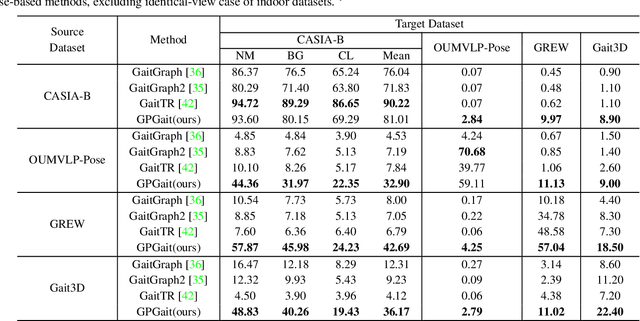

Abstract: Recent works on pose-based gait recognition have demonstrated the potential of using such simple information to achieve results comparable to silhouette-based methods. However, the generalization ability of pose-based methods on different datasets is undesirably inferior to that of silhouette-based ones, which has received little attention but hinders the application of these methods in real-world scenarios. To improve the generalization ability of pose-based methods across datasets, we propose a Generalized Pose-based Gait recognition (GPGait) framework. First, a Human-Oriented Transformation (HOT) and a series of Human-Oriented Descriptors (HOD) are proposed to obtain a unified pose representation with discriminative multi-features. Then, given the slight variations in the unified representation after HOT and HOD, it becomes crucial for the network to extract local-global relationships between the keypoints. To this end, a Part-Aware Graph Convolutional Network (PAGCN) is proposed to enable efficient graph partition and local-global spatial feature extraction. Experiments on four public gait recognition datasets, CASIA-B, OUMVLP-Pose, Gait3D and GREW, show that our model demonstrates better and more stable cross-domain capabilities compared to existing skeleton-based methods, achieving comparable recognition results to silhouette-based ones. The code will be released.
