Shengke Xue

RynnVLA-001: Using Human Demonstrations to Improve Robot Manipulation

Sep 18, 2025

Abstract: This paper presents RynnVLA-001, a vision-language-action (VLA) model built upon large-scale video generative pretraining from human demonstrations. We propose a novel two-stage pretraining methodology. The first stage, Ego-Centric Video Generative Pretraining, trains an Image-to-Video model on 12M ego-centric manipulation videos to predict future frames conditioned on an initial frame and a language instruction. The second stage, Human-Centric Trajectory-Aware Modeling, extends this by jointly predicting future keypoint trajectories, thereby effectively bridging visual frame prediction with action prediction. Furthermore, to enhance action representation, we propose ActionVAE, a variational autoencoder that compresses sequences of actions into compact latent embeddings, reducing the complexity of the VLA output space. When finetuned on the same downstream robotics datasets, RynnVLA-001 achieves superior performance over state-of-the-art baselines, demonstrating that the proposed pretraining strategy provides a more effective initialization for VLA models.

Deep Learning Guided Undersampling Mask Design for MR Image Reconstruction

Mar 08, 2020

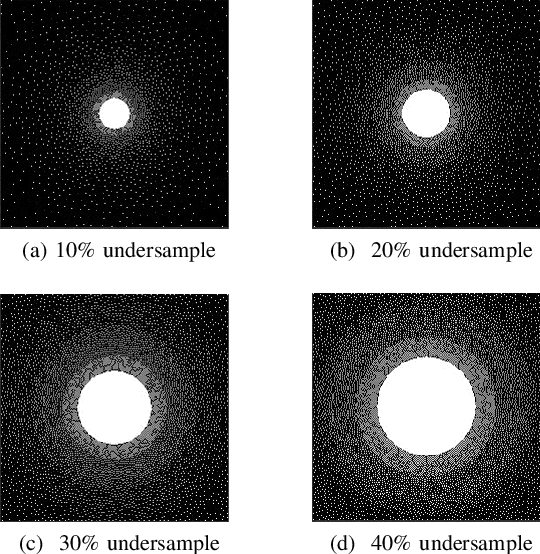

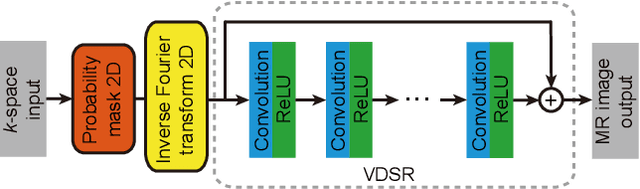

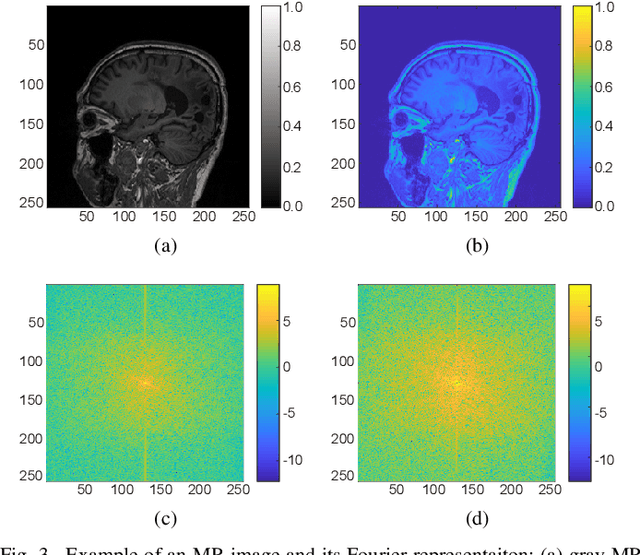

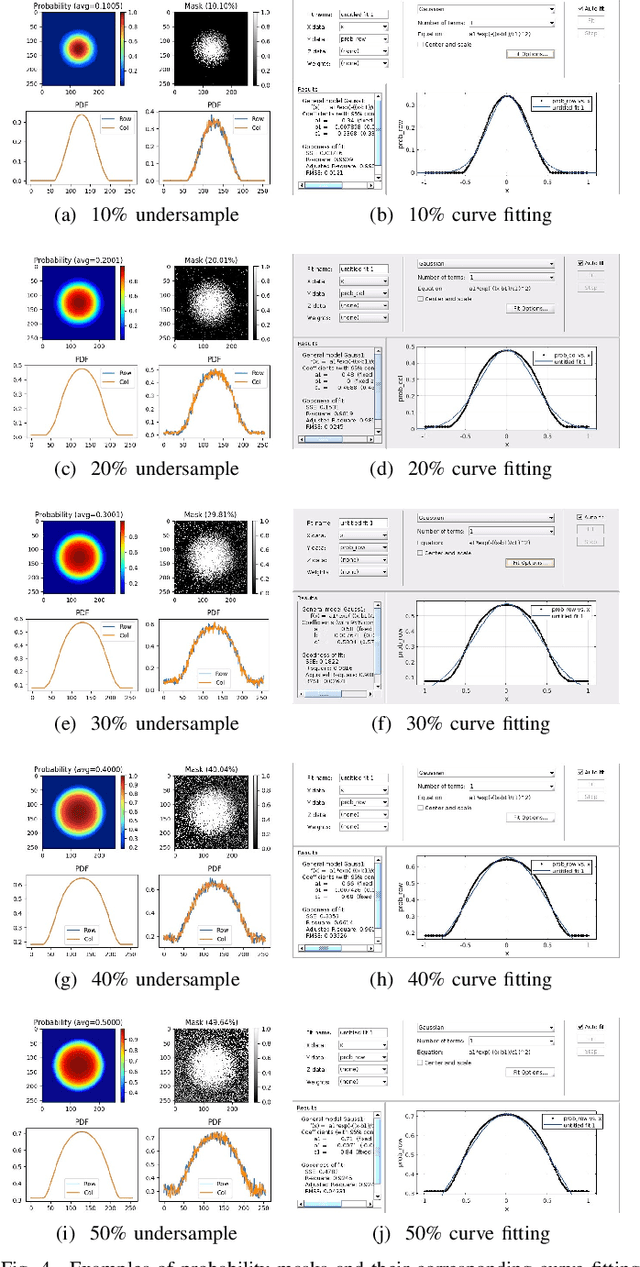

Abstract: In this paper, we propose a cross-domain network that reconstructs undersampled MR images directly from raw k-space data. We design a 2D probability sampling mask layer to simulate the real undersampling operation. A 2D inverse FFT then maps the data from the frequency domain to the spatial domain to reconstruct the MR image. By minimizing the Euclidean loss between the ground-truth image and the output, we train the parameters of the probability mask layer. We find that, under certain undersampling rates, the learned probabilities exhibit special patterns that differ markedly from the common assumption that the mask should be Poisson-like. We show that the probability mask follows Gaussian or quadratic distributions, and argue that these patterns are more accurate and robust than traditional ones. Extensive experiments show that the rules we discovered apply to most cases. This can serve as useful guidance for future MR reconstruction mask designs.
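The sampling-then-inverse-FFT pipeline described in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' network: the mask here is a fixed Gaussian-shaped probability map rather than a trained layer, and the function names, the `sigma` value, and the random stand-in image are all assumptions made for illustration.

```python
import numpy as np

def gaussian_prob_mask(shape, sigma, peak=1.0):
    """Hypothetical Gaussian-shaped sampling probabilities, centered in k-space."""
    h, w = shape
    y = np.arange(h) - h // 2
    x = np.arange(w) - w // 2
    yy, xx = np.meshgrid(y, x, indexing="ij")
    return peak * np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))

def undersample_reconstruct(image, prob_mask, rng):
    """Zero-filled reconstruction from k-space sampled by a Bernoulli mask."""
    # Forward 2D FFT, shifted so low frequencies sit at the array center.
    kspace = np.fft.fftshift(np.fft.fft2(image))
    # Keep each k-space location with the probability given by prob_mask.
    mask = rng.random(image.shape) < prob_mask
    # Inverse 2D FFT of the masked k-space returns to the spatial domain.
    recon = np.fft.ifft2(np.fft.ifftshift(kspace * mask))
    return np.abs(recon), mask

rng = np.random.default_rng(0)
image = rng.random((64, 64))  # stand-in for an MR image (assumption)
prob = gaussian_prob_mask(image.shape, sigma=12.0)
recon, mask = undersample_reconstruct(image, prob, rng)

# Euclidean loss between ground truth and reconstruction, as in the abstract.
euclidean_loss = np.sum((recon - image) ** 2)
sampling_rate = mask.mean()
```

In the paper the probabilities themselves are learned by minimizing this loss; the sketch only shows the forward pass for one fixed choice of probabilities.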

Double Weighted Truncated Nuclear Norm Regularization for Low-Rank Matrix Completion

Jan 07, 2019

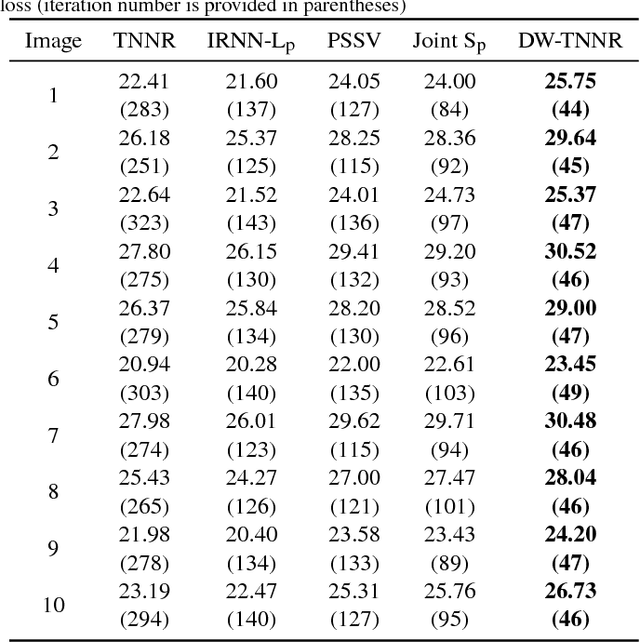

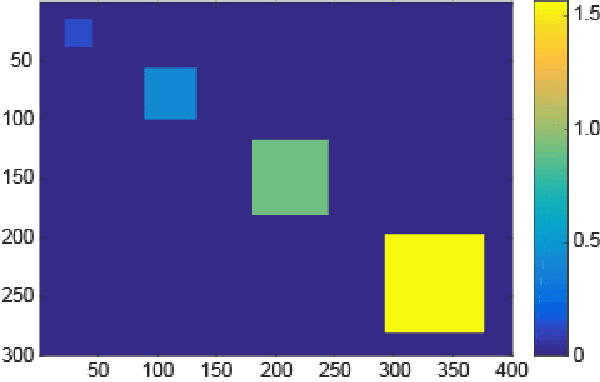

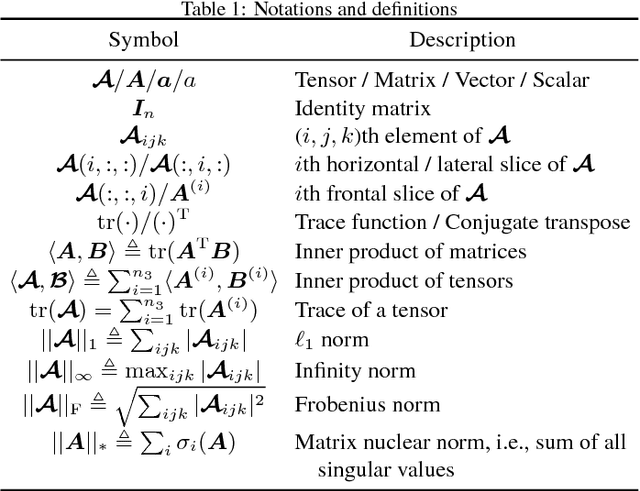

Abstract: Matrix completion focuses on recovering a matrix from a small subset of its observed elements, and has gained increasing attention in computer vision. Many previous approaches formulate this issue as a low-rank matrix approximation problem. Recently, a truncated nuclear norm has been presented as a surrogate for the traditional nuclear norm, giving a better estimate of the rank of a matrix. The truncated nuclear norm regularization (TNNR) method is applicable in real-world scenarios. However, it is sensitive to the selection of the number of truncated singular values and requires numerous iterations to converge. Therefore, this paper proposes a revised approach called the double weighted truncated nuclear norm regularization (DW-TNNR), which assigns different weights to the rows and columns of a matrix separately, to accelerate convergence with acceptable performance. The DW-TNNR is more robust to the number of truncated singular values than the TNNR. Instead of the iterative updating scheme in the second step of TNNR, this paper devises an efficient strategy that uses gradient descent in a concise form, with a theoretical guarantee in optimization. Extensive experiments conducted on real visual data show that DW-TNNR has promising performance and is superior in both speed and accuracy for matrix completion.
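For readers unfamiliar with the quantity being regularized, the sketch below computes a truncated nuclear norm in its usual sense: the sum of the singular values that remain after the `r` largest are dropped. It illustrates only that definition, not the DW-TNNR weighting or optimization scheme; the function name and the test matrix are assumptions.

```python
import numpy as np

def truncated_nuclear_norm(X, r):
    """Sum of the singular values of X, excluding the r largest ones."""
    # np.linalg.svd returns singular values in descending order.
    s = np.linalg.svd(X, compute_uv=False)
    return float(np.sum(s[r:]))

rng = np.random.default_rng(0)
# A rank-2 matrix: only two singular values are (numerically) nonzero.
A = rng.standard_normal((20, 2)) @ rng.standard_normal((2, 15))

tnn_r0 = truncated_nuclear_norm(A, 0)  # equals the ordinary nuclear norm
tnn_r2 = truncated_nuclear_norm(A, 2)  # close to zero for a rank-2 matrix
```

The truncated norm vanishes once `r` reaches the true rank, while the ordinary nuclear norm does not. This is why the choice of `r`, the number of truncated singular values, matters to the method.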

Truncated nuclear norm regularization for low-rank tensor completion

Jan 07, 2019

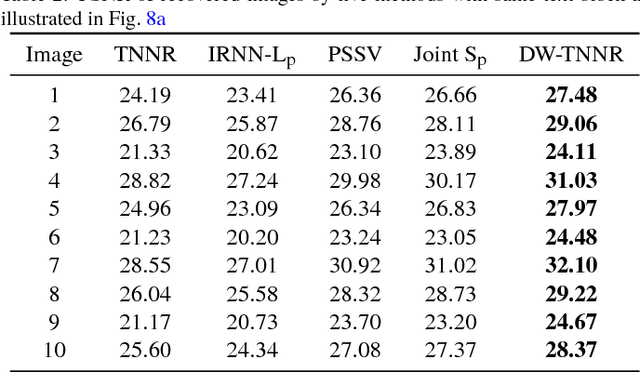

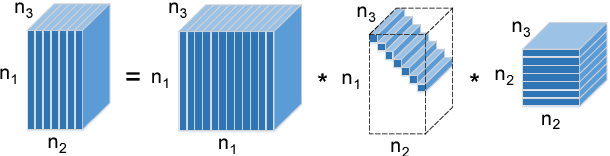

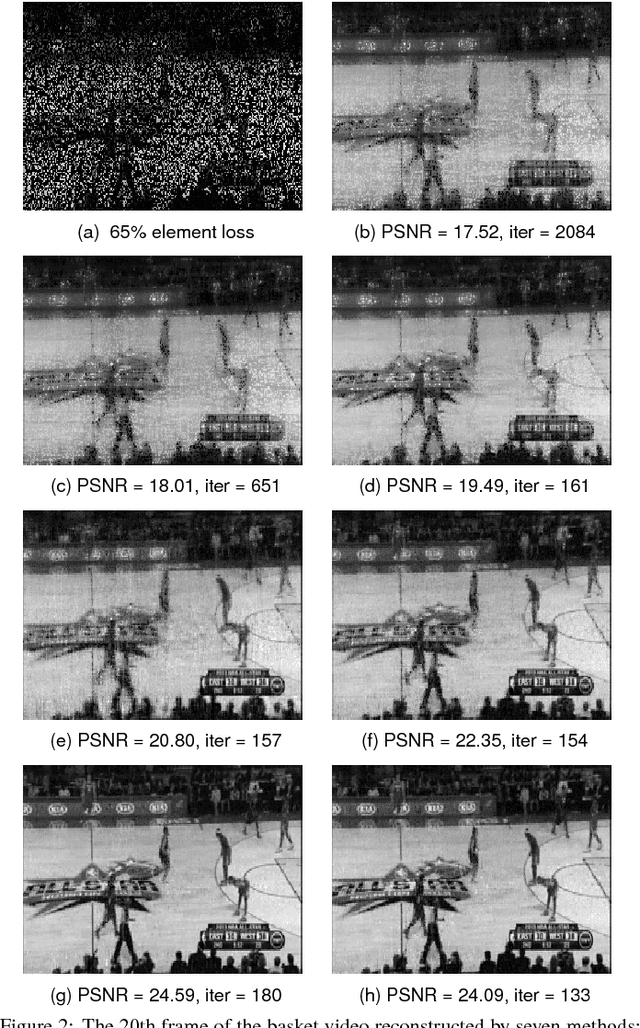

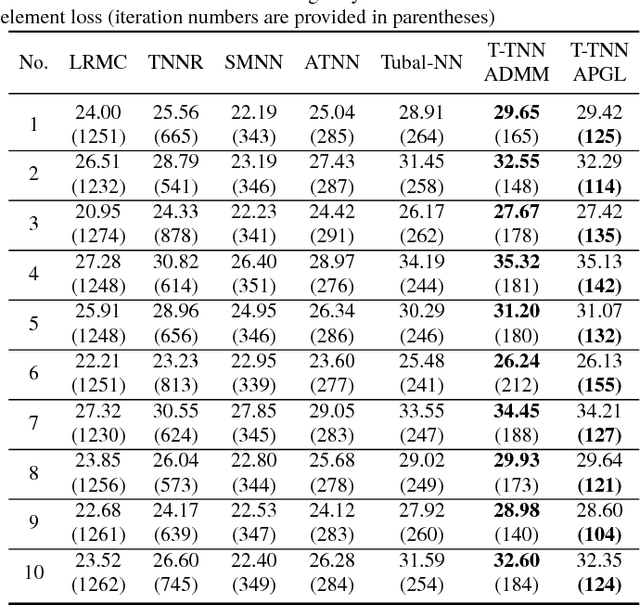

Abstract: Recently, low-rank tensor completion has become increasingly attractive in recovering incomplete visual data. Considering a color image or video as a three-dimensional (3D) tensor, existing studies have put forward several definitions of tensor nuclear norm. However, they are limited and may not accurately approximate the real rank of a tensor, and they do not explicitly use the low-rank property in optimization. It has been proved that the recently proposed truncated nuclear norm (TNN) can replace the traditional nuclear norm, as an improved approximation to the rank of a matrix. In this paper, we propose a new method called the tensor truncated nuclear norm (T-TNN), which suggests a new definition of tensor nuclear norm. The truncated nuclear norm is generalized from the matrix case to the tensor case. By exploiting the low-rank property captured by TNN, our approach improves the efficacy of tensor completion. We adopt the definition of the previously proposed tensor singular value decomposition, the alternating direction method of multipliers, and the accelerated proximal gradient line search method in our algorithm. Substantial experiments on real-world videos and images illustrate that the performance of our approach is better than that of previous methods.
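One way to picture the extension from matrices to tensors is through the tensor singular value decomposition, which works on the frontal slices of a 3D tensor after an FFT along the third axis. The sketch below sums the singular values left in each Fourier-domain slice once the `r` largest are dropped. It is an illustration under stated assumptions, not the paper's exact T-TNN definition: the function names and the `1/n3` normalization are choices made here.

```python
import numpy as np

def fourier_slice_singular_values(T):
    """Singular values of each frontal slice of T after an FFT along axis 2."""
    T_hat = np.fft.fft(T, axis=2)
    return np.stack([
        np.linalg.svd(T_hat[:, :, k], compute_uv=False)
        for k in range(T.shape[2])
    ])

def tensor_truncated_nuclear_norm(T, r):
    """Per-slice truncated sum of singular values, averaged over slices.

    The 1/n3 normalization is an assumption; definitions in the literature vary.
    """
    s = fourier_slice_singular_values(T)
    return float(np.sum(s[:, r:]) / T.shape[2])

rng = np.random.default_rng(0)
# Every frontal slice shares the same 2-dimensional column space, so each
# Fourier-domain slice also has rank at most 2.
A = rng.standard_normal((20, 2))
T = np.stack([A @ rng.standard_normal((2, 15)) for _ in range(5)], axis=2)

sv = fourier_slice_singular_values(T)
ttnn_r2 = tensor_truncated_nuclear_norm(T, 2)  # close to zero
```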

Low-Rank Tensor Completion by Truncated Nuclear Norm Regularization

May 28, 2018

Abstract: Low-rank tensor completion has gained increasing attention for recovering incomplete visual data in which some elements are missing. By taking a color image or video as a three-dimensional (3D) tensor, previous studies have suggested several definitions of tensor nuclear norm. However, they have limitations and may not properly approximate the real rank of a tensor. Besides, they do not explicitly use the low-rank property in optimization. It has been proved that the recently proposed truncated nuclear norm (TNN) can replace the traditional nuclear norm, as a better estimate of the rank of a matrix. Thus, this paper presents a new method called the tensor truncated nuclear norm (T-TNN), which proposes a new definition of tensor nuclear norm and extends the truncated nuclear norm from the matrix case to the tensor case. Benefiting from the low-rank property captured by TNN, our approach improves the efficacy of tensor completion. We exploit the previously proposed tensor singular value decomposition and the alternating direction method of multipliers in optimization. Extensive experiments on real-world videos and images demonstrate that the performance of our approach is superior to that of existing methods.
