Sheethal Bhat

Enhancing zero-shot learning in medical imaging: integrating clip with advanced techniques for improved chest x-ray analysis

Mar 17, 2025

Abstract: Due to the large volume of medical imaging data, advanced AI methodologies are needed to assist radiologists in diagnosing thoracic diseases from chest X-rays (CXRs). Existing deep learning models often require large, labeled datasets, which are scarce in medical imaging because annotation is time-consuming and must be done by experts. In this paper, we extend the existing approach to zero-shot learning in medical imaging by integrating Contrastive Language-Image Pre-training (CLIP) with Momentum Contrast (MoCo), resulting in our proposed model, MoCoCLIP. Our method addresses challenges posed by class-imbalanced and unlabeled datasets, enabling improved detection of pulmonary pathologies. Experimental results on the NIH ChestXray14 dataset demonstrate that MoCoCLIP outperforms the state-of-the-art CheXZero model, achieving a relative improvement of approximately 6.5%. Furthermore, on the CheXpert dataset, MoCoCLIP demonstrates superior zero-shot performance, achieving an average AUC of 0.750 compared with 0.746 for CheXZero, highlighting its enhanced generalization capabilities on unseen data.
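As a rough illustration of the Momentum Contrast component named in the abstract, the sketch below shows the general MoCo momentum update, in which a key encoder slowly tracks a query encoder as an exponential moving average. The abstract does not describe how MoCoCLIP wires this into CLIP, so the function name `momentum_update`, the dictionary-of-arrays parameter layout, and the momentum value are assumptions made only for this example.

```python
import numpy as np


def momentum_update(query_params, key_params, m=0.999):
    """Return key-encoder parameters after one MoCo-style EMA step.

    Hypothetical helper: parameters are plain dicts of NumPy arrays,
    not the actual MoCoCLIP model weights.
    """
    # key <- m * key + (1 - m) * query, applied to every parameter tensor
    return {
        name: m * key_params[name] + (1.0 - m) * query_params[name]
        for name in key_params
    }


# Toy parameters standing in for encoder weights (illustrative values only).
query = {"w": np.array([1.0, 2.0])}
key = {"w": np.array([0.0, 0.0])}

# A small momentum is used here so the effect is visible in one step.
key = momentum_update(query, key, m=0.9)
```

With a momentum close to 1, the key encoder changes slowly, which is what keeps the contrastive keys consistent across training steps in MoCo.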

Review of Zero-Shot and Few-Shot AI Algorithms in The Medical Domain

Jun 23, 2024

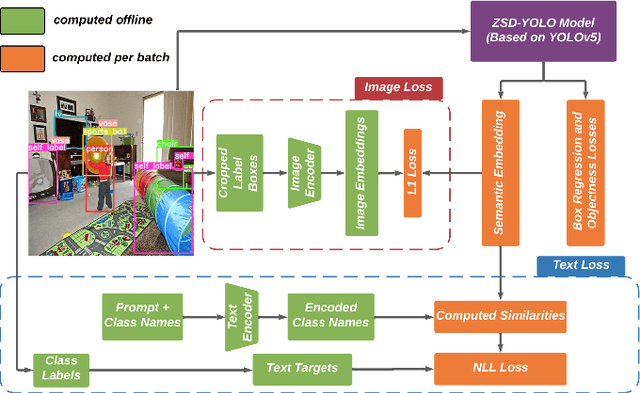

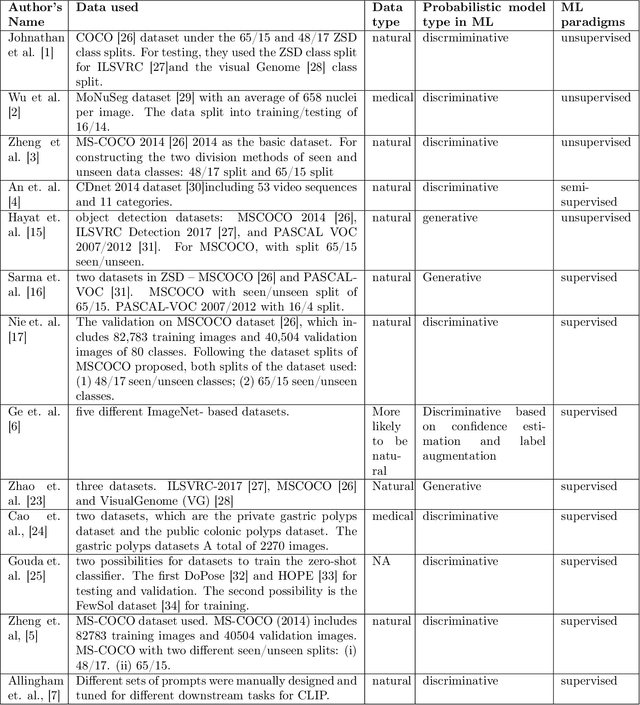

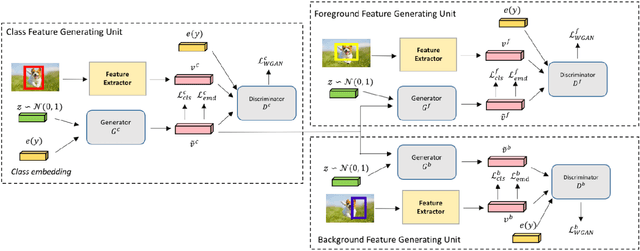

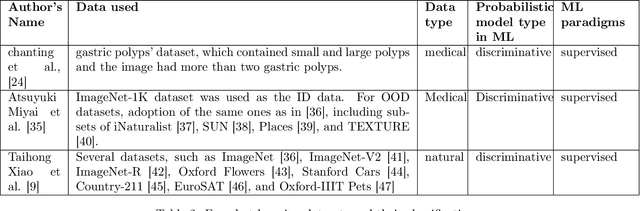

Abstract: In this paper, different techniques for few-shot, zero-shot, and regular object detection are investigated. The need for few-shot and zero-shot learning arises from the limitations of traditional machine learning, deep learning, and computer vision methods, which require large amounts of data and generalize poorly. These techniques can give strong results using only a few training examples, reducing the amount of data required and improving generalization. This survey highlights papers from the last three years that use few-shot and zero-shot learning to address these challenges. We review zero-shot, few-shot, and regular object detection methods and categorize them in an understandable manner. Based on the comparisons made within each category, the approaches were found to be quite impressive. This integrated review of diverse papers on few-shot, zero-shot, and regular object detection reveals a shared focus on advancing the field through novel frameworks and techniques. A noteworthy observation is the scarcity of detailed discussion of the difficulties encountered during development. Contributions include the introduction of innovative models, such as ZSD-YOLO and GTNet, often showing improvements on metrics such as mean average precision (mAP), Recall@100 (RE@100), the area under the receiver operating characteristic curve (AUROC), and precision. These findings underscore a collective move towards leveraging vision-language models for versatile applications, with potential areas for future research including a more thorough exploration of limitations and domain-specific adaptations.

Attention-Guided Erasing: A Novel Augmentation Method for Enhancing Downstream Breast Density Classification

Jan 08, 2024

Abstract: The assessment of breast density is crucial in breast cancer screening, especially in populations with a higher percentage of dense breast tissue. This study introduces a novel data augmentation technique termed Attention-Guided Erasing (AGE), devised to enhance the downstream classification of four distinct breast density categories in mammography, following the BI-RADS recommendation, in a Vietnamese cohort. The proposed method integrates supplementary information during transfer learning, using visual attention maps derived from a vision transformer backbone trained with the self-supervised DINO method. These maps are used to erase background regions in the mammogram images, exposing only the potential areas of dense breast tissue to the network. By incorporating AGE during transfer learning with varying random probabilities, we consistently surpass the classification performance obtained without AGE and with the traditional random erasing transformation. We validate our methodology using the publicly available VinDr-Mammo dataset. Specifically, we attain a mean F1-score of 0.5910, outperforming the values of 0.5594 and 0.5691 obtained without AGE and with random erasing (RE), respectively. This superiority is further substantiated by t-tests, which yield p < 0.0001, underscoring the statistical significance of our approach.
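The erasing step described in the abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `attention_guided_erase`, the quantile-based threshold `keep_quantile`, and the assumption that the attention map has already been resized to the image resolution are all hypothetical choices made for the example.

```python
import numpy as np


def attention_guided_erase(image, attention, p=0.5, keep_quantile=0.5, rng=None):
    """Erase low-attention pixels with probability p (hypothetical sketch).

    image:     2D array of pixel intensities.
    attention: 2D array of the same shape; higher means more attended.
    p:         probability of applying the augmentation to this sample.
    keep_quantile: assumed threshold rule; pixels whose attention falls
                   below this quantile are treated as background.
    """
    if rng is None:
        rng = np.random.default_rng()
    # Apply the augmentation only with probability p.
    if rng.random() >= p:
        return image.copy()
    threshold = np.quantile(attention, keep_quantile)
    keep_mask = attention >= threshold
    # Background (low-attention) pixels are set to zero.
    return np.where(keep_mask, image, 0.0)


# Toy 4x4 example: attention increases from the top-left to the bottom-right.
image = np.ones((4, 4))
attention = np.arange(16, dtype=float).reshape(4, 4)
erased = attention_guided_erase(image, attention, p=1.0, keep_quantile=0.5)
```

In this toy case the top two rows have the lowest attention and are erased, while the bottom two rows are left untouched. In a real pipeline the attention map would come from the DINO-trained vision transformer mentioned in the abstract rather than from a synthetic array.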
