Sharifa Alghowinem

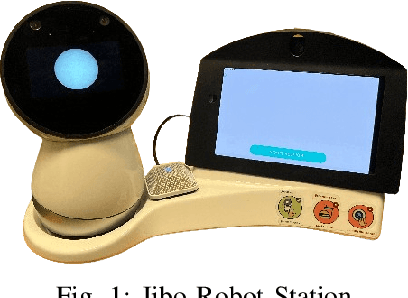

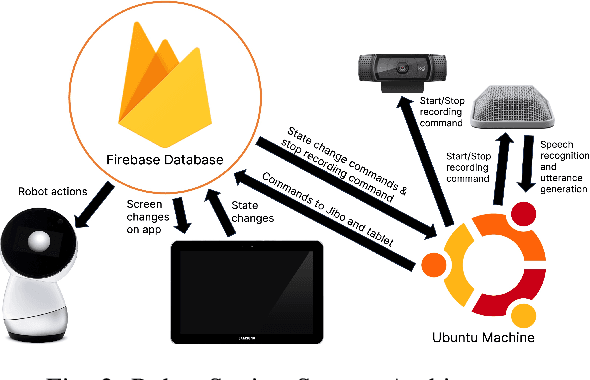

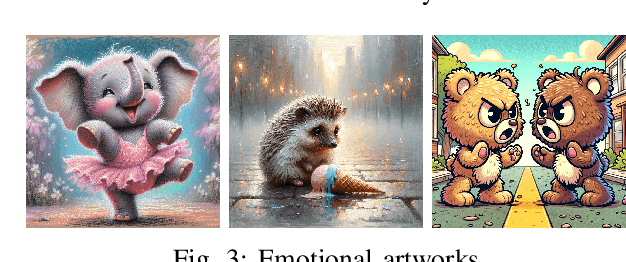

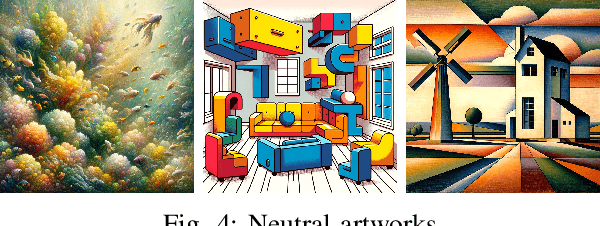

A HeARTfelt Robot: Social Robot-Driven Deep Emotional Art Reflection with Children

Sep 16, 2024

Abstract:Social-emotional learning (SEL) skills are essential for children to develop, as they provide a foundation for future relational and academic success. Using art as a medium for creation or as a topic to provoke conversation is a well-known method of teaching SEL. Similarly, social robots have been used to teach SEL competencies like empathy, but the combination of art and social robotics has been minimally explored. In this paper, we present a novel child-robot interaction designed to foster empathy and promote SEL competencies via a conversation about art scaffolded by a social robot. Participants (N=11, age range: 7-11) conversed with a social robot about emotional and neutral art. Analysis of video and speech data demonstrated that this interaction design successfully engaged children in the practice of SEL skills, like emotion recognition and self-awareness, and greater rates of empathetic reasoning were observed when children engaged with the robot about emotional art. This study demonstrated that art-based reflection with a social robot, particularly on emotional art, can foster empathy in children, and interactions with a social robot help alleviate discomfort when sharing deep or vulnerable emotions.

EmpathicStories++: A Multimodal Dataset for Empathy towards Personal Experiences

May 24, 2024

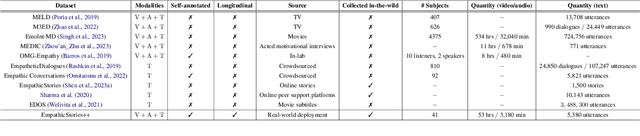

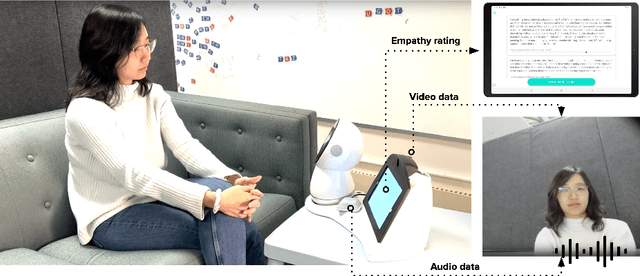

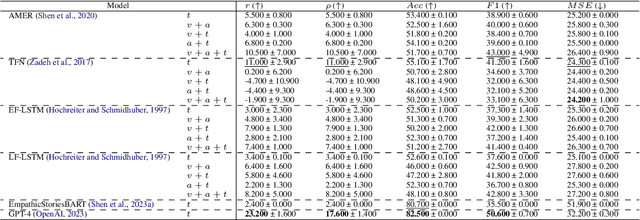

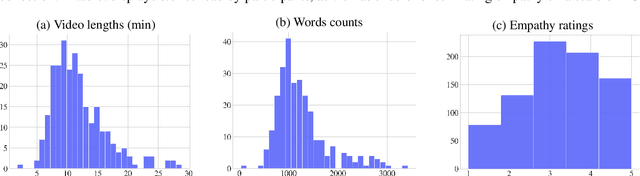

Abstract:Modeling empathy is a complex endeavor that is rooted in interpersonal and experiential dimensions of human interaction, and remains an open problem within AI. Existing empathy datasets fall short in capturing the richness of empathy responses, often being confined to in-lab or acted scenarios, lacking longitudinal data, and missing self-reported labels. We introduce a new multimodal dataset for empathy during personal experience sharing: the EmpathicStories++ dataset (https://mitmedialab.github.io/empathic-stories-multimodal/) containing 53 hours of video, audio, and text data of 41 participants sharing vulnerable experiences and reading empathically resonant stories with an AI agent. EmpathicStories++ is the first longitudinal dataset on empathy, collected over a month-long deployment of social robots in participants' homes, as participants engage in natural, empathic storytelling interactions with AI agents. We then introduce a novel task of predicting individuals' empathy toward others' stories based on their personal experiences, evaluated in two contexts: participants' own personal shared story context and their reflections on stories they read. We benchmark this task using state-of-the-art models to pave the way for future improvements in contextualized and longitudinal empathy modeling. Our work provides a valuable resource for further research in developing empathetic AI systems and understanding the intricacies of human empathy within genuine, real-world settings.

Integrating Flow Theory and Adaptive Robot Roles: A Conceptual Model of Dynamic Robot Role Adaptation for the Enhanced Flow Experience in Long-term Multi-person Human-Robot Interactions

Jan 05, 2024

Abstract:In this paper, we introduce a novel conceptual model for a robot's behavioral adaptation in its long-term interaction with humans, integrating dynamic robot role adaptation with principles of flow experience from psychology. This conceptualization introduces a hierarchical interaction objective grounded in the flow experience, serving as the overarching adaptation goal for the robot. This objective intertwines both cognitive and affective sub-objectives and incorporates individual and group-level human factors. The dynamic role adaptation approach is a cornerstone of our model, highlighting the robot's ability to fluidly adapt its support roles - from leader to follower - with the aim of maintaining equilibrium between activity challenge and user skill, thereby fostering the user's optimal flow experiences. Moreover, this work delves into a comprehensive exploration of the limitations and potential applications of our proposed conceptualization. Our model places a particular emphasis on the multi-person HRI paradigm, a dimension of HRI that is both under-explored and challenging. In doing so, we aspire to extend the applicability and relevance of our conceptualization within the HRI field, contributing to the future development of adaptive social robots capable of sustaining long-term interactions with humans.

Joint Engagement Classification using Video Augmentation Techniques for Multi-person Human-robot Interaction

Dec 28, 2022

Abstract:Affect understanding capability is essential for social robots to autonomously interact with a group of users in an intuitive and reciprocal way. However, the challenge of multi-person affect understanding comes from not only the accurate perception of each user's affective state (e.g., engagement) but also the recognition of the affect interplay between the members (e.g., joint engagement) that presents as complex, but subtle, nonverbal exchanges between them. Here we present a novel hybrid framework for identifying a parent-child dyad's joint engagement by combining a deep learning framework with various video augmentation techniques. Using a dataset of parent-child dyads reading storybooks together with a social robot at home, we first train RGB frame- and skeleton-based joint engagement recognition models on datasets augmented with four video augmentation techniques (General Aug, DeepFake, CutOut, and Mixed) to improve joint engagement classification performance. Second, we demonstrate experimental results on the use of trained models in the robot-parent-child interaction context. Third, we introduce a behavior-based metric for evaluating the learned representation of the models to investigate the model interpretability when recognizing joint engagement. This work serves as the first step toward fully unlocking the potential of end-to-end video understanding models pre-trained on large public datasets and augmented with data augmentation and visualization techniques for affect recognition in multi-person human-robot interaction in the wild.

Build-a-Bot: Teaching Conversational AI Using a Transformer-Based Intent Recognition and Question Answering Architecture

Dec 14, 2022

Abstract:As artificial intelligence (AI) becomes a prominent part of modern life, AI literacy is becoming important for all citizens, not just those in technology careers. Previous research in AI education materials has largely focused on the introduction of terminology as well as AI use cases and ethics, but few allow students to learn by creating their own machine learning models. Therefore, there is a need for enriching AI educational tools with more adaptable and flexible platforms for interested educators with any level of technical experience to utilize within their teaching material. As such, we propose the development of an open-source tool (Build-a-Bot) for students and teachers to not only create their own transformer-based chatbots based on their own course material, but also learn the fundamentals of AI through the model creation process. The primary concern of this paper is the creation of an interface for students to learn the principles of artificial intelligence by using a natural language pipeline to train a customized model to answer questions based on their own school curriculums. The model uses contexts given by their instructor, such as chapters of a textbook, to answer questions and is deployed on an interactive chatbot/voice agent. The pipeline teaches students data collection, data augmentation, intent recognition, and question answering by having them work through each of these processes while creating their AI agent, diverging from previous chatbot work where students and teachers use the bots as black-boxes with no abilities for customization or the bots lack AI capabilities, with the majority of dialogue scripts being rule-based. In addition, our tool is designed to make each step of this pipeline intuitive for students at a middle-school level. Further work primarily lies in providing our tool to schools and seeking student and teacher evaluations.

Explainable AI for Suicide Risk Assessment Using Eye Activities and Head Gestures

Jun 10, 2022

Abstract:The prevalence of suicide has been on the rise since the 20th century, causing severe emotional damage to individuals, families, and communities alike. Despite the severity of this suicide epidemic, there is so far no reliable and systematic way to assess suicide intent of a given individual. Through efforts to automate and systematize diagnosis of mental illnesses over the past few years, verbal and acoustic behaviors have received increasing attention as biomarkers, but little has been done to study eyelids, gaze, and head pose in evaluating suicide risk. This study explores statistical analysis, feature selection, and machine learning classification as means of suicide risk evaluation and nonverbal behavioral interpretation. Applying these methods to the eye and head signals extracted from our unique dataset, this study finds that high-risk suicidal individuals experience psycho-motor retardation and symptoms of anxiety and depression, characterized by eye contact avoidance, slower blinks and a downward eye gaze. By comparing results from different methods of classification, we determined that these features are highly capable of automatically classifying different levels of suicide risk consistently and with high accuracy, above 98%. Our conclusion corroborates psychological studies, and shows great potential of a systematic approach in suicide risk evaluation that is adoptable by both healthcare providers and naive observers.

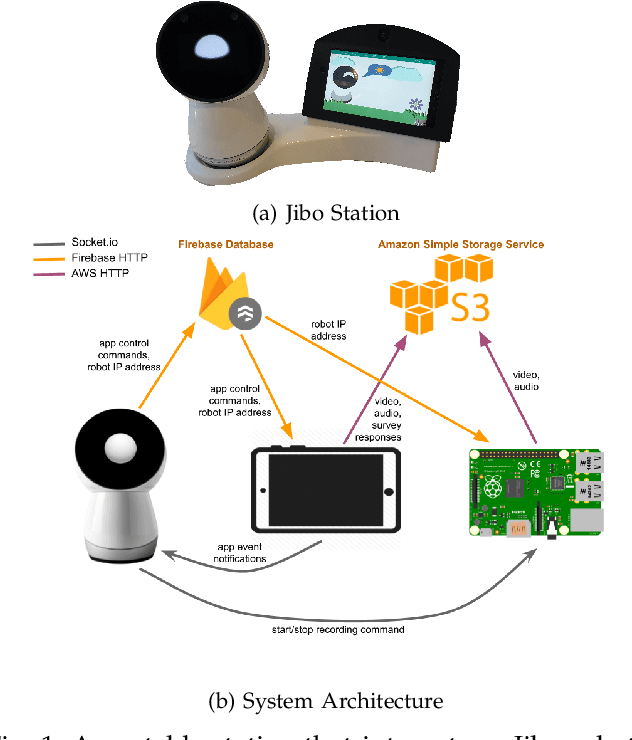

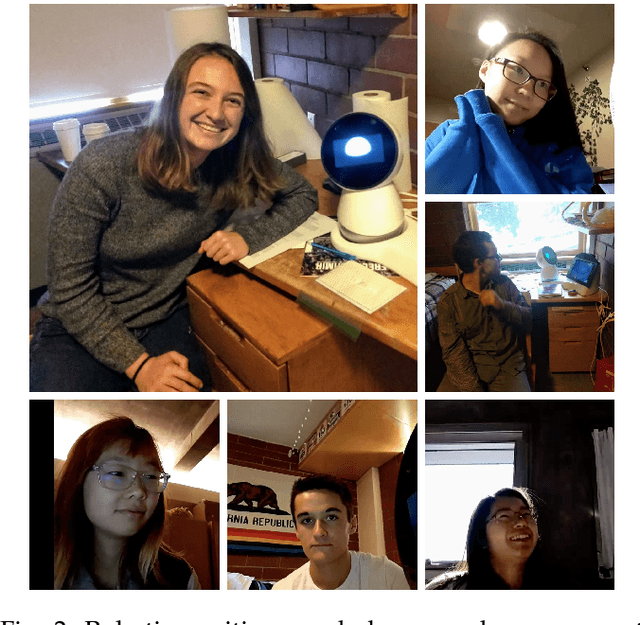

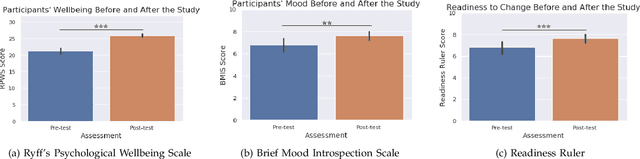

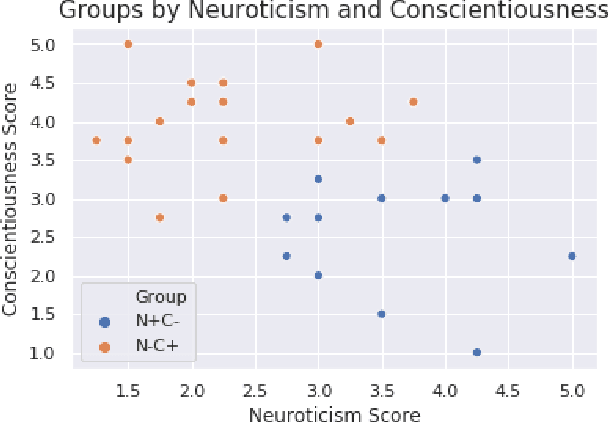

A Robotic Positive Psychology Coach to Improve College Students' Wellbeing

Sep 08, 2020

Abstract:A significant number of college students suffer from mental health issues that impact their physical, social, and occupational outcomes. Various scalable technologies have been proposed in order to mitigate the negative impact of mental health disorders. However, the evaluation for these technologies, if done at all, often reports mixed results on improving users' mental health. We need to better understand the factors that align a user's attributes and needs with technology-based interventions for positive outcomes. In psychotherapy theory, therapeutic alliance and rapport between a therapist and a client is regarded as the basis for therapeutic success. In prior works, social robots have shown the potential to build rapport and a working alliance with users in various settings. In this work, we explore the use of a social robot coach to deliver positive psychology interventions to college students living in on-campus dormitories. We recruited 35 college students to participate in our study and deployed a social robot coach in their room. The robot delivered daily positive psychology sessions among other useful skills like delivering the weather forecast, scheduling reminders, etc. We found a statistically significant improvement in participants' psychological wellbeing, mood, and readiness to change behavior for improved wellbeing after they completed the study. Furthermore, students' personality traits were found to have a significant association with intervention efficacy. Analysis of the post-study interview revealed students' appreciation of the robot's companionship and their concerns for privacy.
