Shakti Singh

Comprehensive Review of Reinforcement Learning for Medical Ultrasound Imaging

Mar 19, 2025

Abstract: Medical Ultrasound (US) imaging has seen increasing demand in recent years, becoming one of the most preferred imaging modalities in clinical practice due to its affordability, portability, and real-time capabilities. However, it faces several challenges that limit its applicability, such as operator dependency, variability in interpretation, and limited resolution, which are amplified by the low availability of trained experts. This calls for autonomous systems that are capable of reducing the dependency on humans for increased efficiency and throughput. Reinforcement Learning (RL) is a rapidly advancing field of Artificial Intelligence (AI) that allows the development of autonomous and intelligent agents capable of executing complex tasks through rewarded interactions with their environments. Existing surveys on advancements in the US scanning domain predominantly focus on partially autonomous solutions leveraging AI for scanning guidance, organ identification, plane recognition, and diagnosis. However, none of these surveys explore the intersection between the stages of the US process and the recent advancements in RL solutions. To bridge this gap, this review proposes a comprehensive taxonomy that integrates the stages of the US process with the RL development pipeline. This taxonomy not only highlights recent RL advancements in the US domain but also identifies unresolved challenges crucial for achieving fully autonomous US systems. This work aims to offer a thorough review of current research efforts, highlighting the potential of RL in building autonomous US solutions while identifying limitations and opportunities for further advancements in this field.

CACTUS: An Open Dataset and Framework for Automated Cardiac Assessment and Classification of Ultrasound Images Using Deep Transfer Learning

Mar 07, 2025

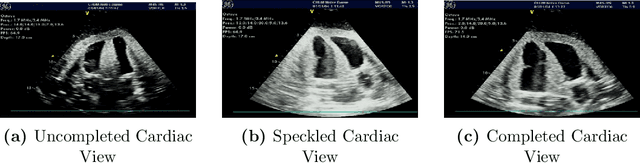

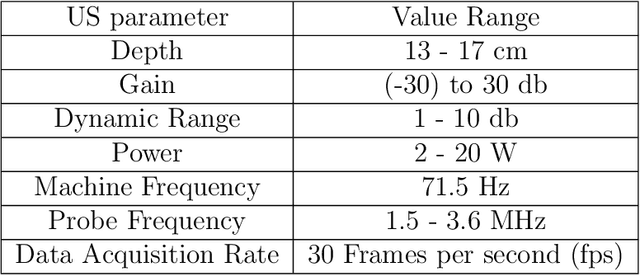

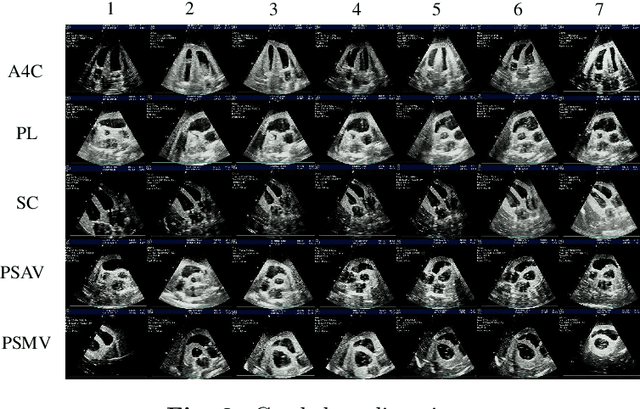

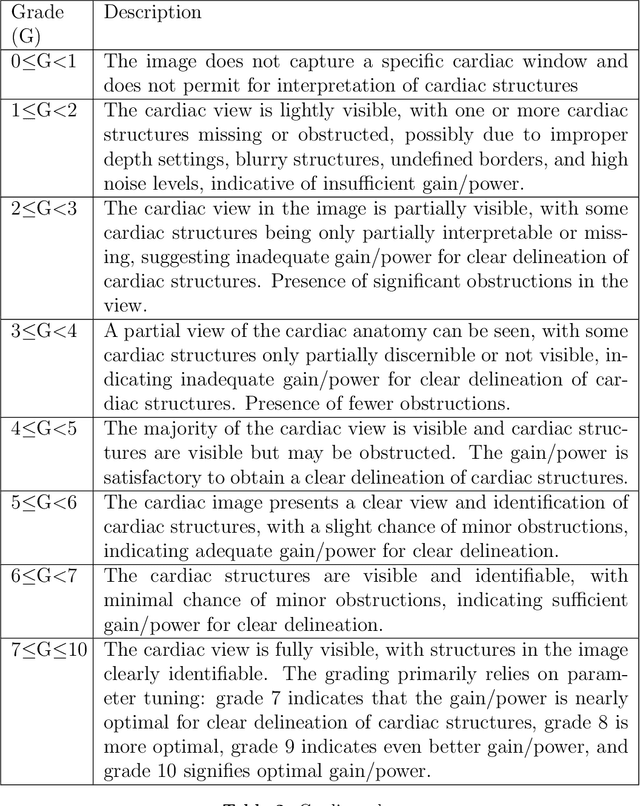

Abstract: Cardiac ultrasound (US) scanning is a commonly used technique in cardiology to diagnose the health of the heart and its proper functioning. Therefore, it is necessary to consider ways to automate these tasks and assist medical professionals in classifying and assessing cardiac US images. Machine learning (ML) techniques are regarded as a prominent solution due to their success in numerous applications aimed at enhancing the medical field, including addressing the shortage of echography technicians. However, the limited availability of medical data presents a significant barrier to applying ML in cardiology, particularly regarding US images of the heart. This paper addresses this challenge by introducing the first open graded dataset for Cardiac Assessment and ClassificaTion of UltraSound (CACTUS), which is available online. This dataset contains images obtained from scanning a CAE Blue Phantom and representing various heart views and different quality levels, exceeding the conventional cardiac views typically found in the literature. Additionally, the paper introduces a Deep Learning (DL) framework consisting of two main components. The first component classifies cardiac US images based on the heart view using a Convolutional Neural Network (CNN). The second component uses Transfer Learning (TL) to fine-tune the knowledge from the first component and create a model for grading and assessing cardiac images. The framework demonstrates high performance in both classification and grading, achieving up to 99.43% accuracy and as low as 0.3067 error, respectively. To showcase its robustness, the framework is further fine-tuned using new images representing additional cardiac views and compared to several other state-of-the-art architectures. The framework's outcomes and performance in handling real-time scans were also assessed using a questionnaire answered by cardiac experts.

Adaptive Target Localization under Uncertainty using Multi-Agent Deep Reinforcement Learning with Knowledge Transfer

Jan 19, 2025

Abstract: Target localization is a critical task in sensitive applications, where multiple sensing agents communicate and collaborate to identify the target location based on sensor readings. Existing approaches investigated the use of Multi-Agent Deep Reinforcement Learning (MADRL) to tackle target localization. Nevertheless, these methods do not consider practical uncertainties, like false alarms when the target does not exist or when it is unreachable due to environmental complexities. To address these drawbacks, this work proposes a novel MADRL-based method for target localization in uncertain environments. The proposed MADRL method employs Proximal Policy Optimization to optimize the decision-making of sensing agents, which is represented in the form of an actor-critic structure using Convolutional Neural Networks. The observations of the agents are designed in an optimized manner to capture essential information in the environment, and a team-based reward function is proposed to produce cooperative agents. The MADRL method covers three action dimensionalities that control the agents' mobility to search the area for the target, detect its existence, and determine its reachability. Using the concept of Transfer Learning, a Deep Learning model builds on the knowledge from the MADRL model to accurately estimate the target location if it is unreachable, resulting in shared representations between the models for faster learning and lower computational complexity. Collectively, the final combined model is capable of searching for the target, determining its existence and reachability, and estimating its location accurately. The proposed method is tested using a radioactive target localization environment and benchmarked against existing methods, showing its efficacy.
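
For readers unfamiliar with Proximal Policy Optimization, the following is a minimal sketch of the standard clipped surrogate objective for a single sample. It is not taken from the paper: the function name `ppo_clipped_objective` and the default `epsilon=0.2` are illustrative assumptions, and the paper's actual networks, observations, and team-based reward are not reproduced here.

```python
def ppo_clipped_objective(ratio, advantage, epsilon=0.2):
    """Standard PPO clipped surrogate for one sample (illustrative only).

    ratio     -- new_policy_prob / old_policy_prob for the action taken
    advantage -- advantage estimate for that action
    epsilon   -- clip range; 0.2 is an assumed default, not the paper's value
    """
    # Keep the probability ratio inside [1 - epsilon, 1 + epsilon].
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    # Take the more pessimistic of the unclipped and clipped terms.
    return min(ratio * advantage, clipped_ratio * advantage)
```

With a positive advantage, the objective stops growing once the ratio exceeds `1 + epsilon`; with a negative advantage, it stops improving once the ratio drops below `1 - epsilon`. In both cases this removes the incentive for large policy updates.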

Blockchain-assisted Demonstration Cloning for Multi-Agent Deep Reinforcement Learning

Jan 19, 2025

Abstract: Multi-Agent Deep Reinforcement Learning (MDRL) is a promising research area in which agents learn complex behaviors in cooperative or competitive environments. However, MDRL comes with several challenges that hinder its usability, including sample efficiency, curse of dimensionality, and environment exploration. Recent works proposing Federated Reinforcement Learning (FRL) to tackle these issues suffer from problems related to model restrictions and maliciousness. Other proposals using reward shaping require considerable engineering and could lead to local optima. In this paper, we propose a novel Blockchain-assisted Multi-Expert Demonstration Cloning (MEDC) framework for MDRL. The proposed method utilizes expert demonstrations in guiding the learning of new MDRL agents, by suggesting exploration actions in the environment. A model sharing framework on Blockchain is designed to allow users to share their trained models, which can be allocated as expert models to requesting users to aid in training MDRL systems. A Consortium Blockchain is adopted to enable traceable and autonomous execution without the need for a single trusted entity. Smart Contracts are designed to manage user and model allocation, and the models are shared using IPFS. The proposed framework is tested on several applications, and is benchmarked against existing methods in FRL, Reward Shaping, and Imitation Learning-assisted RL. The results show that the proposed framework outperforms these methods in terms of learning speed and resiliency to faulty and malicious models.
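
As a rough illustration of how expert models might suggest exploration actions, the sketch below mixes an agent's own policy with actions proposed by pre-trained experts. Everything in it is a hypothetical assumption rather than the MEDC algorithm itself: the function `choose_action`, the mixing probability `p_expert`, and the uniform choice among experts are invented for illustration, and the Blockchain, Smart Contract, and IPFS components are not modeled.

```python
import random


def choose_action(state, agent_policy, expert_policies, p_expert, rng):
    """Pick an action, sometimes deferring to an expert (hypothetical sketch).

    agent_policy    -- callable mapping a state to the learner's action
    expert_policies -- list of callables, each a pre-trained expert model
    p_expert        -- assumed probability of following an expert suggestion
    rng             -- random.Random instance, passed in for reproducibility
    """
    # With probability p_expert, let a randomly chosen expert suggest the action.
    if expert_policies and rng.random() < p_expert:
        expert = rng.choice(expert_policies)
        return expert(state), "expert"
    # Otherwise the learning agent acts on its own policy.
    return agent_policy(state), "agent"


if __name__ == "__main__":
    rng = random.Random(0)
    learner = lambda s: "explore"       # placeholder learner policy
    experts = [lambda s: "move_left"]   # placeholder expert policy
    print(choose_action("s0", learner, experts, p_expert=0.5, rng=rng))
```

One plausible design choice, again an assumption, is to reduce `p_expert` over the course of training so that the learner relies on expert suggestions early on and on its own policy later.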