Seyeon Lee

Common Sense Beyond English: Evaluating and Improving Multilingual Language Models for Commonsense Reasoning

Jun 13, 2021

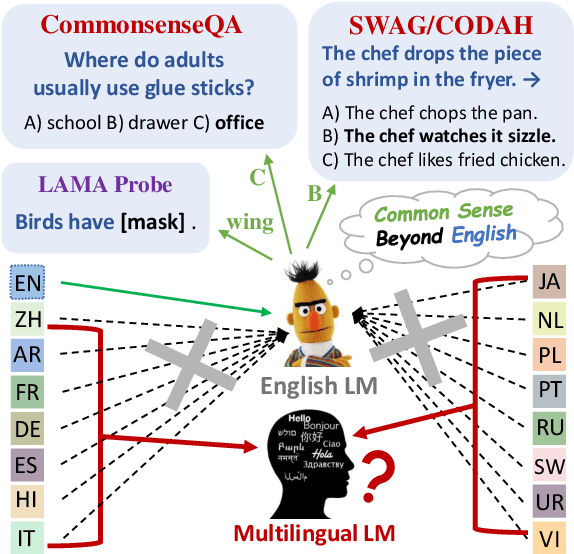

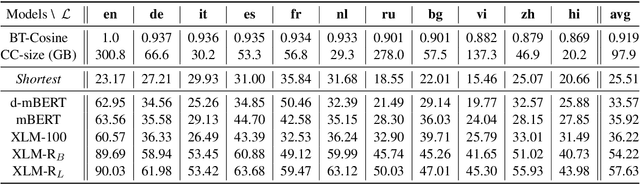

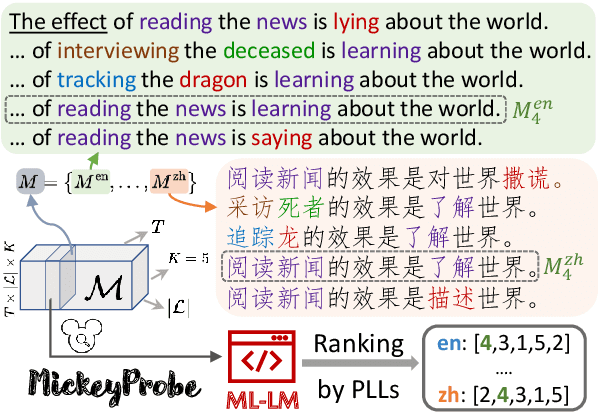

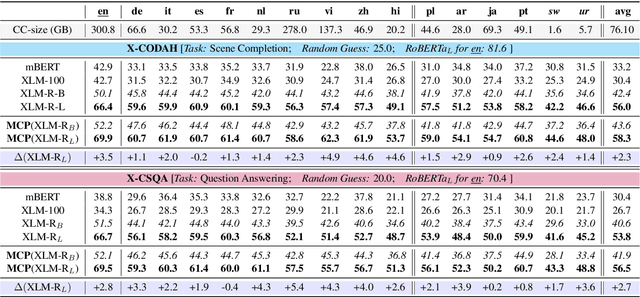

Abstract: Commonsense reasoning research has so far been limited to English. We aim to evaluate and improve popular multilingual language models (ML-LMs) to help advance commonsense reasoning (CSR) beyond English. We collect the Mickey Corpus, consisting of 561k sentences in 11 different languages, which can be used for analyzing and improving ML-LMs. We propose Mickey Probe, a language-agnostic probing task for fairly evaluating the common sense of popular ML-LMs across different languages. In addition, we create two new datasets, X-CSQA and X-CODAH, by translating their English versions into 15 other languages, so that we can evaluate popular ML-LMs for cross-lingual commonsense reasoning. To improve performance beyond English, we propose a simple yet effective method: multilingual contrastive pre-training (MCP). It significantly enhances sentence representations, yielding a large performance gain on both benchmarks.

Birds have four legs?! NumerSense: Probing Numerical Commonsense Knowledge of Pre-trained Language Models

May 02, 2020

Abstract: Recent work shows that pre-trained masked language models, such as BERT, possess certain linguistic and commonsense knowledge. However, it remains to be seen what types of commonsense knowledge these models have access to. In this vein, we propose to study whether numerical commonsense knowledge (commonsense knowledge that provides an understanding of the numeric relation between entities) can be induced from pre-trained masked language models, and to what extent this access to knowledge is robust against adversarial examples. To study this, we introduce a probing task with a diagnostic dataset, NumerSense, containing 3,145 masked-word-prediction probes. Surprisingly, our experiments and analysis reveal that: (1) BERT and its stronger variant RoBERTa perform poorly on our dataset prior to any fine-tuning; (2) fine-tuning with distant supervision does improve performance; (3) the best distantly supervised model still performs poorly when compared to humans (47.8% vs 96.3%).
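
As a rough illustration of what a masked-word-prediction probe looks like, the sketch below scores a predictor on two made-up probes. Everything in it is an assumption for illustration only: the probe sentences, the `NUMBER_WORDS` candidate list, the `top1_accuracy` helper, and the `always_four` stand-in predictor are hypothetical and are not taken from the NumerSense dataset or its evaluation code. A real experiment would replace the stand-in with a masked language model that ranks the candidates for the `[MASK]` slot.

```python
from typing import Callable, List, Tuple

# Hypothetical probe format: (sentence with one [MASK] slot, gold number word).
Probe = Tuple[str, str]

# Assumed candidate vocabulary; the actual dataset may use a different list.
NUMBER_WORDS: List[str] = [
    "no", "zero", "one", "two", "three", "four", "five",
    "six", "seven", "eight", "nine", "ten",
]

Predictor = Callable[[str, List[str]], str]


def top1_accuracy(probes: List[Probe], predict: Predictor) -> float:
    """Fraction of probes where the predictor's top choice equals the gold word."""
    if not probes:
        return 0.0
    hits = sum(1 for sentence, gold in probes
               if predict(sentence, NUMBER_WORDS) == gold)
    return hits / len(probes)


def always_four(sentence: str, candidates: List[str]) -> str:
    """Stand-in predictor that ignores the sentence and always answers 'four'."""
    return "four"


# Two invented probes, not drawn from the dataset.
probes: List[Probe] = [
    ("A bird usually has [MASK] legs.", "two"),
    ("A car usually has [MASK] wheels.", "four"),
]

accuracy = top1_accuracy(probes, always_four)
print(f"top-1 accuracy: {accuracy:.2f}")
```

The stand-in gets the car probe right and the bird probe wrong, which mirrors the kind of error the paper's title alludes to.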

LEAN-LIFE: A Label-Efficient Annotation Framework Towards Learning from Explanation

Apr 16, 2020

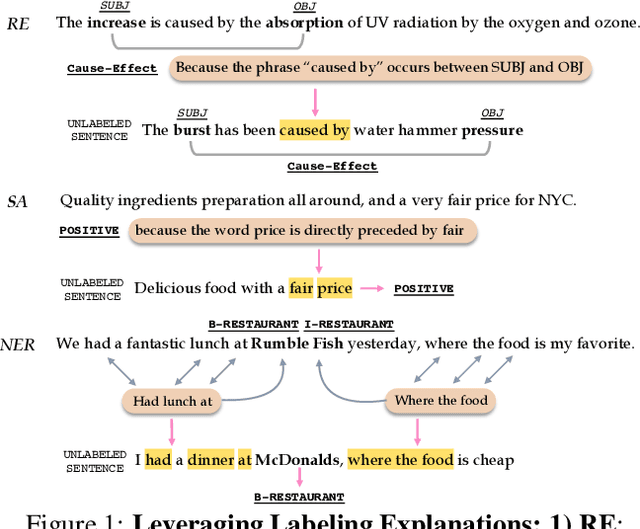

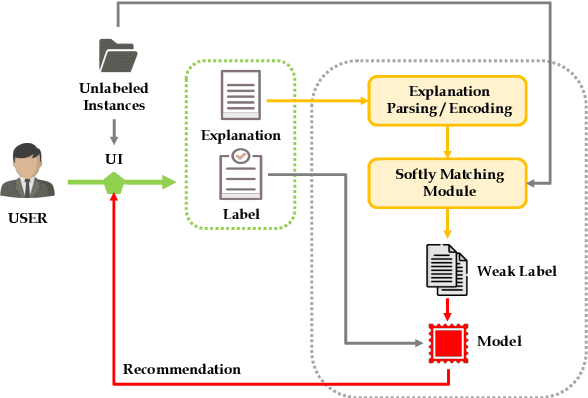

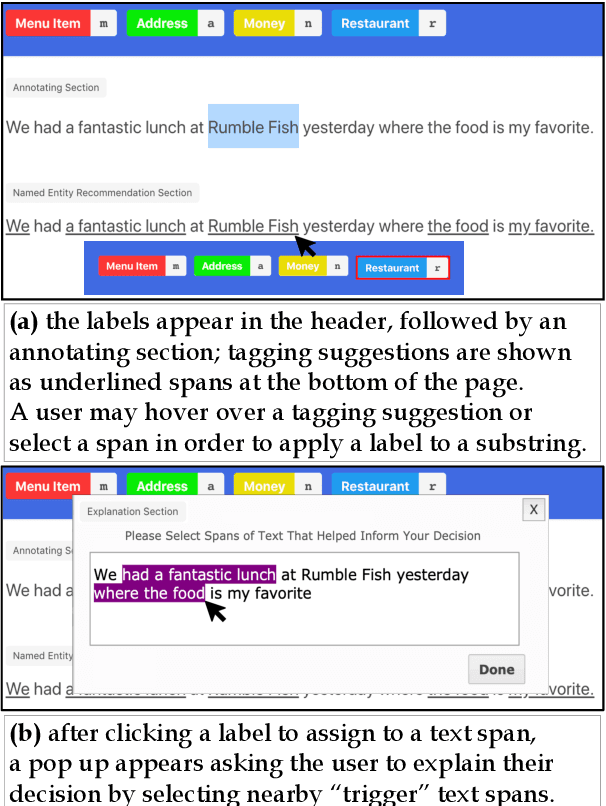

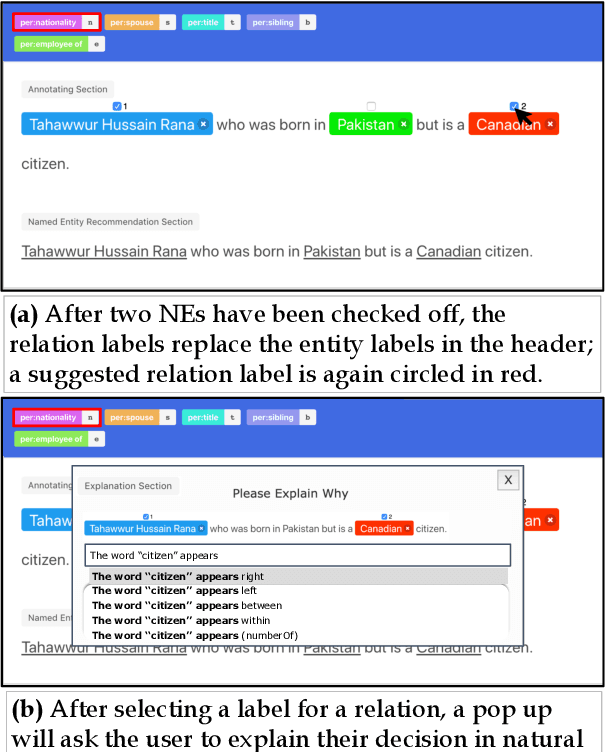

Abstract: Successfully training a deep neural network demands a huge corpus of labeled data. However, each label provides only limited information to learn from, and collecting the requisite number of labels involves massive human effort. In this work, we introduce LEAN-LIFE, a web-based, Label-Efficient AnnotatioN framework for sequence labeling and classification tasks, with an easy-to-use UI that not only allows an annotator to provide the needed labels for a task, but also enables LearnIng From Explanations for each labeling decision. Such explanations enable us to generate useful additional labeled data from unlabeled instances, bolstering the pool of available training data. On three popular NLP tasks (named entity recognition, relation extraction, sentiment analysis), we find that using this enhanced supervision allows our models to surpass competitive baseline F1 scores by more than 5-10 percentage points, while using 2X fewer labeled instances. Our framework is the first to utilize this enhanced supervision technique and does so for three important tasks, thus providing improved annotation recommendations to users and the ability to build datasets of (data, label, explanation) triples instead of the regular (data, label) pairs.
