Seungmin Lee

Performance Analysis of IEEE 802.11bn with Coordinated TDMA on Real-Time Applications

Aug 28, 2025

Abstract: Wi-Fi plays a crucial role in connecting electronic devices and providing communication services in everyday life. Recently, there has been a growing demand for services that require low-latency communication, such as real-time applications. The latest amendment to Wi-Fi, IEEE 802.11bn, is being developed to address these demands with technologies such as multiple access point coordination (MAPC). In this paper, we demonstrate that coordinated TDMA (Co-TDMA), one of the MAPC techniques, effectively reduces the latency of transmitting time-sensitive traffic. In particular, we focus on worst-case latency and jitter, which are key metrics for evaluating the performance of real-time applications. We first introduce a Co-TDMA scheduling strategy. We then investigate how this scheduling strategy impacts latency under varying levels of network congestion and traffic volume characteristics. Finally, we validate our findings through system-level simulations. Our simulation results demonstrate that Co-TDMA effectively mitigates jitter and worst-case latency for low-latency traffic, with the latter exhibiting an improvement of approximately 24%.
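The two metrics named in the abstract can be computed from per-packet latency samples roughly as follows. This is a minimal sketch, not the paper's evaluation code: the abstract does not define either metric, so taking worst-case latency as the sample maximum and jitter as the mean absolute difference between consecutive latencies are both assumptions, and the function names are hypothetical.

```python
from statistics import mean
from typing import Sequence, Tuple


def latency_metrics(latencies_ms: Sequence[float]) -> Tuple[float, float]:
    """Return (worst_case_latency, jitter) for a non-empty list of samples.

    Assumed definitions (not taken from the paper):
      - worst-case latency: the maximum observed latency
      - jitter: mean absolute difference between consecutive latencies
    """
    worst = max(latencies_ms)
    diffs = [abs(b - a) for a, b in zip(latencies_ms, latencies_ms[1:])]
    jitter = mean(diffs) if diffs else 0.0
    return worst, jitter


def relative_improvement(baseline: float, improved: float) -> float:
    """Fractional reduction of a metric relative to a baseline value."""
    return (baseline - improved) / baseline
```

With these definitions, a figure such as "approximately 24%" would correspond to `relative_improvement` returning about 0.24 when comparing worst-case latency without and with Co-TDMA.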

Def-DTS: Deductive Reasoning for Open-domain Dialogue Topic Segmentation

May 27, 2025

Abstract: Dialogue Topic Segmentation (DTS) aims to divide dialogues into coherent segments. DTS plays a crucial role in various downstream NLP tasks but suffers from chronic problems: data shortage, labeling ambiguity, and the growing complexity of recently proposed solutions. Meanwhile, despite advances in Large Language Models (LLMs) and reasoning strategies, these have rarely been applied to DTS. This paper introduces Def-DTS: Deductive Reasoning for Open-domain Dialogue Topic Segmentation, which uses LLM-based multi-step deductive reasoning to enhance DTS performance and to enable case studies based on intermediate results. Our method employs a structured prompting approach for bidirectional context summarization, utterance intent classification, and deductive topic shift detection. For intent classification, we propose a generalizable intent list for domain-agnostic dialogue intent classification. Experiments in various dialogue settings demonstrate that Def-DTS consistently outperforms traditional and state-of-the-art approaches, with each subtask contributing to improved performance, particularly in reducing type 2 errors. We also explore the potential for autolabeling, emphasizing the importance of LLM reasoning techniques in DTS.

Context-Aware LLM Translation System Using Conversation Summarization and Dialogue History

Oct 22, 2024

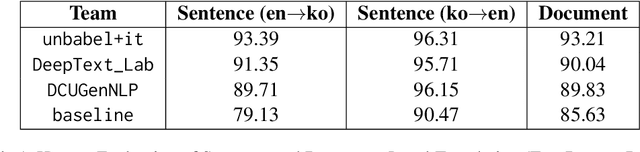

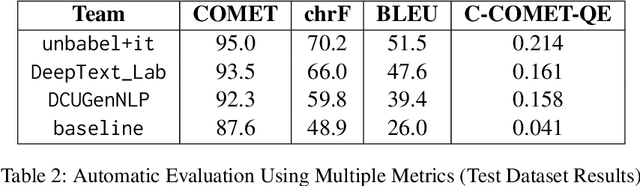

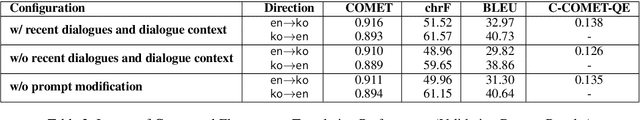

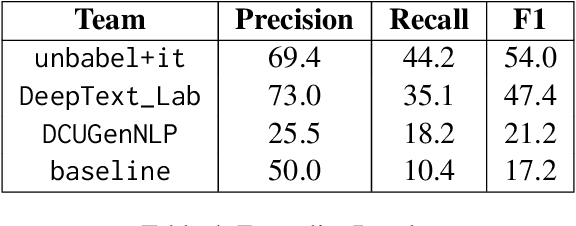

Abstract: Translating conversational text, particularly in customer support contexts, presents unique challenges due to its informal and unstructured nature. We propose a context-aware LLM translation system that leverages conversation summarization and dialogue history to enhance translation quality for the English-Korean language pair. Our approach incorporates the two most recent dialogues as raw data and a summary of earlier conversations to manage context length effectively. We demonstrate that this method significantly improves translation accuracy, maintaining coherence and consistency across conversations. This system offers a practical solution for customer support translation tasks, addressing the complexities of conversational text.
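The context-management idea in this abstract (recent dialogue kept verbatim, earlier conversation compressed into a summary) can be illustrated with a short sketch. It is not the authors' implementation: `build_translation_context` and the `summarize` callable are hypothetical names, treating each "dialogue" as one turn is an assumption, and the output layout is invented for illustration. In a real system `summarize` would presumably call an LLM.

```python
from typing import Callable, List


def build_translation_context(
    turns: List[str],
    summarize: Callable[[List[str]], str],  # hypothetical summarizer
    recent_k: int = 2,  # the abstract keeps the two most recent dialogues
) -> str:
    """Combine a summary of earlier turns with the last `recent_k` raw turns."""
    split = max(len(turns) - recent_k, 0)
    earlier, recent = turns[:split], turns[split:]

    parts = []
    if earlier:
        # Older history is compressed to keep the context short.
        parts.append("Summary of earlier conversation: " + summarize(earlier))
    if recent:
        # The most recent turns are passed through unchanged.
        parts.append("Recent dialogue:\n" + "\n".join(recent))
    return "\n\n".join(parts)
```

The resulting string would be placed in the translation prompt ahead of the sentence to translate. Keeping only a fixed number of raw turns bounds the prompt length no matter how long the conversation grows.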

Drop to Adapt: Learning Discriminative Features for Unsupervised Domain Adaptation

Oct 12, 2019

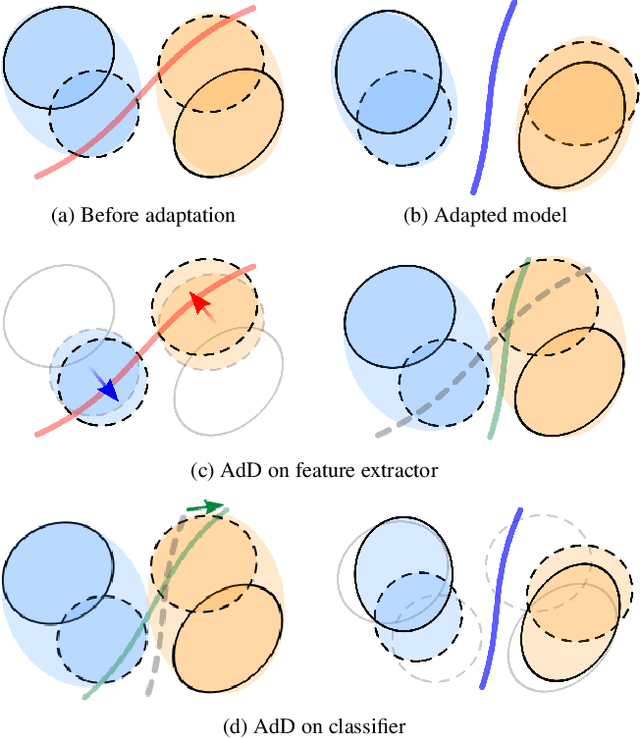

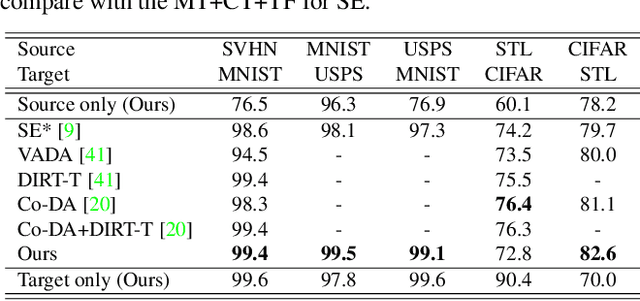

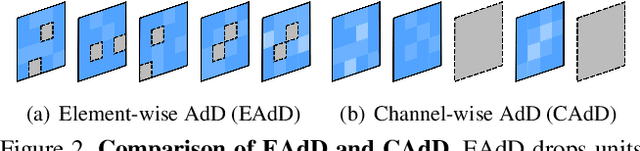

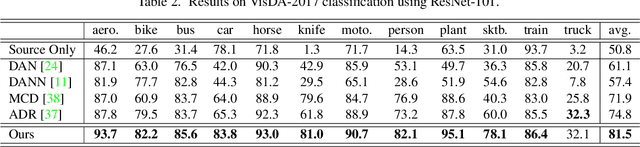

Abstract: Recent works on domain adaptation exploit adversarial training to obtain domain-invariant feature representations from the joint learning of feature extractor and domain discriminator networks. However, domain adversarial methods yield suboptimal performance because they attempt to match the distributions among the domains without considering the task at hand. We propose Drop to Adapt (DTA), which leverages adversarial dropout to learn strongly discriminative features by enforcing the cluster assumption. Accordingly, we design objective functions to support robust domain adaptation. We demonstrate the efficacy of the proposed method in various experiments and achieve consistent improvements in both image classification and semantic segmentation tasks. Our source code is available at https://github.com/postBG/DTA.pytorch.
