Seiichi Kuroki

Domain Discrepancy Measure Using Complex Models in Unsupervised Domain Adaptation

Jan 30, 2019

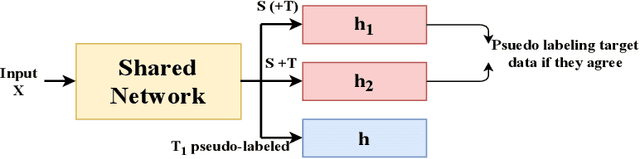

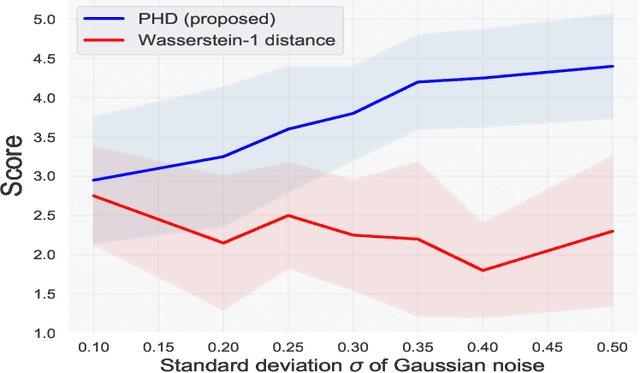

Abstract: We address the problem of measuring the difference between two domains in unsupervised domain adaptation. We point out that the existing discrepancy measures are less informative when complex models such as deep neural networks are applied. Furthermore, estimating the existing discrepancy measures can be computationally difficult, and they are limited to the binary classification task. To mitigate these shortcomings, we propose a novel discrepancy measure that is very simple to estimate for many tasks beyond binary classification, is theoretically grounded, and can be applied effectively to complex models. We also provide easy-to-interpret generalization bounds that explain the effectiveness of a family of pseudo-labeling methods in unsupervised domain adaptation. Finally, we conduct experiments to validate the usefulness of our proposed discrepancy measure.

Unsupervised Domain Adaptation Based on Source-guided Discrepancy

Sep 13, 2018

Abstract: Unsupervised domain adaptation is the problem setting where data generating distributions in the source and target domains are different, and labels in the target domain are unavailable. One important question in unsupervised domain adaptation is how to measure the difference between the source and target domains. A previously proposed discrepancy that does not use the source domain labels requires high computational cost to estimate and may lead to a loose generalization error bound in the target domain. To mitigate these problems, we propose a novel discrepancy called source-guided discrepancy ($S$-disc), which exploits labels in the source domain. As a consequence, $S$-disc can be computed efficiently with a finite sample convergence guarantee. In addition, we show that $S$-disc can provide a tighter generalization error bound than the one based on an existing discrepancy. Finally, we report experimental results that demonstrate the advantages of $S$-disc over the existing discrepancies.
