Satyam Kumar

Comparative Analysis of Personalized Voice Activity Detection Systems: Assessing Real-World Effectiveness

Jun 12, 2024

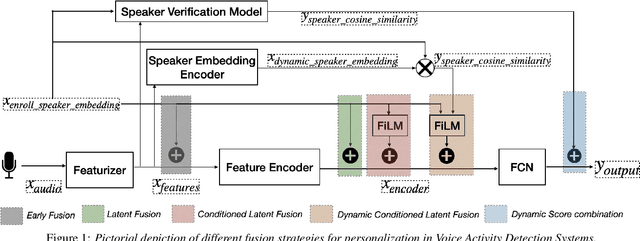

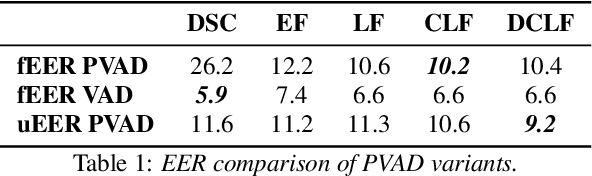

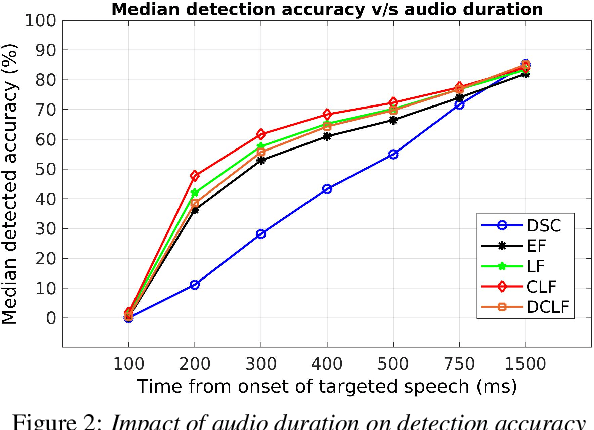

Abstract: Voice activity detection (VAD) is a critical component in applications such as speech recognition, speech enhancement, and hands-free communication systems. With the increasing demand for personalized and context-aware technologies, the need for effective personalized VAD systems has become paramount. In this paper, we present a comparative analysis of Personalized Voice Activity Detection (PVAD) systems to assess their real-world effectiveness. We introduce a comprehensive approach to assessing PVAD systems that incorporates frame-level and utterance-level error rates, detection latency, and accuracy, alongside user-level analysis. Through extensive experimentation and evaluation, we provide a thorough understanding of the strengths and limitations of various PVAD variants. This paper advances the understanding of PVAD technology by offering insights into its efficacy and viability in practical applications using a comprehensive set of metrics.
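To make the frame-level metrics named in the abstract concrete, the following is a minimal sketch and not the paper's evaluation code. It assumes each frame carries a binary label (1 for the enrolled target speaker's speech, 0 for everything else, including other speakers); the function names `frame_level_errors` and `detection_latency_frames` are hypothetical.

```python
def frame_level_errors(reference, predicted):
    """Compare per-frame labels (assumed: 1 = target speech, 0 = anything else)."""
    if len(reference) != len(predicted):
        raise ValueError("label sequences must have the same length")
    if not reference:
        raise ValueError("label sequences must not be empty")

    pairs = list(zip(reference, predicted))
    false_accepts = sum(1 for r, p in pairs if r == 0 and p == 1)
    false_rejects = sum(1 for r, p in pairs if r == 1 and p == 0)
    n_speech = sum(reference)
    n_other = len(reference) - n_speech

    return {
        # Share of non-target frames wrongly flagged as target speech.
        "false_accept_rate": false_accepts / n_other if n_other else 0.0,
        # Share of target-speech frames that were missed.
        "false_reject_rate": false_rejects / n_speech if n_speech else 0.0,
        # Share of all frames that were misclassified.
        "frame_error_rate": (false_accepts + false_rejects) / len(reference),
    }


def detection_latency_frames(reference, predicted):
    """Frames between the first reference onset and the first detection at or after it."""
    try:
        onset = reference.index(1)
    except ValueError:
        return None  # no target speech in the reference
    for t in range(onset, len(predicted)):
        if predicted[t] == 1:
            return t - onset
    return None  # the onset was never detected


reference = [0, 0, 1, 1, 1, 0]
predicted = [0, 1, 0, 1, 1, 0]
errors = frame_level_errors(reference, predicted)
latency = detection_latency_frames(reference, predicted)
```

Multiplying the latency in frames by the frame hop (for example 10 ms, an assumed value) gives a latency in time units.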

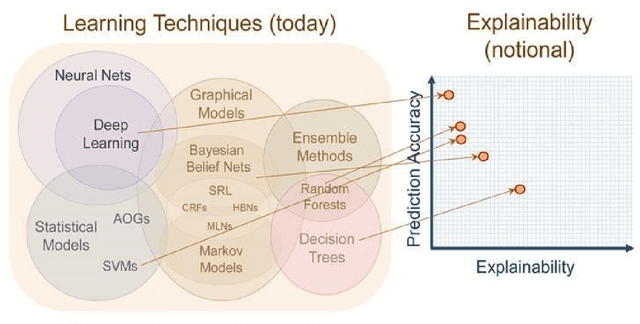

Causal Inference for Banking Finance and Insurance A Survey

Jul 31, 2023

Abstract: Causal inference plays a significant role in explaining the decisions taken by statistical models and artificial intelligence models. Of late, this field has started attracting the attention of researchers and practitioners alike. This paper presents a comprehensive survey of 37 papers published during 1992-2023 concerning the application of causal inference to banking, finance, and insurance. The papers are categorized according to the following families of domains: (i) banking, (ii) finance and its subdomains, such as corporate finance, governance finance (including financial risk and financial policy), financial economics, and behavioral finance, and (iii) insurance. Further, the paper covers the primary ingredients of causal inference, namely statistical methods such as Bayesian causal networks and Granger causality, and the associated terminology, such as counterfactuals. The review also recommends some important directions for future research. In conclusion, we observed that the application of causal inference in the banking and insurance sectors is still in its infancy, and thus more research is possible to turn it into a viable method.

Application of Causal Inference to Analytical Customer Relationship Management in Banking and Insurance

Aug 19, 2022

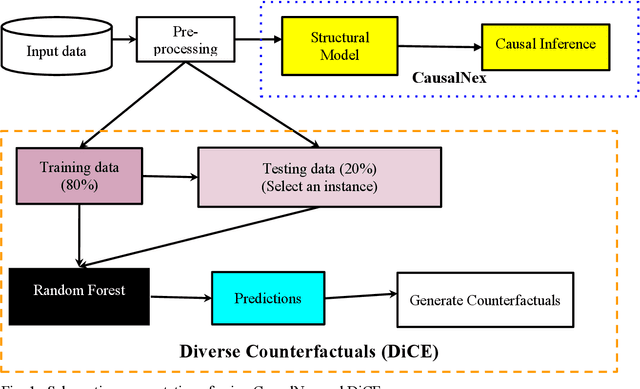

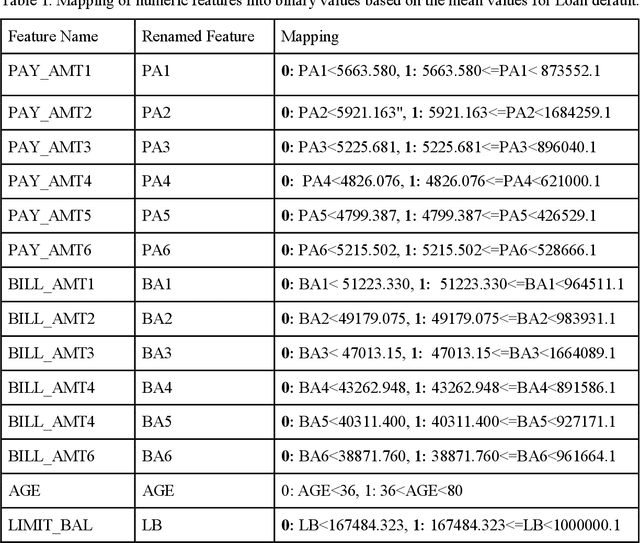

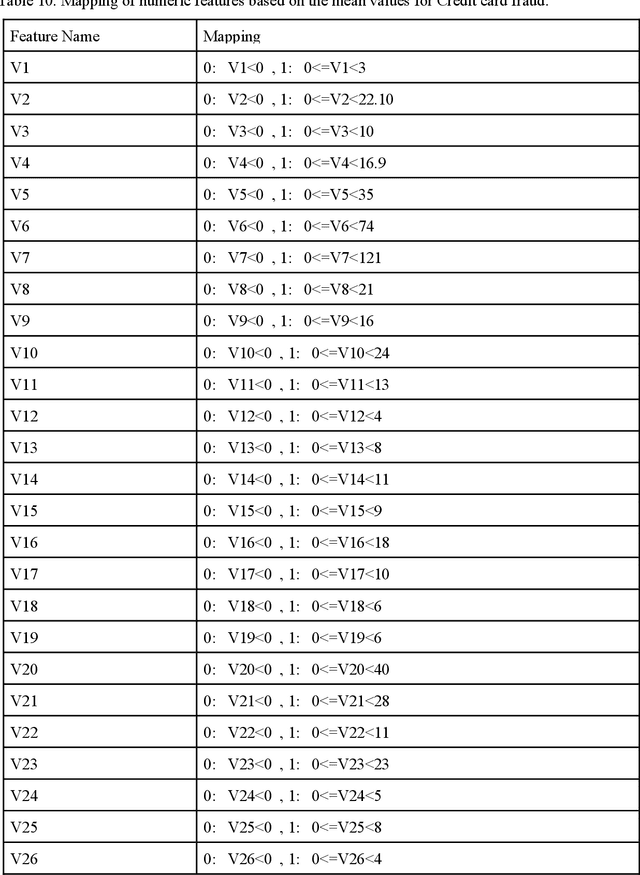

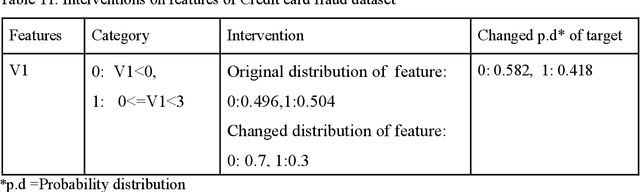

Abstract: Of late, in order to gain better acceptability across various domains, researchers have argued that machine intelligence algorithms must be able to provide explanations that humans can understand causally. This aspect, also known as causability, achieves a specific level of human-level explainability. A specific class of algorithms known as counterfactuals may be able to provide causability. In statistics, causality has been studied and applied for many years, but not in great detail in artificial intelligence (AI). In a first-of-its-kind study, we employed the principles of causal inference to provide explainability for analytical customer relationship management (ACRM) problems. In the context of banking and insurance, current research on interpretability tries to address causality-related questions such as "Why did this model make such decisions?" and "Was the model's choice influenced by a particular factor?" We propose a solution in the form of an intervention, wherein the effect on the target feature of changing the distribution of features of ACRM datasets is studied. Subsequently, a set of counterfactuals is obtained that may be furnished to any customer who demands an explanation of the decision taken by the bank or insurance company. Except for the credit card churn prediction dataset, good-quality counterfactuals were generated for the loan default, insurance fraud detection, and credit card fraud detection datasets, where changes in no more than three features are observed.
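The idea of a counterfactual that changes no more than three features can be illustrated with a brute-force search. This is only a sketch under stated assumptions, not the method used in the paper: the classifier `toy_predict`, the feature names, and the candidate values are all hypothetical.

```python
from itertools import combinations, product


def find_counterfactual(predict, instance, candidate_values, max_changes=3):
    """Return the first modified copy of `instance` whose prediction differs.

    Subsets of features are tried in increasing size, so the result changes
    as few features as this exhaustive search can manage (at most max_changes).
    """
    original = predict(instance)
    features = list(candidate_values)
    for k in range(1, max_changes + 1):
        for subset in combinations(features, k):
            for values in product(*(candidate_values[f] for f in subset)):
                candidate = dict(instance)
                candidate.update(zip(subset, values))
                if candidate != instance and predict(candidate) != original:
                    return candidate
    return None  # no counterfactual found within the allowed changes


# Hypothetical loan-default rule, used only to exercise the search.
def toy_predict(customer):
    risky = customer["debt_ratio"] > 0.5 and customer["late_payments"] >= 2
    return "default" if risky else "repay"


customer = {"debt_ratio": 0.7, "late_payments": 3, "income": 30}
alternatives = {"debt_ratio": [0.4], "late_payments": [0, 1]}
counterfactual = find_counterfactual(toy_predict, customer, alternatives)
changed = [f for f in customer if customer[f] != counterfactual[f]]
```

Here `changed` lists the features a customer would need to alter for the decision to flip, which is the kind of explanation the abstract describes furnishing on request.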

Explainable Reinforcement Learning on Financial Stock Trading using SHAP

Aug 18, 2022

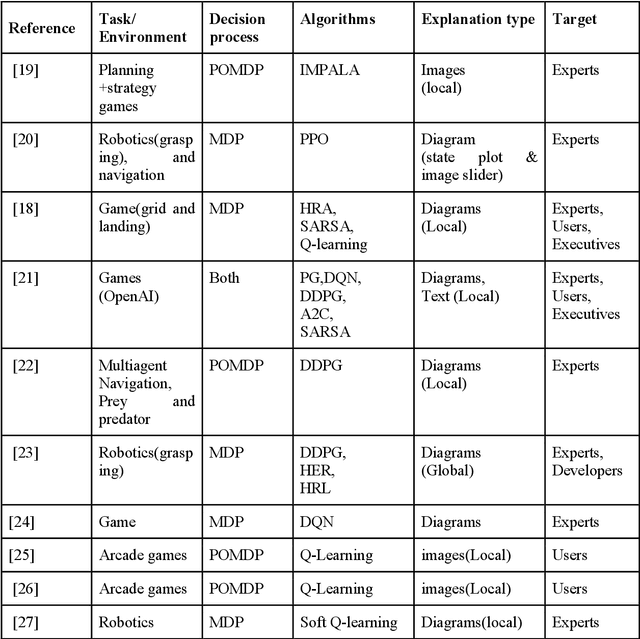

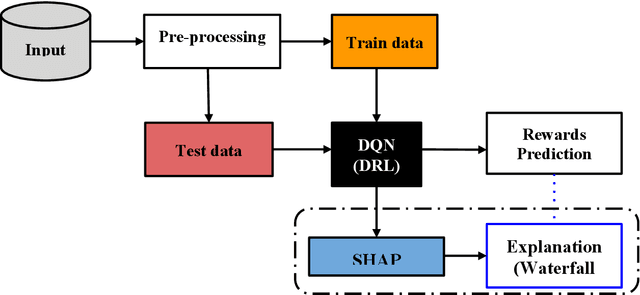

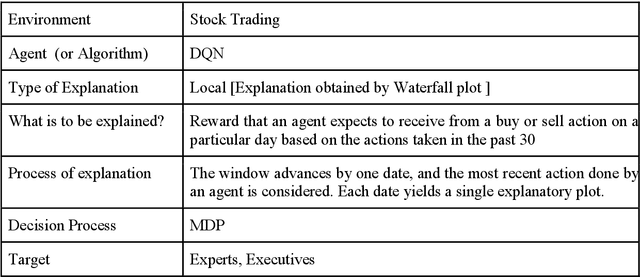

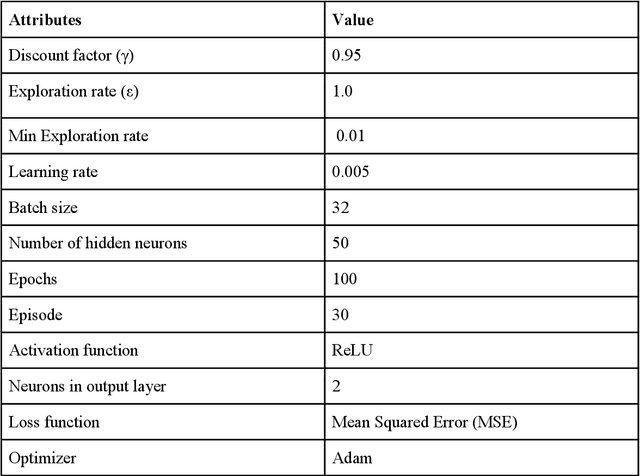

Abstract: Explainable Artificial Intelligence (XAI) research has gained prominence in recent years in response to the demand from user communities for greater transparency and trust in AI. This is especially critical because AI is being adopted in sensitive fields such as finance and medicine, where the implications for society, ethics, and safety are immense. Following thorough systematic evaluations, work in XAI has primarily focused on Machine Learning (ML) for categorization, decision, or action. To the best of our knowledge, no work has been reported that offers an Explainable Reinforcement Learning (XRL) method for trading financial stocks. In this paper, we propose employing SHapley Additive exPlanations (SHAP) on a popular deep reinforcement learning architecture, the deep Q network (DQN), to explain an action of an agent at a given instance in financial stock trading. To demonstrate the effectiveness of our method, we tested it on two popular datasets, namely SENSEX and DJIA, and reported the results.
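SHAP attributions are based on Shapley values, which can be computed exactly for a small number of features by enumerating feature subsets. The sketch below illustrates that underlying calculation only; it does not use the SHAP library or a trained DQN. The linear `toy_q_buy` function stands in for the Q-value of one action, and the state features and all-zero baseline are assumptions made for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, x, baseline):
    """Exact Shapley values of value_fn at x, relative to a baseline input.

    Features outside a coalition are replaced by their baseline value.
    The cost grows exponentially with len(x), so this suits toy inputs only.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [k for k in range(n) if k != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = [x[k] if (k in subset or k == i) else baseline[k]
                          for k in range(n)]
                without_i = [x[k] if k in subset else baseline[k]
                             for k in range(n)]
                phi[i] += weight * (value_fn(with_i) - value_fn(without_i))
    return phi


# Hypothetical stand-in for a DQN's Q-value of the "buy" action.
def toy_q_buy(state):
    return 2.0 * state[0] - 1.0 * state[1] + 0.5 * state[2]


state = [1.0, 2.0, 4.0]      # assumed features of the state being explained
baseline = [0.0, 0.0, 0.0]   # assumed reference state
phi = shapley_values(toy_q_buy, state, baseline)
```

The attributions sum to the difference between the Q-value at `state` and at `baseline`, which is the additivity property that gives SHAP its name.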

Explainable Deep Belief Network based Auto encoder using novel Extended Garson Algorithm

Jul 18, 2022

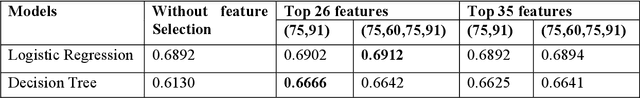

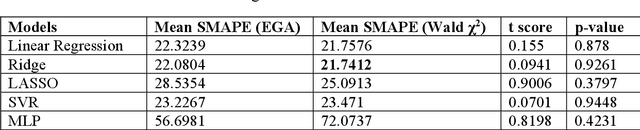

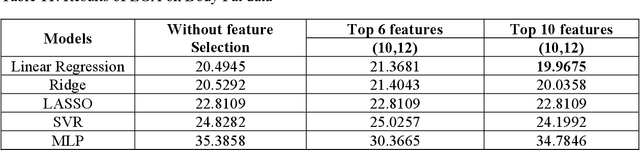

Abstract: The most difficult task in machine learning is to interpret trained shallow neural networks. Deep neural networks (DNNs) provide impressive results on a large number of tasks, but it is generally still unclear how decisions are made by such a trained deep neural network. Providing feature importance is the most important and popular interpretation technique used in shallow and deep neural networks. In this paper, we develop an algorithm extending the idea of the Garson Algorithm to explain the Deep Belief Network based Auto-encoder (DBNA). It is used to determine the contribution of each input feature in the DBN, and it can be used for any kind of neural network with many hidden layers. The effectiveness of this method is tested on both classification and regression datasets taken from the literature. Important features identified by this method are compared against those obtained by Wald chi-square (χ²). For 2 out of 4 classification datasets and 2 out of 5 regression datasets, our proposed methodology resulted in the identification of better-quality features, leading to statistically more significant results vis-à-vis Wald χ².
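For orientation, the classic Garson algorithm that the paper extends can be sketched for a network with one hidden layer and a single output. This is one common statement of the original single-hidden-layer method, not the extended algorithm proposed in the paper; the weight layout and the example weights are assumptions.

```python
def garson_importance(w_in_hidden, w_hidden_out):
    """Relative input importance for a one-hidden-layer, single-output network.

    w_in_hidden[i][j] is the weight from input i to hidden unit j, and
    w_hidden_out[j] is the weight from hidden unit j to the output.
    """
    n_in = len(w_in_hidden)
    n_hidden = len(w_hidden_out)

    # Total absolute incoming weight of each hidden unit.
    col_sums = [sum(abs(w_in_hidden[i][j]) for i in range(n_in))
                for j in range(n_hidden)]

    raw = []
    for i in range(n_in):
        total = 0.0
        for j in range(n_hidden):
            if col_sums[j] > 0:
                # Input i's share of hidden unit j, scaled by that unit's
                # absolute weight to the output.
                total += abs(w_in_hidden[i][j]) / col_sums[j] * abs(w_hidden_out[j])
        raw.append(total)

    norm = sum(raw)
    if norm == 0:
        raise ValueError("all weights are zero; importance is undefined")
    return [r / norm for r in raw]


# Made-up weights: 2 inputs, 2 hidden units, 1 output.
importance = garson_importance([[1.0, -1.0], [3.0, 1.0]], [2.0, -2.0])
```

Because only absolute weights are used, the result ranks inputs by magnitude of influence and says nothing about the direction of the effect.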
