Application of Causal Inference to Analytical Customer Relationship Management in Banking and Insurance


Aug 19, 2022

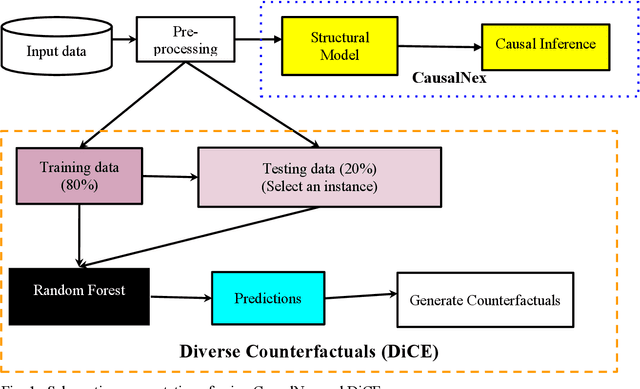

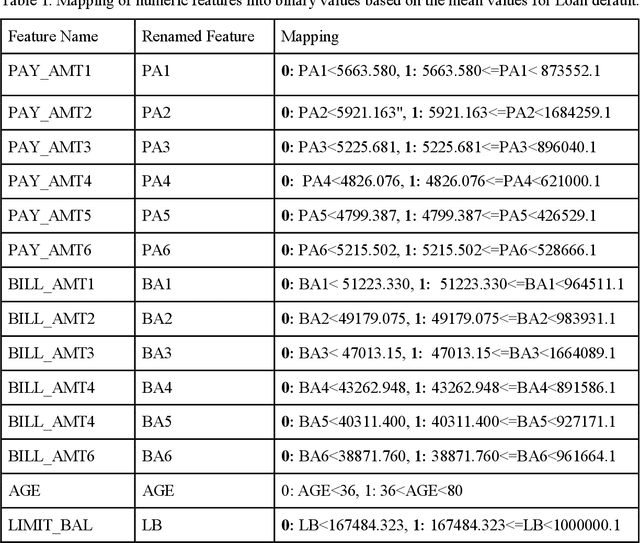

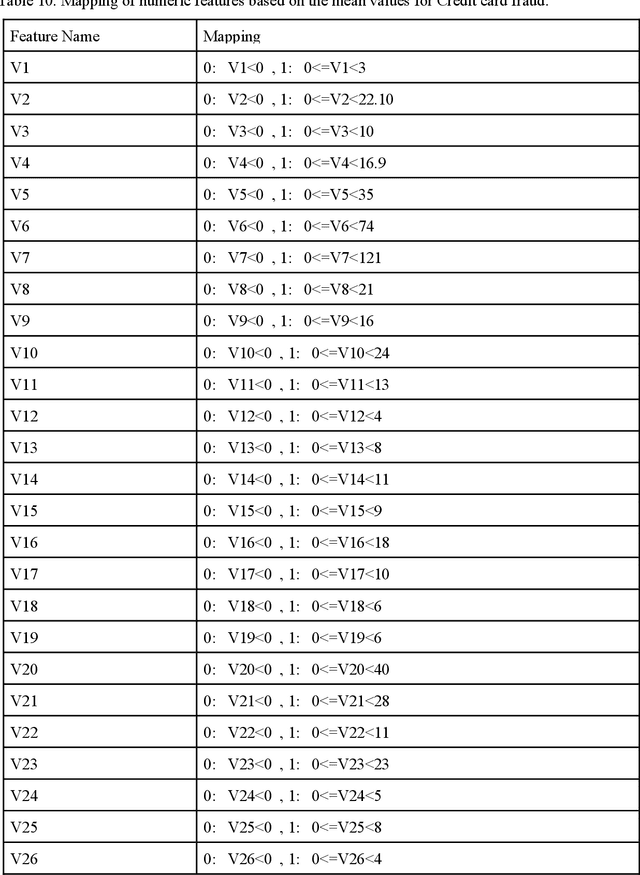

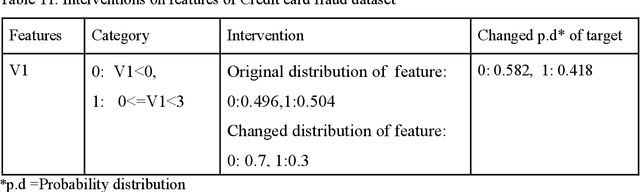

Recently, researchers have argued that, to gain wider acceptance across domains, machine intelligence algorithms must be able to provide explanations that humans can understand causally. This property, known as causability, achieves a specific level of human-level explainability. A class of algorithms known as counterfactuals may be able to provide causability. Causality has been studied and applied in statistics for many years, but it has received far less attention in artificial intelligence (AI). In a first-of-its-kind study, we employ the principles of causal inference to provide explainability for analytical customer relationship management (ACRM) problems. In banking and insurance, current research on interpretability tries to address causality-related questions such as "Why did this model make this decision?" and "Was the model's choice influenced by a particular factor?" We propose a solution in the form of an intervention, in which we study how changing the distribution of the features of ACRM datasets affects the target feature. We then obtain a set of counterfactuals that may be furnished to any customer who demands an explanation of a decision taken by the bank or insurance company. Except for the credit card churn prediction dataset, good-quality counterfactuals were generated for the loan default, insurance fraud detection, and credit card fraud detection datasets, with changes in no more than three features.
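To make the two ideas in the abstract concrete, the following is a minimal sketch, not the authors' implementation. It assumes a hypothetical toy logistic scorer for loan default; the feature names, weights, and candidate values are invented for illustration. `intervene` forces one feature to a fixed value for every customer and reports the mean predicted probability, and `find_counterfactuals` uses a plain brute-force search (a stand-in for whatever counterfactual generator the paper uses) to find changes to at most three features that flip the decision.

```python
from itertools import combinations, product
import math

# Hypothetical toy model: these weights are illustrative, not from the paper.
WEIGHTS = {"income": -0.04, "debt_ratio": 3.0, "late_payments": 0.8}
BIAS = -0.5


def predict_default_prob(x):
    """Logistic score for a customer dict x (stand-in for a trained model)."""
    z = BIAS + sum(WEIGHTS[k] * x[k] for k in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def intervene(rows, feature, value):
    """Set `feature` to `value` in every row; return the mean predicted probability."""
    probs = [predict_default_prob({**r, feature: value}) for r in rows]
    return sum(probs) / len(probs)


def find_counterfactuals(x, candidates, max_changes=3, threshold=0.5):
    """Brute-force search for feature changes that flip the decision to 'no default'.

    `candidates` maps each mutable feature to the values it may take.
    Returns a list of {feature: new_value} dicts, all using the smallest
    number of changed features that works (at most `max_changes`).
    """
    for k in range(1, max_changes + 1):
        found = []
        for feats in combinations(candidates, k):
            for values in product(*(candidates[f] for f in feats)):
                change = dict(zip(feats, values))
                if any(x[f] == v for f, v in change.items()):
                    continue  # skip "changes" that keep the original value
                if predict_default_prob({**x, **change}) < threshold:
                    found.append(change)
        if found:
            return found  # stop at the sparsest explanations
    return []


# Hypothetical customer and candidate values (income in thousands).
customer = {"income": 30, "debt_ratio": 0.6, "late_payments": 3}
candidates = {
    "income": [30, 60, 90],
    "debt_ratio": [0.2, 0.6],
    "late_payments": [0, 3],
}

if __name__ == "__main__":
    print("P(default):", round(predict_default_prob(customer), 3))
    for cf in find_counterfactuals(customer, candidates):
        print("counterfactual:", cf)
```

Returning only the sparsest set of changes mirrors the abstract's emphasis on counterfactuals that alter no more than three features; a practical generator would also need to respect feature plausibility and immutability, which this sketch ignores.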
