Sanjit Singh Batra

POET: Protocol Optimization via Eligibility Tuning

Jan 30, 2026

Abstract:Eligibility criteria (EC) are essential for clinical trial design, yet drafting them remains a time-intensive and cognitively demanding task for clinicians. Existing automated approaches often fall at one of two extremes: they either require highly structured inputs, such as predefined entities, to generate specific criteria, or rely on end-to-end systems that produce full eligibility criteria from minimal input such as trial descriptions. Both extremes limit practical utility. In this work, we propose a guided generation framework that introduces interpretable semantic axes, such as Demographics, Laboratory Parameters, and Behavioral Factors, to steer EC generation. These axes, derived using large language models, offer a middle ground between specificity and usability, enabling clinicians to guide generation without specifying exact entities. In addition, we present a reusable rubric-based evaluation framework that assesses generated criteria along clinically meaningful dimensions. Our results show that our guided generation approach consistently outperforms unguided generation across automatic, rubric-based, and clinician evaluations, offering a practical and interpretable solution for AI-assisted trial design.

Health-SCORE: Towards Scalable Rubrics for Improving Health-LLMs

Jan 26, 2026

Abstract:Rubrics are essential for evaluating open-ended LLM responses, especially in safety-critical domains such as healthcare. However, creating high-quality, domain-specific rubrics typically requires significant human expertise, time, and development cost, making rubric-based evaluation and training difficult to scale. In this work, we introduce Health-SCORE, a generalizable and scalable rubric-based training and evaluation framework that substantially reduces rubric development costs without sacrificing performance. We show that Health-SCORE provides two practical benefits beyond standalone evaluation: it can be used as a structured reward signal to guide reinforcement learning with safety-aware supervision, and it can be incorporated directly into prompts to improve response quality through in-context learning. Across open-ended healthcare tasks, Health-SCORE achieves evaluation quality comparable to human-created rubrics while significantly lowering development effort, making rubric-based evaluation and training more scalable.

Surpassing GPT-4 Medical Coding with a Two-Stage Approach

Nov 22, 2023

Abstract:Recent advances in large language models (LLMs) show potential for clinical applications, such as clinical decision support and trial recommendations. However, GPT-4 predicts an excessive number of ICD codes for medical coding tasks, leading to high recall but low precision. To tackle this challenge, we introduce LLM-codex, a two-stage approach to predicting ICD codes that first generates evidence proposals using an LLM and then employs an LSTM-based verification stage. The LSTM learns from both the LLM's high recall and human experts' high precision, using a custom loss function. According to experiments on the MIMIC dataset, our model is the only approach that simultaneously achieves state-of-the-art results in medical coding accuracy, accuracy on rare codes, and sentence-level evidence identification to support coding decisions, without training on human-annotated evidence.
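
The precision/recall trade-off described in this abstract can be illustrated with a toy sketch. This is not the LLM-codex implementation: the ICD codes, the verifier scores, and the `verify` helper below are all hypothetical stand-ins, and a fixed threshold replaces the LSTM-based verification stage. It only shows how filtering an over-generated proposal set can raise precision while keeping recall.

```python
def precision_recall(predicted, gold):
    """Return (precision, recall) for a predicted code set against a gold set."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    return precision, recall


def verify(proposals, scores, threshold=0.5):
    """Hypothetical second stage: keep proposals whose score clears the threshold."""
    return {code for code in proposals if scores.get(code, 0.0) >= threshold}


# Hypothetical gold-standard codes for one clinical note.
gold_codes = {"I10", "E11.9"}

# Stage 1 (assumed): a generator that over-predicts, so recall is high
# but precision is low.
proposals = {"I10", "E11.9", "J45.909", "N18.9", "K21.9"}

# Stage 2 (assumed): made-up verifier scores standing in for a learned model.
verifier_scores = {
    "I10": 0.93,
    "E11.9": 0.81,
    "J45.909": 0.22,
    "N18.9": 0.40,
    "K21.9": 0.15,
}

stage1 = precision_recall(proposals, gold_codes)
verified = verify(proposals, verifier_scores)
stage2 = precision_recall(verified, gold_codes)

print("stage 1 (precision, recall):", stage1)
print("stage 2 (precision, recall):", stage2)
```

In this toy setup the verification step discards the three spurious proposals, so precision rises while recall is unchanged. A real verifier would make mistakes in both directions.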

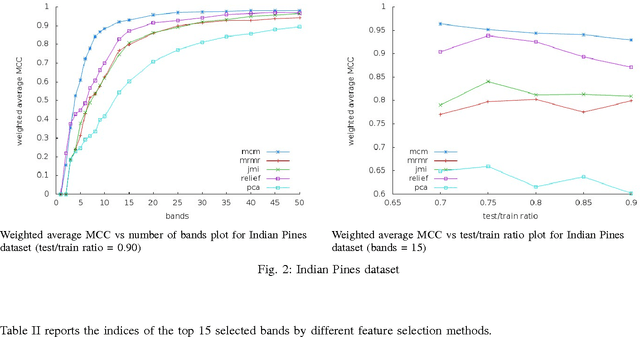

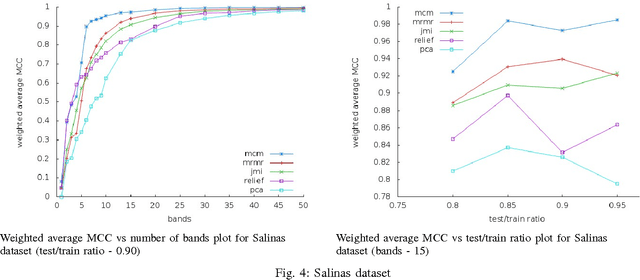

Feature Selection for classification of hyperspectral data by minimizing a tight bound on the VC dimension

Sep 27, 2015

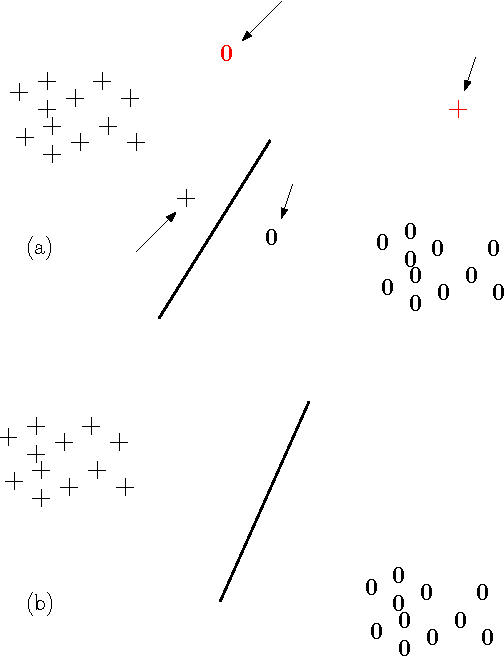

Abstract:Hyperspectral data consists of a large number of features, which require sophisticated analysis to be extracted. A popular approach to reduce computational cost, facilitate information representation, and accelerate knowledge discovery is to eliminate bands that do not improve the classification and analysis methods being applied. In particular, algorithms that perform band elimination should be designed to take advantage of the specifics of the classification method being used. This paper employs a recently proposed filter-feature-selection algorithm based on minimizing a tight bound on the VC dimension. We have successfully applied this algorithm to determine a reasonable subset of bands, without any user-defined stopping criteria, on widely used hyperspectral images, and we demonstrate that this method outperforms state-of-the-art methods in terms of both sparsity of the feature set and accuracy of classification.

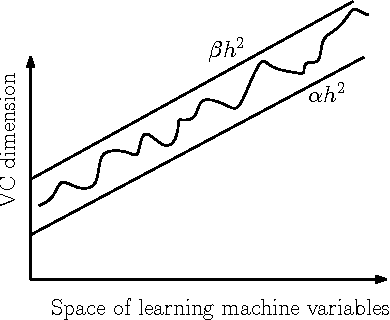

Learning a Fuzzy Hyperplane Fat Margin Classifier with Minimum VC dimension

Jan 11, 2015

Abstract:The Vapnik-Chervonenkis (VC) dimension measures the complexity of a learning machine, and a low VC dimension leads to good generalization. The recently proposed Minimal Complexity Machine (MCM) learns a hyperplane classifier by minimizing an exact bound on the VC dimension. This paper extends the MCM classifier to the fuzzy domain. The use of a fuzzy membership is known to reduce the effect of outliers and of noise on learning. Experimental results show that, on a number of benchmark datasets, the fuzzy MCM classifier outperforms SVMs and the conventional MCM in terms of generalization, and that the fuzzy MCM uses fewer support vectors. On several benchmark datasets, the fuzzy MCM classifier yields excellent test set accuracies while using one-tenth the number of support vectors used by SVMs.
