Sanjeev V. Namjoshi

Serverless Federated Learning with flwr-serverless

Oct 23, 2023

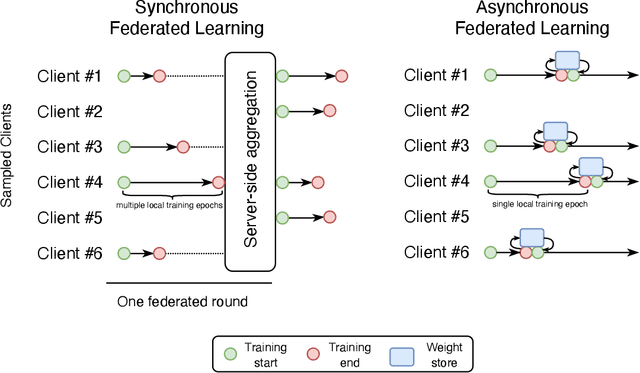

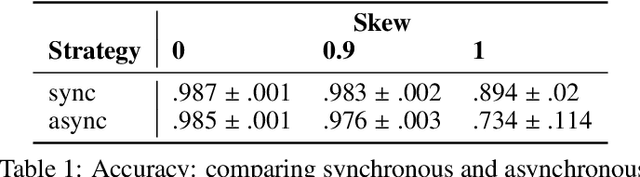

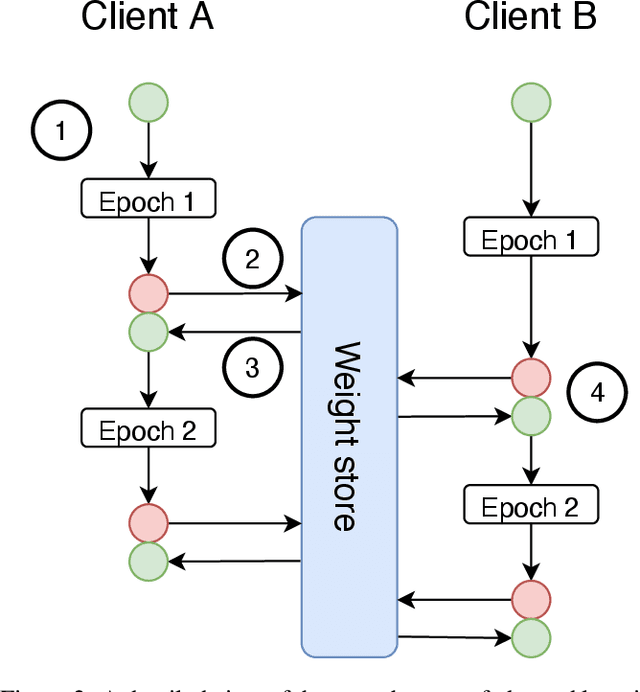

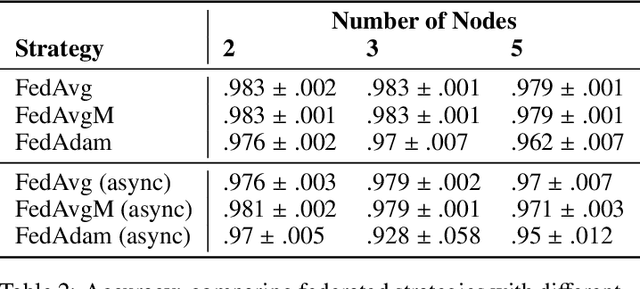

Abstract: Federated learning is becoming increasingly relevant and popular as we witness a surge in the collection and storage of personally identifiable information. Alongside these developments, governments around the world have proposed stronger protections for individuals' data, and interest in data privacy measures has grown. As deep learning continues to become more relevant in new and existing domains, it is vital to develop strategies like federated learning that can effectively train on data from different sources, such as edge devices, without compromising security and privacy. Recently, the Flower (`Flwr`) Python package was introduced to provide a scalable, flexible, and easy-to-use framework for implementing federated learning. However, to date, Flower can only run synchronous federated learning, which can be costly and time-consuming because the process is bottlenecked by client-side training jobs that are slow or fragile. Here, we introduce `flwr-serverless`, a wrapper around the Flower package that extends its functionality to allow for both synchronous and asynchronous federated learning with minimal modification to Flower's design paradigm. Furthermore, our approach allows federated learning to run without a central server, which broadens its domains of application and makes it more accessible. This paper presents the design details and usage of this approach through a series of experiments conducted using public datasets. Overall, we believe that our approach decreases the time and cost of running federated training and provides an easier way to implement and experiment with federated learning systems.
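To make the idea of asynchronous federated learning without a central server more concrete, the following is a minimal sketch, not the `flwr-serverless` API. It assumes that each node writes its latest weights to some shared storage and then averages whatever updates are currently available, weighted by local dataset size, without waiting for the other nodes. All names here (`Update`, `SharedStore`, `federated_average`, `async_step`) are hypothetical and chosen only for illustration, and the in-memory dictionary stands in for whatever shared storage a real deployment would use.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Update:
    weights: List[float]  # flattened model parameters
    num_examples: int     # local training-set size, used as the averaging weight


class SharedStore:
    """Hypothetical stand-in for storage that every node can read and write."""

    def __init__(self) -> None:
        self._latest: Dict[str, Update] = {}

    def push(self, node_id: str, update: Update) -> None:
        # Keep only the most recent update from each node.
        self._latest[node_id] = update

    def pull_all(self) -> List[Update]:
        return list(self._latest.values())


def federated_average(updates: List[Update]) -> List[float]:
    """Average parameters across updates, weighted by number of examples."""
    total = sum(u.num_examples for u in updates)
    if total <= 0:
        raise ValueError("need at least one update with a positive example count")
    dim = len(updates[0].weights)
    return [
        sum(u.weights[i] * u.num_examples for u in updates) / total
        for i in range(dim)
    ]


def async_step(store: SharedStore, node_id: str,
               local_weights: List[float], num_examples: int) -> List[float]:
    """One asynchronous round for a single node.

    The node publishes its own weights, then aggregates with whatever
    the other nodes have published so far. It never blocks on slow peers.
    """
    store.push(node_id, Update(local_weights, num_examples))
    return federated_average(store.pull_all())


store = SharedStore()
weights_a = async_step(store, "node_a", [1.0, 2.0], num_examples=10)
weights_b = async_step(store, "node_b", [3.0, 4.0], num_examples=30)
```

In this sketch the first node sees only its own update, while the second node averages over both. A synchronous variant would instead wait until every expected node had pushed before averaging, which is the bottleneck the abstract describes.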

A transformer-based deep learning approach for classifying brain metastases into primary organ sites using clinical whole brain MRI images

Oct 07, 2021

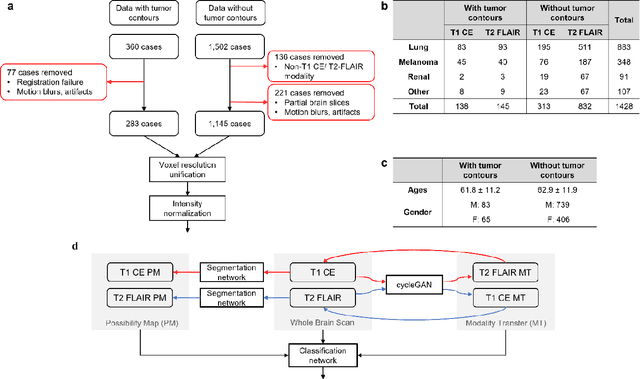

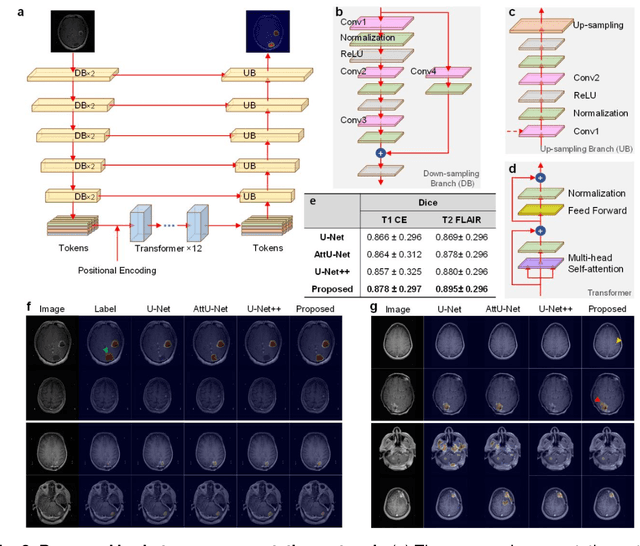

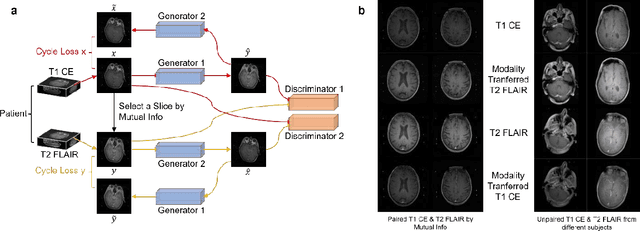

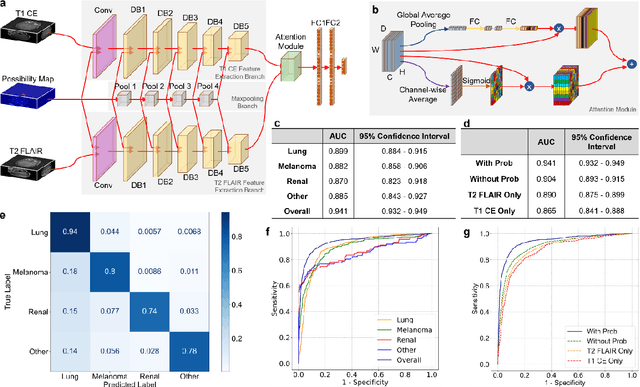

Abstract: Treatment decisions for brain metastatic disease are driven by knowledge of the primary organ site cancer histology, which often requires invasive biopsy. This study aims to develop a novel deep learning approach for accurate and rapid non-invasive identification of brain metastatic tumor histology with conventional whole-brain MRI. The use of clinical whole-brain data and the end-to-end pipeline obviate the need for external human intervention. This IRB-approved single-site retrospective study comprised patients (n=1,293) referred for MRI treatment planning and gamma knife radiosurgery from July 2000 to May 2019. Contrast-enhanced T1-weighted and T2-weighted Fluid-Attenuated Inversion Recovery brain MRI exams (n=1,428) were minimally preprocessed (voxel resolution unification and signal-intensity rescaling/normalization), requiring only seconds per MRI scan, and input into the proposed deep learning workflow for tumor segmentation, modality transfer, and classification of the primary site associated with brain metastatic disease into one of four classes (lung, melanoma, renal, and other). Ten-fold cross-validation yielded an overall AUC of 0.941, a lung class AUC of 0.899, a melanoma class AUC of 0.882, a renal class AUC of 0.870, and an other class AUC of 0.885. These results establish that whole-brain imaging features are sufficiently discriminative to allow accurate diagnosis of the primary organ site of malignancy. Our end-to-end deep learning-based radiomic method has great translational potential for classifying metastatic tumor types using whole-brain MRI images, without additional human intervention. Further refinement may offer invaluable tools to expedite primary organ site cancer identification for the treatment of brain metastatic disease and to improve patient outcomes and survival.
