Sandilya Sai Garimella

MI-HGNN: Morphology-Informed Heterogeneous Graph Neural Network for Legged Robot Contact Perception

Sep 17, 2024

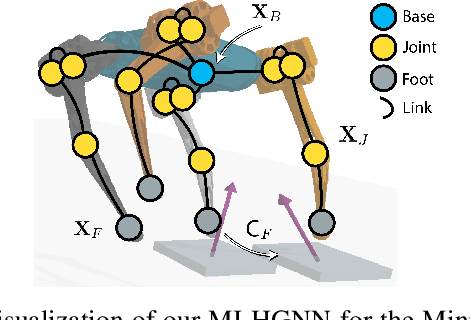

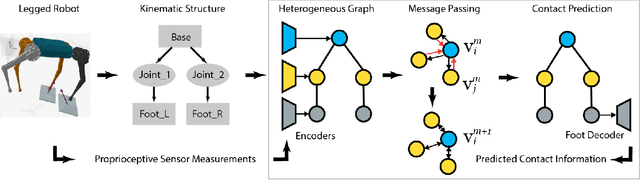

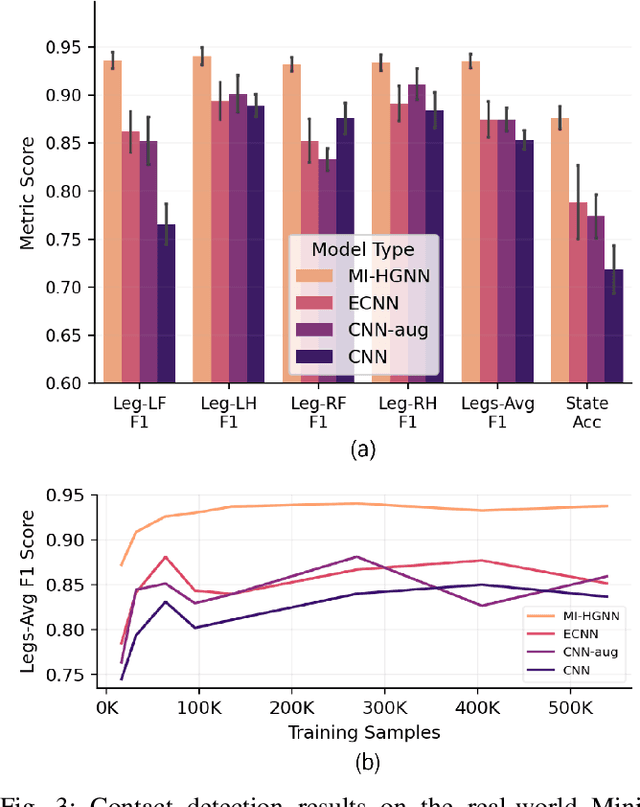

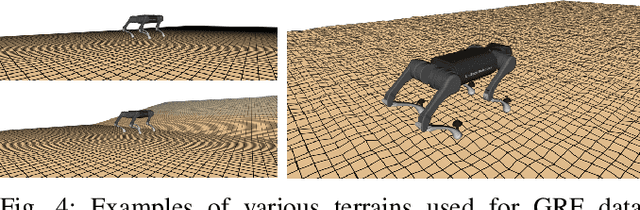

Abstract: We present a Morphology-Informed Heterogeneous Graph Neural Network (MI-HGNN) for learning-based contact perception. The architecture and connectivity of the MI-HGNN are constructed from the robot morphology, in which nodes and edges are robot joints and links, respectively. By incorporating the morphology-informed constraints into a neural network, we improve a learning-based approach using model-based knowledge. We apply the proposed MI-HGNN to two contact perception problems, and conduct extensive experiments using both real-world and simulated data collected using two quadruped robots. Our experiments demonstrate the superiority of our method in terms of effectiveness, generalization ability, model efficiency, and sample efficiency. Our MI-HGNN improved the performance of a state-of-the-art model that leverages robot morphological symmetry by 8.4% with only 0.21% of its parameters. Although MI-HGNN is applied to contact perception problems for legged robots in this work, it can be seamlessly applied to other types of multi-body dynamical systems and has the potential to improve other robot learning frameworks. Our code is made publicly available at https://github.com/lunarlab-gatech/Morphology-Informed-HGNN.
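As a rough illustration of building graph connectivity from robot morphology, the sketch below constructs a toy kinematic tree for a quadruped and groups its edges by the types of the nodes they connect. The leg names, joint names, and the extra `base` and `foot` node types are assumptions made for this example; it is not taken from the linked repository.

```python
from collections import defaultdict

LEGS = ["FL", "FR", "RL", "RR"]      # hypothetical leg names
JOINTS = ["hip", "thigh", "calf"]    # hypothetical joints per leg


def build_morphology_graph(legs=LEGS, joints=JOINTS):
    """Return (node_types, edges) for a toy quadruped kinematic tree."""
    node_types = {"base": "base"}
    edges = []  # each edge stands for one rigid link between two nodes
    for leg in legs:
        parent = "base"
        for joint in joints:
            name = f"{leg}_{joint}"
            node_types[name] = "joint"
            edges.append((parent, name))
            parent = name
        foot = f"{leg}_foot"
        node_types[foot] = "foot"
        edges.append((parent, foot))
    return node_types, edges


def group_edges_by_type(node_types, edges):
    """Group edges by (source type, target type), one group per relation."""
    grouped = defaultdict(list)
    for src, dst in edges:
        grouped[(node_types[src], node_types[dst])].append((src, dst))
    return dict(grouped)


node_types, edges = build_morphology_graph()
grouped = group_edges_by_type(node_types, edges)
for relation, items in grouped.items():
    print(relation, len(items))
```

In a heterogeneous graph neural network, each relation group would typically get its own message-passing weights, so the network structure mirrors the kinematic structure of the robot.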

Communication- and Computation-Efficient Distributed Decision-Making in Multi-Robot Networks

Jul 15, 2024

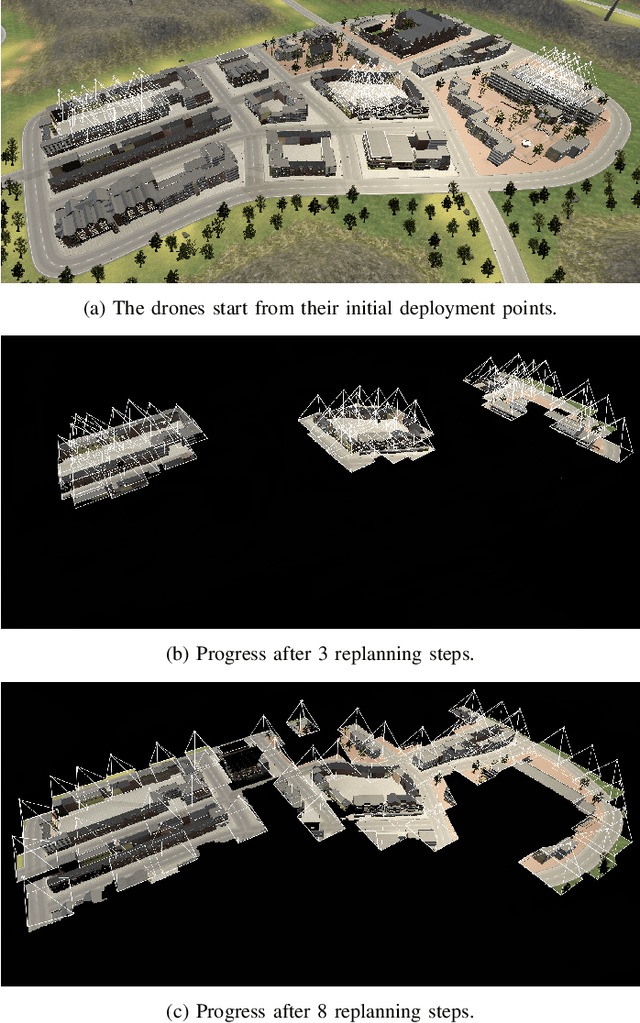

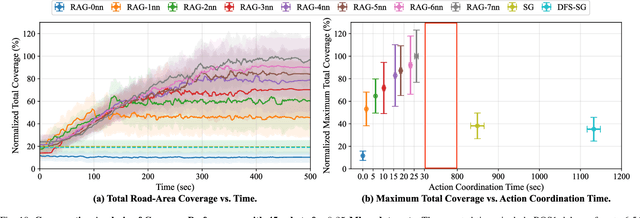

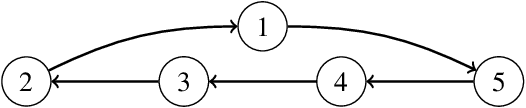

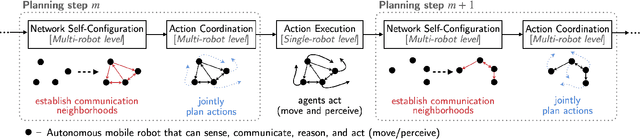

Abstract: We provide a distributed coordination paradigm that enables scalable and near-optimal joint motion planning among multiple robots. Our coordination paradigm contrasts with current paradigms that are either near-optimal but impractical for replanning times or real-time but offer no near-optimality guarantees. We are motivated by the future of collaborative mobile autonomy, where distributed teams of robots will coordinate via vehicle-to-vehicle (v2v) communication to execute information-heavy tasks like mapping, surveillance, and target tracking. To enable rapid distributed coordination, we must curtail the explosion of information-sharing across the network, thus limiting robot coordination. However, this can lead to suboptimal plans, causing overlapping trajectories instead of complementary ones. We make theoretical and algorithmic contributions to balance the trade-off between decision speed and optimality. We introduce tools for distributed submodular optimization, where submodularity is a diminishing-returns property that arises in information-gathering tasks. Theoretically, we analyze how local network topology affects near-optimality at the global level. Algorithmically, we provide a communication- and computation-efficient coordination algorithm for agents to balance the trade-off. Our algorithm is up to two orders of magnitude faster than competitive near-optimal algorithms. In simulations of surveillance tasks with up to 45 robots, it enables real-time planning on the order of 1 Hz with superior coverage performance. To enable the simulations, we provide a high-fidelity simulator that extends AirSim by integrating a collaborative autonomy pipeline and simulating v2v communication delays.
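To make the diminishing-returns idea concrete, here is a minimal sketch of the classical sequential greedy baseline for a coverage objective, in which each robot in turn picks the action with the largest marginal gain given earlier choices. This is not the algorithm proposed in the paper; the coverage objective, the cell numbering, and the candidate actions are all assumptions for illustration.

```python
def coverage(selected_sets):
    """Submodular objective: number of distinct cells covered."""
    covered = set()
    for cells in selected_sets:
        covered |= cells
    return len(covered)


def sequential_greedy(candidates_per_robot):
    """Each robot in turn picks the action with the largest marginal gain."""
    chosen = []
    for candidates in candidates_per_robot:
        base = coverage(chosen)
        best = max(
            candidates,
            key=lambda cells: coverage(chosen + [cells]) - base,
        )
        chosen.append(best)
    return chosen


# Hypothetical candidate actions: each set lists the cells an action would cover.
candidates_per_robot = [
    [{1, 2, 3}, {3, 4}],
    [{1, 2}, {4, 5}],
    [{2, 3}, {5, 6, 7}],
]

plan = sequential_greedy(candidates_per_robot)
total = coverage(plan)

# Diminishing returns: the same action is worth less once others are chosen.
gain_alone = coverage([{3, 4}]) - coverage([])
gain_after = coverage([{1, 2, 3}, {3, 4}]) - coverage([{1, 2, 3}])
print(plan, total, gain_alone, gain_after)
```

Sequential greedy requires every robot to wait for all earlier robots' choices, which is the kind of information-sharing cost that the abstract describes trading off against optimality.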

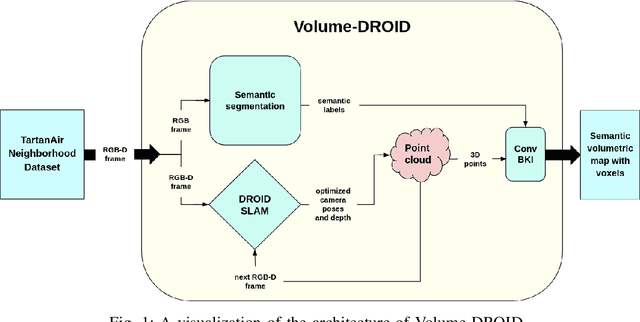

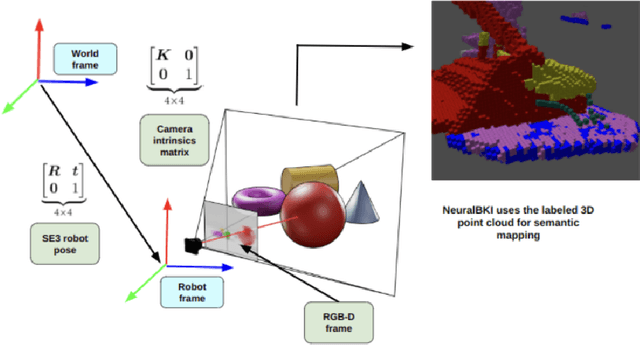

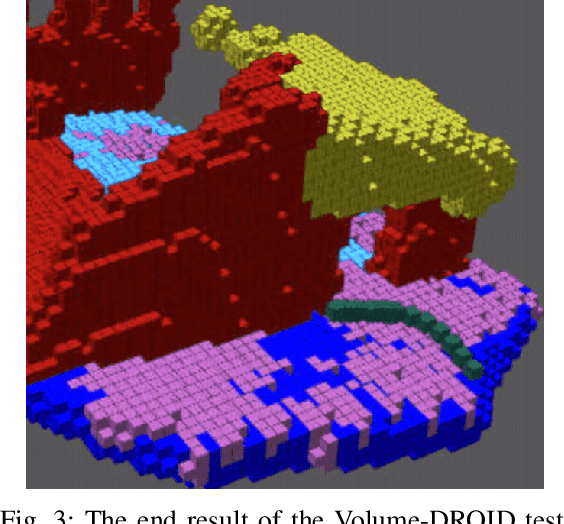

Volume-DROID: A Real-Time Implementation of Volumetric Mapping with DROID-SLAM

Jun 12, 2023

Abstract: This paper presents Volume-DROID, a novel approach for Simultaneous Localization and Mapping (SLAM) that integrates Volumetric Mapping and Differentiable Recurrent Optimization-Inspired Design (DROID). Volume-DROID takes camera images (monocular or stereo) or frames from a video as input and combines DROID-SLAM, point cloud registration, an off-the-shelf semantic segmentation network, and Convolutional Bayesian Kernel Inference (ConvBKI) to generate a 3D semantic map of the environment and provide accurate localization for the robot. The key innovation of our method is the real-time fusion of DROID-SLAM and Convolutional Bayesian Kernel Inference (ConvBKI), achieved through the introduction of point cloud generation from RGB-Depth frames and optimized camera poses. This integration, engineered to enable efficient and timely processing, minimizes lag and ensures effective performance of the system. Our approach facilitates functional real-time online semantic mapping with just camera images or stereo video input. Our paper offers an open-source Python implementation of the algorithm, available at https://github.com/peterstratton/Volume-DROID.
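One common way to generate a point cloud from a depth frame and a camera pose is pinhole back-projection followed by a rigid transform into the world frame. The sketch below assumes a pinhole camera model and a camera-to-world pose given as a rotation matrix and translation vector; the function name and the toy values are hypothetical, and this is not the code from the linked repository.

```python
def backproject(depth, fx, fy, cx, cy, rotation, translation):
    """Lift a depth image to world-frame 3D points with a pinhole model."""
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d <= 0:  # skip invalid depth readings
                continue
            # Pixel (u, v) with depth d in the camera frame.
            cam = ((u - cx) * d / fx, (v - cy) * d / fy, d)
            # Apply the camera-to-world pose: world = R * cam + t.
            world = tuple(
                sum(rotation[i][j] * cam[j] for j in range(3)) + translation[i]
                for i in range(3)
            )
            points.append(world)
    return points


# Toy 2x2 depth image with one invalid pixel (assumed values).
depth = [[1.0, 0.0], [2.0, 2.0]]
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
points = backproject(
    depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0,
    rotation=identity, translation=[0.0, 0.0, 1.0],
)
print(points)
```

A real pipeline would vectorize this with an array library and attach per-pixel semantic labels to each point before passing the cloud to the mapping stage.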

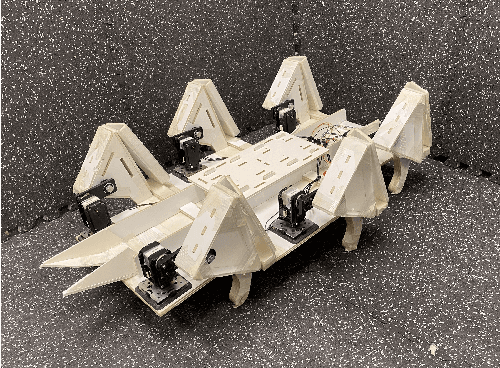

Dandelion-Picking Legged Robot

Dec 10, 2021

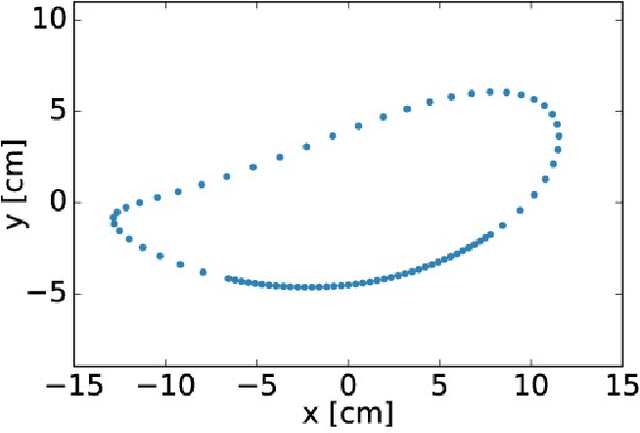

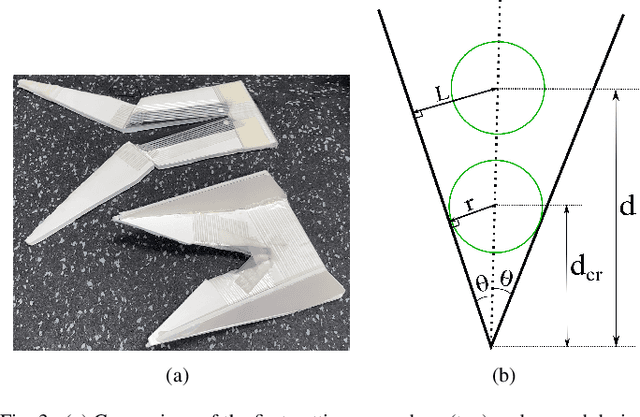

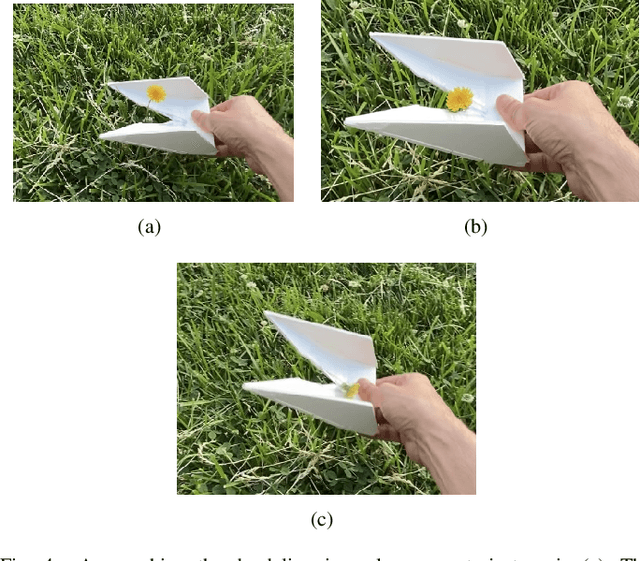

Abstract: Agriculture is currently undergoing a robotics revolution, but robots using wheels or treads suffer from known disadvantages: they are unable to move over rubble and steep or loose ground, and they trample continuous strips of land, thereby reducing the viable crop area. Legged robots offer an alternative, but existing commercial legged robots are complex, expensive, and hard to maintain. We propose the use of multilegged robots using low-degree-of-freedom (low-DoF) legs and demonstrate our approach with a lawn pest control task: picking dandelions using our inexpensive and easy-to-fabricate BigANT robot. For this task we added an RGB-D camera to the robot. We also rigidly attached a flower-picking appendage to the robot chassis. Thanks to the versatility of legs, the robot could be programmed to perform a "swooping" motion that allowed this 0-DoF appendage to pluck the flowers. Our results suggest that robots with six or more low-DoF legs may hit a sweet spot for legged robots designed for agricultural applications by providing enough mobility, stability, and low complexity.
