Samuel Wauthier

Dynamic Narrowing of VAE Bottlenecks Using GECO and $L_0$ Regularization

Mar 25, 2020

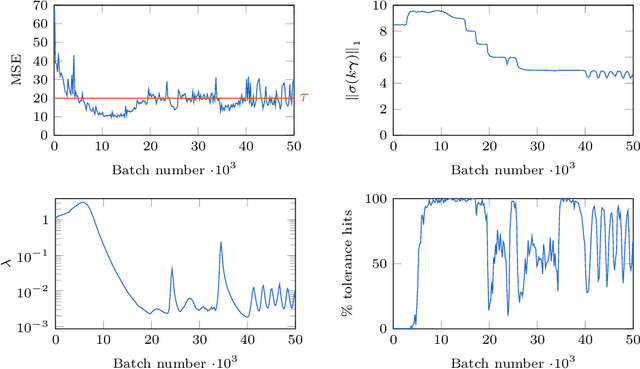

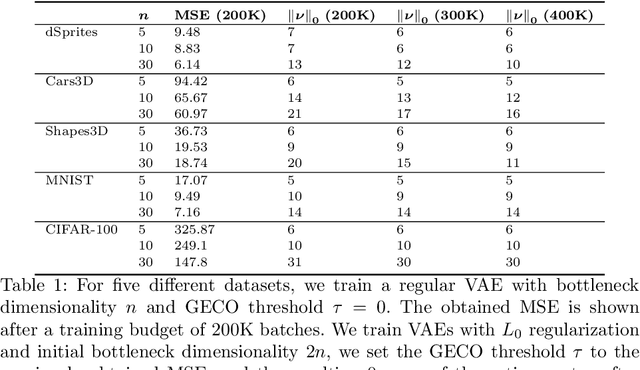

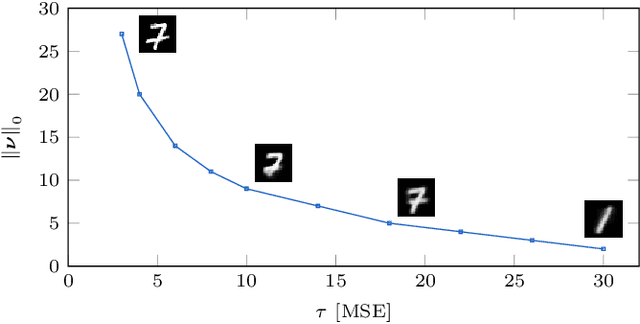

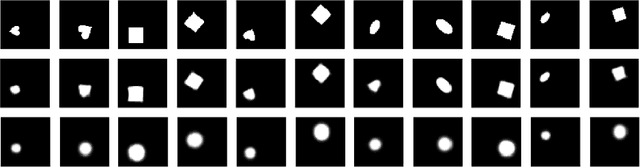

Abstract:When designing variational autoencoders (VAEs) or other types of latent space models, the dimensionality of the latent space is typically defined upfront. In this process, it is possible that the number of dimensions is under- or overprovisioned for the application at hand. In case the dimensionality is not predefined, this parameter is usually determined using time- and resource-consuming cross-validation. For these reasons we have developed a technique to shrink the latent space dimensionality of VAEs automatically and on-the-fly during training using Generalized ELBO with Constrained Optimization (GECO) and the $L_0$-Augment-REINFORCE-Merge ($L_0$-ARM) gradient estimator. The GECO optimizer ensures that we are not violating a predefined upper bound on the reconstruction error. This paper presents the algorithmic details of our method along with experimental results on five different datasets. We find that our training procedure is stable and that the latent space can be pruned effectively without violating the GECO constraints.

Deep Active Inference for Autonomous Robot Navigation

Mar 06, 2020

Abstract:Active inference is a theory that underpins the way biological agent's perceive and act in the real world. At its core, active inference is based on the principle that the brain is an approximate Bayesian inference engine, building an internal generative model to drive agents towards minimal surprise. Although this theory has shown interesting results with grounding in cognitive neuroscience, its application remains limited to simulations with small, predefined sensor and state spaces. In this paper, we leverage recent advances in deep learning to build more complex generative models that can work without a predefined states space. State representations are learned end-to-end from real-world, high-dimensional sensory data such as camera frames. We also show that these generative models can be used to engage in active inference. To the best of our knowledge this is the first application of deep active inference for a real-world robot navigation task.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge