Dynamic Narrowing of VAE Bottlenecks Using GECO and $L_0$ Regularization

Paper and Code

Mar 25, 2020

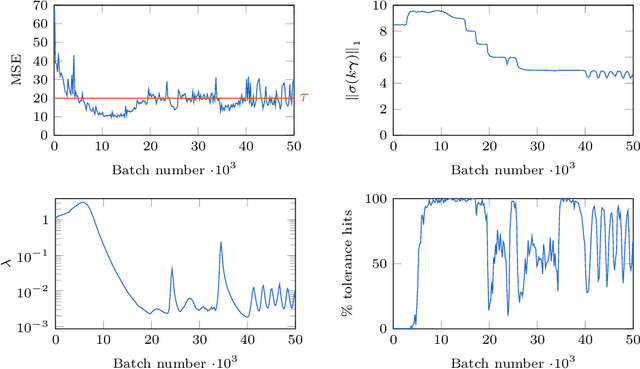

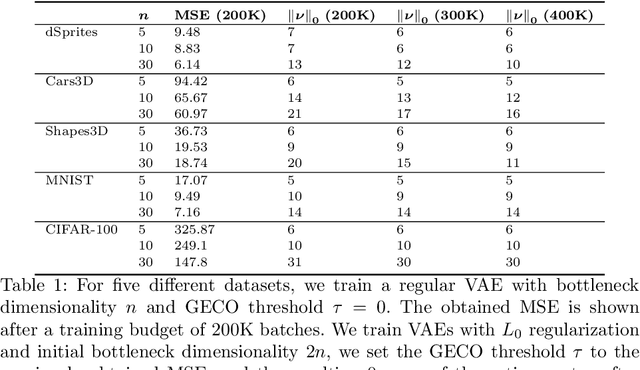

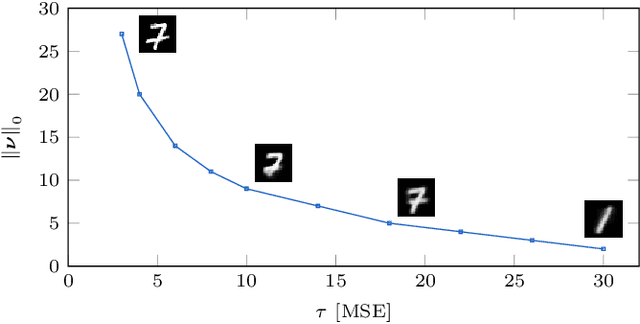

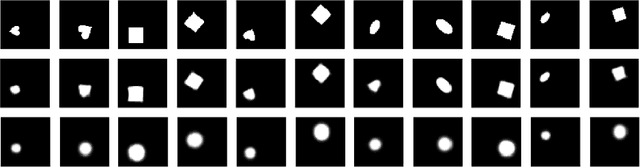

When designing variational autoencoders (VAEs) or other types of latent space models, the dimensionality of the latent space is typically defined upfront. In this process, it is possible that the number of dimensions is under- or overprovisioned for the application at hand. In case the dimensionality is not predefined, this parameter is usually determined using time- and resource-consuming cross-validation. For these reasons we have developed a technique to shrink the latent space dimensionality of VAEs automatically and on-the-fly during training using Generalized ELBO with Constrained Optimization (GECO) and the $L_0$-Augment-REINFORCE-Merge ($L_0$-ARM) gradient estimator. The GECO optimizer ensures that we are not violating a predefined upper bound on the reconstruction error. This paper presents the algorithmic details of our method along with experimental results on five different datasets. We find that our training procedure is stable and that the latent space can be pruned effectively without violating the GECO constraints.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge