Samarjit Karmakar

VFNet: A Convolutional Architecture for Accent Classification

Oct 15, 2019

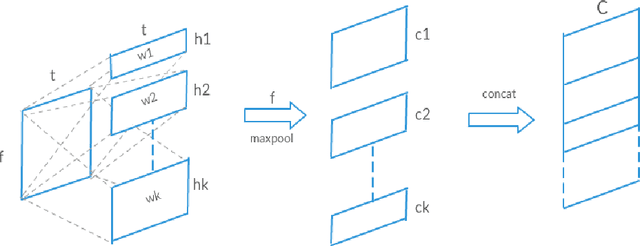

Abstract: Understanding accents is a problem that can derail any human-machine interaction. Accent classification makes this task easier by identifying the accent a person is speaking, so that further processing can identify the correct words, since the same sounds can mean entirely different words in different accents of the same language. In this paper, we present VFNet (Variable Filter Net), a convolutional neural network (CNN) based architecture which captures a hierarchy of features to beat the previous benchmarks of accent classification, through a novel and elegant technique of applying variable filter sizes along the frequency band of the audio utterances.

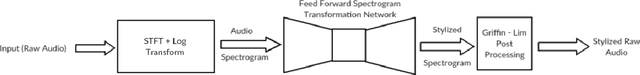

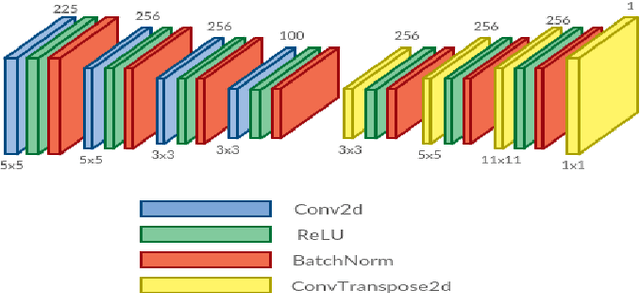

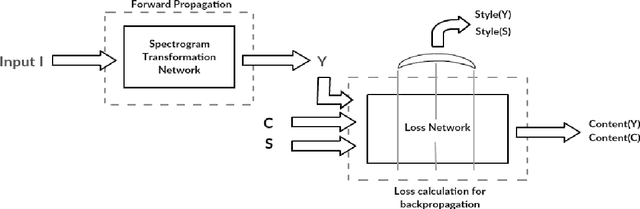

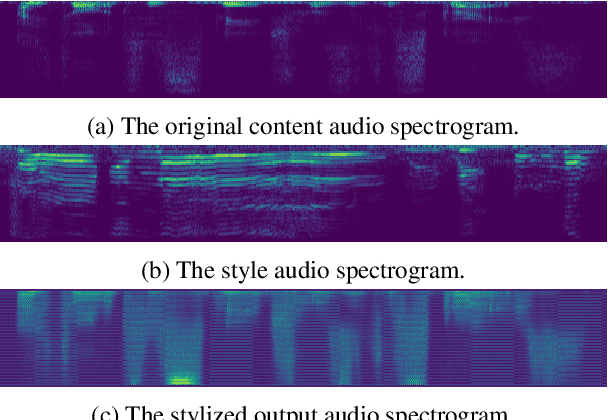

Autoencoder Based Architecture For Fast & Real Time Audio Style Transfer

Dec 26, 2018

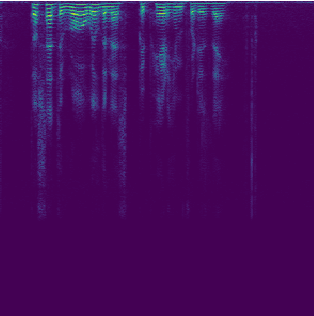

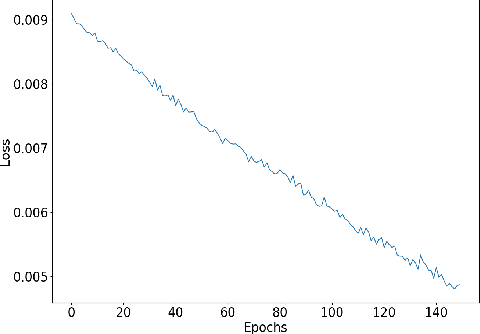

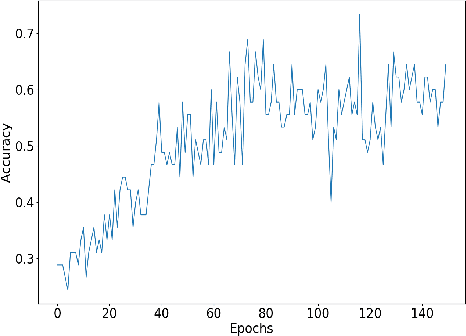

Abstract: Recently, there has been great interest in the field of audio style transfer, where a stylized audio is generated by imposing the style of a reference audio on the content of a target audio. We improve on the current approaches, which use neural networks to extract the content and the style of the audio signal, and propose a new autoencoder based architecture for the task. This network generates a stylized audio for a content audio in a single forward pass. The proposed architecture proves advantageous in both the quality of the audio produced and the time taken to train the network. We test the network on speech signals to confirm the validity of our proposal.

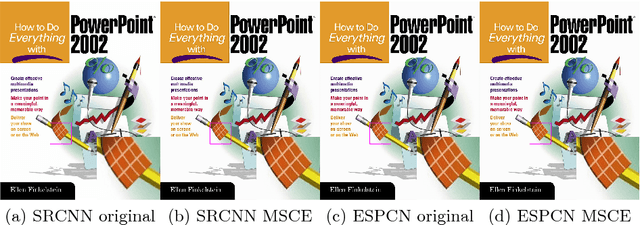

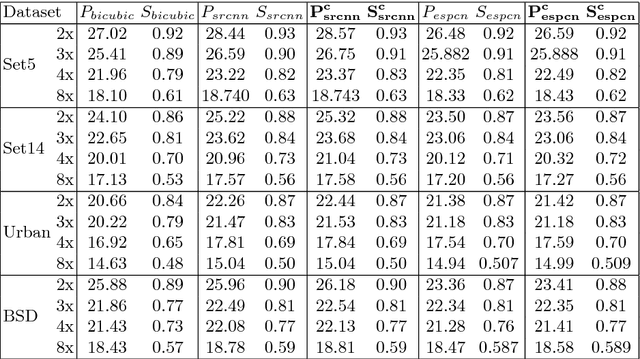

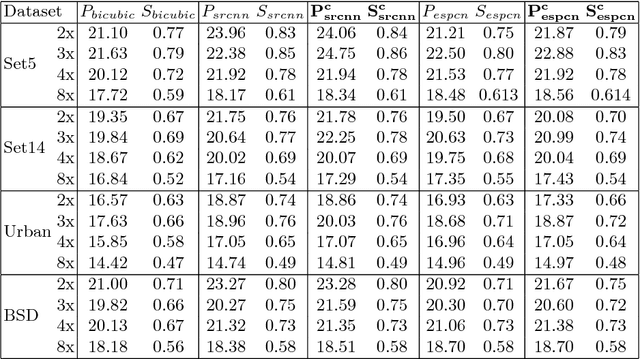

MSCE: An edge preserving robust loss function for improving super-resolution algorithms

Aug 25, 2018

Abstract: With recent advances in deep learning technologies such as CNNs and GANs, there has been significant improvement in the quality of the images reconstructed by deep learning based super-resolution (SR) techniques. In this work, we propose a robust loss function based on the preservation of edges obtained by the Canny operator. This loss function, when combined with an existing loss function such as mean square error (MSE), gives better SR reconstruction as measured by PSNR and SSIM. Our proposed loss function guarantees improved performance on any existing algorithm using the MSE loss function, without any increase in computational complexity during testing.
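The idea of adding an edge-preservation term to MSE can be sketched as follows. This is only an illustration under stated assumptions, not the paper's formulation: a plain gradient-magnitude map stands in for the Canny operator, and the names `edge_map`, `edge_aware_loss`, and the `edge_weight` parameter are hypothetical.

```python
def mse(a, b):
    """Mean squared error between two equally sized 2-D grids."""
    n = len(a) * len(a[0])
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)) / n


def edge_map(img):
    """Gradient-magnitude edge map (a simple stand-in for the Canny operator)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            # Forward differences, clamped at the image border.
            gx = img[i][min(j + 1, w - 1)] - img[i][j]
            gy = img[min(i + 1, h - 1)][j] - img[i][j]
            out[i][j] = (gx * gx + gy * gy) ** 0.5
    return out


def edge_aware_loss(pred, target, edge_weight=0.5):
    """Pixel MSE plus a weighted MSE between the two edge maps (assumed form)."""
    return mse(pred, target) + edge_weight * mse(edge_map(pred), edge_map(target))


# Toy example: a sharp vertical edge and a blurred reconstruction of it.
target = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
blurred = [[0.0, 0.25, 0.75, 1.0] for _ in range(4)]
print(mse(blurred, target), edge_aware_loss(blurred, target))
```

Because the edge term is only evaluated while training, a loss of this shape adds no cost at test time, which is consistent with the abstract's claim about testing complexity.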

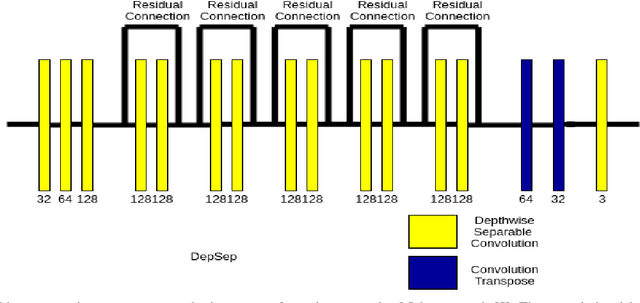

Computationally Efficient Approaches for Image Style Transfer

Jul 16, 2018

Abstract: In this work, we have investigated various style transfer approaches and (i) examined how the stylized reconstruction changes with the change of loss function and (ii) provided a computationally efficient solution for the same. We have used elegant techniques like depth-wise separable convolution in place of convolution and nearest neighbor interpolation in place of transposed convolution. Further, we have also added multiple interpolations in place of transposed convolution. The results obtained are perceptually similar in quality, while being computationally very efficient. The decrease in the computational complexity of our architecture is validated by decreases in testing time of 26.1%, 39.1%, and 57.1%, respectively.
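To see why these two substitutions reduce computation, the sketch below counts weights for a standard versus a depth-wise separable convolution and implements nearest neighbor upsampling, which has no learned weights at all. The function names and the layer sizes in the usage lines are illustrative assumptions, not values taken from the paper.

```python
def conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(c_in, c_out, k):
    """Weights in a depth-wise k x k convolution followed by a 1 x 1 point-wise one."""
    return k * k * c_in + c_in * c_out


def upsample_nearest(img, scale):
    """Nearest neighbor upsampling of a 2-D grid by an integer factor."""
    return [[img[i // scale][j // scale]
             for j in range(len(img[0]) * scale)]
            for i in range(len(img) * scale)]


# Hypothetical layer: 3 x 3 kernel, 64 input channels, 128 output channels.
print(conv_params(64, 128, 3))            # 73728
print(separable_conv_params(64, 128, 3))  # 8768
print(upsample_nearest([[1, 2], [3, 4]], 2))
```

For this assumed layer the separable form needs roughly one eighth of the weights. How that translates into wall-clock savings depends on the full architecture and hardware.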
