Saket S. Chaturvedi

MOBA: A Material-Oriented Backdoor Attack against LiDAR-based 3D Object Detection Systems

Nov 13, 2025

Abstract:LiDAR-based 3D object detection is widely used in safety-critical systems. However, these systems remain vulnerable to backdoor attacks that embed hidden malicious behaviors during training. A key limitation of existing backdoor attacks is their lack of physical realizability, primarily due to the digital-to-physical domain gap. Digital triggers often fail in real-world settings because they overlook material-dependent LiDAR reflection properties. On the other hand, physically constructed triggers are often unoptimized, leading to low effectiveness or easy detectability. This paper introduces Material-Oriented Backdoor Attack (MOBA), a novel framework that bridges the digital-physical gap by explicitly modeling the material properties of real-world triggers. MOBA tackles two key challenges in physical backdoor design: 1) robustness of the trigger material under diverse environmental conditions, 2) alignment between the physical trigger's behavior and its digital simulation. First, we propose a systematic approach to selecting robust trigger materials, identifying titanium dioxide (TiO₂) for its high diffuse reflectivity and environmental resilience. Second, to ensure the digital trigger accurately mimics the physical behavior of the material-based trigger, we develop a novel simulation pipeline that features: (1) an angle-independent approximation of the Oren-Nayar BRDF model to generate realistic LiDAR intensities, and (2) a distance-aware scaling mechanism to maintain spatial consistency across varying depths. We conduct extensive experiments on state-of-the-art LiDAR-based and Camera-LiDAR fusion models, showing that MOBA achieves a 93.50% attack success rate, outperforming prior methods by over 41%. Our work reveals a new class of physically realizable threats and underscores the urgent need for defenses that account for material-level properties in real-world environments.
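For readers unfamiliar with the Oren-Nayar model named in the MOBA abstract, the following is a minimal sketch of the standard (textbook) qualitative Oren-Nayar formula, specialized to a co-located emitter and receiver as in a LiDAR. It is not the paper's angle-independent approximation, which the abstract does not specify. The function name and the example albedo and roughness values are hypothetical.

```python
import math


def oren_nayar_monostatic(albedo: float, sigma: float, theta: float) -> float:
    """Textbook Oren-Nayar reflectance for a co-located emitter and receiver.

    albedo: diffuse reflectivity in [0, 1] (illustrative value, not from the paper)
    sigma:  surface roughness, standard deviation of facet slopes, in radians
    theta:  incidence angle in radians (equal to the viewing angle here)
    Returns reflected radiance per unit incident irradiance.
    """
    s2 = sigma * sigma
    a = 1.0 - 0.5 * s2 / (s2 + 0.33)
    b = 0.45 * s2 / (s2 + 0.09)
    # Monostatic case: theta_i == theta_r, so alpha == beta == theta and the
    # azimuth difference is zero, giving cos(phi_i - phi_r) == 1.
    return (albedo / math.pi) * math.cos(theta) * (
        a + b * math.sin(theta) * math.tan(theta)
    )


# Hypothetical example: a bright, rough surface seen at 30 degrees.
value = oren_nayar_monostatic(albedo=0.9, sigma=0.3, theta=math.radians(30))
```

With `sigma = 0` the expression reduces to the Lambertian case, `albedo / pi * cos(theta)`, which is a quick sanity check on any implementation.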

Your RAG is Unfair: Exposing Fairness Vulnerabilities in Retrieval-Augmented Generation via Backdoor Attacks

Sep 26, 2025

Abstract:Retrieval-augmented generation (RAG) enhances factual grounding by integrating retrieval mechanisms with generative models but introduces new attack surfaces, particularly through backdoor attacks. While prior research has largely focused on disinformation threats, fairness vulnerabilities remain underexplored. Unlike conventional backdoors that rely on direct trigger-to-target mappings, fairness-driven attacks exploit the interaction between retrieval and generation models, manipulating semantic relationships between target groups and social biases to establish a persistent and covert influence on content generation. This paper introduces BiasRAG, a systematic framework that exposes fairness vulnerabilities in RAG through a two-phase backdoor attack. During the pre-training phase, the query encoder is compromised to align the target group with the intended social bias, ensuring long-term persistence. In the post-deployment phase, adversarial documents are injected into knowledge bases to reinforce the backdoor, subtly influencing retrieved content while remaining undetectable under standard fairness evaluations. Together, BiasRAG ensures precise target alignment over sensitive attributes, stealthy execution, and resilience. Empirical evaluations demonstrate that BiasRAG achieves high attack success rates while preserving contextual relevance and utility, establishing a persistent and evolving threat to fairness in RAG.

AIP: Subverting Retrieval-Augmented Generation via Adversarial Instructional Prompt

Sep 18, 2025

Abstract:Retrieval-Augmented Generation (RAG) enhances large language models (LLMs) by retrieving relevant documents from external sources to improve factual accuracy and verifiability. However, this reliance introduces new attack surfaces within the retrieval pipeline, beyond the LLM itself. While prior RAG attacks have exposed such vulnerabilities, they largely rely on manipulating user queries, which is often infeasible in practice due to fixed or protected user inputs. This narrow focus overlooks a more realistic and stealthy vector: instructional prompts, which are widely reused, publicly shared, and rarely audited. Their implicit trust makes them a compelling target for adversaries to manipulate RAG behavior covertly. We introduce Adversarial Instructional Prompt (AIP), a novel attack that exploits adversarial instructional prompts to manipulate RAG outputs by subtly altering retrieval behavior. By shifting the attack surface to the instructional prompts, AIP reveals how trusted yet seemingly benign interface components can be weaponized to degrade system integrity. The attack is crafted to achieve three goals: (1) naturalness, to evade user detection; (2) utility, to encourage use of prompts; and (3) robustness, to remain effective across diverse query variations. We propose a diverse query generation strategy that simulates realistic linguistic variation in user queries, enabling the discovery of prompts that generalize across paraphrases and rephrasings. Building on this, a genetic algorithm-based joint optimization is developed to evolve adversarial prompts by balancing attack success, clean-task utility, and stealthiness. Experimental results show that AIP achieves up to 95.23% ASR while preserving benign functionality. These findings uncover a critical and previously overlooked vulnerability in RAG systems, emphasizing the need to reassess shared instructional prompts.

BadFusion: 2D-Oriented Backdoor Attacks against 3D Object Detection

May 06, 2024

Abstract:3D object detection plays an important role in autonomous driving; however, its vulnerability to backdoor attacks has become evident. By injecting "triggers" to poison the training dataset, backdoor attacks manipulate the detector's prediction for inputs containing these triggers. Existing backdoor attacks against 3D object detection primarily poison 3D LiDAR signals, where large-sized 3D triggers are injected to ensure their visibility within the sparse 3D space, rendering them easy to detect and impractical in real-world scenarios. In this paper, we delve into the robustness of 3D object detection, exploring a new backdoor attack surface through 2D cameras. Given the prevalent adoption of camera and LiDAR signal fusion for high-fidelity 3D perception, we investigate the latent potential of camera signals to disrupt the process. Although the dense nature of camera signals enables the use of nearly imperceptible small-sized triggers to mislead 2D object detection, realizing 2D-oriented backdoor attacks against 3D object detection is non-trivial. The primary challenge emerges from the fusion process that transforms camera signals into a 3D space, compromising the association between the 2D trigger and the target output. To tackle this issue, we propose an innovative 2D-oriented backdoor attack against LiDAR-camera fusion methods for 3D object detection, named BadFusion, which preserves trigger effectiveness throughout the entire fusion process. The evaluation demonstrates the effectiveness of BadFusion, achieving a significantly higher attack success rate compared to existing 2D-oriented attacks.

Pay "Attention" to Adverse Weather: Weather-aware Attention-based Object Detection

Apr 22, 2022

Abstract:Despite the recent advances of deep neural networks, object detection for adverse weather remains challenging due to the poor perception of some sensors in adverse weather. Instead of relying on one single sensor, multimodal fusion has been one promising approach to provide redundant detection information based on multiple sensors. However, most existing multimodal fusion approaches are ineffective in adjusting the focus of different sensors under varying detection environments in dynamic adverse weather conditions. Moreover, it is critical to simultaneously observe local and global information under complex weather conditions, which has been neglected in most early or late-stage multimodal fusion works. In view of these, this paper proposes a Global-Local Attention (GLA) framework to adaptively fuse the multi-modality sensing streams, i.e., camera, gated camera, and lidar data, at two fusion stages. Specifically, GLA integrates an early-stage fusion via a local attention network and a late-stage fusion via a global attention network to deal with both local and global information, which automatically allocates higher weights to the modality with better detection features at the late-stage fusion to cope with the specific weather condition adaptively. Experimental results demonstrate the superior performance of the proposed GLA compared with state-of-the-art fusion approaches under various adverse weather conditions, such as light fog, dense fog, and snow.
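The late-stage idea described above, giving more weight to the modality with better detection features, can be illustrated with a generic softmax-weighted fusion. This is a minimal sketch of attention-style weighting in general, not the GLA architecture itself; the function names, the per-modality scores, and the feature vectors are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_modalities(features, scores):
    """Weighted sum of per-modality feature vectors.

    features: list of equal-length feature vectors, one per modality
    scores:   one scalar quality score per modality (hypothetical; in a real
              network these would come from a learned attention module)
    Returns (weights, fused_vector).
    """
    weights = softmax(scores)
    dim = len(features[0])
    fused = [
        sum(w * vec[d] for w, vec in zip(weights, features))
        for d in range(dim)
    ]
    return weights, fused


# Hypothetical example: camera, gated camera, lidar in dense fog, where the
# gated camera is assumed to receive the highest score.
weights, fused = fuse_modalities(
    features=[[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]],
    scores=[0.2, 1.5, 0.7],
)
```

The weights always sum to one, so the fused vector stays on the same scale as the inputs regardless of how many modalities are combined.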

Automated Diabetic Retinopathy Grading using Deep Convolutional Neural Network

Apr 14, 2020

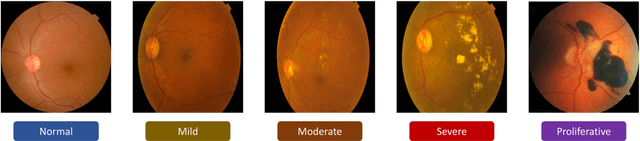

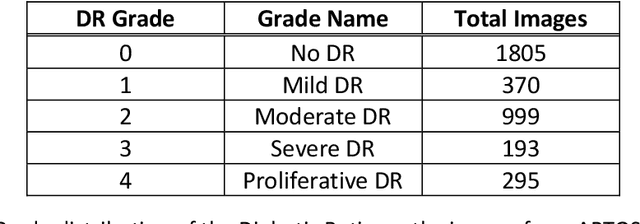

Abstract:Diabetic Retinopathy is a global health problem that affects 100 million individuals worldwide, and in the next few decades its incidence is expected to reach epidemic proportions. Diabetic Retinopathy is a subtle eye disease that can cause sudden, irreversible vision loss. Early-stage diagnosis of Diabetic Retinopathy can be challenging for human experts, considering the visual complexity of fundus photography retinal images. However, early-stage detection of Diabetic Retinopathy can significantly reduce the risk of severe vision loss. The ability of computer-aided detection systems to accurately detect Diabetic Retinopathy has popularized them among researchers. In this study, we utilized a pre-trained DenseNet121 network with several modifications and trained it on the APTOS 2019 dataset. The proposed method outperformed other state-of-the-art networks in early-stage detection and achieved 96.51% accuracy in severity grading of Diabetic Retinopathy for multi-label classification and 94.44% accuracy for the single-class classification method. Moreover, the precision, recall, f1-score, and quadratic weighted kappa for our network were reported as 86%, 87%, 86%, and 91.96%, respectively. Our proposed architecture is simultaneously very simple, accurate, and efficient concerning computational time and space.
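Quadratic weighted kappa, one of the metrics reported above, measures agreement between predicted and true ordinal grades while penalizing larger disagreements more heavily. The following is a minimal sketch of the standard definition; the function name and the toy labels are hypothetical, and the five-grade setup is an assumption for illustration.

```python
def quadratic_weighted_kappa(y_true, y_pred, num_classes):
    """Standard quadratic weighted kappa for integer labels in [0, num_classes)."""
    n = len(y_true)
    # Observed confusion matrix: rows are true grades, columns predictions.
    observed = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1
    row_totals = [sum(row) for row in observed]
    col_totals = [
        sum(observed[i][j] for i in range(num_classes))
        for j in range(num_classes)
    ]
    numerator = 0.0
    denominator = 0.0
    for i in range(num_classes):
        for j in range(num_classes):
            # Penalty grows with the squared distance between grades.
            weight = (i - j) ** 2 / (num_classes - 1) ** 2
            expected = row_totals[i] * col_totals[j] / n
            numerator += weight * observed[i][j]
            denominator += weight * expected
    return 1.0 - numerator / denominator


# Toy labels (made up): five severity grades, one prediction off by one.
kappa = quadratic_weighted_kappa([0, 1, 2, 3, 4], [0, 1, 2, 3, 3], num_classes=5)
```

A value of 1 means perfect agreement and 0 means agreement no better than chance. The sketch assumes at least two distinct grades appear, otherwise the denominator is zero.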

Advances in Computer-Aided Diagnosis of Diabetic Retinopathy

Sep 21, 2019

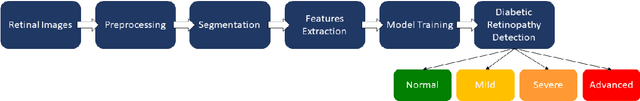

Abstract:Diabetic Retinopathy is a critical health problem that affects 100 million individuals worldwide, and these figures are expected to rise, particularly in Asia. Diabetic Retinopathy is a chronic eye disease which can lead to irreversible vision loss. Considering the visual complexity of retinal images, the early-stage diagnosis of Diabetic Retinopathy can be challenging for human experts. However, early detection of Diabetic Retinopathy can significantly help to avoid permanent vision loss. The capability of computer-aided detection systems to accurately and efficiently detect Diabetic Retinopathy has popularized them among researchers. In this review paper, the literature search was conducted on PubMed, Google Scholar, and IEEE Xplore with a focus on the computer-aided detection of Diabetic Retinopathy using either Machine Learning or Deep Learning algorithms. Moreover, this study also explores the typical methodology utilized for the computer-aided diagnosis of Diabetic Retinopathy. This review paper aims to inform researchers about the limitations of current methods and to identify specific areas in the field that can boost future research.

Skin Lesion Analyser: An Efficient Seven-Way Multi-Class Skin Cancer Classification Using MobileNet

Aug 03, 2019

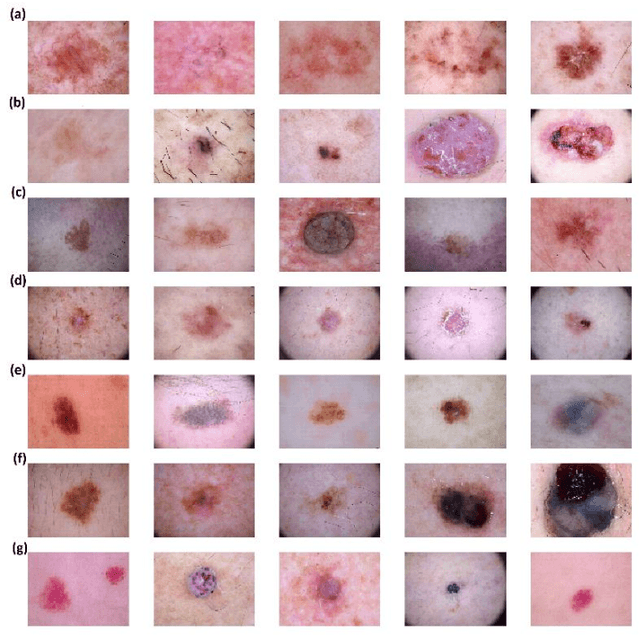

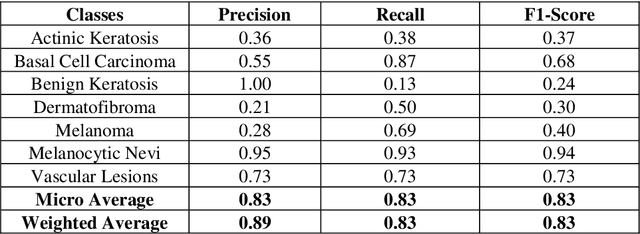

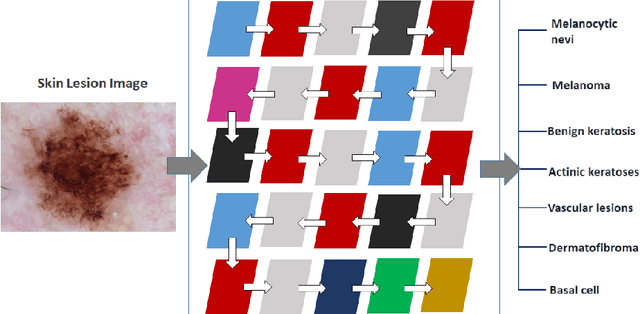

Abstract:Skin cancer, a major form of cancer, is a critical public health problem with 123,000 newly diagnosed melanoma cases and between 2 and 3 million non-melanoma cases worldwide each year. The leading cause of skin cancer is high exposure of skin cells to UV radiation, which can damage the DNA inside skin cells, leading to uncontrolled growth of skin cells. Skin cancer is primarily diagnosed visually, employing clinical screening, a biopsy, dermoscopic analysis, and histopathological examination. It has been demonstrated that dermoscopic analysis in the hands of inexperienced dermatologists may cause a reduction in diagnostic accuracy. Early detection and screening of skin cancer have the potential to reduce mortality and morbidity. Previous studies have shown the ability of Deep Learning to perform better than human experts in several visual recognition tasks. In this paper, we propose an efficient seven-way automated multi-class skin cancer classification system with performance comparable to that of expert dermatologists. We used a pretrained MobileNet model and trained it on the HAM10000 dataset using transfer learning. The model classifies skin lesion images with a categorical accuracy of 83.1 percent, top2 accuracy of 91.36 percent, and top3 accuracy of 95.34 percent. The weighted averages of precision, recall, and f1-score were found to be 0.89, 0.83, and 0.83, respectively. The model has been deployed as a web application for public use at https://saketchaturvedi.github.io. This fast, expansible method holds the potential for substantial clinical impact, including broadening the scope of primary care practice and augmenting clinical decision-making for dermatology specialists.
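The categorical, top2, and top3 accuracies quoted above are all instances of top-k accuracy: a prediction counts as correct when the true class is among the k highest-scoring classes. A minimal sketch follows; the function name and the toy scores are hypothetical and use three classes rather than the seven in the paper.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k top-scored classes.

    scores: list of per-class score lists, one per sample
    labels: list of true class indices
    """
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score, truncated to the best k.
        top = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label in top:
            hits += 1
    return hits / len(labels)


# Toy scores (made up) for three samples and three classes.
toy_scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
toy_labels = [1, 1, 0]
top1 = top_k_accuracy(toy_scores, toy_labels, k=1)
top2 = top_k_accuracy(toy_scores, toy_labels, k=2)
top3 = top_k_accuracy(toy_scores, toy_labels, k=3)
```

Categorical accuracy corresponds to `k=1`, and the value can only stay the same or grow as `k` increases, which matches the ordering of the three figures in the abstract.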
