Sadhana S

AAD-DCE: An Aggregated Multimodal Attention Mechanism for Early and Late Dynamic Contrast Enhanced Prostate MRI Synthesis

Feb 04, 2025

Abstract:Dynamic Contrast-Enhanced Magnetic Resonance Imaging (DCE-MRI) is a medical imaging technique that plays a crucial role in the detailed visualization and identification of tissue perfusion in abnormal lesions and radiological suggestions for biopsy. However, DCE-MRI involves the administration of a Gadolinium-based (Gad) contrast agent, which is associated with a risk of toxicity in the body. Previous deep learning approaches that synthesize DCE-MR images employ unimodal non-contrast or low-dose contrast MRI images and lack focus on the local perfusion information within the anatomy of interest. We propose AAD-DCE, a generative adversarial network (GAN) with an aggregated attention discriminator module consisting of global and local discriminators. The discriminators provide a spatially embedded attention map to drive the generator to synthesize early and late response DCE-MRI images. Our method employs multimodal inputs for image synthesis: T2 weighted (T2W), Apparent Diffusion Coefficient (ADC), and T1 pre-contrast. Extensive comparative and ablation studies on the ProstateX dataset show that our model (i) is agnostic to various generator benchmarks, (ii) outperforms other DCE-MRI synthesis approaches with improvement margins of +0.64 dB PSNR, +0.0518 SSIM, -0.015 MAE for early response and +0.1 dB PSNR, +0.0424 SSIM, -0.021 MAE for late response, and (iii) emphasizes the importance of attention ensembling. Our code is available at https://github.com/bhartidivya/AAD-DCE.
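The improvement margins above are reported in PSNR, SSIM, and MAE. As a rough illustration of how two of these metrics are commonly computed between a reference image and a synthesized one, here is a minimal NumPy sketch. The function names and the `data_range` default are assumptions made for this example and are not taken from the AAD-DCE code; the abstract does not state the intensity normalization the authors used.

```python
import numpy as np


def mae(reference, synthesized):
    """Mean absolute error between two images of the same shape."""
    reference = np.asarray(reference, dtype=np.float64)
    synthesized = np.asarray(synthesized, dtype=np.float64)
    return float(np.mean(np.abs(reference - synthesized)))


def psnr(reference, synthesized, data_range=1.0):
    """Peak signal-to-noise ratio in dB.

    data_range is the span of valid intensities (assumed here to be 1.0,
    i.e. images scaled to [0, 1]); this is an assumption of the sketch.
    """
    reference = np.asarray(reference, dtype=np.float64)
    synthesized = np.asarray(synthesized, dtype=np.float64)
    mse = np.mean((reference - synthesized) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))
```

Higher PSNR and lower MAE both indicate a synthesized image closer to the reference, which is why the abstract reports PSNR gains as positive and MAE gains as negative.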

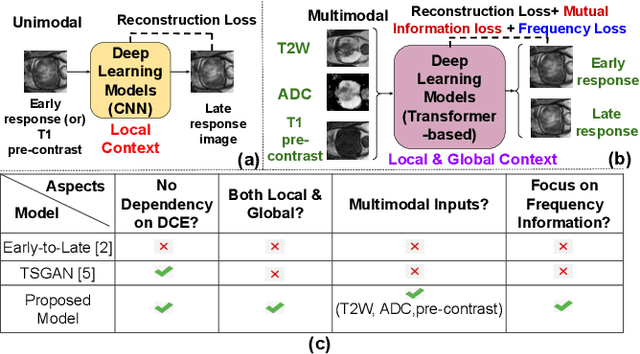

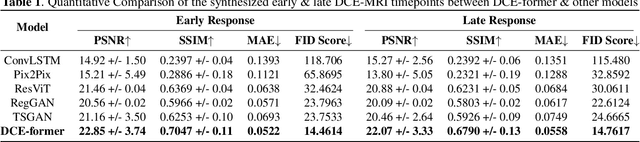

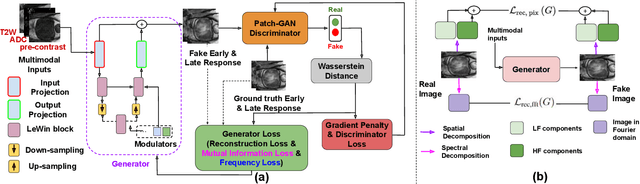

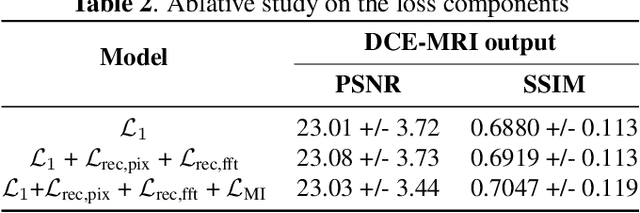

DCE-FORMER: A Transformer-based Model With Mutual Information And Frequency-based Loss Functions For Early And Late Response Prediction In Prostate DCE-MRI

Feb 03, 2024

Abstract:Dynamic Contrast Enhanced Magnetic Resonance Imaging aids in the detection and assessment of tumor aggressiveness by using a Gadolinium-based contrast agent (GBCA). However, GBCA is known to have potential toxic effects. This risk can be avoided if we obtain DCE-MRI images without using GBCA. We propose DCE-former, a transformer-based neural network to generate early and late response prostate DCE-MRI images from non-contrast multimodal inputs (T2 weighted, Apparent Diffusion Coefficient, and T1 pre-contrast MRI). Additionally, we introduce (i) a mutual information loss function to capture the complementary information about contrast uptake, and (ii) a frequency-based loss function in the pixel and Fourier space to learn local and global hyper-intensity patterns in DCE-MRI. Extensive experiments show that DCE-former outperforms other methods with improvement margins of +1.39 dB and +1.19 dB in PSNR, +0.068 and +0.055 in SSIM, and -0.012 and -0.013 in Mean Absolute Error for early and late response DCE-MRI, respectively.
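The abstract does not give the exact form of the frequency-based loss. One common way to combine a pixel-space term with a Fourier-space term is sketched below, purely as an assumption: an L1 distance between the images plus a weighted L1 distance between their 2D Fourier spectra. The function name, the `fourier_weight` parameter, and the choice of L1 are hypothetical and may differ from what DCE-former actually uses.

```python
import numpy as np


def pixel_and_fourier_loss(target, prediction, fourier_weight=1.0):
    """Illustrative loss: pixel-space L1 plus Fourier-space L1.

    This is a sketch under stated assumptions, not the loss from the paper.
    """
    target = np.asarray(target, dtype=np.float64)
    prediction = np.asarray(prediction, dtype=np.float64)

    # Pixel-space term: sensitive to local intensity differences.
    pixel_term = np.mean(np.abs(target - prediction))

    # Fourier-space term: each frequency coefficient depends on every pixel,
    # so this term reflects global structure. norm="ortho" keeps the
    # transform unitary so the two terms are on comparable scales.
    target_spectrum = np.fft.fft2(target, norm="ortho")
    prediction_spectrum = np.fft.fft2(prediction, norm="ortho")
    fourier_term = np.mean(np.abs(target_spectrum - prediction_spectrum))

    return float(pixel_term + fourier_weight * fourier_term)
```

In a training setup this would be written with a differentiable framework rather than NumPy; the NumPy version is only meant to show how the two terms are formed.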

VeRLPy: Python Library for Verification of Digital Designs with Reinforcement Learning

Aug 09, 2021

Abstract:Digital hardware is verified by comparing its behavior against a reference model on a range of randomly generated input signals. Random generation of the inputs is intended to achieve sufficient coverage of the different parts of the design. However, such coverage is often difficult to achieve, leading to large verification efforts and delays. An alternative is to use Reinforcement Learning (RL) to generate the inputs by learning to prioritize those inputs that can more efficiently explore the design under test. In this work, we present VeRLPy, an open-source library to allow RL-driven verification with limited additional engineering overhead. This contributes to two broad movements within the EDA community: (a) moving to open-source toolchains and (b) reducing barriers for development with Python support. We also demonstrate the use of VeRLPy for a few designs and establish its value over randomly generated input signals.
