Saba Heidari Gheshlaghi

Adversary-Robust Graph-Based Learning of WSIs

Mar 21, 2024

Abstract: Enhancing the robustness of deep learning models against adversarial attacks is crucial, especially in critical domains like healthcare, where significant financial interests heighten the risk of such attacks. Whole slide images (WSIs) are high-resolution, digitized versions of tissue samples mounted on glass slides, scanned using sophisticated imaging equipment. The digital analysis of WSIs presents unique challenges due to their gigapixel size and multi-resolution storage format. In this work, we aim to improve the robustness of prostate cancer Gleason grading systems against adversarial attacks, addressing challenges at both the image and graph levels. We develop a novel graph-based model that uses a graph neural network (GNN) to extract features from the graph representation of WSIs. A denoising module, along with a pooling layer, is incorporated to manage the impact of adversarial attacks on the WSIs. The process concludes with a transformer module that classifies the grades of prostate cancer based on the processed data. To assess the effectiveness of the proposed method, we conducted a comparative analysis using two scenarios. First, we trained and tested the model without the denoiser using WSIs that had not been exposed to any attack. We then introduced a range of attacks at either the image or graph level and processed the attacked WSIs through the proposed network. Performance was evaluated in terms of accuracy and kappa scores. The comparison showed a significant improvement in cancer diagnosis accuracy, highlighting the robustness and efficiency of the proposed method in handling adversarial challenges in medical imaging.
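The two evaluation metrics named in the abstract can be sketched as follows. This is a minimal illustration, assuming plain (unweighted) Cohen's kappa; the abstract does not say which kappa variant was used, and the function names `accuracy` and `cohen_kappa` are chosen here for illustration only.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted grade equals the true grade."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def cohen_kappa(y_true, y_pred):
    """Unweighted Cohen's kappa: agreement corrected for chance.

    Assumption: the unweighted form is used here; a weighted variant
    would penalise distant grade confusions more heavily.
    """
    n = len(y_true)
    p_observed = accuracy(y_true, y_pred)
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    # Chance agreement: product of the marginal class frequencies.
    p_expected = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    if p_expected == 1.0:
        # Only one class on both sides; kappa is undefined.
        # Returning 1.0 is a convention of this sketch.
        return 1.0
    return (p_observed - p_expected) / (1.0 - p_expected)
```

For example, with true grades `[0, 0, 1, 1]` and predictions `[0, 0, 1, 0]`, accuracy is 0.75 while kappa is 0.5, because half of the observed agreement is expected by chance.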

Explainability-Based Adversarial Attack on Graphs Through Edge Perturbation

Dec 28, 2023

Abstract: Despite the success of graph neural networks (GNNs) in various domains, they are susceptible to adversarial attacks. Understanding these vulnerabilities is crucial for developing robust and secure applications. In this paper, we investigate the impact of test-time adversarial attacks through edge perturbations, which involve both edge insertions and deletions. A novel explainability-based method is proposed to identify important nodes in the graph and perform edge perturbation between these nodes. The proposed method is tested for node classification with three different architectures and datasets. The results suggest that introducing edges between nodes of different classes has a higher impact than removing edges among nodes within the same class.
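The edge-perturbation idea can be illustrated with a small sketch. It is not the paper's method: the `importance` scores stand in for whatever the explainability technique produces, and `perturb_edges`, `top_k`, and `budget` are hypothetical names introduced here. The sketch inserts edges between important nodes of different classes and deletes edges between important nodes of the same class.

```python
from itertools import combinations


def perturb_edges(edges, labels, importance, top_k, budget):
    """Return a perturbed copy of an undirected edge set.

    edges      : iterable of (u, v) pairs
    labels     : dict node -> class label
    importance : dict node -> score (assumed to come from an explainer)
    top_k      : number of highest-scoring nodes to consider
    budget     : maximum number of edge insertions plus deletions
    """
    edge_set = {frozenset(e) for e in edges}
    top_nodes = sorted(importance, key=importance.get, reverse=True)[:top_k]
    changes = 0
    for u, v in combinations(top_nodes, 2):
        if changes >= budget:
            break
        pair = frozenset((u, v))
        if labels[u] != labels[v] and pair not in edge_set:
            edge_set.add(pair)      # insertion across classes
            changes += 1
        elif labels[u] == labels[v] and pair in edge_set:
            edge_set.remove(pair)   # deletion within a class
            changes += 1
    return edge_set
```

Setting `budget` to a small value keeps the perturbation sparse, which is the usual constraint in test-time structure attacks.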

Artifact-Robust Graph-Based Learning in Digital Pathology

Oct 27, 2023

Abstract: Whole slide images (WSIs) are digitized images of tissues placed on glass slides, produced using advanced scanners. The digital processing of WSIs is challenging because they are gigapixel images stored in a multi-resolution format. A common challenge with WSIs is that perturbations and artifacts are inevitable when storing and digitizing the glass slides. These perturbations include motion, which often arises from slide movement during placement, and changes in hue and brightness due to variations in staining chemicals and the quality of digitizing scanners. In this work, a novel robust learning approach that accounts for these artifacts is presented. Because of the size and resolution of WSIs, and to account for neighborhood information, graph-based methods are called for. We use a graph convolutional network (GCN) to extract features from the graph representing a WSI. A denoiser and pooling layer control the effects of perturbations in WSIs, and the output is passed to a transformer for the classification of different grades of prostate cancer. To evaluate the efficacy of the proposed approach, the model without the denoiser is trained and tested on WSIs without any perturbation; different perturbations are then introduced in the WSIs, which are passed through the network with the denoiser. The accuracy and kappa scores of the proposed model on a prostate cancer dataset show significant improvement in cancer diagnosis compared with non-robust algorithms.
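To make the hue and brightness perturbations concrete, here is a minimal sketch using only the Python standard library. It is an assumption about how such artifacts could be simulated on RGB patches, not the paper's perturbation pipeline; `perturb_pixel`, `perturb_patch`, `hue_shift`, and `brightness_scale` are illustrative names, and motion artifacts are not covered.

```python
import colorsys


def perturb_pixel(rgb, hue_shift=0.0, brightness_scale=1.0):
    """Shift hue and scale brightness of one 8-bit RGB pixel.

    hue_shift        : fraction of a full hue turn, e.g. 0.05
    brightness_scale : multiplier on the HSV value channel
    """
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    h = (h + hue_shift) % 1.0                       # hue wraps around
    v = min(1.0, max(0.0, v * brightness_scale))    # clamp to valid range
    return tuple(round(c * 255) for c in colorsys.hsv_to_rgb(h, s, v))


def perturb_patch(patch, hue_shift=0.0, brightness_scale=1.0):
    """Apply the same perturbation to every pixel of a patch.

    patch is a list of rows, each row a list of (R, G, B) tuples.
    """
    return [
        [perturb_pixel(p, hue_shift, brightness_scale) for p in row]
        for row in patch
    ]
```

Working in HSV keeps the two effects separate: staining variation maps mostly to hue, while scanner exposure maps mostly to the value channel.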

Efficient OCT Image Segmentation Using Neural Architecture Search

Jul 28, 2020

Abstract: In this work, we propose a Neural Architecture Search (NAS) approach for retinal layer segmentation in Optical Coherence Tomography (OCT) scans. We incorporate the U-Net architecture into the NAS framework as its backbone for segmenting the retinal layers in our collected and pre-processed OCT image dataset. At the pre-processing stage, we apply super-resolution and image processing techniques to the raw OCT scans to improve their quality. For our search strategy, different primitive operations are suggested to find the down- and up-sampling cell blocks, and the binary gate method is applied to make the search strategy practical for the task at hand. We empirically evaluated our method on our in-house OCT dataset. The experimental results demonstrate that the self-adapting NAS-Unet architecture substantially outperformed the competitive human-designed architecture, achieving 95.4% in the mean Intersection over Union metric and 78.7% in the Dice similarity coefficient.
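The two segmentation metrics reported above can be computed as in the following sketch. It assumes flattened integer label maps and macro-averaging over classes that appear in either map; the abstract does not state the averaging convention, and `segmentation_scores` is a name introduced here.

```python
def segmentation_scores(pred, target, num_classes):
    """Return (mean IoU, mean Dice) over classes present in pred or target.

    pred, target : flat sequences of integer class labels, equal length
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    ious, dices = [], []
    for c in range(num_classes):
        tp = sum(p == c and t == c for p, t in zip(pred, target))
        fp = sum(p == c and t != c for p, t in zip(pred, target))
        fn = sum(p != c and t == c for p, t in zip(pred, target))
        if tp + fp + fn == 0:
            continue  # class absent from both maps: skip it
        ious.append(tp / (tp + fp + fn))
        dices.append(2 * tp / (2 * tp + fp + fn))
    if not ious:
        raise ValueError("no class is present in either map")
    return sum(ious) / len(ious), sum(dices) / len(dices)
```

A 2-D label map can be flattened row by row before calling the function, for example `[label for row in mask for label in row]`.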

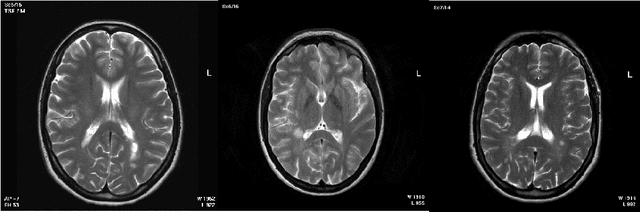

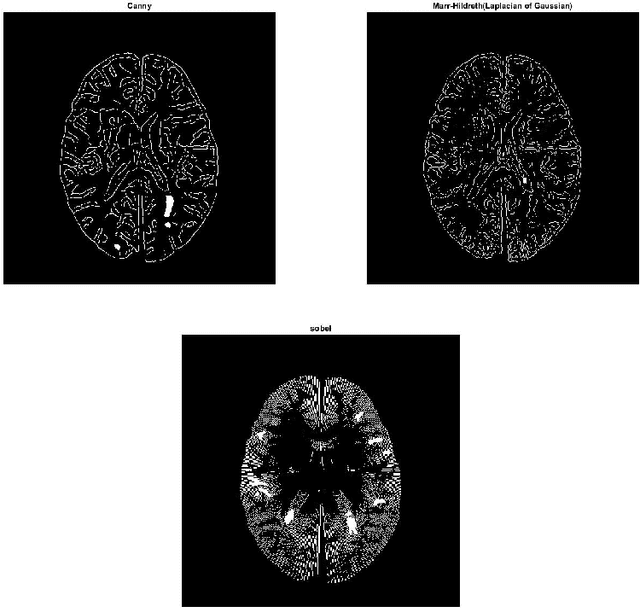

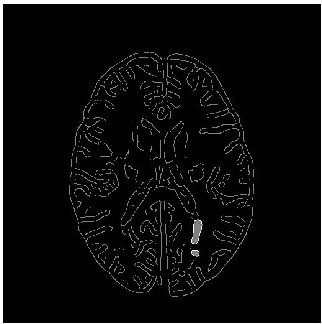

Segmentation of Multiple Sclerosis lesion in brain MR images using Fuzzy C-Means

Apr 10, 2018

Abstract: Magnetic resonance images (MRI) play an important role in supporting and substituting clinical information in the diagnosis of multiple sclerosis (MS) by revealing lesions in brain MR images. In this paper, an algorithm for MS lesion segmentation from brain MR images is presented. We revisit modifications of the fuzzy c-means (FCM) algorithm and Canny edge detection. By modifying the fuzzy c-means clustering algorithm and applying the Canny contraction principle, a relationship between MS lesions and edge detection is established. For the special case of FCM, we derive a sufficient condition on the clustering parameters that allows the resulting clusterings to be identified as (local) minima of the objective function.
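For readers unfamiliar with fuzzy c-means, the standard alternating update can be sketched on one-dimensional intensities as below. This is the textbook FCM iteration, not the modified variant the abstract describes, and it omits the Canny edge step; the function names, the fuzzifier default `m=2.0`, and the fixed iteration count are assumptions of this sketch.

```python
def memberships(values, centers, m=2.0, eps=1e-9):
    """Membership of each value in each cluster; every row sums to 1."""
    power = 2.0 / (m - 1.0)
    rows = []
    for x in values:
        # eps avoids division by zero when a value sits on a center.
        d = [abs(x - c) + eps for c in centers]
        rows.append([1.0 / sum((di / dj) ** power for dj in d) for di in d])
    return rows


def fuzzy_c_means(values, init_centers, m=2.0, iters=50):
    """Alternate membership and center updates for a fixed number of steps."""
    centers = list(init_centers)
    for _ in range(iters):
        u = memberships(values, centers, m)
        # Each center is the mean of the data weighted by membership**m.
        centers = [
            sum(u[k][i] ** m * values[k] for k in range(len(values)))
            / sum(u[k][i] ** m for k in range(len(values)))
            for i in range(len(centers))
        ]
    return centers, memberships(values, centers, m)
```

Each pass does not increase the FCM objective, the membership-weighted sum of squared distances, which is why the result is characterised as a local rather than a global minimum.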
