Ryan Walsh

Feasibility of Remote Landmark Identification for Cricothyrotomy Using Robotic Palpation

Oct 22, 2021

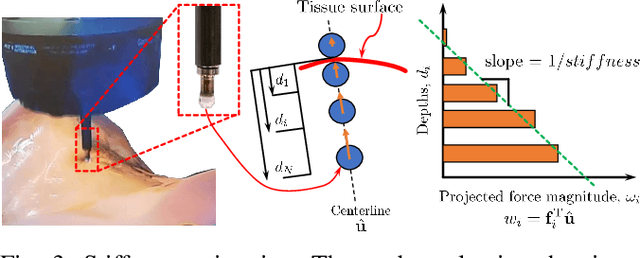

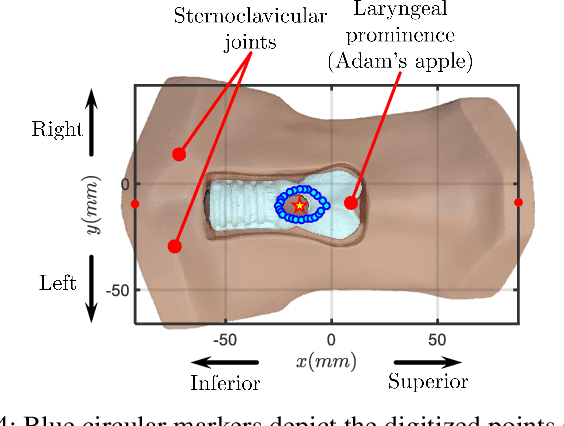

Abstract: Cricothyrotomy is a life-saving emergency intervention that secures an alternate airway route after a neck injury or obstruction. The procedure starts with identifying the correct location (the cricothyroid membrane) for creating an incision to insert an endotracheal tube. This location is determined using a combination of visual and palpation cues. Enabling robot-assisted remote cricothyrotomy may extend this life-saving procedure to injured soldiers or patients who may not be readily accessible for on-site intervention during search-and-rescue scenarios. As a first step towards achieving this goal, this paper explores the feasibility of palpation-assisted landmark identification for cricothyrotomy. Using a cricothyrotomy training simulator, we explore several alternatives for in-situ remote localization of the cricothyroid membrane. These alternatives include a) unaided telemanipulation, b) telemanipulation with direct force feedback, c) telemanipulation with superimposed motion excitation for online stiffness estimation and display, and d) a fully autonomous palpation scan initialized based on the user's understanding of key anatomical landmarks. Using the manually digitized cricothyroid membrane location as ground truth, we compare these four methods for accuracy and repeatability of identifying the landmark for cricothyrotomy, time of completion, and ease of use. These preliminary results suggest that the accuracy of remote cricothyrotomy landmark identification is improved when the user is aided with visual and force cues. They also show that, with proper user initialization, landmark identification using remote palpation is feasible, thereby satisfying a key prerequisite for future robotic solutions for remote cricothyrotomy.

Hierarchical Bayesian Data Fusion for Robotic Platform Navigation

Apr 21, 2017

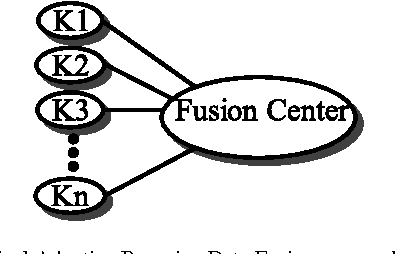

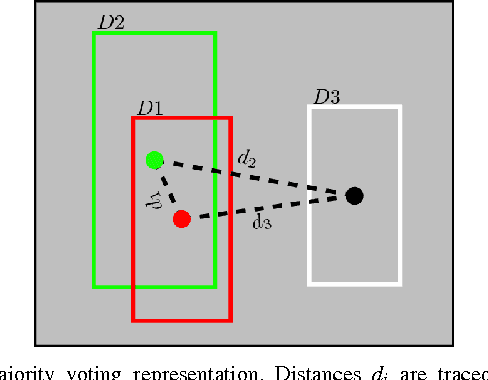

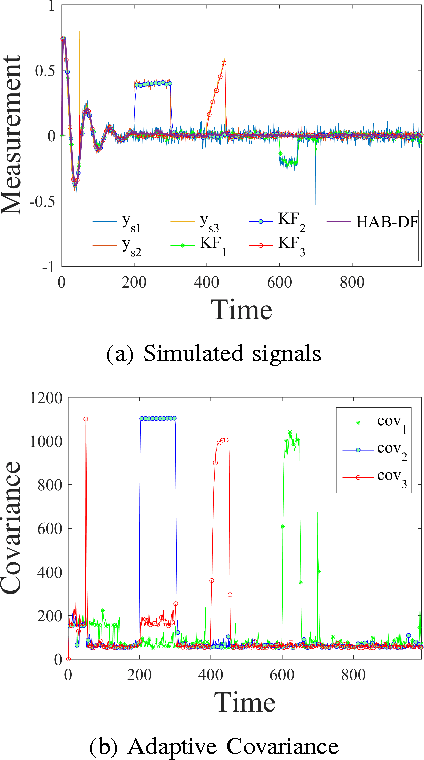

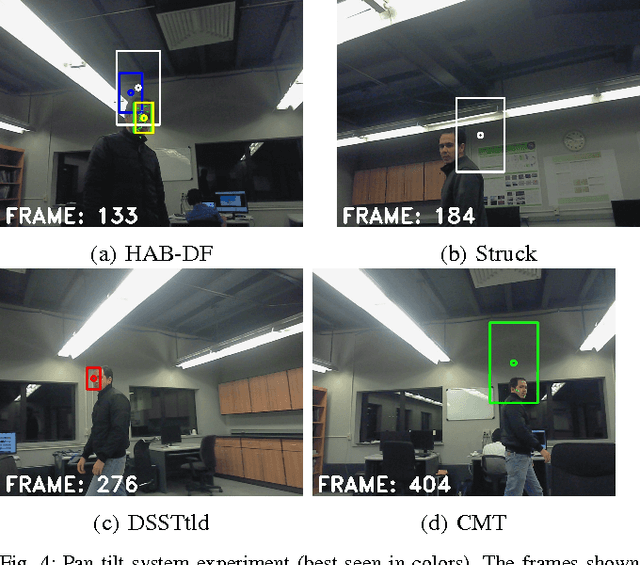

Abstract: Data fusion has become an active research topic in recent years. Growing computational performance has allowed the use of redundant sensors to measure a single phenomenon. While Bayesian fusion approaches are common in general applications, the computer vision field has largely neglected this approach. Most object-following algorithms have moved towards pure machine learning fusion techniques, which tend to lack flexibility. Consequently, a more general data fusion scheme is needed. Within this work, a hierarchical Bayesian fusion approach is proposed, which outperforms individual trackers by using redundant measurements. The adaptive framework is achieved by relying on each measurement's local statistics and a global softened majority voting. The proposed approach was validated in a simulated application and on two robotic platforms.
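To make the idea of combining local statistics with a softened majority vote more concrete, here is a minimal sketch. It is not the method from the paper: the function name `fuse_measurements`, the `softness` parameter, the use of the median as the consensus, and the Gaussian vote kernel are all assumptions chosen for illustration. Each redundant measurement is weighted by its inverse variance (its local statistic) and then down-weighted smoothly according to how far it lies from the consensus of all measurements.

```python
import numpy as np


def fuse_measurements(measurements, variances, softness=1.0):
    """Fuse redundant scalar measurements of a single quantity.

    Hypothetical illustration only; names and the weighting scheme
    are assumptions, not the algorithm described in the abstract.
    """
    z = np.asarray(measurements, dtype=float)
    var = np.asarray(variances, dtype=float)

    # Local statistics: a more certain sensor (smaller variance)
    # receives a larger inverse-variance weight.
    precision = 1.0 / var

    # Softened majority vote: instead of discarding sensors that
    # disagree with the majority, scale them down smoothly with a
    # Gaussian kernel centred on the median of all measurements.
    consensus = np.median(z)
    vote = np.exp(-((z - consensus) ** 2) / (2.0 * softness ** 2))

    # Combine both factors and normalise so the weights sum to one.
    weights = precision * vote
    weights = weights / weights.sum()

    fused = float(np.sum(weights * z))
    return fused, weights


# Three sensors agree near 1.0; the fourth is a gross outlier.
fused, weights = fuse_measurements(
    [1.0, 1.1, 0.9, 5.0], [0.01, 0.01, 0.01, 0.01], softness=0.5
)
```

In this example the outlying fourth measurement receives a near-zero weight, so the fused value stays close to the three agreeing sensors, whereas a plain average would be pulled to 2.0.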
