Richard Povinelli

Hierarchical Bayesian Data Fusion for Robotic Platform Navigation

Apr 21, 2017

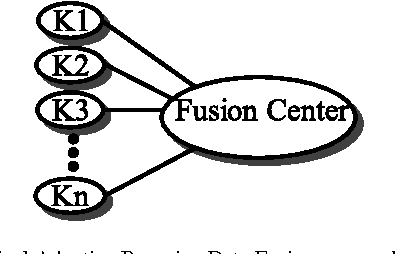

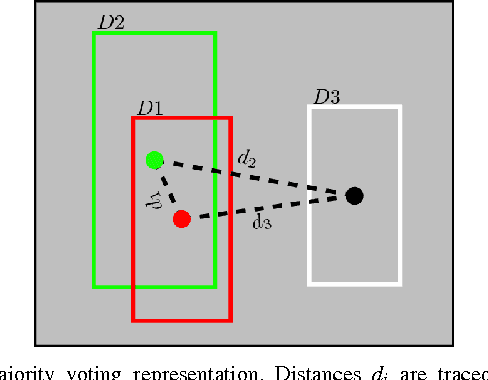

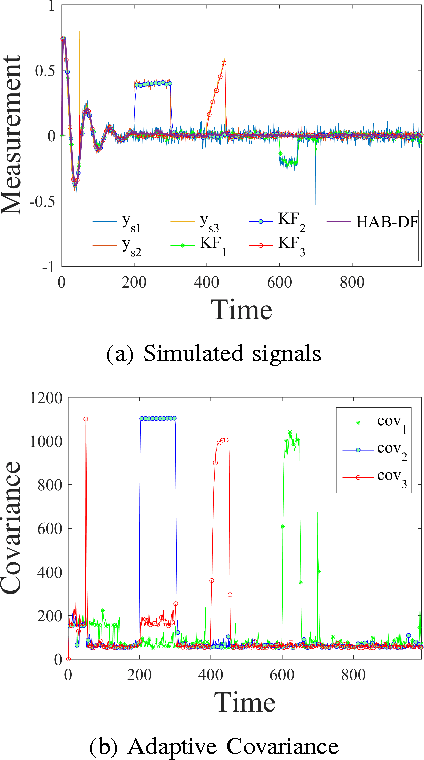

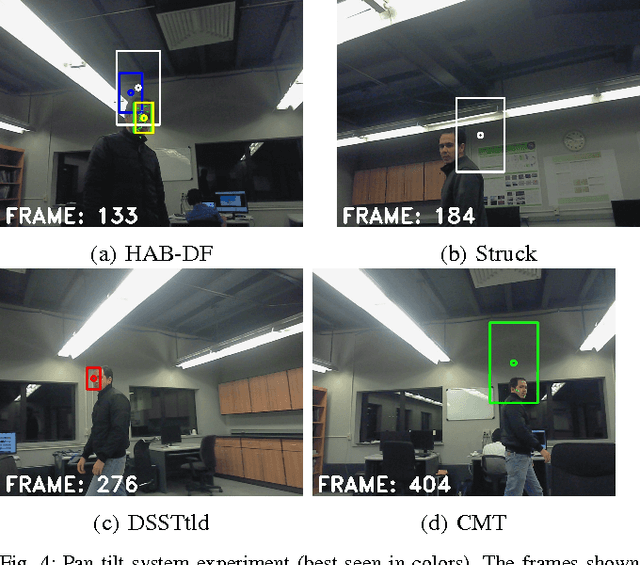

Abstract: Data fusion has become an active research topic in recent years. Growing computational performance has made it practical to use redundant sensors to measure a single phenomenon. While Bayesian fusion approaches are common in general applications, the computer vision field has largely neglected them. Most object-following algorithms have moved toward pure machine learning fusion techniques, which tend to lack flexibility. Consequently, a more general data fusion scheme is needed. This work proposes a hierarchical Bayesian fusion approach that outperforms individual trackers by using redundant measurements. The framework adapts by relying on each measurement's local statistics and a global softened majority voting scheme. The proposed approach was validated in a simulated application and on two robotic platforms.
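
The abstract does not give the fusion equations, so the following is only a minimal sketch of the general idea, not the authors' method. It assumes scalar measurements with known variances, uses inverse-variance weighting as a stand-in for the "local statistics" step, and uses a Gaussian weight around the median as one possible reading of "softened majority voting". The function name `fuse_measurements` and the `softness` parameter are hypothetical.

```python
import math


def fuse_measurements(measurements, variances, softness=1.0):
    """Fuse redundant scalar measurements into one estimate (illustrative only).

    Assumptions (not from the paper): each sensor reports a value and a
    variance, and agreement is judged against the median of all values.
    """
    # Global step: soft vote. A sensor's vote decays smoothly with its
    # distance from the median, instead of being kept or rejected outright.
    ordered = sorted(measurements)
    n = len(ordered)
    mid = n // 2
    median = ordered[mid] if n % 2 else 0.5 * (ordered[mid - 1] + ordered[mid])
    votes = [
        math.exp(-((z - median) ** 2) / (2.0 * softness ** 2))
        for z in measurements
    ]

    # Local step: inverse-variance precision of each sensor, scaled by its vote.
    precisions = [vote / var for vote, var in zip(votes, variances)]
    total_precision = sum(precisions)

    # Precision-weighted mean and the variance of the fused estimate.
    fused = sum(p * z for p, z in zip(precisions, measurements)) / total_precision
    return fused, 1.0 / total_precision


# Two trackers agree near 1.0 while a third has drifted to 5.0.
estimate, variance = fuse_measurements([1.0, 1.1, 5.0], [0.1, 0.1, 0.1])
```

In this sketch the drifting tracker receives a very small vote, so the fused estimate stays close to the two agreeing trackers, whereas a plain average would be pulled toward the outlier.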