Ryan L. Murphy

Relational Pooling for Graph Representations

Mar 06, 2019

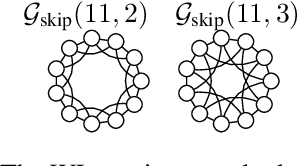

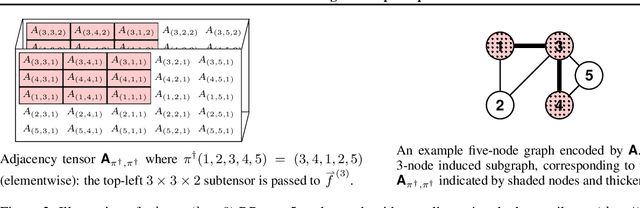

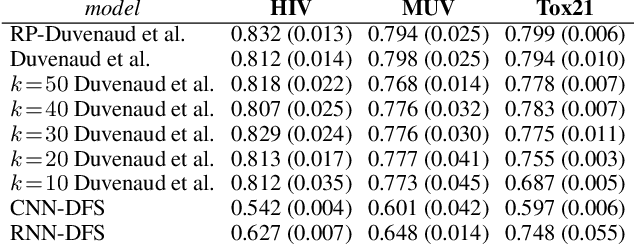

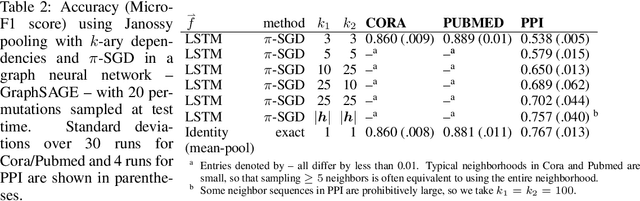

Abstract: This work generalizes graph neural networks (GNNs) beyond those based on the Weisfeiler-Lehman (WL) algorithm, graph Laplacians, and graph diffusion kernels. Our approach, denoted Relational Pooling (RP), draws from the theory of finite partial exchangeability to provide a framework with maximal representation power for graphs. RP can work with existing graph representation models, and somewhat counterintuitively, can make them even more powerful than the original WL isomorphism test. Additionally, RP is the first theoretically sound framework to use architectures like Recurrent Neural Networks and Convolutional Neural Networks for graph classification. RP also has graph kernels as a special case. We demonstrate improved performance of novel RP-based graph representations over current state-of-the-art methods on a number of tasks.

Janossy Pooling: Learning Deep Permutation-Invariant Functions for Variable-Size Inputs

Nov 26, 2018

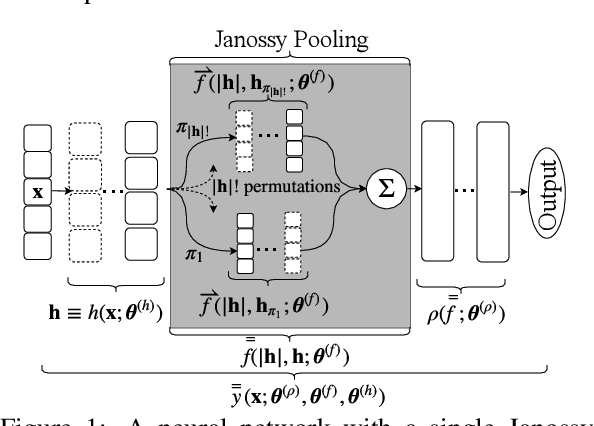

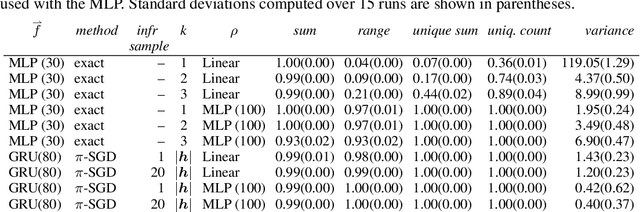

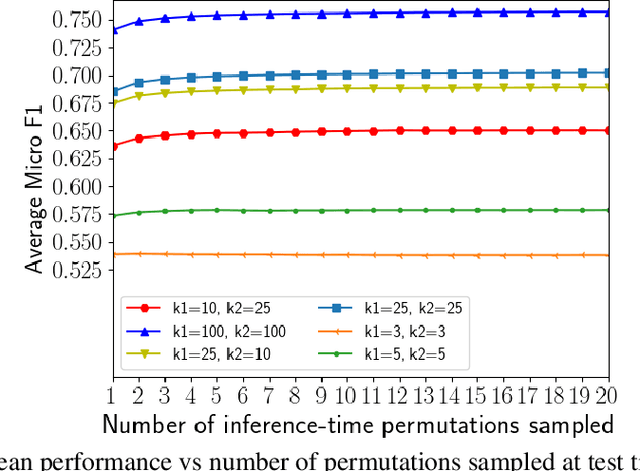

Abstract: We consider a simple and overarching representation for permutation-invariant functions of sequences (or set functions). Our approach, which we call Janossy pooling, expresses a permutation-invariant function as the average of a permutation-sensitive function applied to all reorderings of the input sequence. This allows us to leverage the rich and mature literature on permutation-sensitive functions to construct novel and flexible permutation-invariant functions. If carried out naively, Janossy pooling can be computationally prohibitive. To allow computational tractability, we consider three kinds of approximations: canonical orderings of sequences, functions with k-order interactions, and stochastic optimization algorithms with random permutations. Our framework unifies a variety of existing work in the literature, and suggests possible modeling and algorithmic extensions. We explore a few in our experiments, which demonstrate improved performance over current state-of-the-art methods.
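
The averaging idea described in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names (`janossy_pool`, `janossy_pool_sampled`, `order_sensitive`) and the toy position-weighted sum are assumptions chosen for illustration. The first function averages over all reorderings exactly, and the second approximates that average with random permutations, loosely in the spirit of the stochastic approach the abstract mentions.

```python
import itertools
import random


def order_sensitive(seq):
    # Hypothetical permutation-sensitive function: a position-weighted sum.
    # Reordering the input generally changes the result.
    return sum((i + 1) * x for i, x in enumerate(seq))


def janossy_pool(f, xs):
    # Exact pooling: average f over every reordering of xs.
    # Cost grows as n!, so this is only feasible for very short inputs.
    perms = list(itertools.permutations(xs))
    return sum(f(p) for p in perms) / len(perms)


def janossy_pool_sampled(f, xs, num_samples, rng):
    # Monte Carlo approximation: average f over randomly drawn reorderings.
    total = 0.0
    for _ in range(num_samples):
        p = list(xs)
        rng.shuffle(p)
        total += f(tuple(p))
    return total / num_samples


if __name__ == "__main__":
    a = janossy_pool(order_sensitive, (1, 2, 3))
    b = janossy_pool(order_sensitive, (3, 1, 2))
    print(a, b)  # the two values agree: the pooled function ignores input order
    rng = random.Random(0)
    print(janossy_pool_sampled(order_sensitive, (1, 2, 3), 2000, rng))
```

Although `order_sensitive` itself depends on the input order, the exact pooled value is the same for any reordering of the input, and the sampled estimate approaches it as the number of samples grows.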
