Ronghua Du

CAT: A Causally Graph Attention Network for Trimming Heterophilic Graph

Dec 15, 2023

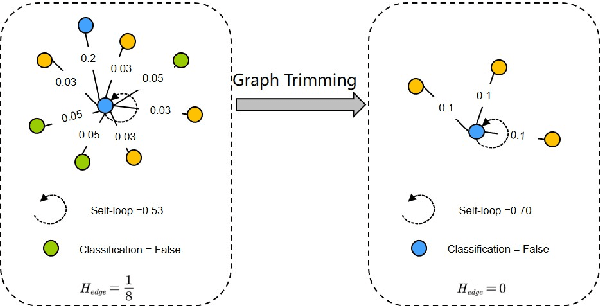

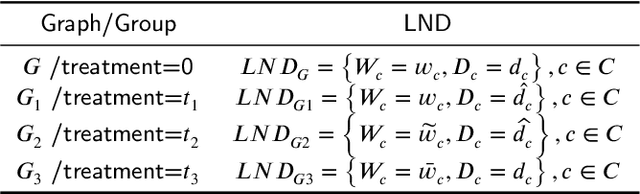

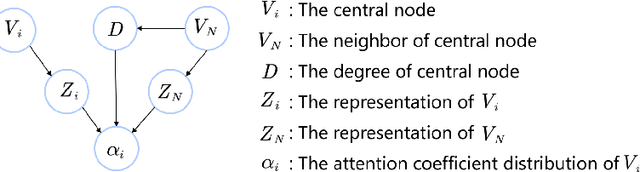

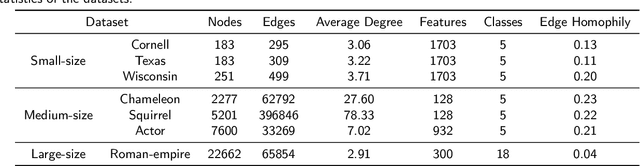

Abstract: The Local Attention-guided Message Passing Mechanism (LAMP) adopted in Graph Attention Networks (GATs) is designed to adaptively learn the importance of neighboring nodes for better local aggregation on the graph. It effectively brings the representations of similar neighbors closer, thus showing stronger discrimination ability. However, existing GATs suffer a significant decline in discrimination ability on heterophilic graphs, because the high proportion of dissimilar neighbors can weaken the self-attention of the central node, jointly resulting in the deviation of the central node from similar nodes in the representation space. This kind of effect generated by neighboring nodes is called the Distraction Effect (DE) in this paper. To estimate and weaken the DE of neighboring nodes, we propose a Causally graph Attention network for Trimming heterophilic graph (CAT). To estimate the DE, since the DE is generated through two paths (grabbing the attention assigned to neighbors and reducing the self-attention of the central node), we use the Total Effect to model the DE; the Total Effect is a causal estimand that can be estimated from intervened data. To weaken the DE, we identify the neighbors with the highest DE (we call them Distraction Neighbors) and remove them. We adopt three representative GATs as the base model within the proposed CAT framework and conduct experiments on seven heterophilic datasets in three different sizes. Comparative experiments show that CAT can improve the node classification accuracy of all base GAT models. Ablation experiments and visualization further validate the enhancement of discrimination ability brought by CAT. The source code is available at https://github.com/GeoX-Lab/CAT.

* 24 pages, 17 figures, 4 tables
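
The intervene-and-compare idea in the abstract above can be illustrated with a toy sketch. This is not the authors' estimator (see their repository for that): the function names, the `reference` vector (standing in for a representation of similar nodes), and the distance-based outcome are all assumptions made for illustration. Removing a neighbor and renormalizing the softmax covers both paths the abstract mentions, since the removed neighbor's attention is freed and the central node's self-attention rises.

```python
import numpy as np

def softmax(z):
    z = z - z.max()          # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def aggregate(logits, feats, keep):
    # Attention-weighted sum over the kept nodes (index 0 is the central node).
    weights = softmax(logits[keep])
    return weights @ feats[keep]

def distraction_scores(logits, feats, reference):
    # Hypothetical score: how much closer the aggregated representation gets
    # to `reference` when neighbor j is removed (an intervention on the graph).
    idx = np.arange(len(logits))
    base = np.linalg.norm(aggregate(logits, feats, idx) - reference)
    scores = {}
    for j in idx[1:]:
        keep = idx[idx != j]
        dist = np.linalg.norm(aggregate(logits, feats, keep) - reference)
        scores[int(j)] = float(base - dist)   # positive: j was distracting
    return scores

# Toy data: node 0 is central, neighbor 1 is similar, neighbor 2 is dissimilar.
feats = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
logits = np.zeros(3)                  # equal attention logits (assumed)
reference = np.array([1.0, 0.0])      # assumed representation of similar nodes
scores = distraction_scores(logits, feats, reference)
to_trim = max(scores, key=scores.get) # neighbor with the highest toy score
```

In this toy setup the dissimilar neighbor receives the highest score and would be the one trimmed.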

Alleviating neighbor bias: augmenting graph self-supervise learning with structural equivalent positive samples

Dec 08, 2022

Abstract: In recent years, using a self-supervised learning framework to learn the general characteristics of graphs has been considered a promising paradigm for graph representation learning. The core of self-supervised learning strategies for graph neural networks lies in constructing suitable positive sample selection strategies. However, existing GNNs typically aggregate information from neighboring nodes to update node representations, leading to an over-reliance on neighboring positive samples, i.e., homophilous samples, while ignoring long-range positive samples, i.e., samples that are far apart on the graph but structurally equivalent, a problem we call "neighbor bias." This neighbor bias can reduce the generalization performance of GNNs. In this paper, we argue that the generalization properties of GNNs should be determined by combining homogeneous samples and structurally equivalent samples, which we call the "GC combination hypothesis." Therefore, we propose a topological signal-driven self-supervised method. It uses a topological information-guided structural equivalence sampling strategy. First, we extract multiscale topological features using persistent homology. Then we compute the structural equivalence of node pairs based on their topological features. In particular, we design a topological loss function that pulls non-neighboring node pairs with high structural equivalence closer together in the representation space to alleviate neighbor bias. Finally, we use a joint training mechanism to adjust the effect of structural equivalence on the model to fit datasets with different characteristics. We conducted experiments on the node classification task across seven graph datasets. The results show that the model performance can be effectively improved using a strategy of topological signal enhancement.

STGC-GNNs: A GNN-based traffic prediction framework with a spatial-temporal Granger causality graph

Oct 30, 2022

Abstract: The key to traffic prediction is to accurately depict the temporal dynamics of traffic flow traveling in a road network, so it is important to model the spatial dependence of the road network. The essence of spatial dependence is to accurately describe how traffic information transmission is affected by other nodes in the road network. The GNN-based traffic prediction model, as a benchmark for traffic prediction, has become the most common method because of its ability to model spatial dependence by transmitting traffic information with the message passing mechanism. However, existing methods model a local and static spatial dependence, which cannot transmit the global-dynamic traffic information (GDTi) required for long-term prediction. The challenge is the difficulty of detecting the precise transmission of GDTi due to the uncertainty of individual transport, especially for long-term transmission. In this paper, we propose a new hypothesis: GDTi behaves macroscopically as a transmitting causal relationship (TCR) underlying traffic flow, which remains stable under dynamically changing traffic flow. We further propose spatial-temporal Granger causality (STGC) to express TCR, which models global and dynamic spatial dependence. To model global transmission, we model the causal order and causal lag of the TCR's global transmission by a spatial-temporal alignment algorithm. To capture dynamic spatial dependence, we approximate the stable TCR underlying dynamic traffic flow by a Granger causality test. The experimental results on three backbone models show that using STGC to model the spatial dependence gives better results than the original model for 45 min and 1 h long-term prediction.
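
As a rough illustration of the Granger causality test mentioned in the abstract above, the sketch below computes the classical pairwise F statistic with ordinary least squares. It is a textbook version, not the spatial-temporal variant proposed in the paper; the function name, the lag of 2, and the synthetic series are assumptions chosen for the example.

```python
import numpy as np

def granger_f_stat(x, y, lag=2):
    """F statistic for the hypothesis that lags of x help predict y."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    target = y[lag:]
    # Column k holds the series shifted back by k steps, aligned with target.
    y_lags = np.column_stack([y[lag - k:n - k] for k in range(1, lag + 1)])
    x_lags = np.column_stack([x[lag - k:n - k] for k in range(1, lag + 1)])
    ones = np.ones((n - lag, 1))
    restricted = np.hstack([ones, y_lags])       # y explained by its own past
    full = np.hstack([ones, y_lags, x_lags])     # plus the past of x

    def rss(design):
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        return float(resid @ resid)

    rss_r, rss_f = rss(restricted), rss(full)
    dof = (n - lag) - full.shape[1]              # residual degrees of freedom
    return ((rss_r - rss_f) / lag) / (rss_f / dof)

# Synthetic example (assumed data): y follows x with a one-step delay.
rng = np.random.default_rng(0)
x = rng.normal(size=500)
y = np.zeros(500)
y[1:] = 0.8 * x[:-1] + 0.1 * rng.normal(size=499)
f_xy = granger_f_stat(x, y)   # tests whether x Granger-causes y
f_yx = granger_f_stat(y, x)   # tests the reverse direction
```

A large statistic in one direction and a small one in the other suggests a directed, lagged dependence; in a road network, such pairwise scores could be used to weight edges between sensors, which is the general role the abstract assigns to the test.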
