Ronak Gupta

MaskMTL: Attribute prediction in masked facial images with deep multitask learning

Jan 11, 2022

Abstract: Predicting attributes in landmark-free facial images is a challenging task in itself, and it becomes harder still when the face is occluded by a mask. Smart access control gates that rely on identity verification, as well as secure login to personal electronic devices, may use the face as a biometric trait. The Covid-19 pandemic in particular has underlined the need for hygienic, contactless identity verification. In such settings mask use becomes unavoidable, and attribute prediction helps in shielding vulnerable target groups from community spread or in ensuring social distancing for them in a collaborative environment. We create a masked face dataset by overlaying masks of different shapes, sizes and textures to model the variability introduced by wearing a mask. This paper presents a deep Multi-Task Learning (MTL) approach to jointly estimate various heterogeneous attributes from a single masked facial image. Experimental results on the benchmark UTKFace face attribute dataset demonstrate that the proposed approach outperforms competing techniques. The source code is available at https://github.com/ritikajha/Attribute-prediction-in-masked-facial-images-with-deep-multitask-learning
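To make the idea of jointly estimating heterogeneous attributes concrete, the following is a minimal sketch of a weighted multi-task loss. It is not taken from the paper or its repository. It assumes three heads matching the labels UTKFace provides (age, gender, ethnicity), an absolute-error loss for age, cross-entropy for the two categorical heads, and hand-picked task weights; the function names and the weight values are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, label):
    """Negative log-likelihood of the true class index."""
    return -math.log(softmax(logits)[label])

def multitask_loss(outputs, targets, weights):
    """Weighted sum of per-task losses for one image (assumed formulation).

    outputs: {"age": float, "gender": [logits], "ethnicity": [logits]}
    targets: {"age": float, "gender": int, "ethnicity": int}
    weights: {"age": float, "gender": float, "ethnicity": float}
    """
    losses = {
        "age": abs(outputs["age"] - targets["age"]),          # regression head
        "gender": cross_entropy(outputs["gender"], targets["gender"]),
        "ethnicity": cross_entropy(outputs["ethnicity"], targets["ethnicity"]),
    }
    total = sum(weights[task] * loss for task, loss in losses.items())
    return total, losses

# Hypothetical predictions for a single masked face.
outputs = {"age": 30.0, "gender": [0.0, 0.0], "ethnicity": [0.0] * 5}
targets = {"age": 25.0, "gender": 1, "ethnicity": 2}
weights = {"age": 0.1, "gender": 1.0, "ethnicity": 1.0}  # assumed, not tuned

total, per_task = multitask_loss(outputs, targets, weights)
print(total, per_task)
```

The age weight is set lower here only because an absolute error in years is on a larger scale than the cross-entropy terms; how the tasks should actually be balanced is a design choice this sketch does not settle.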

Compressive sensing based privacy for fall detection

Jan 10, 2020

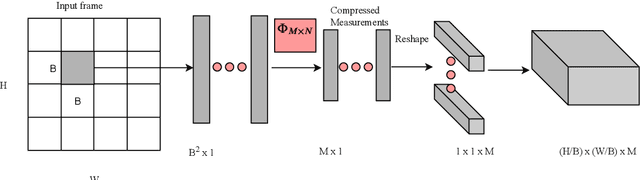

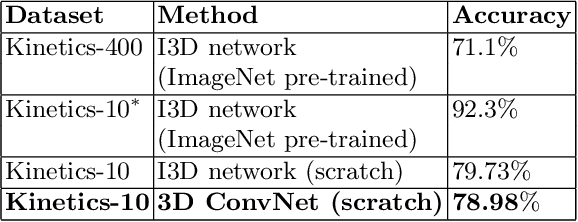

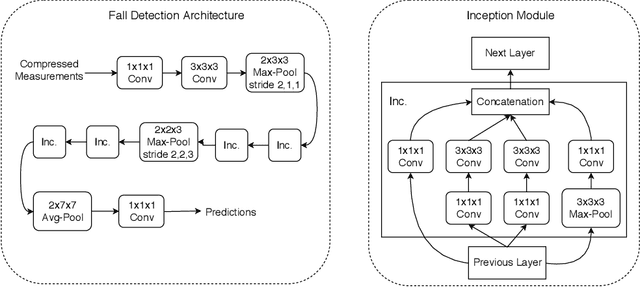

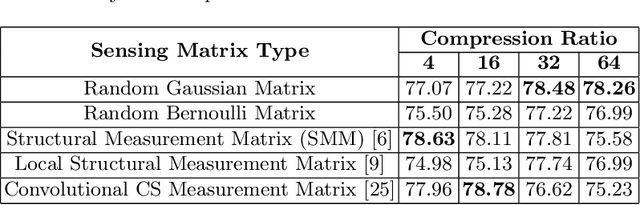

Abstract: Fall detection is of great importance in healthcare, where timely detection allows for immediate medical assistance. In this context, we propose a 3D ConvNet architecture built from 3D Inception modules for fall detection. The proposed architecture is a custom version of the Inflated 3D (I3D) architecture that takes as its spatio-temporal input compressed measurements of a video sequence, obtained from a compressive sensing framework, rather than the raw video sequence used by the I3D convolutional neural network. This design is adopted because privacy is a major concern for patients monitored through RGB cameras. The proposed framework is flexible with respect to a wide variety of measurement matrices. Ten action classes randomly selected from Kinetics-400, with no fall examples, are used to train our 3D ConvNet after applying compressive sensing with different types of sensing matrices to the original video clips. Our results show that 3D ConvNet performance remains unchanged across different sensing matrices. In addition, the performance obtained with the Kinetics pre-trained 3D ConvNet on compressively sensed fall videos from benchmark datasets is better than that of state-of-the-art techniques.
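As a rough illustration of how compressed measurements of a clip might be produced with different sensing matrices, the sketch below applies the standard compressive sensing model y = Φx to each flattened frame. It is not the paper's pipeline. The choice of Gaussian and Bernoulli matrices, the per-frame application, the toy sizes, and all function names are assumptions made for the example.

```python
import math
import random

def gaussian_matrix(m, n, rng):
    """m x n sensing matrix with i.i.d. zero-mean Gaussian entries, variance 1/m."""
    scale = 1.0 / math.sqrt(m)
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(n)] for _ in range(m)]

def bernoulli_matrix(m, n, rng):
    """m x n sensing matrix with i.i.d. entries of +1/sqrt(m) or -1/sqrt(m)."""
    scale = 1.0 / math.sqrt(m)
    return [[rng.choice((-scale, scale)) for _ in range(n)] for _ in range(m)]

def sense_frame(phi, frame):
    """Compute y = phi @ x for one flattened frame x."""
    return [sum(p * x for p, x in zip(row, frame)) for row in phi]

def sense_clip(phi, clip):
    """Apply the same sensing matrix to every frame of a clip."""
    return [sense_frame(phi, frame) for frame in clip]

rng = random.Random(0)
n_pixels, n_measurements, n_frames = 64, 16, 8  # toy sizes, e.g. 8x8 frames

# Random stand-in for a video clip: n_frames flattened grayscale frames.
clip = [[rng.random() for _ in range(n_pixels)] for _ in range(n_frames)]

for make_matrix in (gaussian_matrix, bernoulli_matrix):
    phi = make_matrix(n_measurements, n_pixels, rng)
    measurements = sense_clip(phi, clip)
    # Each frame is reduced from n_pixels values to n_measurements values.
    print(make_matrix.__name__, len(measurements), len(measurements[0]))
```

In a setup like the one the abstract describes, the measurement sequence rather than the original frames would be fed to the 3D ConvNet, so the network never sees the raw RGB video.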
