Rohit Keshari

Separate Scene Text Detector for Unseen Scripts is Not All You Need

Jul 29, 2023

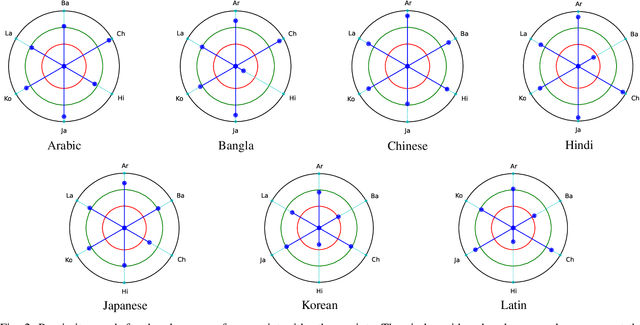

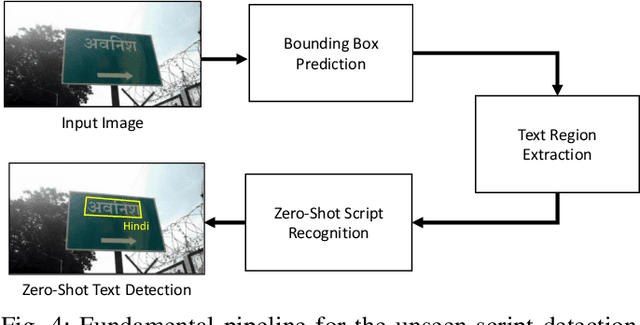

Abstract: Text detection in the wild is a well-known problem that becomes more challenging when multiple scripts are involved. In the last decade, some scripts have gained the attention of the research community and achieved good detection performance. However, many scripts are low-resourced for training deep learning-based scene text detectors. This raises a critical question: is separate training needed for new scripts? The question is unexplored in the field of scene text detection. This paper addresses the problem and proposes a solution for detecting scripts not present during training. We analyze cross-script text detection, i.e., training on one script and testing on another. We find that using the same annotation granularity (word-level or line-level) across scripts is crucial for cross-script text detection, while mismatched annotation granularity between scripts degrades performance. Additionally, for unseen script detection, the proposed solution uses vector embeddings to map the stroke information of text to the corresponding script category. The proposed method is validated on a well-known multi-lingual scene text dataset under a zero-shot setting. The results show the potential of the proposed method for unseen script detection in natural images.

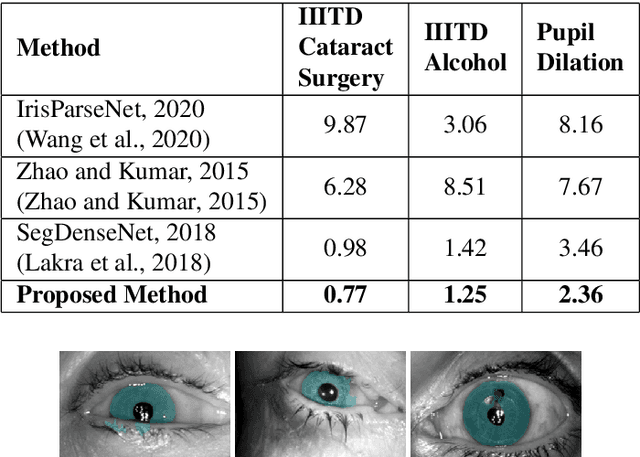

MTCD: Cataract Detection via Near Infrared Eye Images

Oct 06, 2021

Abstract: Globally, cataract is a common eye disease and one of the leading causes of blindness and vision impairment. The traditional process of detecting cataracts involves an eye examination with a slit-lamp microscope or ophthalmoscope by an ophthalmologist, who checks for clouding of the normally clear lens of the eye. The lack of resources and the shortage of experts burden healthcare systems throughout the world, and researchers are exploring AI solutions to assist the experts. Inspired by the progress in iris recognition, in this research, we present a novel algorithm for cataract detection using near-infrared (NIR) eye images. NIR cameras, which are popularly used in iris recognition, are relatively low cost and easy to operate compared to an ophthalmoscope setup for data capture. However, such NIR images have not been explored for cataract detection. We present deep learning-based eye segmentation and multitask classification networks for cataract detection using NIR images as input. The proposed segmentation algorithm efficiently and effectively detects non-ideal eye boundaries and is cost-effective, and the classification network yields very high classification performance on the cataract dataset.

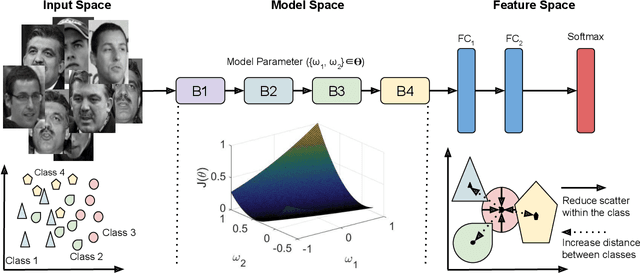

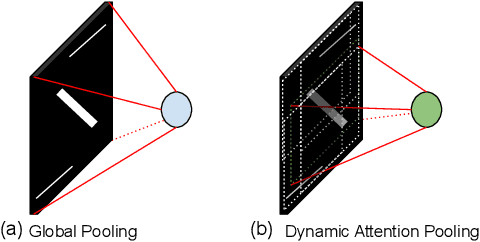

Unravelling Small Sample Size Problems in the Deep Learning World

Aug 08, 2020

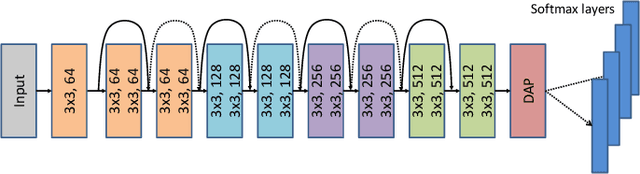

Abstract: The growth and success of deep learning approaches can be attributed to two major factors: the availability of hardware resources and the availability of a large number of training samples. For problems with large training databases, deep learning models have achieved superlative performance. However, there are many small sample size ($S^3$) problems for which it is not feasible to collect large training databases. It has been observed that deep learning models do not generalize well on $S^3$ problems and that specialized solutions are required. In this paper, we first present a review of deep learning algorithms for small sample size problems in which the algorithms are grouped according to the space in which they operate, i.e., input space, model space, and feature space. Second, we present the Dynamic Attention Pooling approach, which focuses on extracting global information from the most discriminative sub-part of the feature map. The performance of the proposed dynamic attention pooling is analyzed with a state-of-the-art ResNet model on relatively small publicly available datasets such as SVHN, C10, C100, and TinyImageNet.

Generalized Zero-Shot Learning Via Over-Complete Distribution

Apr 01, 2020

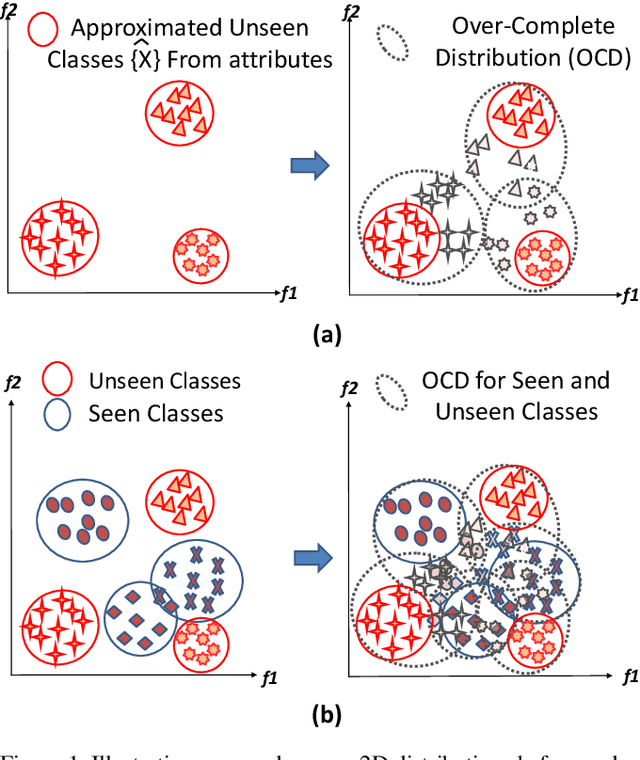

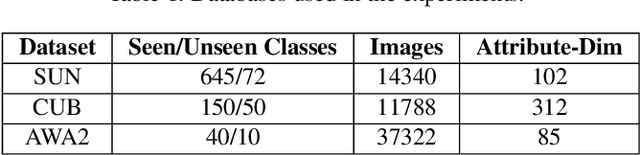

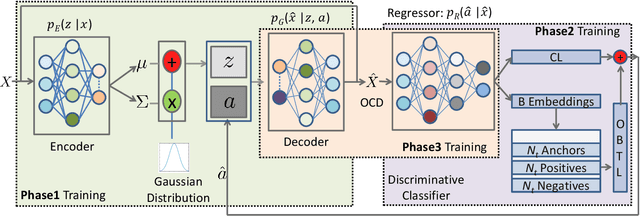

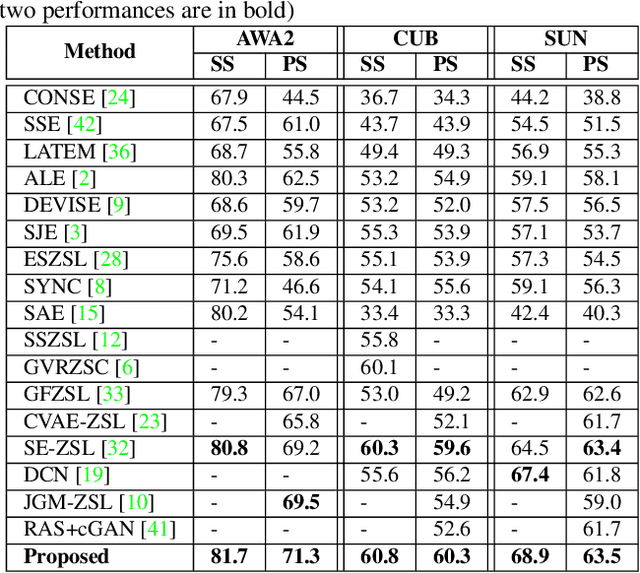

Abstract: A well-trained and generalized deep neural network (DNN) should be robust to both seen and unseen classes. However, the performance of most existing supervised DNN algorithms degrades for classes that are unseen in the training set. To learn a discriminative classifier that yields good performance in Zero-Shot Learning (ZSL) settings, we propose to generate an Over-Complete Distribution (OCD) of both seen and unseen classes using a Conditional Variational Autoencoder (CVAE). To enforce separability between classes and reduce the class scatter, we propose the use of Online Batch Triplet Loss (OBTL) and Center Loss (CL) on the generated OCD. The effectiveness of the framework is evaluated using both Zero-Shot Learning and Generalized Zero-Shot Learning protocols on three publicly available benchmark databases: SUN, CUB, and AWA2. The results show that generating over-complete distributions and forcing the classifier to learn a transform function from overlapping to non-overlapping distributions can improve performance on both seen and unseen classes.
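
As a rough illustration of the two losses named in the abstract above, the sketch below computes a center loss and a batch-hard triplet loss on a toy batch in plain Python. It is not the paper's implementation: the batch-hard mining rule, the Euclidean distance, the margin values, and all function names (`center_loss`, `batch_hard_triplet_loss`) are assumptions chosen for illustration, and the exact form of OBTL in the paper may differ.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def center_loss(features, labels, centers):
    """Mean of 0.5 * ||f - c_y||^2: pulls each feature toward its class center."""
    total = sum(0.5 * sq_dist(f, centers[y]) for f, y in zip(features, labels))
    return total / len(features)


def batch_hard_triplet_loss(features, labels, margin=1.0):
    """Assumed variant: for each anchor, use the farthest positive and the
    nearest negative found inside the batch, then apply a hinge with a margin."""
    losses = []
    for i, (anchor, ya) in enumerate(zip(features, labels)):
        pos = [math.sqrt(sq_dist(anchor, f))
               for j, (f, y) in enumerate(zip(features, labels))
               if y == ya and j != i]
        neg = [math.sqrt(sq_dist(anchor, f))
               for f, y in zip(features, labels) if y != ya]
        if pos and neg:  # skip anchors with no valid triplet in the batch
            losses.append(max(0.0, max(pos) - min(neg) + margin))
    return sum(losses) / len(losses) if losses else 0.0


# Toy batch: two classes, two 2-D samples each (hypothetical data).
features = [[0.0, 0.0], [0.0, 2.0], [4.0, 0.0], [4.0, 2.0]]
labels = [0, 0, 1, 1]
centers = {0: [0.0, 1.0], 1: [4.0, 1.0]}

print(center_loss(features, labels, centers))
print(batch_hard_triplet_loss(features, labels, margin=1.0))
print(batch_hard_triplet_loss(features, labels, margin=3.0))
```

The center loss shrinks within-class scatter, while the triplet term is zero only once every anchor is closer to its farthest positive than to its nearest negative by at least the margin, which is one way to read "enforce the separability between classes and reduce the class scatter".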

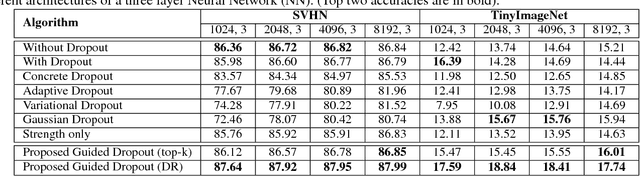

Guided Dropout

Dec 10, 2018

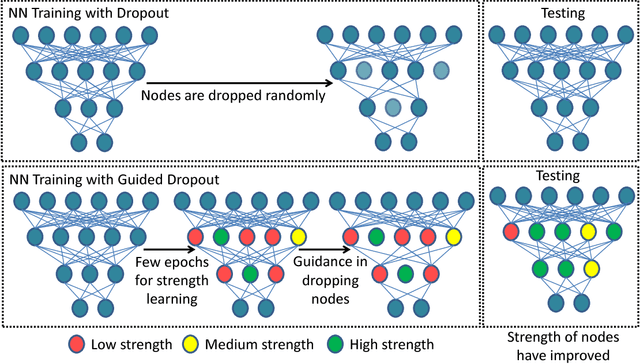

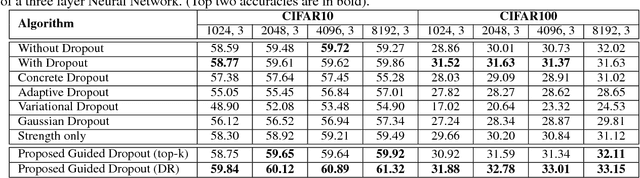

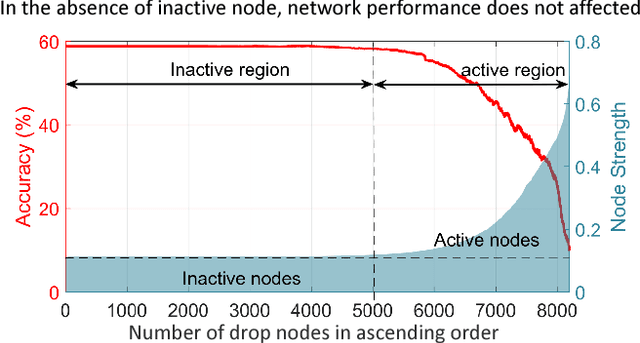

Abstract: Dropout is often used in deep neural networks to prevent over-fitting. Conventionally, dropout training invokes a random drop of nodes from the hidden layers of a neural network. It is our hypothesis that a guided selection of nodes for intelligent dropout can lead to better generalization than traditional dropout. In this research, we propose "guided dropout" for training deep neural networks, which drops nodes by measuring the strength of each node. We also demonstrate that conventional dropout is a specific case of the proposed guided dropout. Experimental evaluation on multiple datasets including MNIST, CIFAR10, CIFAR100, SVHN, and Tiny ImageNet demonstrates the efficacy of the proposed guided dropout.
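
The idea of choosing which nodes to drop from a per-node strength score can be sketched as follows. This is a minimal illustration, not the paper's algorithm: the abstract does not say how strength is measured or which nodes are preferred, so the rule used here (drop candidates are the strongest nodes), the parameters `drop_fraction` and `top_fraction`, and the function name `guided_dropout_mask` are all assumptions.

```python
import random


def guided_dropout_mask(strengths, drop_fraction, top_fraction=1.0, rng=None):
    """Return a 0/1 keep-mask over nodes.

    Assumption: nodes are ranked by strength and only the strongest
    `top_fraction` of them are candidates for dropping. With
    top_fraction=1.0 every node is a candidate, so the selection is
    uniformly random, as in conventional dropout (here with a fixed
    drop count instead of independent Bernoulli draws).
    """
    rng = rng or random.Random()
    n = len(strengths)
    n_candidates = max(1, round(top_fraction * n))
    # Node indices sorted from strongest to weakest.
    order = sorted(range(n), key=lambda i: strengths[i], reverse=True)
    candidates = order[:n_candidates]
    n_drop = min(len(candidates), round(drop_fraction * n))
    dropped = set(rng.sample(candidates, n_drop))
    return [0 if i in dropped else 1 for i in range(n)]


# Hypothetical strengths for a 4-node hidden layer.
strengths = [0.1, 0.9, 0.5, 0.7]

# Guided: drop half the nodes, chosen only among the two strongest.
guided = guided_dropout_mask(strengths, drop_fraction=0.5, top_fraction=0.5)

# Conventional-style: drop half the nodes, chosen among all of them.
uniform = guided_dropout_mask(strengths, drop_fraction=0.5, top_fraction=1.0,
                              rng=random.Random(0))
print(guided, uniform)
```

Setting `top_fraction=1.0` removes the guidance entirely, which mirrors the abstract's remark that conventional dropout is a specific case of guided dropout.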

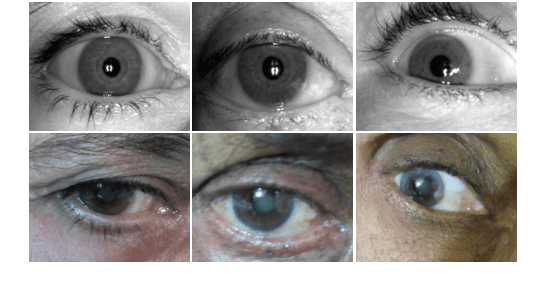

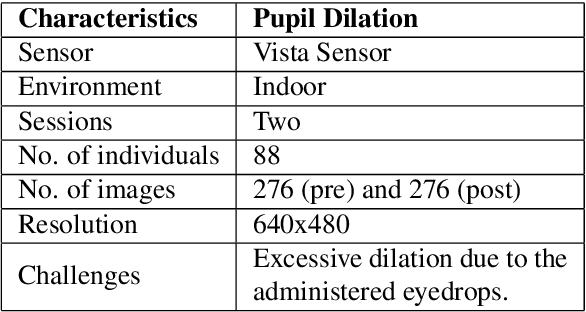

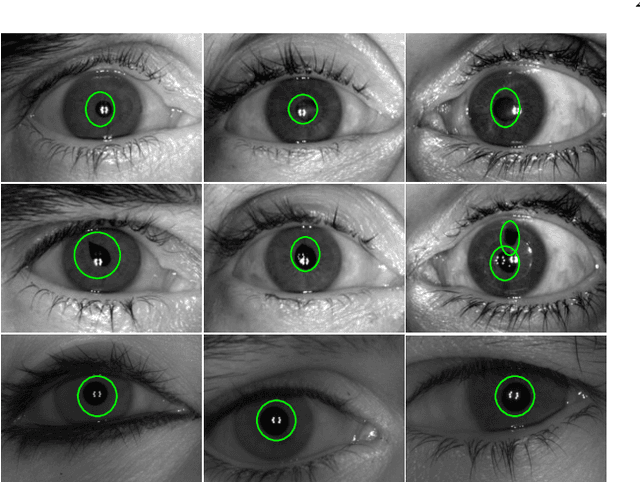

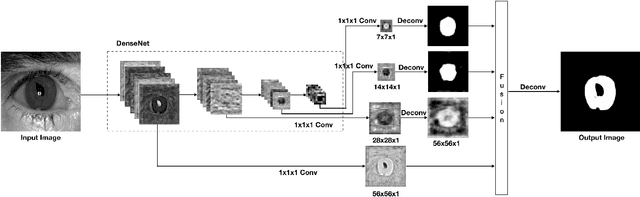

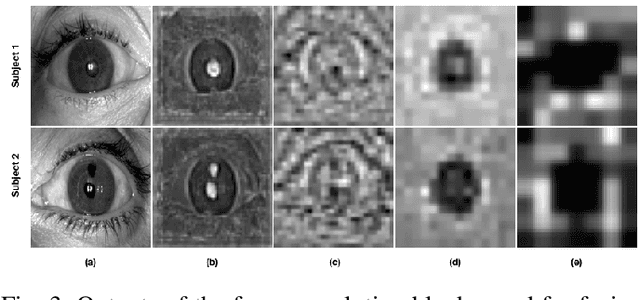

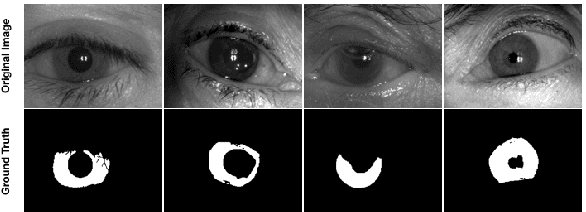

SegDenseNet: Iris Segmentation for Pre and Post Cataract Surgery

Apr 19, 2018

Abstract: Cataract is caused by various factors such as age, trauma, genetics, smoking and substance consumption, and radiation. It is one of the most common ophthalmic diseases worldwide and can potentially affect iris-based biometric systems. India, which hosts the largest biometrics project in the world, has about 8 million people undergoing cataract surgery annually. While existing research shows that cataract does not have a major impact on iris recognition, our observations suggest that iris segmentation approaches are not well equipped to handle cataract or post-cataract surgery cases. As a result, failures in iris segmentation affect the overall recognition performance. This paper presents an efficient iris segmentation algorithm that handles variations due to cataract and post-cataract surgery. The proposed algorithm, termed SegDenseNet, is a deep learning algorithm based on DenseNets. Experiments on the IIITD Cataract database show that improving the segmentation enhances identification by up to 25% across different sensors and matchers.

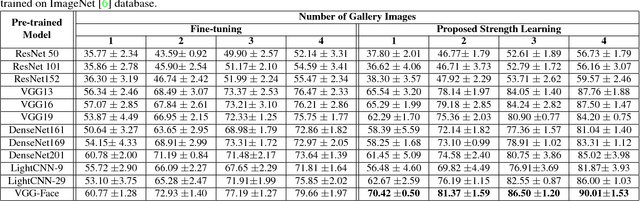

Learning Structure and Strength of CNN Filters for Small Sample Size Training

Mar 30, 2018

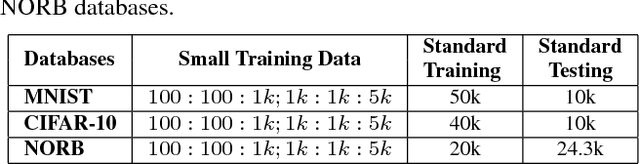

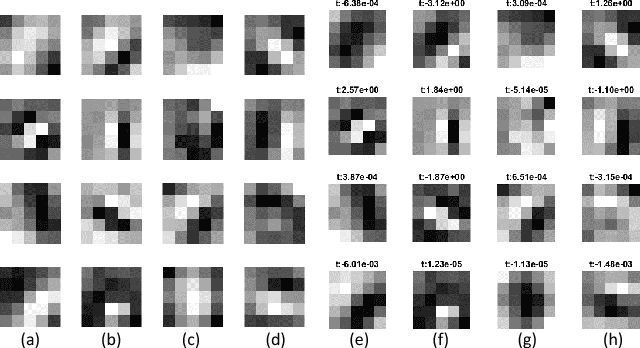

Abstract: Convolutional Neural Networks (CNNs) have provided state-of-the-art results in several computer vision problems. However, because of their large number of parameters, CNNs require a large number of training samples, which is a limiting factor for small sample size problems. To address this limitation, we propose SSF-CNN, which focuses on learning the structure and strength of filters. The structure of the filter is initialized using a dictionary-based filter learning algorithm, and the strength of the filter is learned using the small sample training data. The architecture provides the flexibility of training with both small and large training databases and yields good accuracies even with small training data. The effectiveness of the algorithm is first demonstrated on the MNIST, CIFAR10, and NORB databases with a varying number of training samples. The results show that SSF-CNN significantly reduces the number of parameters required for training while providing high accuracies on the test databases. On small sample size problems such as newborn face recognition and Omniglot, it yields state-of-the-art results. Specifically, on the IIITD Newborn Face Database, the results demonstrate an improvement in rank-1 identification accuracy of at least 10%.
