Risto Miikkulainen

Quantized Evolution Strategies: High-precision Fine-tuning of Quantized LLMs at Low-precision Cost

Feb 03, 2026

Abstract:Post-Training Quantization (PTQ) is essential for deploying Large Language Models (LLMs) on memory-constrained devices, yet it renders models static and difficult to fine-tune. Standard fine-tuning paradigms, including Reinforcement Learning (RL), fundamentally rely on backpropagation and high-precision weights to compute gradients. Thus they cannot be used on quantized models, where the parameter space is discrete and non-differentiable. While Evolution Strategies (ES) offer a backpropagation-free alternative, optimization of the quantized parameters can still fail due to vanishing or inaccurate gradients. This paper introduces Quantized Evolution Strategies (QES), an optimization paradigm that performs full-parameter fine-tuning directly in the quantized space. QES is based on two innovations: (1) it integrates accumulated error feedback to preserve high-precision gradient signals, and (2) it utilizes a stateless seed replay to reduce memory usage to low-precision inference levels. QES significantly outperforms the state-of-the-art zeroth-order fine-tuning method on arithmetic reasoning tasks, making direct fine-tuning for quantized models possible. It therefore opens up the possibility for scaling up LLMs entirely in the quantized space. The source code is available at https://github.com/dibbla/Quantized-Evolution-Strategies .
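To illustrate why accumulated error feedback matters on a discrete grid, here is a minimal sketch; it is not the QES implementation. It assumes a uniform quantization grid with step `scale`, and the names `quantized_step`, `residual`, and `scale` are hypothetical. It also stores the residual explicitly, which is simpler than the stateless seed replay mentioned in the abstract.

```python
def quantized_step(w_q, update, residual, scale):
    """Apply a real-valued update to integer grid weights, carrying rounding error forward.

    Hypothetical helper for illustration only (uniform grid, explicit residual).
    """
    new_w, new_res = [], []
    for w, u, r in zip(w_q, update, residual):
        target = u + r                    # update plus the error left over from earlier steps
        delta = round(target / scale)     # number of whole grid steps to move
        new_w.append(w + delta)
        new_res.append(target - delta * scale)  # part of the update not yet applied
    return new_w, new_res


scale = 0.1                      # assumed grid spacing
update = [0.03, -0.02, 0.0]      # every component is smaller than half a grid step

w_naive = [0, 0, 0]              # rounds each update independently
w_ef = [0, 0, 0]                 # uses error feedback
residual = [0.0, 0.0, 0.0]

for _ in range(10):
    w_naive = [w + round(u / scale) for w, u in zip(w_naive, update)]
    w_ef, residual = quantized_step(w_ef, update, residual, scale)

print(w_naive)  # the naive weights never leave the starting grid point
print(w_ef)     # the error-feedback weights track the accumulated update
```

In this toy setting the naive rule discards every sub-step update, while the error-feedback rule moves once the carried residual crosses half a grid step.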

Fine-Tuning Language Models to Know What They Know

Feb 02, 2026

Abstract:Metacognition is a critical component of intelligence, specifically regarding the awareness of one's own knowledge. While humans rely on shared internal memory for both answering questions and reporting their knowledge state, this dependency in LLMs remains underexplored. This study proposes a framework to measure metacognitive ability $d_{\rm{type2}}'$ using a dual-prompt method, followed by the introduction of Evolution Strategy for Metacognitive Alignment (ESMA) to bind a model's internal knowledge to its explicit behaviors. ESMA demonstrates robust generalization across diverse untrained settings, indicating an enhancement in the model's ability to reference its own knowledge. Furthermore, parameter analysis attributes these improvements to a sparse set of significant modifications.
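As a rough illustration of a type-2 sensitivity measure, the sketch below uses the standard signal-detection formula $d' = z(\text{hit rate}) - z(\text{false-alarm rate})$. The paper's exact definition of $d_{\rm{type2}}'$ may differ. The function name `type2_dprime` is hypothetical, and the mapping of the two prompts to `correct` (answer prompt) and `confident` (knowledge-report prompt) is an assumption.

```python
from statistics import NormalDist


def type2_dprime(correct, confident):
    """Standard signal-detection d' applied to knowledge reports (illustrative only).

    correct[i]   -- True if the model answered question i correctly (assumed answer prompt)
    confident[i] -- True if the model reported knowing the answer (assumed report prompt)
    Rates of exactly 0 or 1 are not handled here; inv_cdf raises an error for them.
    """
    n_correct = sum(1 for c in correct if c)
    n_incorrect = len(correct) - n_correct
    hits = sum(1 for c, f in zip(correct, confident) if c and f)
    false_alarms = sum(1 for c, f in zip(correct, confident) if not c and f)
    z = NormalDist().inv_cdf          # inverse of the standard normal CDF
    return z(hits / n_correct) - z(false_alarms / n_incorrect)


# Ten correct answers (eight reported as known), ten incorrect (two reported as known).
correct = [True] * 10 + [False] * 10
confident = [True] * 8 + [False] * 2 + [True] * 2 + [False] * 8
d_prime = type2_dprime(correct, confident)
print(round(d_prime, 3))
```

A value near zero would mean the knowledge reports carry no information about correctness; larger values mean the reports separate known from unknown items more cleanly.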

The Blessing of Dimensionality in LLM Fine-tuning: A Variance-Curvature Perspective

Jan 30, 2026

Abstract:Weight-perturbation evolution strategies (ES) can fine-tune billion-parameter language models with surprisingly small populations (e.g., $N\!\approx\!30$), contradicting classical zeroth-order curse-of-dimensionality intuition. We also observe a second seemingly separate phenomenon: under fixed hyperparameters, the stochastic fine-tuning reward often rises, peaks, and then degrades in both ES and GRPO. We argue that both effects reflect a shared geometric property of fine-tuning landscapes: they are low-dimensional in curvature. A small set of high-curvature dimensions dominates improvement, producing (i) heterogeneous time scales that yield rise-then-decay under fixed stochasticity, as captured by a minimal quadratic stochastic-ascent model, and (ii) degenerate improving updates, where many random perturbations share similar components along these directions. Using ES as a geometric probe on fine-tuning reward landscapes of GSM8K, ARC-C, and WinoGrande across Qwen2.5-Instruct models (0.5B--7B), we show that reward-improving perturbations remain empirically accessible with small populations across scales. Together, these results reconcile ES scalability with non-monotonic training dynamics and suggest that high-dimensional fine-tuning may admit a broader class of viable optimization methods than worst-case theory implies.
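The low-dimensional-curvature argument can be illustrated with a toy experiment; this is a sketch under strong assumptions, not the paper's setup. The reward is a hypothetical quadratic in which only the first `k` of `d` coordinates have curvature, and a plain antithetic ES with a population of 30 perturbation pairs is run on it. The names `reward` and `es_step` and all hyperparameter values are illustrative. The toy reward is noiseless, so it does not reproduce the rise-then-decay behavior.

```python
import random


def reward(x, k):
    """Toy landscape: only the first k coordinates have curvature; the rest are flat."""
    return -sum(v * v for v in x[:k])


def es_step(x, k, rng, pop=30, sigma=0.1, lr=0.05):
    """One antithetic ES update using `pop` mirrored Gaussian perturbation pairs."""
    d = len(x)
    grad = [0.0] * d
    for _ in range(pop):
        eps = [rng.gauss(0.0, 1.0) for _ in range(d)]
        plus = reward([a + sigma * e for a, e in zip(x, eps)], k)
        minus = reward([a - sigma * e for a, e in zip(x, eps)], k)
        weight = (plus - minus) / (2.0 * sigma * pop)   # finite-difference weight
        for i in range(d):
            grad[i] += weight * eps[i]
    return [a + lr * g for a, g in zip(x, grad)]


rng = random.Random(0)
d, k = 200, 3                 # 200 parameters, 3 of which matter (assumed values)
x = [1.0] * d
initial = reward(x, k)
for _ in range(40):
    x = es_step(x, k, rng)
final = reward(x, k)
print(initial, final)
```

Because every perturbation has some component along the few curved directions, the population size needed for progress here depends on `k` rather than on `d`, which is the intuition the abstract describes.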

Solving a Million-Step LLM Task with Zero Errors

Nov 12, 2025

Abstract:LLMs have achieved remarkable breakthroughs in reasoning, insights, and tool use, but chaining these abilities into extended processes at the scale of those routinely executed by humans, organizations, and societies has remained out of reach. The models have a persistent error rate that prevents scale-up: for instance, recent experiments in the Towers of Hanoi benchmark domain showed that the process inevitably becomes derailed after at most a few hundred steps. Thus, although LLM research is often still benchmarked on tasks with relatively few dependent logical steps, there is increasing attention on the ability (or inability) of LLMs to perform long-range tasks. This paper describes MAKER, the first system that successfully solves a task with over one million LLM steps with zero errors, and, in principle, scales far beyond this level. The approach relies on an extreme decomposition of a task into subtasks, each of which can be tackled by focused microagents. The high level of modularity resulting from the decomposition allows error correction to be applied at each step through an efficient multi-agent voting scheme. This combination of extreme decomposition and error correction makes scaling possible. Thus, the results suggest that instead of relying on continual improvement of current LLMs, massively decomposed agentic processes (MDAPs) may provide a way to efficiently solve problems at the level of organizations and societies.
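The following sketch shows why a persistent per-step error rate blocks long chains and how per-step voting could counter it. It is an illustration under assumptions, not the MAKER implementation: the abstract does not specify the voting rule, so the "keep sampling until one answer leads by `k` votes" rule, the function names, and the scripted `sample` callable standing in for a microagent are all hypothetical.

```python
from collections import Counter


def chain_success(p_error, n_steps):
    """Probability that n_steps independent steps all succeed at per-step error rate p_error."""
    return (1.0 - p_error) ** n_steps


def vote_until_ahead(sample, k, max_samples=100):
    """Draw answers from `sample()` until the leading answer is ahead by k votes."""
    counts = Counter()
    for _ in range(max_samples):
        counts[sample()] += 1
        ranked = counts.most_common(2)
        runner_up = ranked[1][1] if len(ranked) > 1 else 0
        if ranked[0][1] - runner_up >= k:
            return ranked[0][0]
    raise RuntimeError("no answer reached the required lead")


# A 1% per-step error rate makes even a 1000-step chain unlikely to finish cleanly.
print(chain_success(0.01, 1000))

# Scripted votes stand in for repeated microagent calls on a single step.
votes = iter(["A", "B", "A", "A", "A"])
winner = vote_until_ahead(lambda: next(votes), k=2)
print(winner)
```

Voting of this kind only helps when each step is small enough that independent samples usually agree on the correct answer, which is where the extreme decomposition comes in.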

Leveraging Evolutionary Surrogate-Assisted Prescription in Multi-Objective Chlorination Control Systems

Aug 26, 2025

Abstract:This short, written report introduces the idea of Evolutionary Surrogate-Assisted Prescription (ESP) and presents preliminary results on its potential use in training real-world agents as a part of the 1st AI for Drinking Water Chlorination Challenge at IJCAI-2025. This work was done by a team from Project Resilience, an organization interested in bridging AI to real-world problems.

The Odyssey of the Fittest: Can Agents Survive and Still Be Good?

Feb 08, 2025

Abstract:As AI models grow in power and generality, understanding how agents learn and make decisions in complex environments is critical to promoting ethical behavior. This paper examines the ethical implications of implementing biological drives, specifically self-preservation, into three different agents. A Bayesian agent optimized with NEAT, a Bayesian agent optimized with stochastic variational inference, and a GPT-4o agent play a simulated, LLM-generated, text-based adventure game. The agents select actions at each scenario to survive, adapting to increasingly challenging scenarios. Post-simulation analysis evaluates the ethical scores of the agents' decisions, uncovering the tradeoffs they navigate to survive. Specifically, analysis finds that when danger increases, agents ignore ethical considerations and opt for unethical behavior. The agents' collective behavior, trading ethics for survival, suggests that prioritizing survival increases the risk of unethical behavior. In the context of AGI, designing agents to prioritize survival may amplify the likelihood of unethical decision making and unintended emergent behaviors, raising fundamental questions about goal design in AI safety research.

How the Stroop Effect Arises from Optimal Response Times in Laterally Connected Self-Organizing Maps

Feb 05, 2025

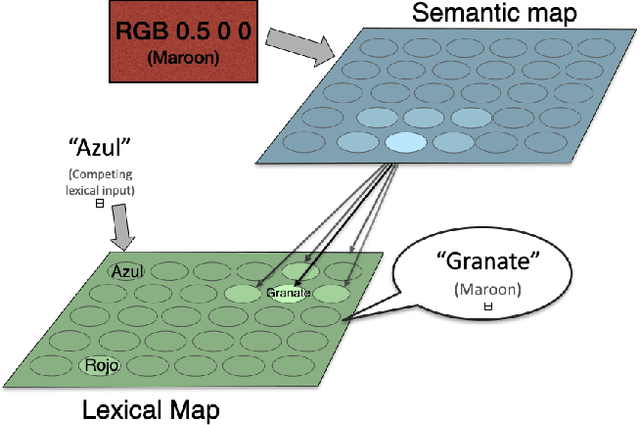

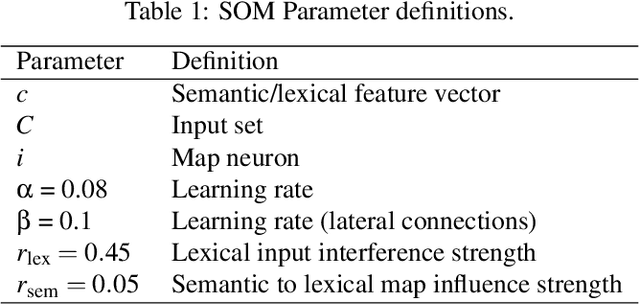

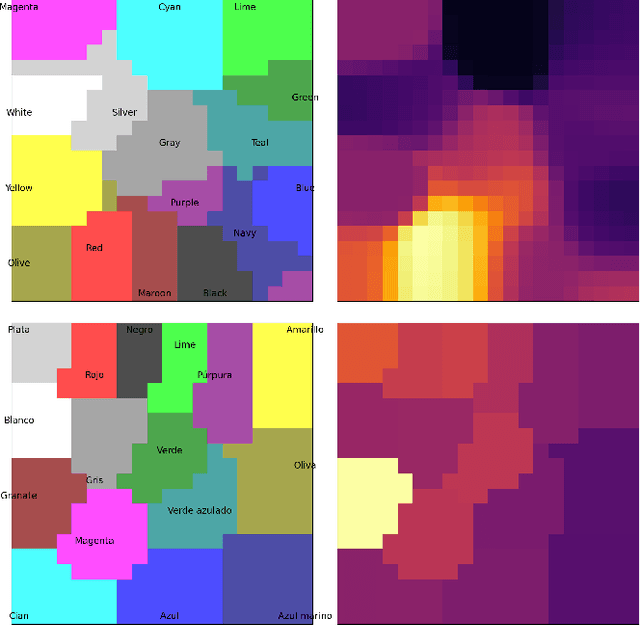

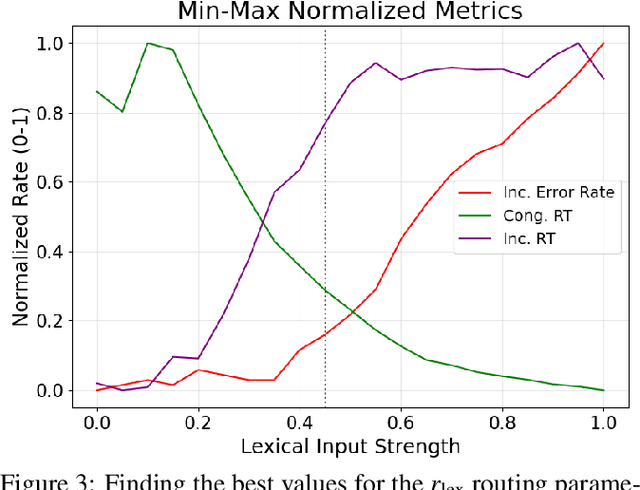

Abstract:The Stroop effect refers to cognitive interference in a color-naming task: When the color and the word do not match, the response is slower and more likely to be incorrect. The Stroop task is used to assess cognitive flexibility, selective attention, and executive function. This paper implements the Stroop task with self-organizing maps (SOMs): Target color and the competing word are inputs for the semantic and lexical maps, associative connections bring color information to the lexical map, and lateral connections combine their effects over time. The model achieved an overall accuracy of 84.2%, with significantly fewer errors and faster responses in congruent compared to no-input and incongruent conditions. The Stroop effect in the model is a side effect of optimizing response times, and can thus be seen as a cost associated with overall efficient performance. The model can further serve in studying neurologically inspired cognitive control and related phenomena.

Unlocking the Potential of Global Human Expertise

Oct 31, 2024

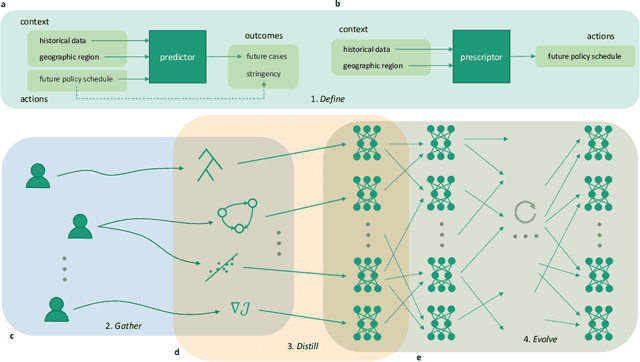

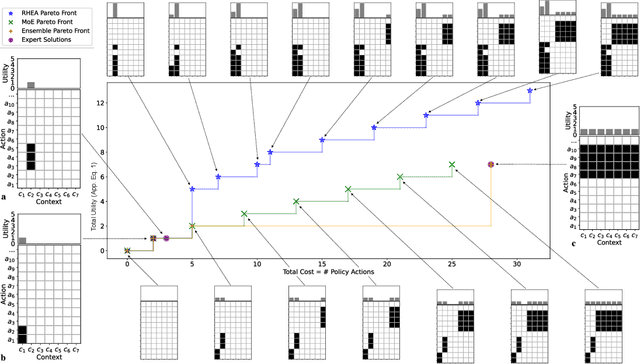

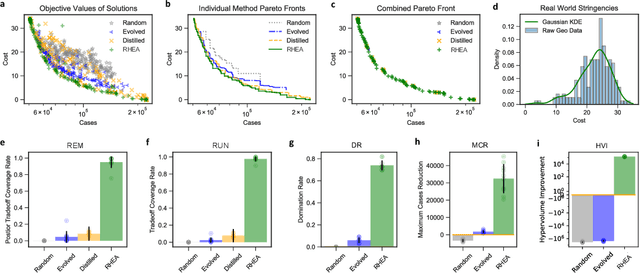

Abstract:Solving societal problems on a global scale requires the collection and processing of ideas and methods from diverse sets of international experts. As the number and diversity of human experts increase, so does the likelihood that elements in this collective knowledge can be combined and refined to discover novel and better solutions. However, it is difficult to identify, combine, and refine complementary information in an increasingly large and diverse knowledge base. This paper argues that artificial intelligence (AI) can play a crucial role in this process. An evolutionary AI framework, termed RHEA, fills this role by distilling knowledge from diverse models created by human experts into equivalent neural networks, which are then recombined and refined in a population-based search. The framework was implemented in a formal synthetic domain, demonstrating that it is transparent and systematic. It was then applied to the results of the XPRIZE Pandemic Response Challenge, in which over 100 teams of experts across 23 countries submitted models based on diverse methodologies to predict COVID-19 cases and suggest non-pharmaceutical intervention policies for 235 nations, states, and regions across the globe. Building upon this expert knowledge, by recombining and refining the 169 resulting policy suggestion models, RHEA discovered a broader and more effective set of policies than either AI or human experts alone, as evaluated based on real-world data. The results thus suggest that AI can play a crucial role in realizing the potential of human expertise in global problem-solving.

GPU-Accelerated Rule Evaluation and Evolution

Jun 03, 2024

Abstract:This paper introduces an innovative approach to boost the efficiency and scalability of Evolutionary Rule-based machine Learning (ERL), a key technique in explainable AI. While traditional ERL systems can distribute processes across multiple CPUs, fitness evaluation of candidate rules is a bottleneck, especially with large datasets. The method proposed in this paper, AERL (Accelerated ERL), solves this problem in two ways. First, by adopting GPU-optimized rule sets through a tensorized representation within the PyTorch framework, AERL mitigates the bottleneck and accelerates fitness evaluation significantly. Second, AERL takes further advantage of the GPUs by fine-tuning the rule coefficients via back-propagation, thereby improving search space exploration. Experimental evidence confirms that AERL search is faster and more effective, thus empowering explainable artificial intelligence.

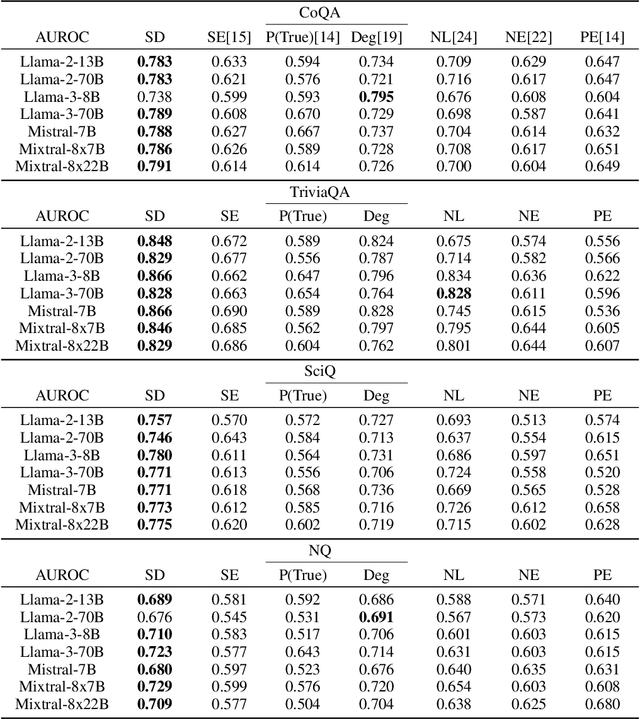

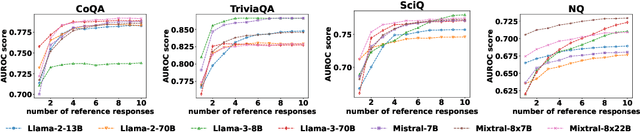

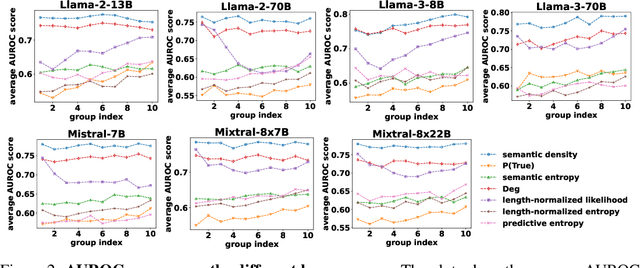

Semantic Density: Uncertainty Quantification in Semantic Space for Large Language Models

May 22, 2024

Abstract:With the widespread application of Large Language Models (LLMs) to various domains, concerns regarding the trustworthiness of LLMs in safety-critical scenarios have been raised, due to their unpredictable tendency to hallucinate and generate misinformation. Existing LLMs do not have an inherent functionality to provide the users with an uncertainty metric for each response they generate, making it difficult to evaluate trustworthiness. Although a number of works aim to develop uncertainty quantification methods for LLMs, they have fundamental limitations, such as being restricted to classification tasks, requiring additional training and data, considering only lexical instead of semantic information, and being prompt-wise but not response-wise. A new framework is proposed in this paper to address these issues. Semantic density extracts uncertainty information for each response from a probability distribution perspective in semantic space. It has no restriction on task types and is "off-the-shelf" for new models and tasks. Experiments on seven state-of-the-art LLMs, including the latest Llama 3 and Mixtral-8x22B models, on four free-form question-answering benchmarks demonstrate the superior performance and robustness of semantic density compared to prior approaches.
