Richard James Housden

Cardiac ultrasound simulation for autonomous ultrasound navigation

Feb 09, 2024

Abstract: Ultrasound is well-established as an imaging modality for diagnostic and interventional purposes. However, the image quality varies with operator skills as acquiring and interpreting ultrasound images requires extensive training due to the imaging artefacts, the range of acquisition parameters and the variability of patient anatomies. Automating the image acquisition task could improve acquisition reproducibility and quality but training such an algorithm requires large amounts of navigation data, not saved in routine examinations. Thus, we propose a method to generate large amounts of ultrasound images from other modalities and from arbitrary positions, such that this pipeline can later be used by learning algorithms for navigation. We present a novel simulation pipeline which uses segmentations from other modalities, an optimized volumetric data representation and GPU-accelerated Monte Carlo path tracing to generate view-dependent and patient-specific ultrasound images. We extensively validate the correctness of our pipeline with a phantom experiment, where structures' sizes, contrast and speckle noise properties are assessed. Furthermore, we demonstrate its usability to train neural networks for navigation in an echocardiography view classification experiment by generating synthetic images from more than 1000 patients. Networks pre-trained with our simulations achieve significantly superior performance in settings where large real datasets are not available, especially for under-represented classes. The proposed approach allows for fast and accurate patient-specific ultrasound image generation, and its usability for training networks for navigation-related tasks is demonstrated.

Design and integration of a parallel, soft robotic end-effector for extracorporeal ultrasound

Jun 11, 2019

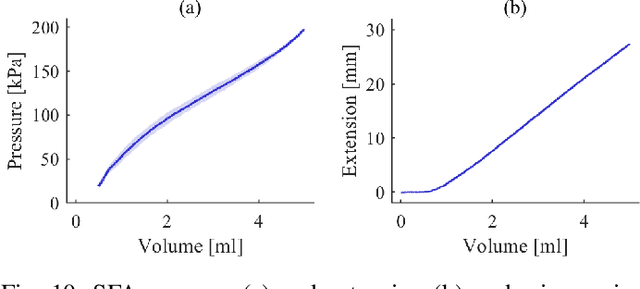

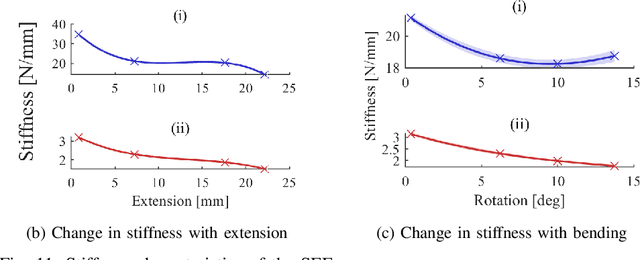

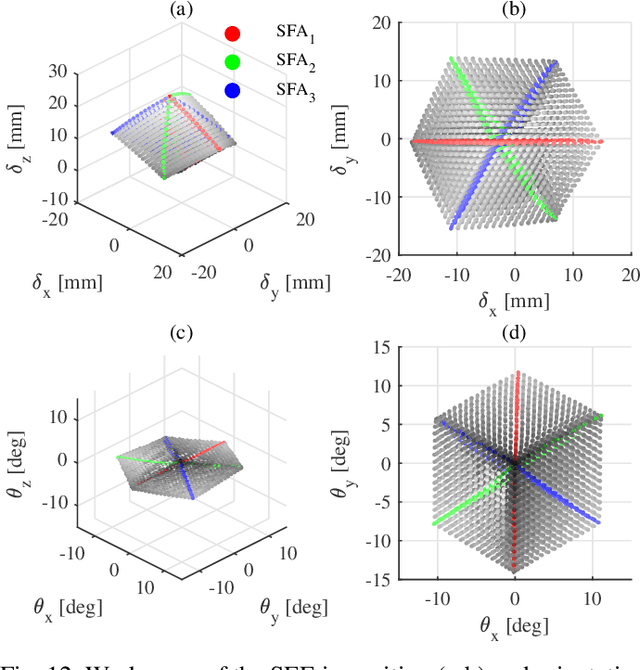

Abstract: In this work we address limitations in state-of-the-art ultrasound robots by designing and integrating the first soft robotic system for ultrasound imaging. It makes use of the inherent qualities of soft robotics technologies to establish a safe, adaptable interaction between ultrasound probe and patient. We acquire clinical data to establish the movement ranges and force levels required in prenatal foetal ultrasound imaging and design our system accordingly. The end-effector's stiffness characteristics allow it to reach the desired workspace while maintaining a stable contact between ultrasound probe and patient under the determined loads. The system exhibits a high degree of safety due to its inherent compliance in the transversal direction. We verify the mechanical characteristics of the end-effector, derive and validate a kinetostatic model and demonstrate the robot's controllability with and without external loading. The imaging capabilities of the robot are shown in a tele-operated setting on a foetal phantom. The design exhibits the desired stiffness characteristics, with a high stiffness along the ultrasound transducer and a high compliance in the lateral direction. Twist is constrained using a braided mesh reinforcement. The model can accurately predict the end-effector pose with a mean error of about 6% in position and 7% in orientation. With an average position error of 0.39 mm, the derived controller is able to track a target pose efficiently both with and without externally applied loads. Finally, the images acquired with the system are equal in quality to those from a manual sonographer scan.
