Richard C. Hendriks

Wideband Relative Transfer Function (RTF) Estimation Exploiting Frequency Correlations

Jul 19, 2024

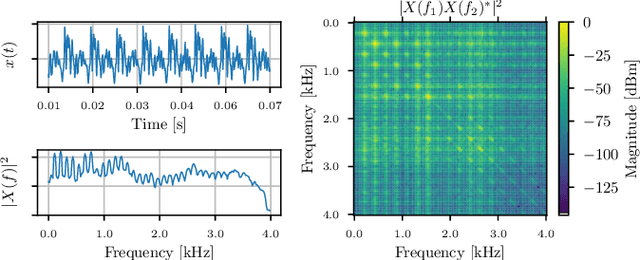

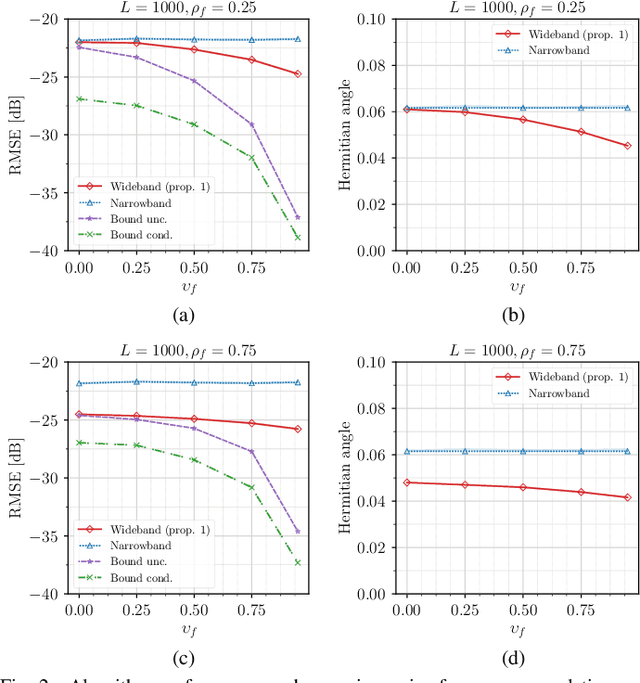

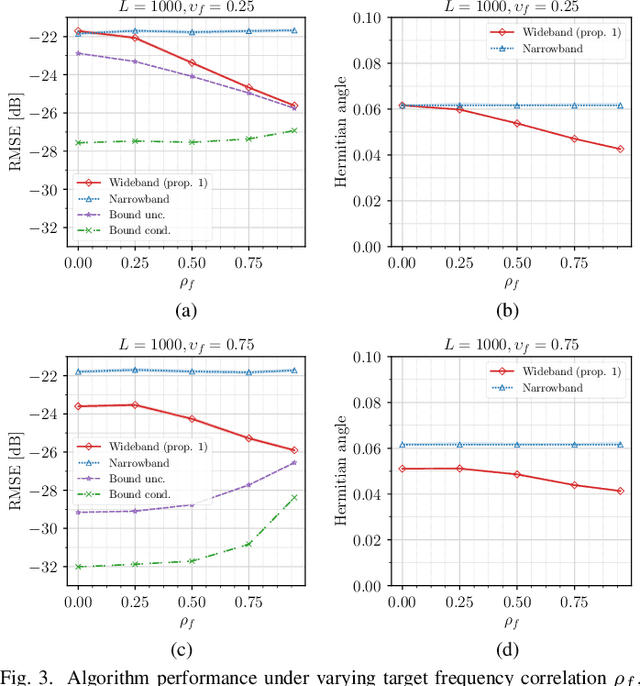

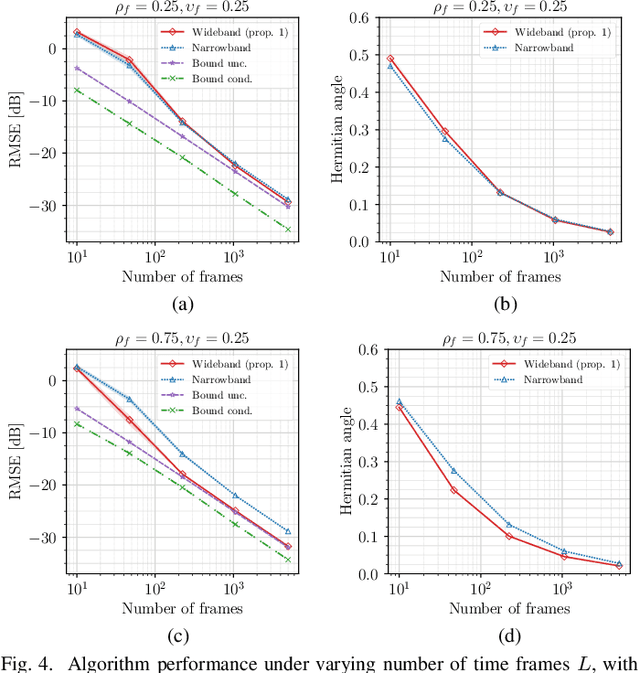

Abstract: This article focuses on estimating relative transfer functions (RTFs) for beamforming applications. While traditional methods assume that spectra are uncorrelated, this assumption is often violated in practice due to natural phenomena such as the Doppler effect, artificial manipulations such as time-domain windowing, or the non-stationary nature of signals such as speech. To address this, we propose an RTF estimation technique that leverages spectral and spatial correlations through subspace analysis. To overcome the challenge of estimating second-order spectral statistics from real data, we employ a phase-adjusted estimator originally proposed in the context of engine fault detection. Additionally, we derive Cramér-Rao bounds (CRBs) for the RTF estimation task, which provide theoretical insight into the achievable estimation accuracy. The bounds show that the channel can be estimated more accurately if the noise or the target exhibits spectral correlations. Experiments on real and synthetic data show that our technique outperforms the narrowband maximum-likelihood estimator when the target exhibits spectral correlations. Although the accuracy of the proposed algorithm is generally close to the bound, there is some room for improvement, especially in the presence of noise with high spectral correlation. While channel estimation has diverse applications, we demonstrate the method in the context of array processing for speech.
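
The narrowband baseline mentioned above estimates one RTF per frequency bin from spatial statistics alone. As a point of reference only, here is a minimal NumPy sketch of one common narrowband subspace estimator, covariance whitening, assuming the noisy covariance `Rx` and the noise covariance `Rn` of a single bin are known exactly and the target is a rank-one source. This is a swapped-in textbook technique, not the wideband, frequency-correlation-exploiting method proposed in the article; the function name and the test data are hypothetical.

```python
import numpy as np


def rtf_covariance_whitening(Rx, Rn, ref=0):
    """Narrowband RTF estimate for one frequency bin via covariance whitening.

    Rx  : (M, M) Hermitian covariance of the noisy microphone signals
    Rn  : (M, M) Hermitian positive-definite noise covariance
    ref : index of the reference microphone (its RTF entry becomes 1)
    """
    # Whitening factor from the Cholesky decomposition Rn = L L^H
    L = np.linalg.cholesky(Rn)
    L_inv = np.linalg.inv(L)
    # Whitened noisy covariance L^{-1} Rx L^{-H} = s2 (L^{-1} a)(L^{-1} a)^H + I
    Rw = L_inv @ Rx @ L_inv.conj().T
    # Principal eigenvector (eigh returns eigenvalues in ascending order)
    _, vecs = np.linalg.eigh(Rw)
    u = vecs[:, -1]
    # De-whiten and normalize to the reference microphone
    h = L @ u
    return h / h[ref]


# Hypothetical single-bin example with M = 4 microphones
rng = np.random.default_rng(0)
M = 4
a = rng.standard_normal(M) + 1j * rng.standard_normal(M)        # acoustic transfer function
B = rng.standard_normal((M, M)) + 1j * rng.standard_normal((M, M))
Rn = B @ B.conj().T + M * np.eye(M)                             # noise covariance
Rx = 2.0 * np.outer(a, a.conj()) + Rn                           # rank-one target plus noise
h = rtf_covariance_whitening(Rx, Rn)
print(np.allclose(h, a / a[0]))  # True (exact covariances, only rounding error remains)
```

In practice `Rx` and `Rn` must be estimated from finite data, so the per-bin estimate is noisy; the article's contribution is to reduce that error by also exploiting correlations across frequency.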

Optimal Pilot Design for OTFS in Linear Time-Varying Channels

Mar 28, 2024

Abstract: This paper investigates the placement of the pilot symbols, as well as the power distribution between the pilot and the communication symbols, in the orthogonal time frequency space (OTFS) modulation scheme. We analyze the pilot placements that minimize the mean squared error (MSE) in estimating the channel taps. In addition, we optimize the average channel capacity by adjusting the power balance between pilot and data symbols, and show that this leads to a significant increase in average capacity. The results provide guidance for choosing the OTFS parameters that maximize capacity. Numerical simulations validate the findings.
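
The pilot/data power trade-off can be illustrated with a deliberately simple toy model that is neither OTFS-specific nor the paper's optimization: a single channel gain h ~ CN(0, 1) is estimated by LMMSE from one pilot, the estimation error is treated as additional Gaussian noise, and the rate is evaluated at the average effective SNR rather than averaged over fading. The frame energy, number of data symbols, and function name below are hypothetical.

```python
import numpy as np


def effective_rate(alpha, total_energy, n_data, noise_var=1.0):
    """Toy rate (bit/symbol) when a fraction alpha of the frame energy goes to the pilot.

    Assumed model (hypothetical): one gain h ~ CN(0, 1), LMMSE-estimated from a
    single pilot; the estimation error is treated as extra Gaussian noise.
    """
    pilot_snr = alpha * total_energy / noise_var
    err_var = 1.0 / (1.0 + pilot_snr)                 # LMMSE error variance of h
    data_power = (1.0 - alpha) * total_energy / n_data
    snr_eff = data_power * (1.0 - err_var) / (noise_var + data_power * err_var)
    return np.log2(1.0 + snr_eff)


# Grid search over the pilot energy fraction (hypothetical frame parameters)
alphas = np.linspace(0.01, 0.99, 99)
rates = effective_rate(alphas, total_energy=100.0, n_data=50)
best = alphas[np.argmax(rates)]
print(f"best pilot fraction ~ {best:.2f}, rate ~ {rates.max():.3f} bit/symbol")
```

Too little pilot energy leaves a large estimation error, while too much starves the data symbols, so the toy rate peaks at an intermediate pilot fraction; the paper addresses the same kind of balance for the actual OTFS channel model.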

A Singular-value-based Marker for the Detection of Atrial Fibrillation Using High-resolution Electrograms and Multi-lead ECG

Jul 06, 2023

Abstract: The severity of atrial fibrillation (AF) can be assessed from intra-operative epicardial measurements (high-resolution electrograms), using metrics such as conduction block (CB) and continuous conduction delay and block (cCDCB). These features capture differences in conduction velocity and wavefront propagation. However, they do not clearly differentiate patients with various degrees of AF while they are in sinus rhythm (SR), so complementary features are needed. In this work, we focus on the morphology of the action potentials and derive features to detect variations in the atrial potential waveforms.

Methods: We show that the spatial variation of atrial potential morphology during a single beat may be described by changes in the singular values of the epicardial measurement matrix. The method is non-parametric and requires little preprocessing. A corresponding singular value map points at areas subject to fractionation and block. Further, we designed an experiment in which electrograms (EGMs) and a multi-lead ECG are measured simultaneously.

Results: The captured data showed that the normalized singular values of the heartbeats are higher during AF than during SR, and that this difference is more pronounced for the (non-invasive) ECG data than for the EGM data, provided the electrodes are positioned at favorable locations.

Conclusion: Overall, the singular-value-based features are a useful indicator for detecting and evaluating AF.

Significance: The proposed method may help identify electropathological regions in the tissue without estimating the local activation time.
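
The core computation behind a singular-value marker can be sketched in a few lines of NumPy. The sketch assumes one beat per electrode is stored as a row of `X` and normalizes by the largest singular value; the abstract does not specify the normalization or how many singular values are used, so both choices, the function name, and the synthetic waveforms are assumptions for illustration.

```python
import numpy as np


def normalized_singular_values(X, k=3):
    """Singular values sigma_2..sigma_k of one beat, divided by sigma_1.

    X : (n_electrodes, n_samples) matrix, one row per electrode for a single beat.
    Dividing by the largest singular value is an assumption of this sketch.
    """
    s = np.linalg.svd(X, compute_uv=False)  # returned in descending order
    return s[1:k] / s[0]


# Hypothetical single-beat data for 16 electrodes
t = np.linspace(0.0, 1.0, 200)
x = (t - 0.5) / 0.05
deflection = np.exp(-x**2)       # smooth monophasic waveform
biphasic = -x * np.exp(-x**2)    # a second, differently shaped component
gains = np.linspace(0.5, 1.5, 16)

# Same morphology on every electrode, only the amplitude changes -> rank one
uniform = np.outer(gains, deflection)
# Morphology changes across electrodes -> energy in the second singular value
varied = uniform + np.outer(gains - 1.0, biphasic)

print("uniform:", normalized_singular_values(uniform))
print("varied: ", normalized_singular_values(varied))
```

When all electrodes record the same waveform up to a gain, the matrix is rank one and the normalized second singular value is essentially zero; morphology that varies across electrodes moves energy into the higher singular values.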

On the Integration of Acoustics and LiDAR: a Multi-Modal Approach to Acoustic Reflector Estimation

Jun 08, 2022

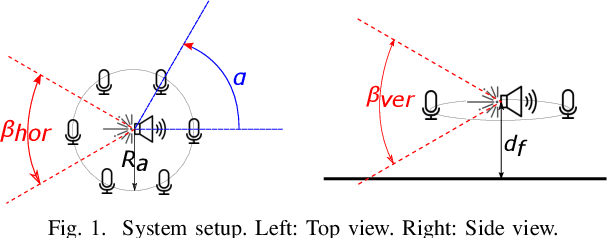

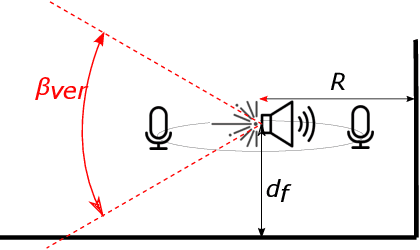

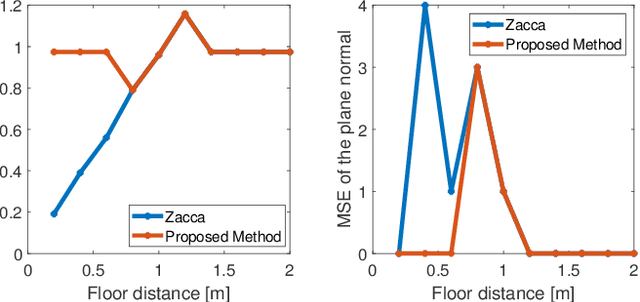

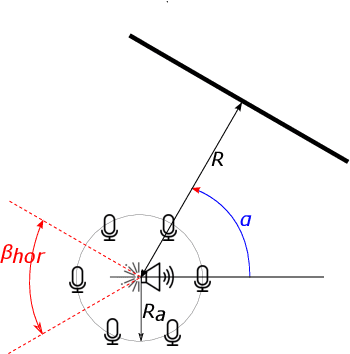

Abstract: Knowledge of the room acoustic properties, e.g., the locations of acoustic reflectors, allows the sound field to be reproduced more faithfully. Current state-of-the-art methods for room boundary detection from microphone measurements typically focus on a two-dimensional setting, which causes a model mismatch in real-life scenarios. Detecting arbitrary reflectors in three dimensions encounters practical limitations, e.g., the need for a spherical array and increased computational complexity. Moreover, loudspeakers may not have the omnidirectional directivity pattern usually assumed in the literature, which makes detecting acoustic reflectors in some directions more challenging. In the proposed method, a LiDAR sensor is added to a loudspeaker to improve the accuracy and robustness of wall detection. This is done in two ways. First, the model mismatch introduced by horizontal reflectors is resolved by detecting these reflectors with the LiDAR sensor, so that their detrimental influence can be removed from the 2D problem during pre-processing. Second, a LiDAR-based method is proposed to compensate for the challenging directions in which the directive loudspeaker emits little energy. We show via simulations that this multi-modal approach, i.e., combining microphone and LiDAR sensors, improves the robustness and accuracy of wall detection.
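
To illustrate how a LiDAR-detected reflector could be accounted for in an acoustic model, the sketch below fits a plane to synthetic LiDAR points by total least squares and predicts the delay of the corresponding first-order reflection with the image-source method. This is an assumed, simplified illustration, not the paper's pre-processing; the positions, noise level, speed of sound, and function names are hypothetical.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at roughly 20 degrees C)


def fit_plane(points):
    """Total-least-squares plane fit to (N, 3) points; returns unit normal n and d with n @ p = d."""
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the plane normal
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    n = vt[-1]
    return n, n @ centroid


def reflection_delay(src, mic, n, d, c=SPEED_OF_SOUND):
    """Propagation delay (s) of the first-order reflection off the plane n @ p = d."""
    image = src - 2.0 * (n @ src - d) * n  # mirror the source across the plane
    return np.linalg.norm(mic - image) / c


# Hypothetical LiDAR returns from a floor at z = 0 with 5 mm noise
rng = np.random.default_rng(0)
xy = rng.uniform(-2.0, 2.0, size=(500, 2))
floor = np.column_stack([xy, 0.005 * rng.standard_normal(500)])
n, d = fit_plane(floor)

src = np.array([0.0, 0.0, 1.2])  # loudspeaker position (hypothetical)
mic = np.array([1.0, 0.0, 1.2])  # microphone position (hypothetical)
tau = reflection_delay(src, mic, n, d)
print(f"floor reflection expected after {1e3 * tau:.2f} ms")
```

A predicted delay of this kind indicates where in a measured response the echo of a horizontal reflector is expected, which could be used to suppress it before a 2D wall-detection step.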

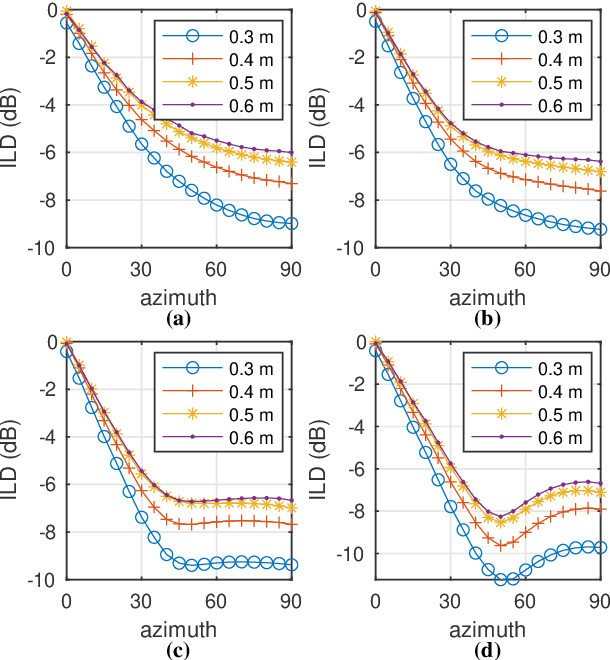

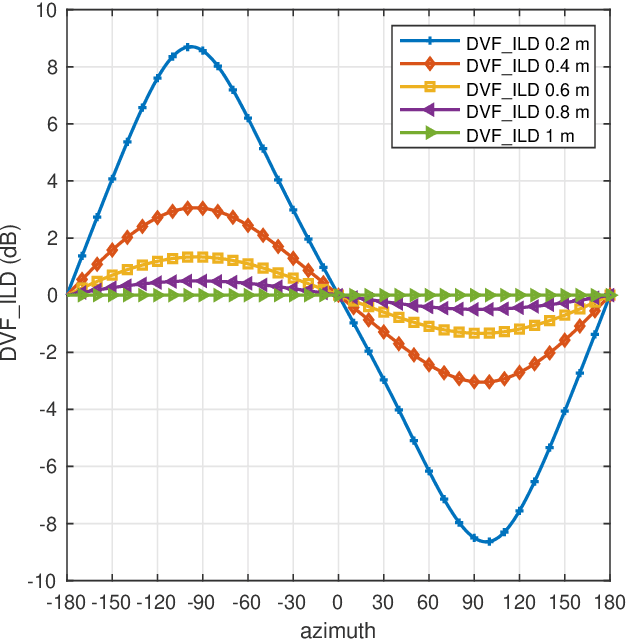

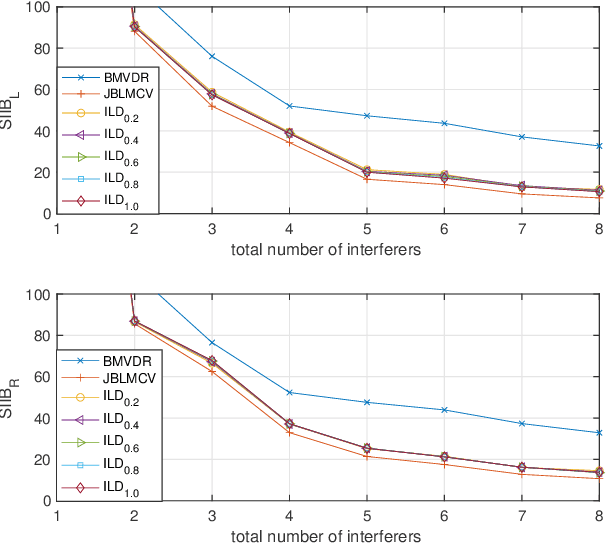

Localization based on enhanced low frequency interaural level difference

Jun 17, 2021

Abstract: Hearing-impaired people are found to have difficulty processing low-frequency interaural time differences, yet the current generation of beamformers does not take this deficiency into account. To tackle this issue, we propose to replace the inaudible low-frequency interaural time differences with interaural level differences. In addition, we introduce and analyze a beamformer that enhances the low-frequency interaural level differences of the sound sources using a near-field transformation. The proposed beamforming problem is relaxed to a convex problem using semi-definite relaxation. Instrumental analysis suggests that the low-frequency interaural level differences are enhanced without reducing the provided intelligibility. A psychoacoustic listening experiment suggests that replacing time differences with level differences improves the localization performance of normal-hearing listeners in an anechoic scene, but not in a reverberant scene.
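
Why moving a source into the near field raises low-frequency ILDs can be seen from spherical spreading alone. The sketch below models the ears as two points in free field (ignoring head diffraction, which is weak at low frequencies) and computes the ILD from the 1/r amplitude decay and the ITD from the path difference. It is a geometric illustration under these assumptions, not the paper's beamformer or its near-field transformation; the ear spacing, speed of sound, and function name are assumed.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s (assumed)
EAR_OFFSET = 0.0875     # m, half the inter-ear distance (assumed typical value)


def free_field_cues(distance, azimuth_deg, c=SPEED_OF_SOUND, a=EAR_OFFSET):
    """Return (ILD in dB, ITD in s) of a point source, left ear relative to right.

    Ears are two points at (-a, 0) (left) and (+a, 0) (right); azimuth 0 deg is
    straight ahead and positive azimuths lie to the left. Only 1/r spreading is
    modeled: no head shadow, no HRTF.
    """
    az = np.deg2rad(azimuth_deg)
    src = distance * np.array([-np.sin(az), np.cos(az)])
    r_left = np.linalg.norm(src - np.array([-a, 0.0]))
    r_right = np.linalg.norm(src - np.array([a, 0.0]))
    ild_db = 20.0 * np.log10(r_right / r_left)  # positive: louder at the left ear
    itd_s = (r_right - r_left) / c              # positive: arrives first at the left ear
    return ild_db, itd_s


for r in (2.0, 0.5, 0.2):
    ild, itd = free_field_cues(r, 60.0)
    print(f"r = {r:.1f} m: ILD = {ild:5.2f} dB, ITD = {1e6 * itd:6.1f} us")
```

For a fixed azimuth the ILD grows as the source distance shrinks, whereas for distant sources it stays close to zero once head shadowing is ignored.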