Jorge Martinez

Performance of Objective Speech Quality Metrics on Languages Beyond Validation Data: A Study of Turkish and Korean

May 22, 2025

Abstract: Objective speech quality measures are widely used to assess the performance of video conferencing platforms and telecommunication systems. They predict human-rated speech quality and are crucial for assessing a system's quality of experience. Despite their widespread use, these measures are developed on a limited set of languages. This can be problematic, since their performance on unseen languages is consequently not guaranteed or even studied. Here we raise awareness of this issue by investigating the performance of two objective speech quality measures (PESQ and ViSQOL) on Turkish and Korean. Using English as a baseline, we show that Turkish samples have significantly higher ViSQOL scores and that the correlation between PESQ and ViSQOL is highest for Turkish male speakers. These results highlight the need to explore biases across metrics and to develop a labeled speech quality dataset covering a variety of languages.

Loudspeaker Beamforming to Enhance Speech Recognition Performance of Voice Driven Applications

Jan 14, 2025

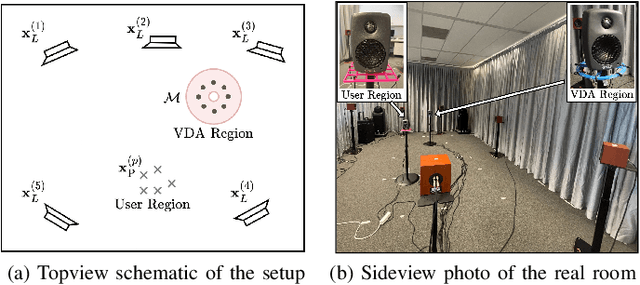

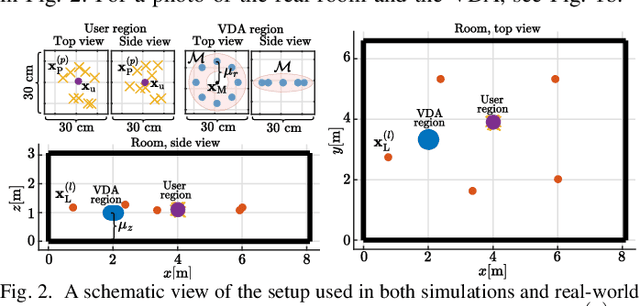

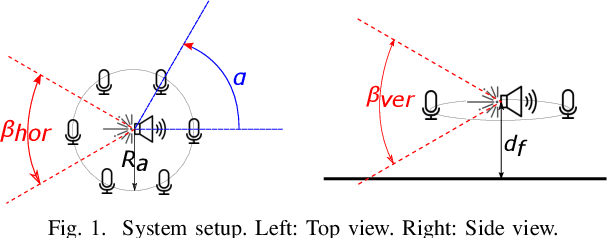

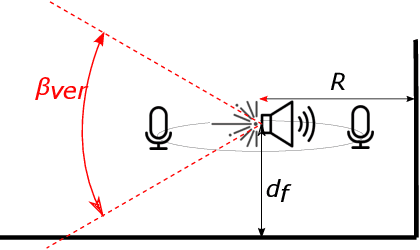

Abstract: In this paper we propose a robust loudspeaker beamforming algorithm that enhances the performance of voice driven applications (VDAs) in scenarios where the loudspeakers introduce the majority of the noise, e.g., when music is playing loudly. The loudspeaker beamformer modifies the loudspeaker playback signals to create a low-acoustic-energy region around the device that implements automatic speech recognition for the VDA. The algorithm utilises a distortion measure based on human auditory perception to limit the distortion perceived by human listeners. Simulations and real-world experiments show that the proposed loudspeaker beamformer improves the speech recognition performance in all tested scenarios. Moreover, the algorithm allows the acoustic energy around the VDA device to be reduced further, at the expense of reduced objective audio quality at the listener's location.

On the Integration of Acoustics and LiDAR: a Multi-Modal Approach to Acoustic Reflector Estimation

Jun 08, 2022

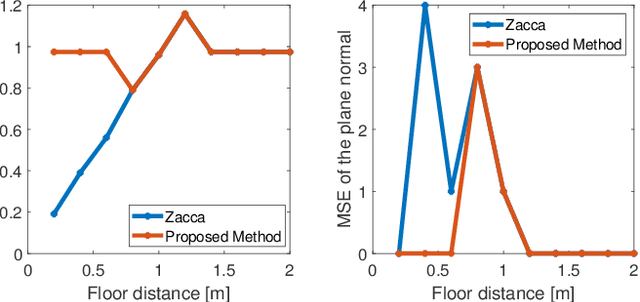

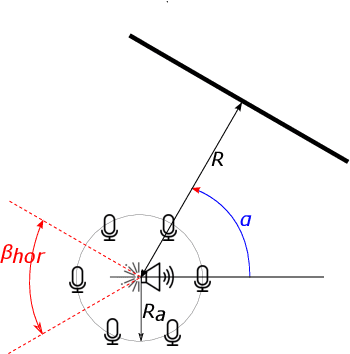

Abstract: Knowledge of the room's acoustic properties, e.g., the location of acoustic reflectors, allows the sound field to be reproduced more closely to what was intended. Current state-of-the-art methods for room boundary detection using microphone measurements typically focus on a two-dimensional setting, causing a model mismatch when employed in real-life scenarios. Detection of arbitrary reflectors in three dimensions encounters practical limitations, e.g., the need for a spherical array and the increased computational complexity. Moreover, loudspeakers may not have an omnidirectional directivity pattern, as is usually assumed in the literature, making the detection of acoustic reflectors in some directions more challenging. In the proposed method, a LiDAR sensor is added to a loudspeaker to improve wall detection accuracy and robustness. This is done in two ways. First, the model mismatch introduced by horizontal reflectors is resolved by detecting these reflectors with the LiDAR sensor, so that their detrimental influence can be eliminated from the 2D problem in pre-processing. Second, a LiDAR-based method is proposed to compensate for the challenging directions in which the directive loudspeaker emits little energy. We show via simulations that this multi-modal approach, i.e., combining microphone and LiDAR sensors, improves the robustness and accuracy of wall detection.

Unsupervised edge map scoring: a statistical complexity approach

Feb 10, 2014

Abstract: We propose a new Statistical Complexity Measure (SCM) to assess the quality of edge maps without Ground Truth (GT) knowledge. The measure is the product of two indices: an *Equilibrium* index $\mathcal{E}$, obtained by projecting the edge map onto a family of edge patterns, and an *Entropy* index $\mathcal{H}$, defined as a function of the Kolmogorov-Smirnov (KS) statistic. This new measure can be used for performance characterization, which includes: (i) the specific evaluation of an algorithm (intra-technique process) in order to identify its best parameters, and (ii) the comparison of different algorithms (inter-technique process) in order to classify them according to their quality. Results obtained on images from the South Florida and Berkeley databases show that our approach significantly improves on Pratt's Figure of Merit (PFoM), the standard objective reference-based edge map evaluation measure, because it takes more features into account in its evaluation.

Accuracy of MAP segmentation with hidden Potts and Markov mesh prior models via Path Constrained Viterbi Training, Iterated Conditional Modes and Graph Cut based algorithms

Jul 11, 2013

Abstract: In this paper, we study the statistical classification accuracy of two different Markov field environments for pixelwise image segmentation, considering the labels of the image as hidden states and obtaining the estimate of such labels as a solution of the MAP equation. The emission distribution is assumed to be the same in all models, and the difference lies in the Markovian prior hypothesis made over the labeling random field. The a priori labeling knowledge is modeled with a) a second order anisotropic Markov Mesh and b) a classical isotropic Potts model. Under such models, we consider three different segmentation procedures: 2D Path Constrained Viterbi training for the Hidden Markov Mesh, a Graph Cut based segmentation for the first order isotropic Potts model, and ICM (Iterated Conditional Modes) for the second order isotropic Potts model. We provide a unified view of all three methods and investigate goodness of fit for classification, studying the influence of parameter estimation, computational gain, and extent of automation on the statistical measures Overall Accuracy, Relative Improvement and Kappa coefficient, allowing robust and accurate statistical analysis on synthetic and real-life experimental data coming from the field of Dental Diagnostic Radiography. All algorithms, using the learned parameters, generate good segmentations with little interaction when the images have a clear multimodal histogram. Suboptimal learning proves to be frail in the case of non-distinctive modes, which limits the complexity of usable models, and hence the achievable error rate as well. All MATLAB code written is provided in a toolbox available for download from our website, following the Reproducible Research Paradigm.