Ana Georgina Flesia

Unsupervised edge map scoring: a statistical complexity approach

Feb 10, 2014

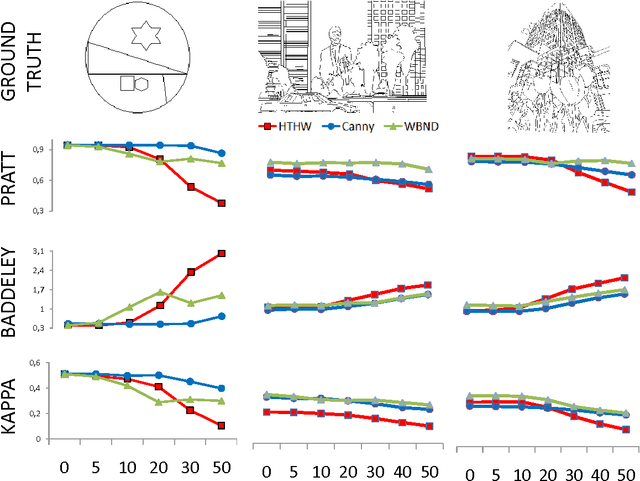

Abstract: We propose a new Statistical Complexity Measure (SCM) to qualify edge maps without Ground Truth (GT) knowledge. The measure is the product of two indices: an \emph{Equilibrium} index $\mathcal{E}$, obtained by projecting the edge map onto a family of edge patterns, and an \emph{Entropy} index $\mathcal{H}$, defined as a function of the Kolmogorov-Smirnov (KS) statistic. This new measure can be used for performance characterization, which includes (i)~the specific evaluation of an algorithm (intra-technique process) in order to identify its best parameters, and (ii)~the comparison of different algorithms (inter-technique process) in order to classify them according to their quality. Results obtained on images from the South Florida and Berkeley databases show that our approach significantly improves over Pratt's Figure of Merit (PFoM), the objective reference-based edge map evaluation standard, as it takes more features into account in its evaluation.
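For readers unfamiliar with the reference-based baseline named in the abstract, here is a minimal sketch of Pratt's Figure of Merit in its commonly stated form, $\mathrm{FoM} = \frac{1}{\max(N_I, N_D)} \sum_{i=1}^{N_D} \frac{1}{1 + \alpha d_i^2}$, where $d_i$ is the distance from the $i$-th detected edge pixel to the nearest ideal edge pixel. The function name `pratt_fom`, the coordinate-list input format, and the conventional scaling $\alpha = 1/9$ are assumptions made for illustration; the abstract does not say how PFoM was computed in the paper.

```python
def pratt_fom(detected, ideal, alpha=1.0 / 9.0):
    """Pratt's Figure of Merit for two collections of (row, col) edge pixels.

    `detected` is the edge map under evaluation, `ideal` the ground truth.
    alpha = 1/9 is the conventional scaling constant (an assumption here).
    """
    if not detected or not ideal:
        return 0.0
    total = 0.0
    for (r, c) in detected:
        # Squared Euclidean distance to the nearest ground-truth edge pixel.
        d2 = min((r - ri) ** 2 + (c - ci) ** 2 for (ri, ci) in ideal)
        total += 1.0 / (1.0 + alpha * d2)
    # Normalising by the larger count penalises missing and spurious edges.
    return total / max(len(detected), len(ideal))


# Toy example: a vertical edge, and the same edge displaced by one pixel.
ideal_edge = [(r, 5) for r in range(10)]
shifted_edge = [(r, 6) for r in range(10)]
fom_perfect = pratt_fom(ideal_edge, ideal_edge)
fom_shifted = pratt_fom(shifted_edge, ideal_edge)
```

A perfect match scores 1, and the score falls as detected pixels move away from the ideal edge. Unlike the SCM proposed in the abstract, this computation cannot be carried out without a ground-truth edge map.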

Accuracy of MAP segmentation with hidden Potts and Markov mesh prior models via Path Constrained Viterbi Training, Iterated Conditional Modes and Graph Cut based algorithms

Jul 11, 2013

Abstract: In this paper, we study the statistical classification accuracy of two different Markov field environments for pixelwise image segmentation, considering the labels of the image as hidden states and estimating such labels as a solution of the MAP equation. The emission distribution is assumed to be the same in all models, and the difference lies in the Markovian prior hypothesis made over the labeling random field. The a priori labeling knowledge is modeled with a) a second order anisotropic Markov Mesh and b) a classical isotropic Potts model. Under such models, we consider three different segmentation procedures: 2D Path Constrained Viterbi training for the Hidden Markov Mesh, a Graph Cut based segmentation for the first order isotropic Potts model, and ICM (Iterated Conditional Modes) for the second order isotropic Potts model. We provide a unified view of all three methods and investigate goodness of fit for classification, studying the influence of parameter estimation, computational gain, and extent of automation on the statistical measures Overall Accuracy, Relative Improvement and Kappa coefficient, allowing robust and accurate statistical analysis on synthetic and real-life experimental data coming from the field of Dental Diagnostic Radiography. All algorithms, using the learned parameters, generate good segmentations with little interaction when the images have a clear multimodal histogram. Suboptimal learning proves to be frail in the case of non-distinctive modes, which limits the complexity of usable models, and hence the achievable error rate as well. All Matlab code written is provided in a toolbox available for download from our website, following the Reproducible Research Paradigm.
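Two of the accuracy measures named in the abstract, Overall Accuracy and the Kappa coefficient, have standard definitions based on a confusion matrix. The sketch below uses those textbook definitions; the function names and the toy matrix are illustrative assumptions, not material from the paper's Matlab toolbox. Relative Improvement is left out because the abstract does not define it.

```python
def overall_accuracy(cm):
    """Fraction of correctly labeled pixels; cm[i][j] counts true class i labeled as j."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def cohen_kappa(cm):
    """Cohen's Kappa: agreement corrected for the agreement expected by chance."""
    k = len(cm)
    n = sum(sum(row) for row in cm)
    p_observed = sum(cm[i][i] for i in range(k)) / n
    row_sums = [sum(cm[i]) for i in range(k)]
    col_sums = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    p_chance = sum(row_sums[i] * col_sums[i] for i in range(k)) / (n * n)
    if p_chance == 1.0:
        return 1.0  # degenerate case: only one class is present
    return (p_observed - p_chance) / (1.0 - p_chance)


# Toy two-class confusion matrix (made-up counts, for illustration only).
cm = [[45, 5],
      [10, 40]]
oa = overall_accuracy(cm)
kappa = cohen_kappa(cm)
```

For this toy matrix the observed agreement is 0.85 and the chance agreement is 0.5, which gives a Kappa of 0.7. The gap between the two figures shows why Kappa is often reported alongside Overall Accuracy.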

Markovian models for one dimensional structure estimation on heavily noisy imagery

Apr 29, 2013

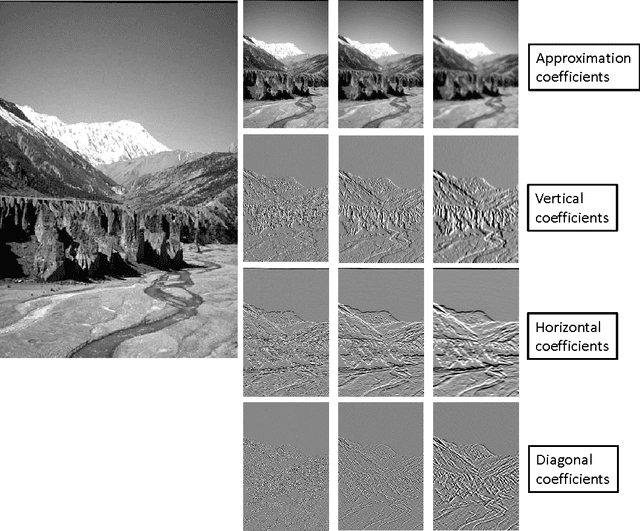

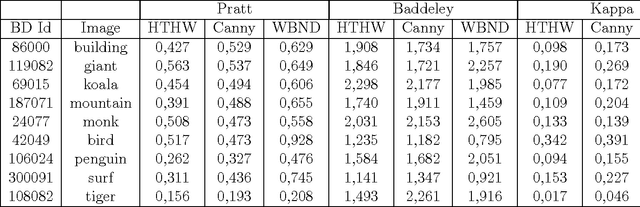

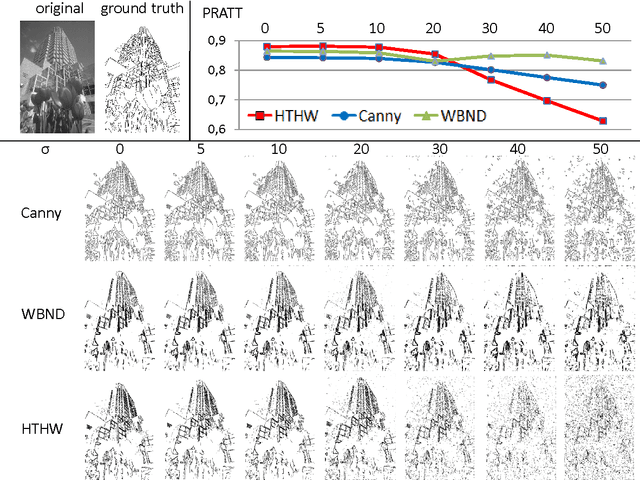

Abstract: Synthetic Aperture Radar (SAR) images often exhibit profound appearance variations due to a variety of factors, including clutter noise produced by the coherent nature of the illumination. Ultrasound images and infrared images have a similar cluttered appearance, which makes one-dimensional structures, such as edges and object boundaries, difficult to locate. Structure information is usually extracted in two steps: first, building an edge strength mask that classifies pixels as edge points by hypothesis testing, and second, estimating pixel-wide connected edges from that mask. With constant false alarm rate (CFAR) edge strength detectors for speckle clutter, the image needs to be scanned by a sliding window composed of several differently oriented splitting sub-windows. The accuracy of edge location for these ratio detectors depends strongly on the orientation of the sub-windows. In this work we propose to transform the edge strength detection problem into a binary segmentation problem in the undecimated wavelet domain, solvable using parallel 1D Hidden Markov Models. For general dependency models, exact estimation of the state map becomes computationally complex, but in our model exact MAP estimation is feasible. The effectiveness of our approach is demonstrated on simulated noisy real-life natural images with available ground truth, while the strength of our output edge map is measured with Pratt's, Baddeley's and Kappa proficiency measures. Finally, analysis and experiments on three different types of SAR images, with different polarizations, resolutions and textures, illustrate that the proposed method can detect structure in SAR images effectively, providing a very good starting point for active contour methods.
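Exact MAP estimation is feasible for a 1D Hidden Markov Model because the Viterbi recursion finds the best state path in time linear in the sequence length. The sketch below runs a generic two-state Viterbi decoder on a single 1D sequence. The Gaussian emission model, the means, the variance and the transition probabilities are all illustrative assumptions; the abstract does not specify the paper's wavelet-domain emission model or its parameters.

```python
import math


def viterbi(obs_loglik, log_init, log_trans):
    """Return the MAP state path of an HMM.

    obs_loglik[t][s] = log p(y_t | state s)
    log_init[s]      = log p(first state = s)
    log_trans[p][s]  = log p(next state = s | current state = p)
    """
    n_states = len(log_init)
    score = [log_init[s] + obs_loglik[0][s] for s in range(n_states)]
    back = []  # back[t-1][s] = best predecessor of state s at time t
    for t in range(1, len(obs_loglik)):
        new_score, ptr = [], []
        for s in range(n_states):
            best_prev = max(range(n_states),
                            key=lambda p: score[p] + log_trans[p][s])
            ptr.append(best_prev)
            new_score.append(score[best_prev] + log_trans[best_prev][s]
                             + obs_loglik[t][s])
        score = new_score
        back.append(ptr)
    # Backtrack from the best final state.
    state = max(range(n_states), key=lambda s: score[s])
    path = [state]
    for ptr in reversed(back):
        state = ptr[state]
        path.append(state)
    path.reverse()
    return path


# Hypothetical setup: state 0 = "no edge", state 1 = "edge".
means, sigma = (0.0, 1.0), 0.3
observations = [0.1, 0.0, 0.9, 1.1, 1.0, 0.1, 0.0]
# Gaussian log-likelihoods; the shared normalising constant is dropped
# because it does not change which path is best.
obs_loglik = [[-(y - m) ** 2 / (2 * sigma ** 2) for m in means]
              for y in observations]
log_init = [math.log(0.5), math.log(0.5)]
log_trans = [[math.log(0.9), math.log(0.1)],
             [math.log(0.1), math.log(0.9)]]
map_path = viterbi(obs_loglik, log_init, log_trans)
```

Because each row or column would be decoded as an independent chain, many such decoders could in principle run in parallel. That is one plausible reading of "parallel 1D Hidden Markov Models", not a description confirmed by the abstract.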

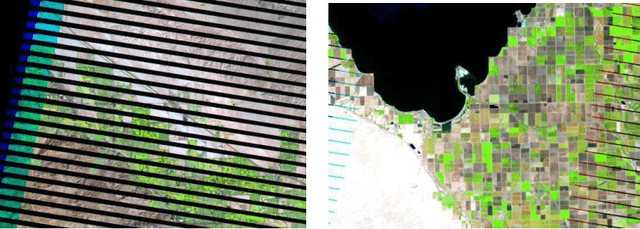

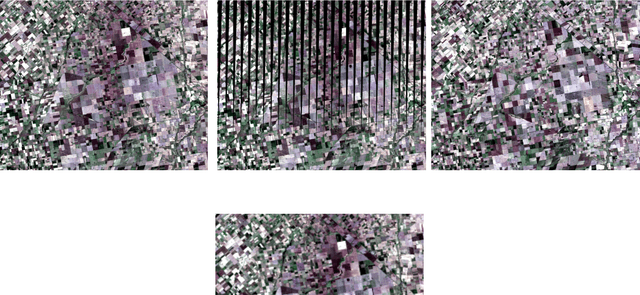

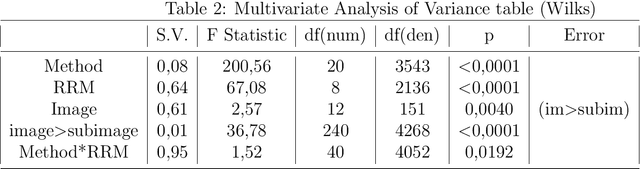

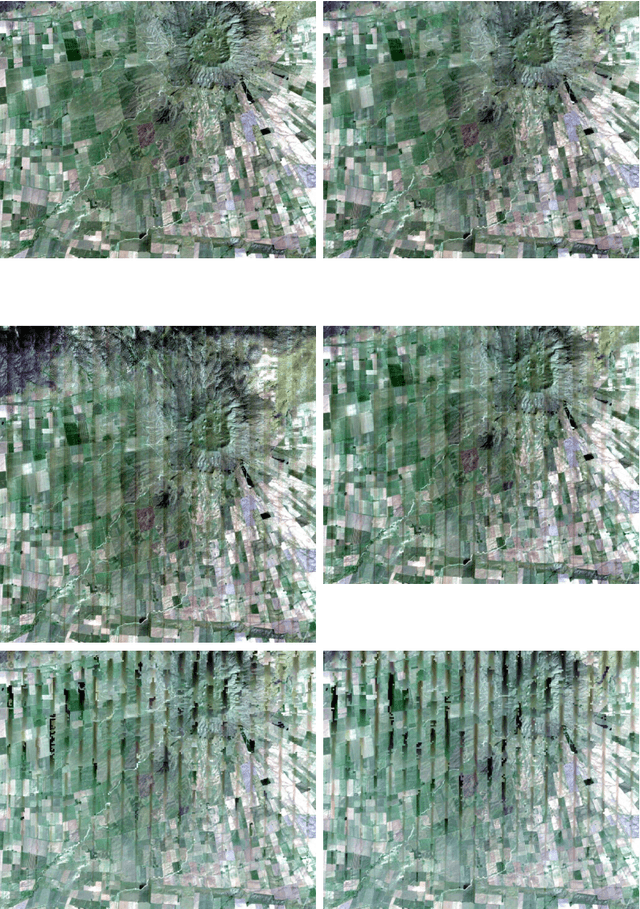

Large gaps imputation in remote sensed imagery of the environment

Jun 22, 2010

Abstract: Imputation of missing data in large regions of satellite imagery is necessary when the acquired image has been damaged by shadows due to clouds, or by information gaps produced by sensor failure. The general approach for imputation of missing data that cannot be considered missing at random suggests the use of other available data. Previous work, such as local linear histogram matching, takes advantage of a co-registered older image obtained by the same sensor, yielding good results in filling homogeneous regions, but poor results if the scenes being combined have radical differences in target radiance due, for example, to the presence of sun glint or snow. This study proposes three different alternatives for filling the data gaps. The first two involve merging radiometric information from a lower resolution image acquired at the same time, in the Fourier domain (Method A) and using linear regression (Method B). The third method considers segmentation as the main target of processing, and proposes a way to fill the gaps in the map of classes, avoiding direct imputation (Method C). All the methods were compared by means of a large simulation study, evaluating performance with a multivariate response vector with four measures: Q, RMSE, Kappa and Overall Accuracy coefficients. Differences in performance were tested with a MANOVA mixed model design with two main effects, imputation method and type of lower resolution extra data, and a blocking third factor with a nested sub-factor, introduced by the real Landsat image and the sub-images that were used. Method B proved to be the best for all criteria.
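The abstract says only that Method B merges information from the lower resolution image "using linear regression". As a rough illustration of that idea, the sketch below fits a simple least-squares line on the pixels where both images are observed and uses it to predict the gap pixels. The single-predictor model, the function names, and the assumption that the auxiliary image has already been resampled to the target grid are all hypothetical and may differ from the paper's actual procedure. RMSE, one of the four evaluation measures listed, is included in its standard form.

```python
import math


def fill_gaps_by_regression(target, auxiliary):
    """Fill None entries of `target` using a least-squares line fitted on `auxiliary`.

    Both inputs are flat, same-length lists of pixel values; `auxiliary` is
    assumed to be already resampled to the grid of `target`.
    """
    pairs = [(x, y) for x, y in zip(auxiliary, target) if y is not None]
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Keep observed pixels as they are; predict only the missing ones.
    return [y if y is not None else intercept + slope * x
            for x, y in zip(auxiliary, target)]


def rmse(estimate, reference):
    """Root mean squared error between two same-length sequences."""
    n = len(reference)
    return math.sqrt(sum((e - r) ** 2 for e, r in zip(estimate, reference)) / n)


# Toy data: the true target follows 2 * auxiliary + 1, and two pixels are missing.
auxiliary = [1.0, 2.0, 3.0, 4.0, 5.0]
truth = [3.0, 5.0, 7.0, 9.0, 11.0]
damaged = [3.0, 5.0, None, 9.0, None]
filled = fill_gaps_by_regression(damaged, auxiliary)
error = rmse(filled, truth)
```

On this noise-free toy data the fit recovers the missing values exactly. With real imagery the residual error would depend on how well the two sensors' radiometry is linearly related.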
