Richard Bean

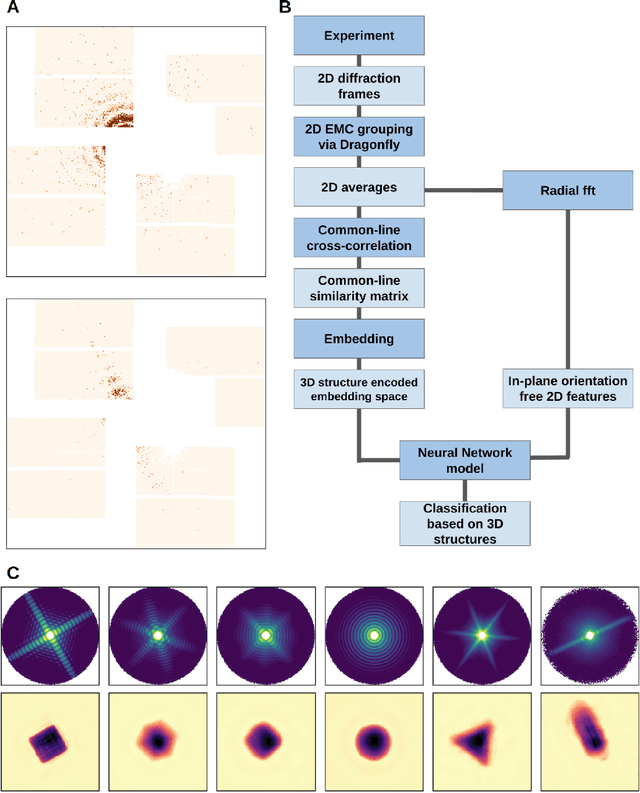

Observation of Aerosolization-induced Morphological Changes in Viral Capsids

Jul 16, 2024

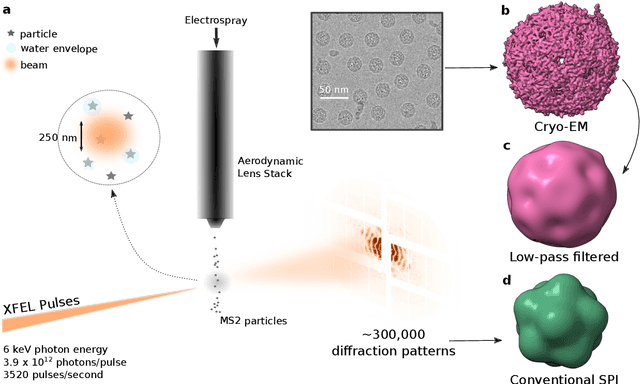

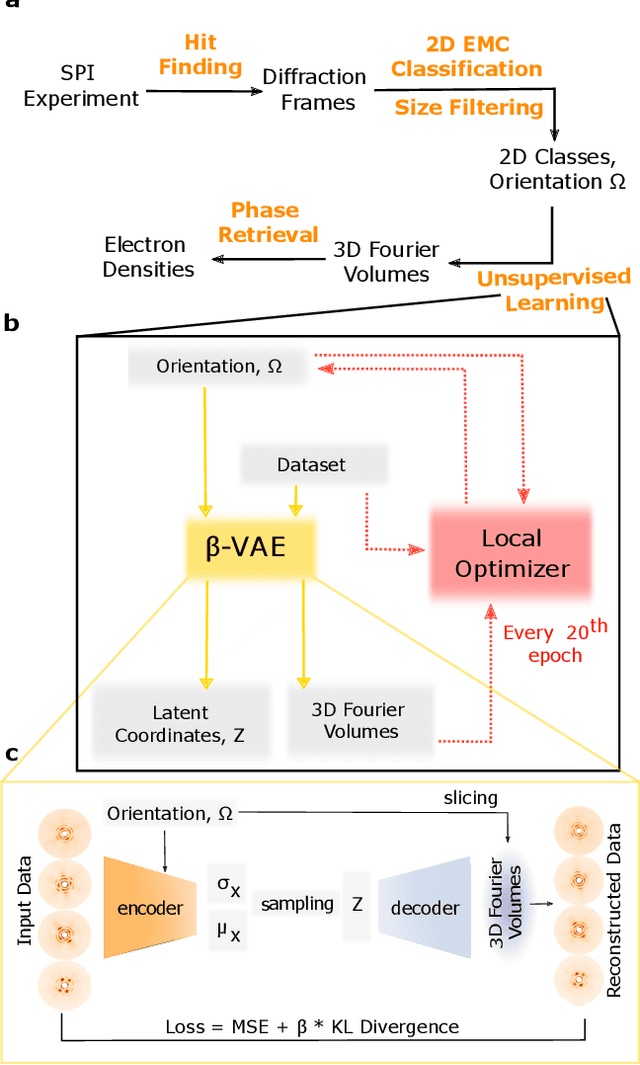

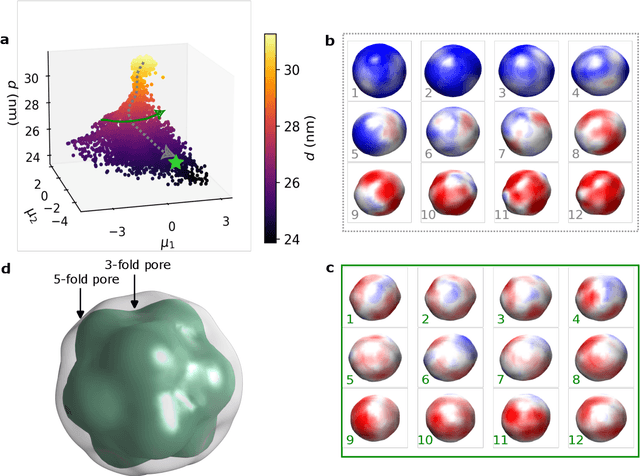

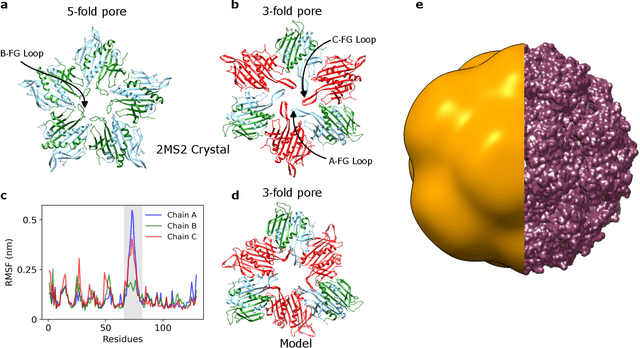

Abstract: Single-stranded RNA viruses co-assemble their capsid with the genome, and variations in capsid structure can have significant functional relevance. In particular, viruses need to respond to a dehydrating environment to prevent genomic degradation and remain active upon rehydration. Theoretical work has predicted low-energy buckling transitions in icosahedral capsids that could protect the virus from further dehydration. However, there has been no direct experimental evidence, nor a molecular mechanism, for such behaviour. Here we observe this transition using X-ray single particle imaging of MS2 bacteriophages after aerosolization. Using a combination of machine learning tools, we classify hundreds of thousands of single particle diffraction patterns to learn the structural landscape of the capsid morphology as a function of time spent in the aerosol phase. We found a previously unreported compact conformation, as well as intermediate structures that suggest an incoherent buckling transition which does not preserve icosahedral symmetry. Finally, we propose a mechanism for this buckling, in which a single 19-residue loop is destabilised, leading to the large observed change in morphology. Our results provide experimental evidence for a mechanism by which viral capsids protect themselves from dehydration. In the process, these findings also demonstrate the power of single particle X-ray imaging and machine learning methods for studying biomolecular structural dynamics.

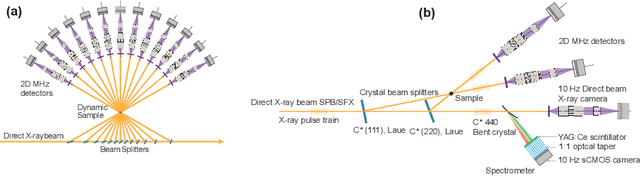

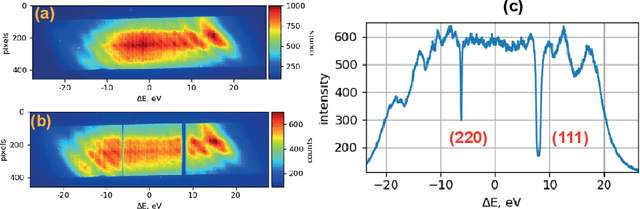

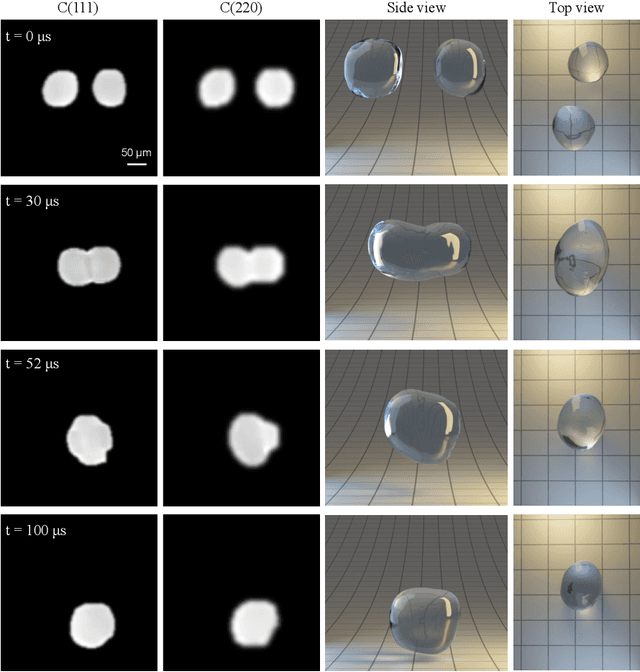

Megahertz X-ray Multi-projection imaging

May 19, 2023

Abstract: X-ray time-resolved tomography is one of the most popular X-ray techniques for probing dynamics in three dimensions (3D). Recent developments in time-resolved tomography have opened the possibility of recording kilohertz-rate 3D movies. However, tomography requires rotating the sample with respect to the X-ray beam, which prevents characterization of faster structural dynamics. Here, we present megahertz (MHz) X-ray multi-projection imaging (MHz-XMPI), a technique capable of recording volumetric information at MHz rates and micrometer resolution without scanning the sample. We achieved this by harnessing the unique megahertz pulse structure and intensity of the European X-ray Free-Electron Laser, together with novel detection and reconstruction approaches that do not require sample rotation. Our approach generates multiple X-ray probes that simultaneously record several angular projections for each pulse in the megahertz pulse burst. We provide a proof-of-concept demonstration of MHz-XMPI's capability to probe 4D (3D+time) information on stochastic phenomena and non-reproducible processes three orders of magnitude faster than state-of-the-art time-resolved X-ray tomography, by generating 3D movies of binary droplet collisions. We anticipate that MHz-XMPI will enable in-situ and operando studies that were previously impossible, either due to a lack of temporal resolution or because the systems were opaque (which rules out, for example, MHz imaging based on optical microscopy).

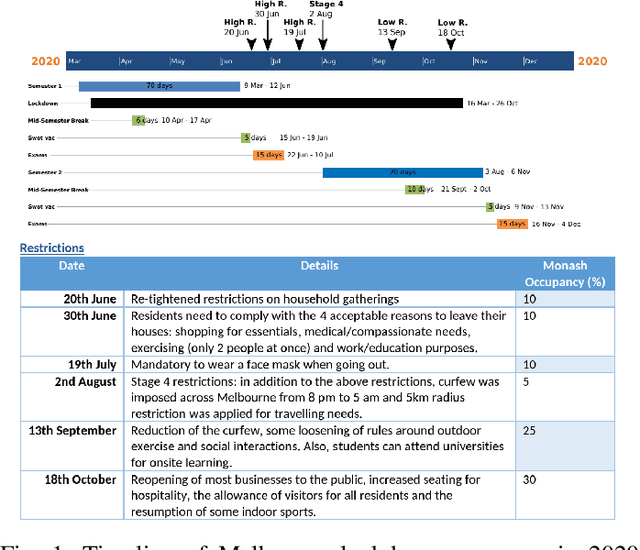

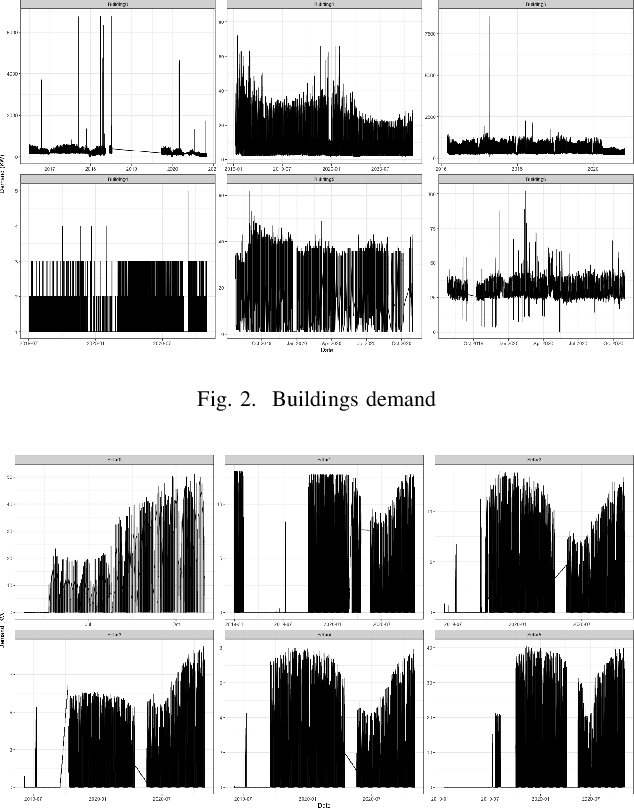

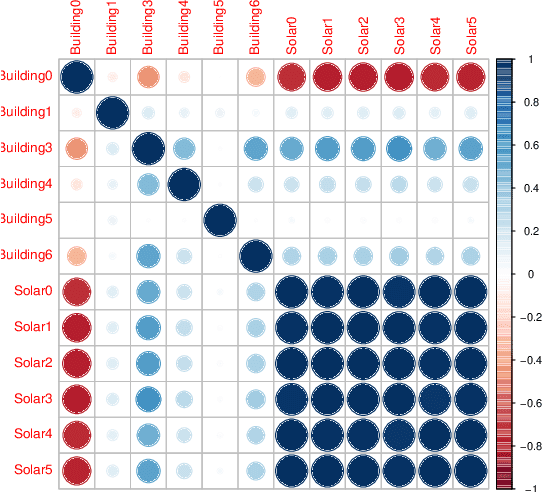

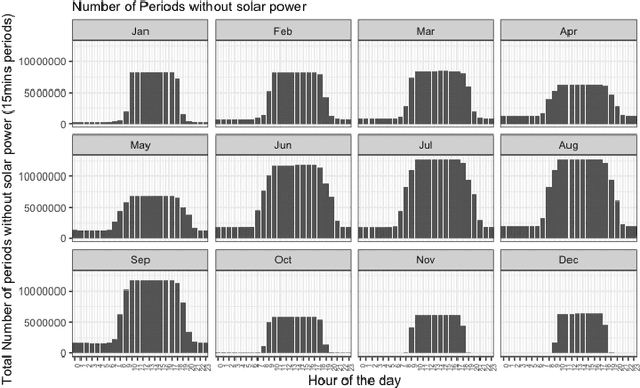

Comparison and Evaluation of Methods for a Predict+Optimize Problem in Renewable Energy

Dec 21, 2022

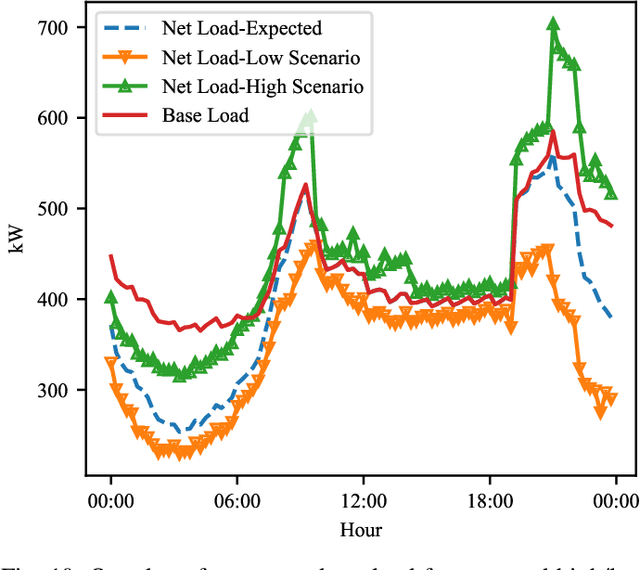

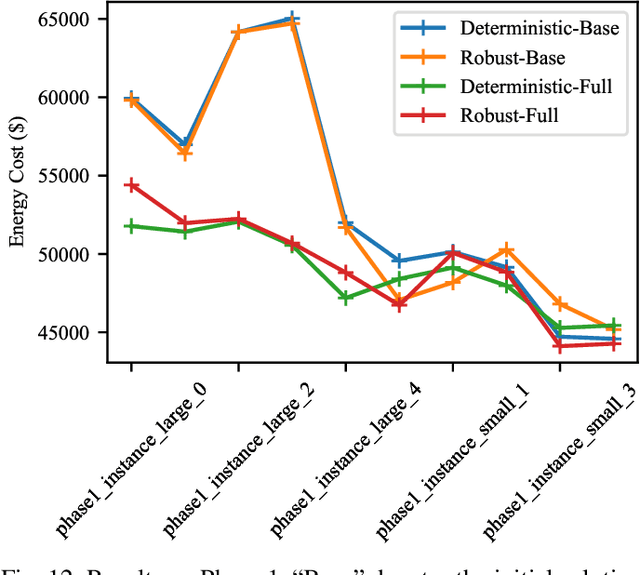

Abstract: Algorithms that involve both forecasting and optimization are at the core of solutions to many difficult real-world problems, such as supply chains (inventory optimization), traffic, and battery/load/production scheduling in sustainable energy systems as part of the transition towards carbon-free energy generation. Typically, in these scenarios we want to solve an optimization problem that depends on unknown future values, which therefore need to be forecast. As both forecasting and optimization are difficult problems in their own right, relatively little research has been done in this area. This paper presents the findings of the "IEEE-CIS Technical Challenge on Predict+Optimize for Renewable Energy Scheduling," held in 2021. We present a comparison and evaluation of the seven highest-ranked solutions in the competition, to provide researchers with a benchmark problem and to establish the state of the art for this benchmark, with the aim of fostering and facilitating research in this area. The competition used data from the Monash Microgrid, as well as weather data and energy market data. It focused on two main challenges: forecasting renewable energy production and demand, and obtaining an optimal schedule for the activities (lectures) and on-site batteries that leads to the lowest cost of energy. The most accurate forecasts were obtained by gradient-boosted tree and random forest models, and optimization was mostly performed using mixed integer linear and quadratic programming. The winning method predicted different scenarios and optimized over all scenarios jointly using a sample average approximation method.
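To illustrate what optimizing over scenarios jointly means, the toy sketch below applies sample average approximation (SAA) to a single battery decision: it picks the discharge level with the lowest cost averaged over several demand scenarios, and contrasts it with the decision made against the mean forecast alone. The cost model, prices, peak limit and scenario values are all hypothetical, and a simple grid search stands in for the mixed integer programs used in the competition.

```python
"""Toy sample average approximation (SAA) for one battery decision.

Hypothetical setup: choose how much to discharge a battery (kWh over one
hour) when net demand is uncertain. All prices and limits are made up.
"""
from statistics import mean

ENERGY_PRICE = 0.20    # $/kWh for imported energy (assumed)
PEAK_PENALTY = 1.00    # $/kWh imported above PEAK_LIMIT (assumed)
PEAK_LIMIT = 10.0      # kWh per hour before the penalty applies (assumed)
STORAGE_VALUE = 0.25   # $/kWh value of keeping energy in the battery (assumed)


def scenario_cost(discharge: float, demand: float) -> float:
    """Cost of one discharge decision under one demand scenario."""
    grid_import = max(demand - discharge, 0.0)
    peak_excess = max(grid_import - PEAK_LIMIT, 0.0)
    return (ENERGY_PRICE * grid_import
            + PEAK_PENALTY * peak_excess
            + STORAGE_VALUE * discharge)


def saa_decision(scenarios: list[float], candidates: list[float]) -> float:
    """Return the candidate with the lowest cost averaged over all scenarios."""
    return min(candidates,
               key=lambda x: mean(scenario_cost(x, d) for d in scenarios))


if __name__ == "__main__":
    demand_scenarios = [8.0, 9.5, 11.0, 13.0]  # illustrative forecasts (kWh)
    options = [0.5 * k for k in range(11)]     # discharge 0.0 .. 5.0 kWh
    print("SAA decision:", saa_decision(demand_scenarios, options))
    print("Mean-forecast decision:",
          saa_decision([mean(demand_scenarios)], options))
```

Because the hypothetical peak penalty is nonlinear, averaging the scenarios into one forecast (10.375 kWh) hides the high-demand cases: the mean-forecast decision discharges 0.5 kWh, whereas the SAA decision discharges 3.0 kWh.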

How to predict and optimise with asymmetric error metrics

Nov 24, 2022

Abstract: In this paper, we examine the predict and optimise problem with specific reference to the third Technical Challenge of the IEEE Computational Intelligence Society. In this competition, entrants were asked to forecast building energy use and solar generation at six buildings and six solar installations, and then use their forecasts to optimise energy cost while scheduling classes and batteries over a month. We examine the possible effects of underforecasting, overforecasting and asymmetric errors on the optimisation cost. We explore the differing nature of the loss functions in the prediction and optimisation phases and propose adjusting the final forecasts to obtain a better optimisation cost. We report that while there is a positive correlation between prediction error and optimisation cost, more appropriate loss functions can be used to optimise the costs associated with final decisions.
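The idea that asymmetric costs call for adjusted forecasts can be illustrated with a standard result: if each unit of underforecast costs `a` and each unit of overforecast costs `b`, the point forecast with the lowest expected cost is the a/(a+b) quantile of the forecast distribution rather than its mean. The sketch below assumes a simple linear (pinball-style) cost and made-up demand samples; in the competition itself, the cost of a forecast error came from the downstream schedule rather than from a loss of this form.

```python
"""Choosing a point forecast under an asymmetric linear cost (illustrative)."""


def asymmetric_loss(forecast: float, actual: float,
                    under_cost: float, over_cost: float) -> float:
    """Linear cost: under_cost per unit short, over_cost per unit in excess."""
    if actual > forecast:                       # underforecast
        return under_cost * (actual - forecast)
    return over_cost * (forecast - actual)      # overforecast (or exact)


def average_loss(forecast: float, samples: list[float],
                 under_cost: float, over_cost: float) -> float:
    """Mean asymmetric loss of one forecast over equally likely outcomes."""
    return sum(asymmetric_loss(forecast, y, under_cost, over_cost)
               for y in samples) / len(samples)


def best_point_forecast(samples: list[float],
                        under_cost: float, over_cost: float) -> float:
    """Minimise the average loss; since it is piecewise linear and convex,
    an optimum always lies at one of the sample values."""
    return min(samples,
               key=lambda f: average_loss(f, samples, under_cost, over_cost))


if __name__ == "__main__":
    outcomes = [10.0, 12.0, 13.0, 15.0, 20.0]       # hypothetical demand (kWh)
    print(best_point_forecast(outcomes, 1.0, 1.0))  # symmetric: the median
    print(best_point_forecast(outcomes, 3.0, 1.0))  # shortfall costly: higher
    print(best_point_forecast(outcomes, 1.0, 3.0))  # excess costly: lower
```

With these samples the three calls return 13.0, 15.0 and 12.0. Under the 3:1 cost, forecasting the mean (14.0) gives an average loss of 5.6, compared with 5.0 for the shifted forecast of 15.0.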

Methodology for forecasting and optimization in IEEE-CIS 3rd Technical Challenge

Feb 02, 2022

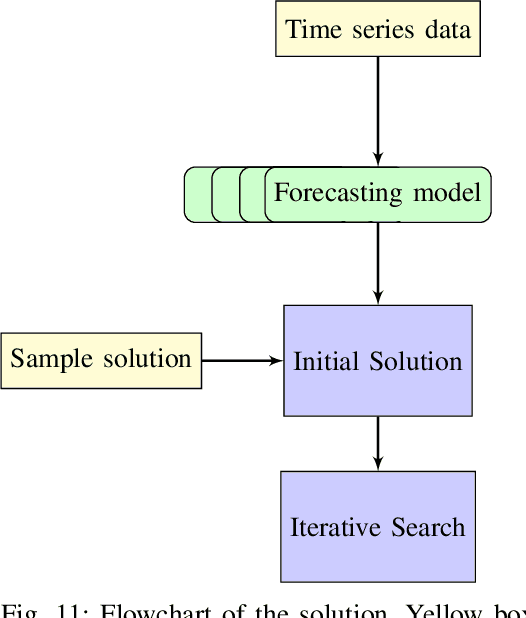

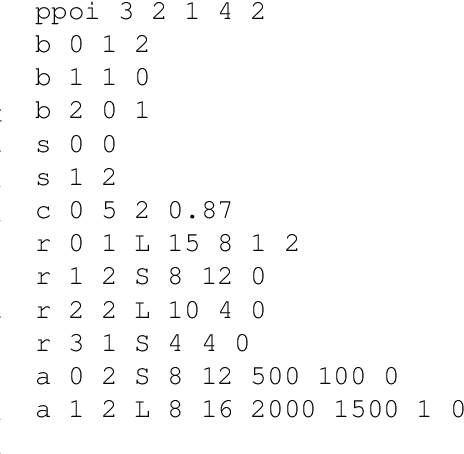

Abstract: This report describes the methodology I used in the IEEE-CIS 3rd Technical Challenge. For the forecast, I used a quantile regression forest approach with the solar variables provided by the Bureau of Meteorology of Australia (BOM) and many of the weather variables from the European Centre for Medium-Range Weather Forecasts (ECMWF). Groups of buildings, and all of the solar instances, were trained together because they were observed to be closely correlated over time. Other variables included Fourier terms based on hour of day and day of year, and binary variables for combinations of days of the week. The start dates for the time series were carefully tuned based on phase 1, and cleaning and thresholding were used to reduce the observed error rate for each time series. For the optimization, a four-step approach was applied to the resulting forecasts. First, a mixed-integer program (MIP) was solved for the recurring activities and for the recurring plus once-off activities; then each of these solutions was extended using a mixed-integer quadratic program (MIQP). The general strategy was chosen from two candidates ("array" was chosen over "tuples"), while the specific step-improvement strategy was chosen from five candidates ("no forced discharge").
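The calendar inputs described above can be sketched as sine and cosine terms at the daily and yearly periods, plus binary day-of-week indicators. The number of harmonics, the 365.25-day year and the particular day groupings (`is_weekday`, `is_weekend`) are hypothetical choices for illustration; the report does not state which combinations were used. A dictionary like this could then be combined with the weather variables as input to a quantile regression forest.

```python
"""Illustrative calendar features: Fourier terms and day-of-week flags."""
import math
from datetime import datetime

HOURS_PER_DAY = 24.0
DAYS_PER_YEAR = 365.25  # assumed average year length


def calendar_features(ts: datetime, n_harmonics: int = 2) -> dict[str, float]:
    """Return Fourier terms for hour of day / day of year and weekday flags."""
    hour = ts.hour + ts.minute / 60.0
    # Fractional days elapsed since 1 January (tm_yday is 1-based).
    day = ts.timetuple().tm_yday - 1 + hour / HOURS_PER_DAY

    features: dict[str, float] = {}
    for k in range(1, n_harmonics + 1):
        hod_angle = 2.0 * math.pi * k * hour / HOURS_PER_DAY
        doy_angle = 2.0 * math.pi * k * day / DAYS_PER_YEAR
        features[f"hod_sin_{k}"] = math.sin(hod_angle)
        features[f"hod_cos_{k}"] = math.cos(hod_angle)
        features[f"doy_sin_{k}"] = math.sin(doy_angle)
        features[f"doy_cos_{k}"] = math.cos(doy_angle)

    # Hypothetical day-of-week groupings (Monday == 0 ... Sunday == 6).
    weekday = ts.weekday()
    features["is_weekday"] = float(weekday < 5)
    features["is_weekend"] = float(weekday >= 5)
    return features


if __name__ == "__main__":
    for name, value in calendar_features(datetime(2020, 1, 1, 6, 0)).items():
        print(f"{name}: {value:.3f}")
```

Encoding time as sine/cosine pairs keeps 23:00 and 00:00 (or 31 December and 1 January) close together, which a raw hour or day number would not.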

Unsupervised learning approaches to characterize heterogeneous samples using X-ray single particle imaging

Sep 13, 2021

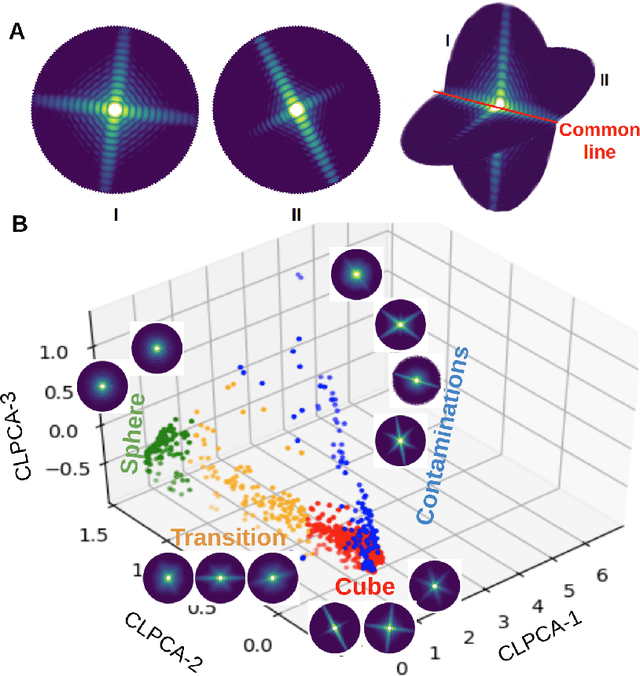

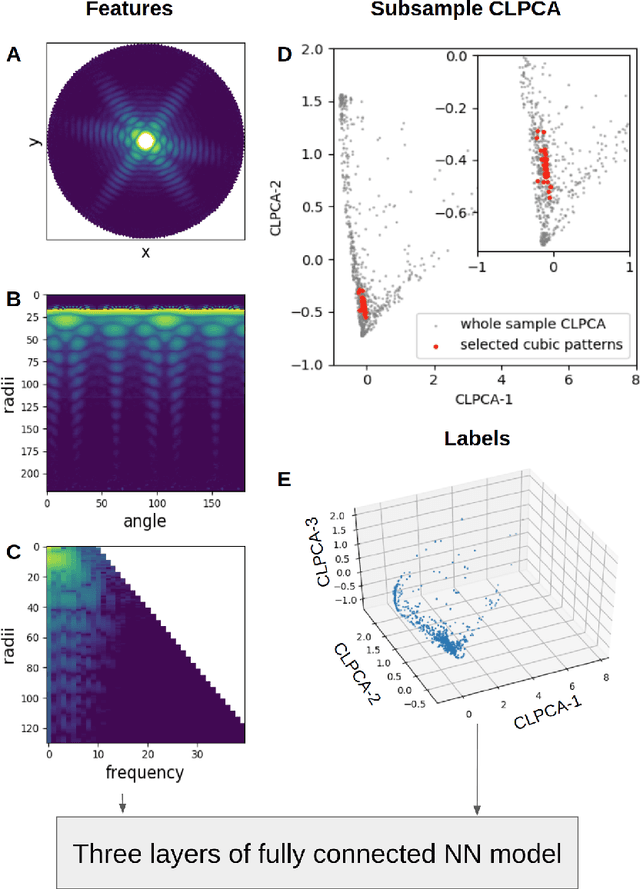

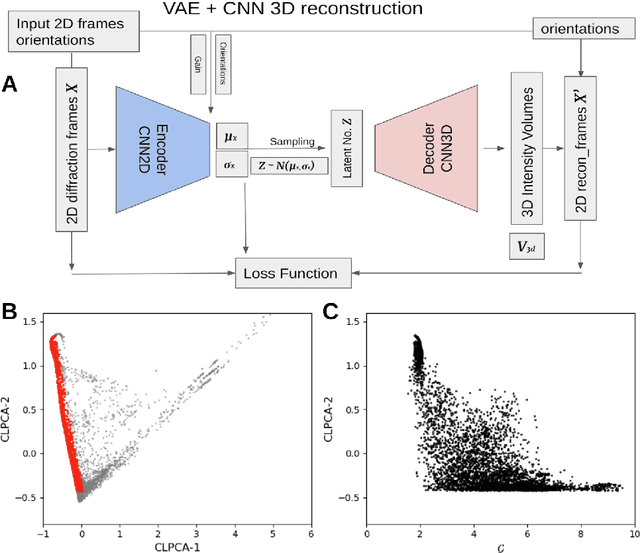

Abstract: One of the outstanding analytical problems in X-ray single particle imaging (SPI) is the classification of structural heterogeneity, which is especially difficult given the low signal-to-noise ratio of individual patterns and the fact that even identical objects can yield very different patterns depending on their orientation. We propose two methods that explicitly account for this orientation-induced variation and can robustly determine the structural landscape of a sample ensemble. The first, termed common-line principal component analysis (PCA), provides a rough classification that is essentially parameter-free and can be run automatically on any SPI dataset. The second, which uses variational auto-encoders (VAEs), can generate 3D structures of the objects at any point in the structural landscape. We implement both methods in combination with the noise-tolerant expand-maximize-compress (EMC) algorithm and demonstrate their utility by applying them to an experimental dataset of gold nanoparticles with only a few thousand photons per pattern, recovering both discrete structural classes and continuous deformations. These developments depart from previous approaches, which extract reproducible subsets of patterns from a dataset, and make it possible to move beyond homogeneous sample sets to study open questions on topics such as nanocrystal growth and dynamics, as well as phase transitions that have not been externally triggered.

Using solar and load predictions in battery scheduling at the residential level

Oct 26, 2018

Abstract: Smart solar inverters can be used to store, monitor and manage a home's solar energy. We describe a smart solar inverter system with a battery, which can either operate in an automatic mode or receive commands over a network to charge and discharge at a given rate. To make battery storage financially viable and advantageous to consumers, effective battery scheduling algorithms can be employed. In particular, when time-of-use tariffs are in effect in the inverter's region, it is possible in some cases to schedule the battery to save money for the individual customer, compared with the "automatic" mode. Hence, this paper presents and evaluates the performance of a novel battery scheduling algorithm for residential consumers of solar energy. The proposed algorithm optimizes the cost of electricity over the next 24 hours for residential consumers. Cost minimization is achieved by controlling the charging and discharging of the battery storage system based on predictions of load and solar power generation. The scheduling problem is formulated as a linear programming problem. We performed computer simulations for 83 inverters using several months of hourly load and PV data. The simulation results indicate that the key factors affecting the viability of optimization are the tariffs and the PV-to-load ratio at each inverter. Depending on the tariff, savings of between 1% and 10% can be expected over the automatic approach. The prediction approach used in this paper is also shown to outperform basic "persistence" forecasting approaches. We also examine approaches for improving prediction accuracy and optimization effectiveness.
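The paper formulates day-ahead scheduling as a linear program. As a dependency-free stand-in, the sketch below solves a simplified version of the same problem (minimize the coming day's electricity cost under a time-of-use tariff, given load and PV predictions) by dynamic programming over a discretized state of charge, which is a different technique from the paper's LP. The whole-kWh steps, the lossless battery, the flat export price and the zero value placed on energy left in the battery at the end of the horizon are simplifying assumptions, not details from the paper.

```python
"""Day-ahead battery scheduling by dynamic programming (illustrative).

Simplifications (assumed, not from the paper): hourly steps, whole-kWh
charge/discharge amounts, a lossless battery and a flat export price.
"""


def interval_cost(grid_kwh: float, import_price: float,
                  export_price: float) -> float:
    """Cost of one hour: pay for imports, earn export_price for exports."""
    if grid_kwh >= 0:
        return import_price * grid_kwh
    return export_price * grid_kwh  # negative cost, i.e. export revenue


def schedule_battery(load: list[float], pv: list[float],
                     import_price: list[float], export_price: float,
                     capacity: int, max_rate: int, soc0: int):
    """Return (plan, cost); plan[t] > 0 charges, plan[t] < 0 discharges (kWh)."""
    horizon = len(load)
    # value[t][s]: minimum cost from hour t to the end, starting at charge s.
    value = [[0.0] * (capacity + 1) for _ in range(horizon + 1)]
    choice = [[0] * (capacity + 1) for _ in range(horizon)]

    for t in reversed(range(horizon)):
        for soc in range(capacity + 1):
            best_cost, best_action = float("inf"), 0
            for action in range(-max_rate, max_rate + 1):
                nxt = soc + action
                if not 0 <= nxt <= capacity:
                    continue  # would over-fill or over-drain the battery
                grid = load[t] - pv[t] + action
                cost = (interval_cost(grid, import_price[t], export_price)
                        + value[t + 1][nxt])
                if cost < best_cost:
                    best_cost, best_action = cost, action
            value[t][soc] = best_cost
            choice[t][soc] = best_action

    # Forward pass: follow the stored decisions from the initial charge.
    plan, soc = [], soc0
    for t in range(horizon):
        plan.append(choice[t][soc])
        soc += choice[t][soc]
    return plan, value[0][soc0]


if __name__ == "__main__":
    # Hypothetical 4-hour example: cheap hours first, expensive hours later.
    plan, cost = schedule_battery(load=[1, 1, 3, 3], pv=[0, 0, 0, 0],
                                  import_price=[0.1, 0.1, 0.4, 0.4],
                                  export_price=0.0, capacity=4,
                                  max_rate=2, soc0=0)
    print(plan, round(cost, 2))
```

In this made-up example the schedule is `[2, 2, -2, -2]`: it charges 2 kWh in each cheap hour and discharges in the expensive hours, cutting the cost from 2.6 (no battery) to 1.4. An LP solver handles continuous charge rates and longer horizons more directly; the DP form is shown only because it needs no external library.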
