Reza Ahmadi

Swin UNETR segmentation with automated geometry filtering for biomechanical modeling of knee joint cartilage

Jul 08, 2024

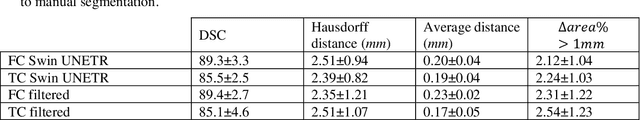

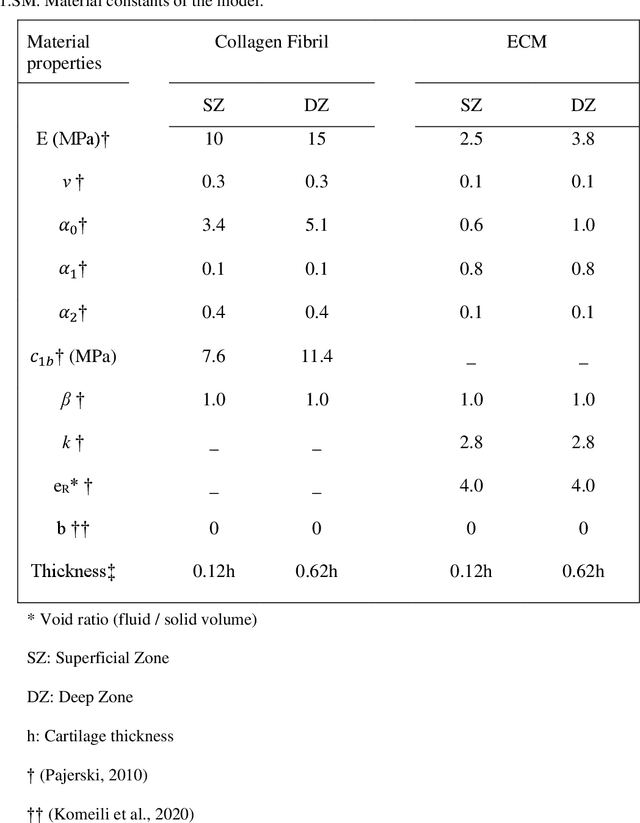

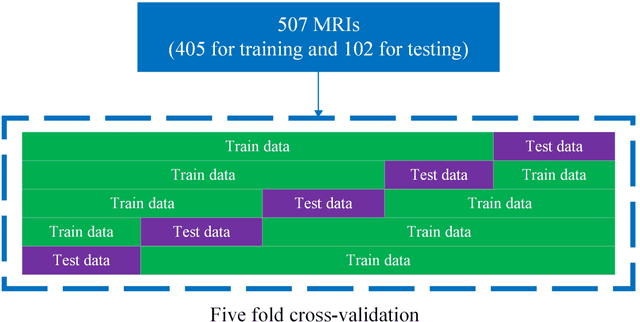

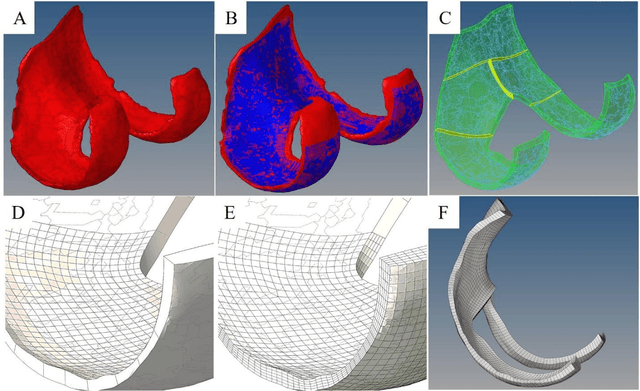

Abstract: Simulation studies, such as finite element (FE) modeling, offer insights into knee joint biomechanics, which may not be achieved through experimental methods without direct involvement of patients. While generic FE models have been used to predict tissue biomechanics, they overlook variations in population-specific geometry, loading, and material properties. In contrast, subject-specific models account for these factors, delivering enhanced predictive precision but requiring significant effort and time for development. This study aimed to facilitate subject-specific knee joint FE modeling by integrating an automated cartilage segmentation algorithm using a 3D Swin UNETR. This algorithm provided initial segmentation of knee cartilage, followed by automated geometry filtering to refine surface roughness and continuity. In addition to the standard metrics of image segmentation performance, such as Dice similarity coefficient (DSC) and Hausdorff distance, the method's effectiveness was also assessed in FE simulation. Nine pairs of knee cartilage FE models, using manual and automated segmentation methods, were developed to compare the predicted stress and strain responses during gait. The automated segmentation achieved high Dice similarity coefficients of 89.4% for femoral and 85.1% for tibial cartilage, with a Hausdorff distance of 2.3 mm between the automated and manual segmentation. Mechanical results including maximum principal stress and strain, fluid pressure, fibril strain, and contact area showed no significant differences between the manual and automated FE models. These findings demonstrate the effectiveness of the proposed automated segmentation method in creating accurate knee joint FE models.
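The two segmentation metrics named in the abstract can be illustrated with a minimal sketch. This is not the authors' evaluation code: the function names, the brute-force distance computation, and the toy 2D masks are assumptions chosen for illustration, and the abstract does not say whether the reported Hausdorff distance is the maximum or a percentile variant.

```python
import numpy as np

def dice_coefficient(pred, truth):
    """Dice similarity coefficient: 2|A ∩ B| / (|A| + |B|) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    total = pred.sum() + truth.sum()
    if total == 0:
        # Both masks empty: treated as perfect agreement (a convention, assumed here).
        return 1.0
    return 2.0 * np.logical_and(pred, truth).sum() / total

def hausdorff_distance(pred, truth, spacing=1.0):
    """Symmetric (maximum) Hausdorff distance between foreground voxels.

    Brute force over all voxel pairs, so only suitable for small masks.
    `spacing` is a hypothetical isotropic voxel size in mm.
    """
    a = np.argwhere(np.asarray(pred, dtype=bool)) * spacing
    b = np.argwhere(np.asarray(truth, dtype=bool)) * spacing
    if len(a) == 0 or len(b) == 0:
        raise ValueError("Hausdorff distance is undefined for an empty mask")
    # Pairwise Euclidean distances, shape (len(a), len(b)).
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    # Largest nearest-neighbor distance, taken in both directions.
    return max(d.min(axis=1).max(), d.min(axis=0).max())

# Toy example: a 2x2 "automated" mask against a 2x3 "manual" mask.
auto = np.zeros((4, 4), dtype=int)
auto[1:3, 1:3] = 1
manual = np.zeros((4, 4), dtype=int)
manual[1:3, 1:4] = 1

print(dice_coefficient(auto, manual))    # 0.8
print(hausdorff_distance(auto, manual))  # 1.0
```

For full 3D cartilage volumes, surface extraction and a spatial index would usually replace the pairwise distance matrix, which grows with the product of the two voxel counts.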

Distributed Energy Management and Demand Response in Smart Grids: A Multi-Agent Deep Reinforcement Learning Framework

Nov 29, 2022

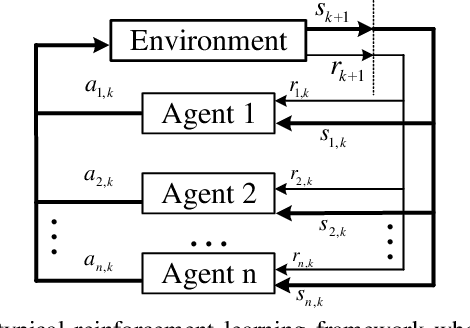

Abstract: This paper presents a multi-agent Deep Reinforcement Learning (DRL) framework for autonomous control and integration of renewable energy resources into smart power grid systems. In particular, the proposed framework jointly considers demand response (DR) and distributed energy management (DEM) for residential end-users. DR has a widely recognized potential for improving power grid stability and reliability, while at the same time reducing end-users' energy bills. However, conventional DR techniques come with several shortcomings, such as the inability to handle operational uncertainties while incurring end-user disutility, which prevent widespread adoption in real-world applications. The proposed framework addresses these shortcomings by implementing DR and DEM based on a real-time pricing strategy that is achieved using deep reinforcement learning. Furthermore, this framework enables the power grid service provider to leverage distributed energy resources (i.e., PV rooftop panels and battery storage) as dispatchable assets to support the smart grid during peak hours, thus achieving management of distributed energy resources. Simulation results based on the Deep Q-Network (DQN) demonstrate significant improvements of the 24-hour accumulative profit for both prosumers and the power grid service provider, as well as major reductions in the utilization of the power grid reserve generators.
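For readers unfamiliar with the Deep Q-Network mentioned above, the following is a minimal sketch of the standard bootstrapped target that DQN training regresses toward. The abstract does not describe the paper's state, action, or reward design, so the function name, the discount factor, and the toy numbers are all assumptions for illustration only.

```python
import numpy as np

def td_targets(rewards, next_q_values, dones, gamma=0.99):
    """Standard DQN target: r + gamma * max_a' Q(s', a'), with no bootstrap at episode end.

    rewards:       shape (batch,), e.g. a per-step profit signal (hypothetical)
    next_q_values: shape (batch, n_actions), target-network estimates for the next state
    dones:         shape (batch,), True where the episode terminated
    """
    rewards = np.asarray(rewards, dtype=float)
    next_q = np.asarray(next_q_values, dtype=float)
    dones = np.asarray(dones, dtype=bool)
    # Terminal transitions contribute only their immediate reward.
    bootstrap = np.where(dones, 0.0, next_q.max(axis=1))
    return rewards + gamma * bootstrap

# Toy batch of two transitions; the second ends the episode.
targets = td_targets(
    rewards=[1.0, 2.0],
    next_q_values=[[0.5, 1.0], [3.0, 2.0]],
    dones=[False, True],
    gamma=0.9,
)
print(targets)
```

In a multi-agent setting such as the one described, each agent would typically compute targets like these from its own observations and rewards, though the abstract does not specify how the agents are coupled.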

A Multi-Agent Deep Reinforcement Learning Approach for a Distributed Energy Marketplace in Smart Grids

Sep 23, 2020

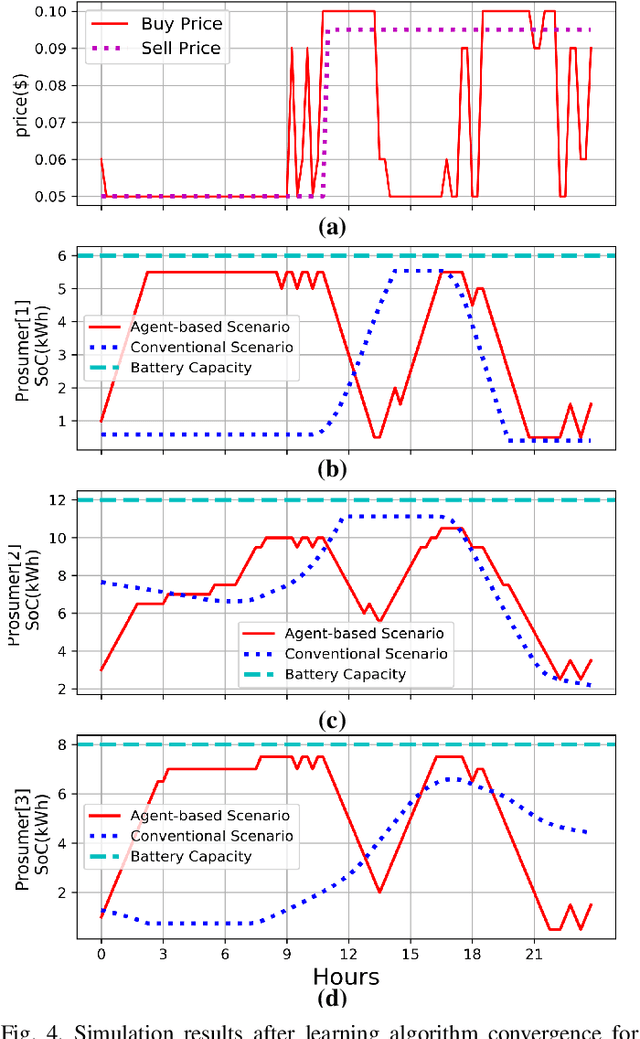

Abstract: This paper presents a Reinforcement Learning (RL) based energy market for a prosumer-dominated microgrid. The proposed market model facilitates a real-time and demand-dependent dynamic pricing environment, which reduces grid costs and improves the economic benefits for prosumers. Furthermore, this market model enables the grid operator to leverage prosumers' storage capacity as a dispatchable asset for grid support applications. Simulation results based on the Deep Q-Network (DQN) framework demonstrate significant improvements of the 24-hour accumulative profit for both prosumers and the grid operator, as well as major reductions in grid reserve power utilization.

Demand Responsive Dynamic Pricing Framework for Prosumer Dominated Microgrids using Multiagent Reinforcement Learning

Sep 23, 2020

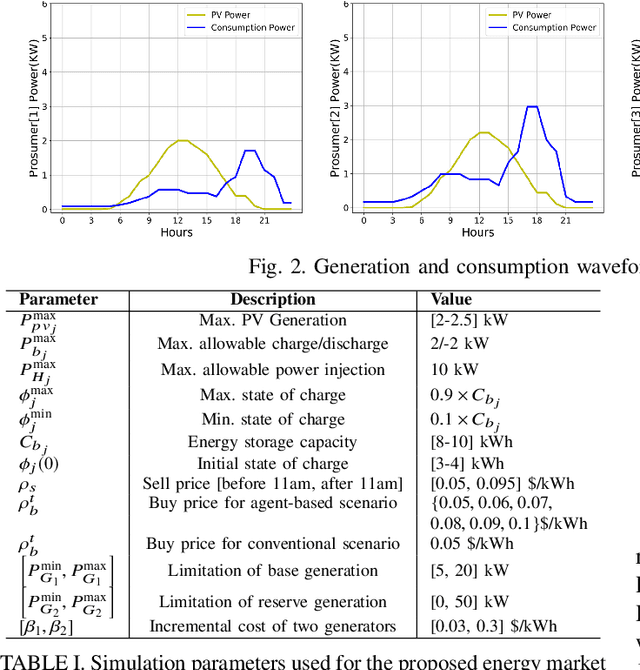

Abstract: Demand Response (DR) has a widely recognized potential for improving grid stability and reliability while reducing customers' energy bills. However, conventional DR techniques come with several shortcomings, such as the inability to handle operational uncertainties and the incurring of customer disutility, which impede their widespread adoption in real-world applications. This paper proposes a new multiagent Reinforcement Learning (RL) based decision-making environment for implementing a Real-Time Pricing (RTP) DR technique in a prosumer-dominated microgrid. The proposed technique addresses several shortcomings common to traditional DR methods and provides significant economic benefits to the grid operator and prosumers. To demonstrate its efficacy, the proposed DR method is compared to a baseline traditional operation scenario in a small-scale microgrid system. Finally, investigations on the use of prosumers' energy storage capacity in this microgrid highlight the advantages of the proposed method in establishing a balanced market setup.
