Arman Ghasemi

Combating Uncertainties in Wind and Distributed PV Energy Sources Using Integrated Reinforcement Learning and Time-Series Forecasting

Feb 27, 2023

Abstract: Renewable energy sources, such as wind and solar power, are increasingly being integrated into smart grid systems. However, compared to traditional energy resources, the unpredictability of renewable energy generation poses significant challenges for both electricity providers and utility companies. Furthermore, the large-scale integration of distributed energy resources (such as PV systems) creates new challenges for energy management in microgrids. To tackle these issues, we propose a novel framework with two objectives: (i) combating the uncertainty of renewable energy in the smart grid by leveraging time-series forecasting with Long Short-Term Memory (LSTM) solutions, and (ii) establishing a distributed and dynamic decision-making framework with multi-agent reinforcement learning using the Deep Deterministic Policy Gradient (DDPG) algorithm. The proposed framework addresses both objectives concurrently and integrates them fully, while considering both wholesale and retail markets, thereby enabling efficient energy management in the presence of uncertain and distributed renewable energy sources. Through extensive numerical simulations, we demonstrate that the proposed solution significantly improves the profit of load-serving entities (LSEs) by providing a more accurate wind generation forecast. Furthermore, our results demonstrate that households with PV and battery installations can increase their profits by using intelligent battery charge/discharge actions determined by the DDPG agents.

Distributed Energy Management and Demand Response in Smart Grids: A Multi-Agent Deep Reinforcement Learning Framework

Nov 29, 2022

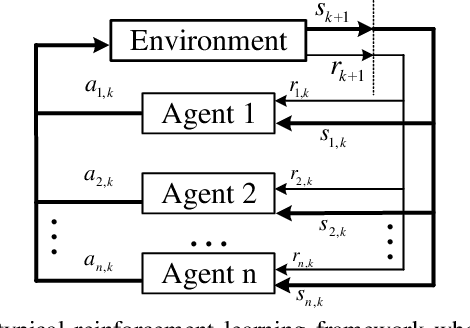

Abstract: This paper presents a multi-agent Deep Reinforcement Learning (DRL) framework for autonomous control and integration of renewable energy resources into smart power grid systems. In particular, the proposed framework jointly considers demand response (DR) and distributed energy management (DEM) for residential end-users. DR has a widely recognized potential for improving power grid stability and reliability while reducing end-users' energy bills. However, conventional DR techniques come with several shortcomings, such as the inability to handle operational uncertainties and the end-user disutility they incur, which prevent widespread adoption in real-world applications. The proposed framework addresses these shortcomings by implementing DR and DEM based on a real-time pricing strategy that is achieved using deep reinforcement learning. Furthermore, this framework enables the power grid service provider to leverage distributed energy resources (i.e., rooftop PV panels and battery storage) as dispatchable assets to support the smart grid during peak hours, thus achieving management of distributed energy resources. Simulation results based on the Deep Q-Network (DQN) demonstrate significant improvements in the 24-hour accumulative profit for both prosumers and the power grid service provider, as well as major reductions in the utilization of the power grid reserve generators.

A Multi-Agent Deep Reinforcement Learning Approach for a Distributed Energy Marketplace in Smart Grids

Sep 23, 2020

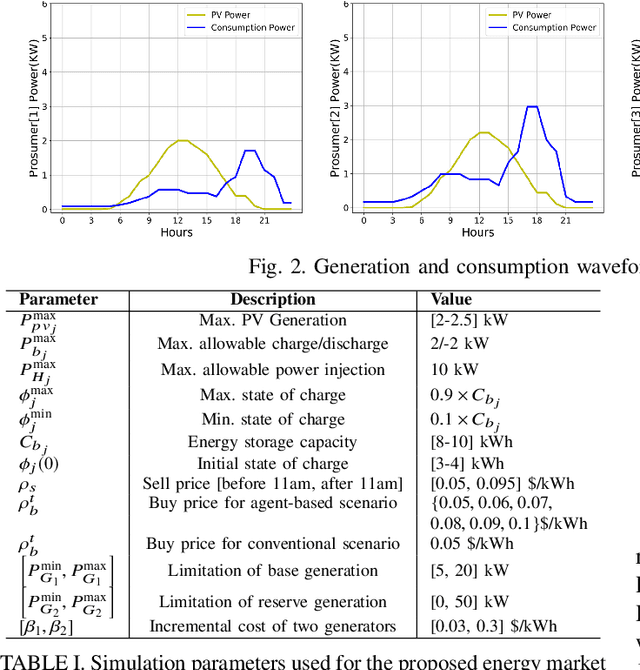

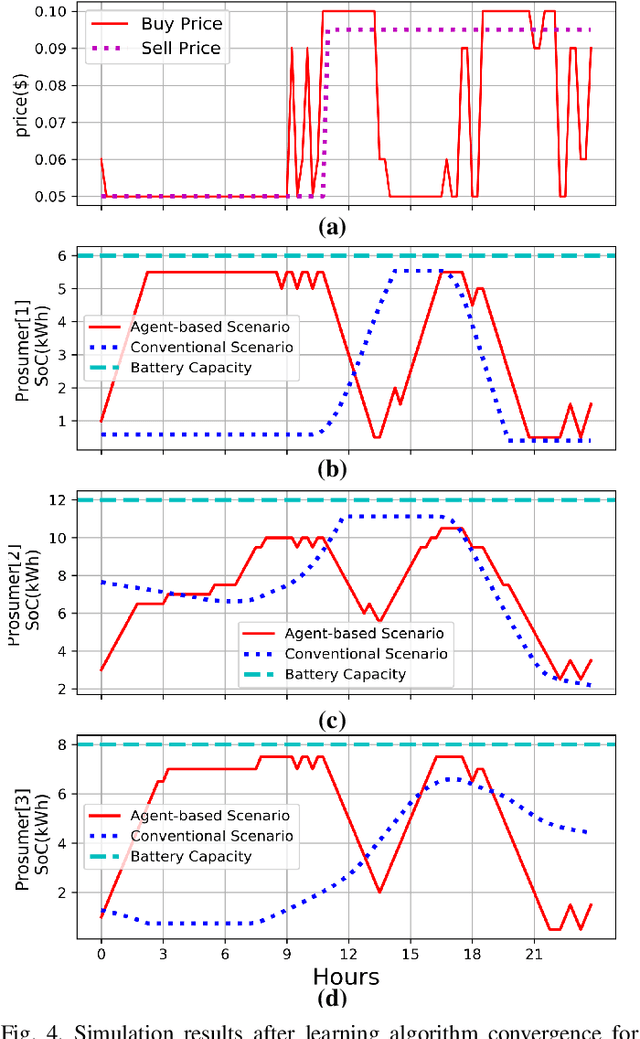

Abstract: This paper presents a Reinforcement Learning (RL) based energy market for a prosumer-dominated microgrid. The proposed market model facilitates a real-time and demand-dependent dynamic pricing environment, which reduces grid costs and improves the economic benefits for prosumers. Furthermore, this market model enables the grid operator to leverage prosumers' storage capacity as a dispatchable asset for grid support applications. Simulation results based on the Deep Q-Network (DQN) framework demonstrate significant improvements in the 24-hour accumulative profit for both prosumers and the grid operator, as well as major reductions in grid reserve power utilization.

Demand Responsive Dynamic Pricing Framework for Prosumer Dominated Microgrids using Multiagent Reinforcement Learning

Sep 23, 2020

Abstract: Demand Response (DR) has a widely recognized potential for improving grid stability and reliability while reducing customers' energy bills. However, conventional DR techniques come with several shortcomings, such as the inability to handle operational uncertainties and the customer disutility they incur, which impede their widespread adoption in real-world applications. This paper proposes a new multiagent Reinforcement Learning (RL) based decision-making environment for implementing a Real-Time Pricing (RTP) DR technique in a prosumer-dominated microgrid. The proposed technique addresses several shortcomings common to traditional DR methods and provides significant economic benefits to the grid operator and prosumers. To demonstrate its efficacy, the proposed DR method is compared to a baseline traditional operation scenario in a small-scale microgrid system. Finally, investigations into the use of prosumers' energy storage capacity in this microgrid highlight the advantages of the proposed method in establishing a balanced market setup.
