Ren Yuan

Enhanced Lung Cancer Survival Prediction using Semi-Supervised Pseudo-Labeling and Learning from Diverse PET/CT Datasets

Nov 25, 2024

Abstract: Objective: This study explores a semi-supervised learning (SSL) strategy based on pseudo-labeling, using diverse datasets to enhance lung cancer (LCa) survival prediction by analyzing Handcrafted and Deep Radiomic Features (HRFs/DRFs) extracted from PET/CT scans with Hybrid Machine Learning Systems (HMLSs). Methods: We collected PET and CT images of 199 LCa patients from The Cancer Imaging Archive (TCIA) and our local database, along with PET/CT images of 408 head and neck cancer (HNCa) patients from TCIA. From the segmented primary tumors, we extracted 215 HRFs using PySERA and 1024 DRFs using a 3D autoencoder, both within the ViSERA software. The supervised learning (SL) strategy employed HMLSs in which Principal Component Analysis (PCA) was linked with 4 classifiers, applied to both HRFs and DRFs. The SSL strategy expanded the dataset by adding the 408 HNCa cases, pseudo-labeled by a Random Forest algorithm, to the 199 LCa cases, and used the same HMLSs. Furthermore, PCA linked with 4 survival prediction algorithms was used for survival hazard ratio analysis. Results: The SSL strategy outperformed the SL strategy (p-value < 0.05), achieving an average accuracy of 0.85 with DRFs from PET and PCA + Multi-Layer Perceptron (MLP), compared with 0.65 for the SL strategy using DRFs from CT and PCA + K-Nearest Neighbor (KNN). Additionally, PCA linked with Component-wise Gradient Boosting Survival Analysis, applied to both HRFs and DRFs extracted from CT, achieved an average c-index of 0.80 with a log-rank p-value << 0.001, confirmed by external testing. Conclusions: Shifting from HRFs and SL to DRFs and SSL strategies, particularly when data points are limited, enables CT or PET alone to achieve high predictive performance.
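
The pseudo-labeling step of the SSL strategy can be sketched roughly as follows. This is a minimal, hypothetical illustration in plain Python, not the authors' pipeline: it assumes feature vectors have already been extracted, assumes the labeler is trained on the labeled LCa cohort, omits the PCA step, and swaps in a toy nearest-centroid classifier for the Random Forest used in the study. The function names `fit_centroids`, `predict`, and `pseudo_label_expand` are illustrative only.

```python
from statistics import mean


def fit_centroids(X, y):
    """Toy labeler: one mean feature vector per class.
    Hypothetical stand-in for the Random Forest used in the study."""
    centroids = {}
    for label in sorted(set(y)):
        rows = [x for x, lab in zip(X, y) if lab == label]
        # zip(*rows) iterates over feature columns of this class
        centroids[label] = [mean(col) for col in zip(*rows)]
    return centroids


def predict(centroids, X):
    """Assign each sample to the class whose centroid is nearest (squared Euclidean)."""
    def sq_dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return [min(centroids, key=lambda lab: sq_dist(x, centroids[lab])) for x in X]


def pseudo_label_expand(X_lab, y_lab, X_aux):
    """Pseudo-label the auxiliary cohort, then merge it with the labeled cohort.
    Returns new lists; the inputs are not modified."""
    labeler = fit_centroids(X_lab, y_lab)
    y_pseudo = predict(labeler, X_aux)
    return X_lab + X_aux, y_lab + y_pseudo


if __name__ == "__main__":
    # Hypothetical 2-feature toy data; labels 0/1 stand for two survival classes.
    X_lab = [[0.0, 0.0], [0.1, 0.2], [1.0, 1.0], [0.9, 1.1]]
    y_lab = [0, 0, 1, 1]
    X_aux = [[0.05, 0.1], [1.2, 0.95]]
    X_all, y_all = pseudo_label_expand(X_lab, y_lab, X_aux)
    print(len(X_all), y_all)  # prints: 6 [0, 0, 1, 1, 0, 1]
```

The expanded features and labels would then be passed to the downstream PCA + classifier HMLS. A practical version would more likely rely on library implementations of PCA and Random Forest than on this toy labeler.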

Tensor Radiomics: Paradigm for Systematic Incorporation of Multi-Flavoured Radiomics Features

Mar 12, 2022

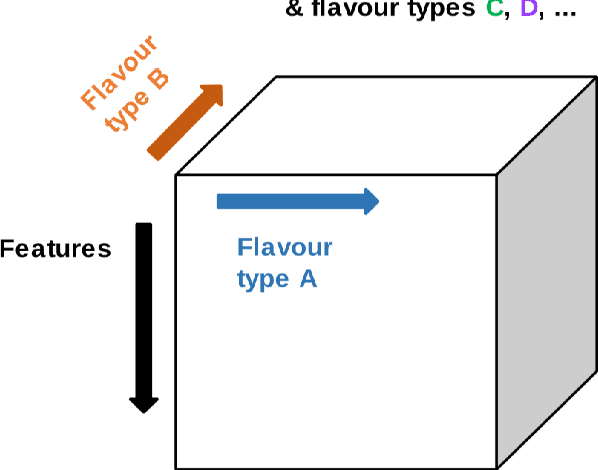

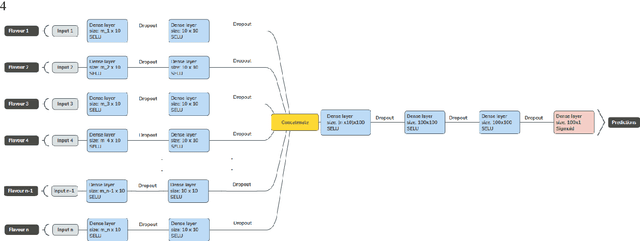

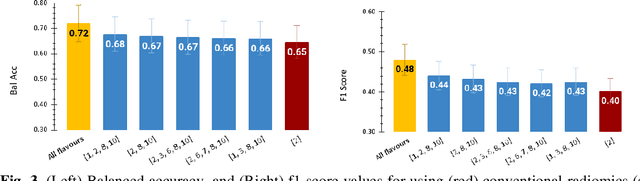

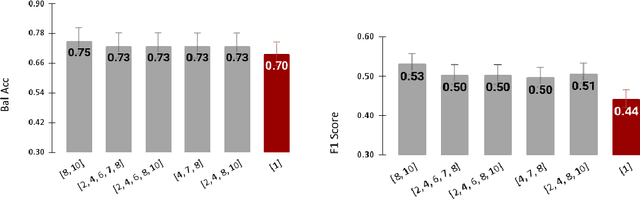

Abstract: Radiomics features (RFs) extract quantitative information from medical images toward the derivation of biomarkers for clinical tasks such as diagnosis, prognosis, or treatment response assessment. Different image discretization parameters (e.g. bin number or size), convolutional filters, segmentation perturbations, or multi-modality fusion levels can be used to generate radiomics features and, ultimately, signatures. Commonly, only one set of parameters is used, resulting in only one value, or flavour, for a given RF. We propose tensor radiomics (TR), in which tensors of features calculated with multiple combinations of parameters (i.e. flavours) are used to optimize the construction of radiomics signatures. We present examples of TR applied to PET/CT, MRI, and CT imaging, using machine learning (ML) or deep learning solutions and reproducibility analyses: (1) TR via varying bin sizes on CT images of lung cancer and PET/CT images of head and neck cancer (HNC) for overall survival prediction. A hybrid deep neural network, referred to as TR-Net, along with two ML-based flavour fusion methods, showed improved accuracy compared with regular radiomics features. (2) TR built from different segmentation perturbations and different bin sizes for classification of late-stage lung cancer response to first-line immunotherapy using CT images. TR improved the prediction of patient responses. (3) TR via multi-flavour radiomics features in MR imaging showed improved reproducibility compared with many single-flavour features. (4) TR via multiple PET/CT fusions in HNC. Flavours were built from different fusion methods, such as Laplacian pyramids and wavelet transforms. TR improved overall survival prediction. Our results suggest that the proposed TR paradigm has the potential to improve performance in different medical imaging tasks.
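
To make the idea of bin-size flavours in example (1) concrete, the sketch below computes two first-order features (histogram entropy and uniformity) of tumour intensities under several bin sizes, giving one value per flavour for each feature. This is an illustrative assumption, not TR-Net or the authors' feature extractor. It uses fixed bin-width discretization anchored at the ROI minimum, a flat list of voxel intensities in place of a segmented 3D volume, and hypothetical function names.

```python
import math
from collections import Counter


def discretize_fixed_bin_size(values, bin_size):
    """Map intensities to integer grey levels using a fixed bin width,
    counted from the minimum intensity in the ROI (an assumed convention)."""
    lo = min(values)
    return [math.floor((v - lo) / bin_size) for v in values]


def entropy(levels):
    """First-order entropy (bits) of the grey-level histogram: sum of p * log2(1/p)."""
    n = len(levels)
    return sum((c / n) * math.log2(n / c) for c in Counter(levels).values())


def uniformity(levels):
    """First-order uniformity: sum of squared histogram probabilities."""
    n = len(levels)
    return sum((c / n) ** 2 for c in Counter(levels).values())


FEATURES = {"entropy": entropy, "uniformity": uniformity}


def tensor_radiomics(values, bin_sizes):
    """Return {feature_name: [value for each bin-size flavour]}.
    Stacking these rows gives a features x flavours matrix, i.e. one
    slice of a TR tensor along the discretization axis."""
    return {
        name: [feature(discretize_fixed_bin_size(values, b)) for b in bin_sizes]
        for name, feature in FEATURES.items()
    }


if __name__ == "__main__":
    roi = [0.0, 1.0, 2.0, 3.0]  # hypothetical voxel intensities
    print(tensor_radiomics(roi, bin_sizes=[2.0, 1.0]))
    # prints: {'entropy': [1.0, 2.0], 'uniformity': [0.5, 0.25]}
```

Rather than fixing one bin size up front, the TR approach keeps every flavour and lets a downstream model (for example TR-Net or an ML-based flavour fusion method) learn how to combine them. Other axes, such as segmentation perturbations or PET/CT fusion methods, would add further dimensions to the tensor.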
