Raza Nowrozy

Embracing the Generative AI Revolution: Advancing Tertiary Education in Cybersecurity with GPT

Mar 18, 2024

Abstract: The rapid advancement of generative Artificial Intelligence (AI) technologies, particularly Generative Pre-trained Transformer (GPT) models such as ChatGPT, has the potential to significantly impact cybersecurity. In this study, we investigated the impact of GPTs, specifically ChatGPT, on tertiary education in cybersecurity, and provided recommendations for universities to adapt their curricula to meet the evolving needs of the industry. Our research highlighted the importance of understanding the alignment between GPT's "mental model" and human cognition, as well as the enhancement of GPT capabilities to human skills based on Bloom's taxonomy. By analyzing current educational practices and the alignment of curricula with industry requirements, we concluded that universities providing practical degrees like cybersecurity should align closely with industry demand and embrace the inevitable generative AI revolution, while applying stringent ethics oversight to safeguard responsible GPT usage. We proposed a set of recommendations focused on updating university curricula, promoting agility within universities, fostering collaboration between academia, industry, and policymakers, and evaluating and assessing educational outcomes.

GPT, Ontology, and CAABAC: A Tripartite Personalized Access Control Model Anchored by Compliance, Context and Attribute

Mar 13, 2024

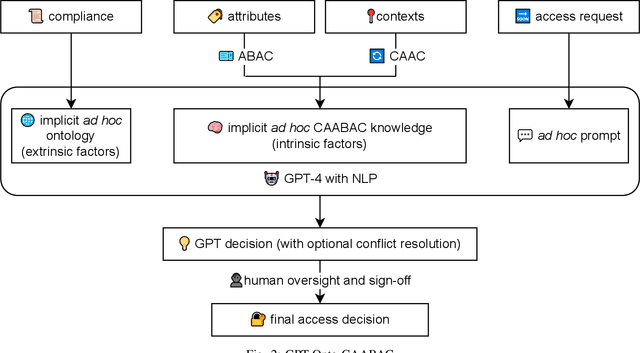

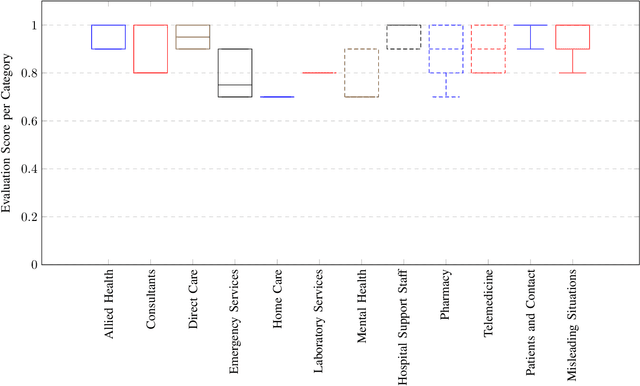

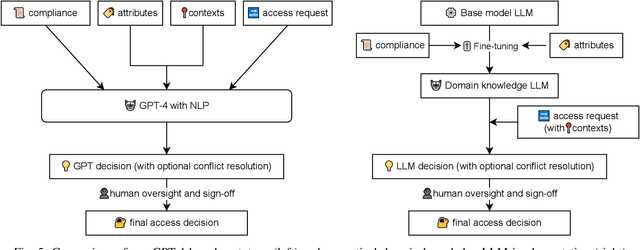

Abstract: As digital healthcare evolves, the security of electronic health records (EHR) becomes increasingly crucial. This study presents the GPT-Onto-CAABAC framework, integrating Generative Pre-trained Transformer (GPT), medical-legal ontologies, and Context-Aware Attribute-Based Access Control (CAABAC) to enhance EHR access security. Unlike traditional models, GPT-Onto-CAABAC dynamically interprets policies and adapts to changing healthcare and legal environments, offering customized access control solutions. Through empirical evaluation, this framework is shown to be effective in improving EHR security by accurately aligning access decisions with complex regulatory and situational requirements. The findings suggest its broader applicability in sectors where access control must meet stringent compliance and adaptability standards.

From COBIT to ISO 42001: Evaluating Cybersecurity Frameworks for Opportunities, Risks, and Regulatory Compliance in Commercializing Large Language Models

Feb 24, 2024

Abstract: This study investigated the integration readiness of four predominant cybersecurity Governance, Risk and Compliance (GRC) frameworks (NIST CSF 2.0, COBIT 2019, ISO 27001:2022, and the latest ISO 42001:2023) for the opportunities, risks, and regulatory compliance when adopting Large Language Models (LLMs), using qualitative content analysis and expert validation. Our analysis, with both LLMs and human experts in the loop, uncovered potential for LLM integration together with inadequacies in LLM risk oversight of those frameworks. Comparative gap analysis highlighted that the new ISO 42001:2023, specifically designed for Artificial Intelligence (AI) management systems, provided the most comprehensive facilitation for LLM opportunities, whereas COBIT 2019 aligned most closely with the impending European Union AI Act. Nonetheless, our findings suggested that all evaluated frameworks would benefit from enhancements to address the multifaceted risks associated with LLMs more effectively and comprehensively, indicating a critical and time-sensitive need for their continuous evolution. We propose integrating human-expert-in-the-loop validation processes as crucial for enhancing cybersecurity frameworks to support secure and compliant LLM integration, and discuss implications for the continuous evolution of cybersecurity GRC frameworks to support the secure integration of LLMs.
