Ravi Vaidyanathan

Evaluating Spoken Language as a Biomarker for Automated Screening of Cognitive Impairment

Jan 30, 2025

Abstract:Timely and accurate assessment of cognitive impairment is a major unmet need in populations at risk. Alterations in speech and language can be early predictors of Alzheimer's disease and related dementias (ADRD) before clinical signs of neurodegeneration. Voice biomarkers offer a scalable and non-invasive solution for automated screening. However, the clinical applicability of machine learning (ML) remains limited by challenges in generalisability, interpretability, and access to patient data to train clinically applicable predictive models. Using DementiaBank recordings (N=291, 64% female), we evaluated ML techniques for ADRD screening and severity prediction from spoken language. We validated model generalisability with pilot data collected in-residence from older adults (N=22, 59% female). Risk stratification and linguistic feature importance analysis enhanced the interpretability and clinical utility of predictions. For ADRD classification, a Random Forest applied to lexical features achieved a mean sensitivity of 69.4% (95% confidence interval (CI) = 66.4-72.5) and specificity of 83.3% (78.0-88.7). On real-world pilot data, this model achieved a mean sensitivity of 70.0% (58.0-82.0) and specificity of 52.5% (39.3-65.7). For severity prediction using Mini-Mental State Examination (MMSE) scores, a Random Forest Regressor achieved a mean absolute MMSE error of 3.7 (3.7-3.8), with comparable performance of 3.3 (3.1-3.5) on pilot data. Linguistic features associated with higher ADRD risk included increased use of pronouns and adverbs, greater disfluency, reduced analytical thinking, lower lexical diversity and fewer words reflecting a psychological state of completion. Our interpretable predictive modelling offers a novel approach for in-home integration with conversational AI to monitor cognitive health and triage higher-risk individuals, enabling earlier detection and intervention.
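For readers unfamiliar with the screening metrics reported in this abstract, the following is a minimal sketch of how sensitivity and specificity are computed from binary predictions. The labels and the function name are hypothetical and purely illustrative; they are not the study's data or code.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels.

    Convention assumed here: 1 = ADRD (positive), 0 = control (negative).
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed cases
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative label")
    sensitivity = tp / (tp + fn)  # share of true cases that were flagged
    specificity = tn / (tn + fp)  # share of controls correctly cleared
    return sensitivity, specificity


# Hypothetical labels for ten speakers (illustrative only).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.3f}, specificity={spec:.3f}")
```

In a screening setting, lower specificity (as reported on the pilot data) means more healthy individuals are flagged for follow-up, while sensitivity governs how many true cases are missed.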

An attention model to analyse the risk of agitation and urinary tract infections in people with dementia

Jan 18, 2021

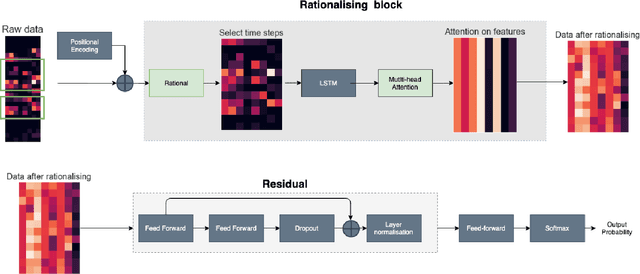

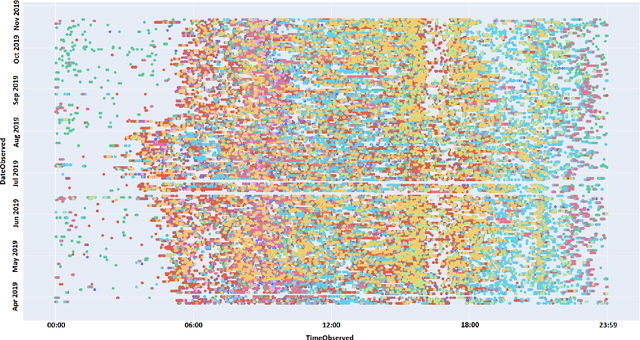

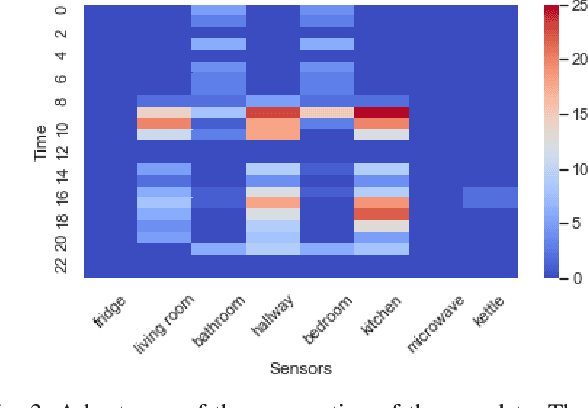

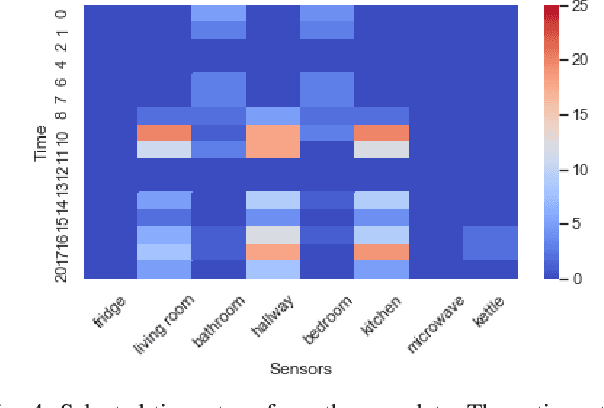

Abstract:Behavioural symptoms and urinary tract infections (UTI) are among the most common problems faced by people with dementia. One of the key challenges in the management of these conditions is early detection and timely intervention in order to reduce distress and avoid unplanned hospital admissions. Using in-home sensing technologies and machine learning models for sensor data integration and analysis provides opportunities to detect and predict clinically significant events and changes in health status. We have developed an integrated platform to collect in-home sensor data and performed an observational study to apply machine learning models for agitation and UTI risk analysis. We collected a large dataset from 88 participants with a mean age of 82 and a standard deviation of 6.5 (47 females and 41 males) to evaluate a new deep learning model that utilises attention and rational mechanisms. The proposed solution can process a large volume of data over a period of time, extract significant patterns in time-series data (i.e. attention), and use the extracted features and patterns to train risk analysis models (i.e. rational). The proposed model can explain its predictions by indicating which time-steps and features are used within a long time series. The model provides a recall of 91% and precision of 83% in detecting the risk of agitation and UTIs. This model can be used for early detection of conditions such as UTIs and for the management of neuropsychiatric symptoms such as agitation, in association with initial treatment and early intervention approaches. In our study, we have developed a set of clinical pathways for early interventions using the alerts generated by the proposed model, and a clinical monitoring team has been set up to use the platform and respond to the alerts according to the created intervention plans.

Emotive Response to a Hybrid-Face Robot and Translation to Consumer Social Robots

Dec 08, 2020

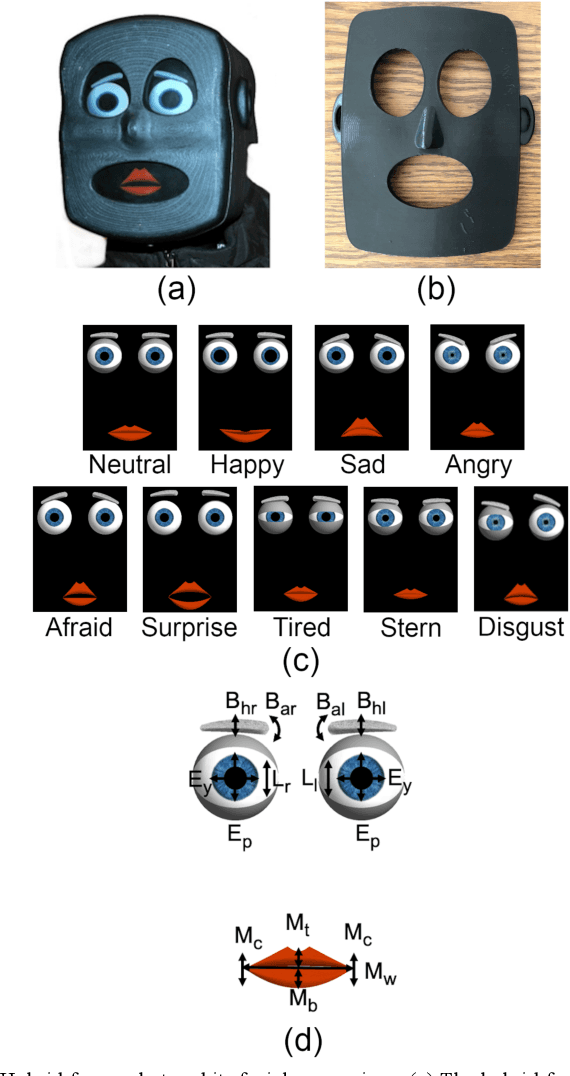

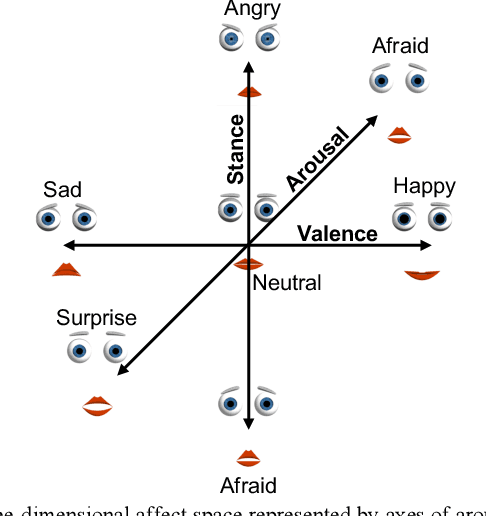

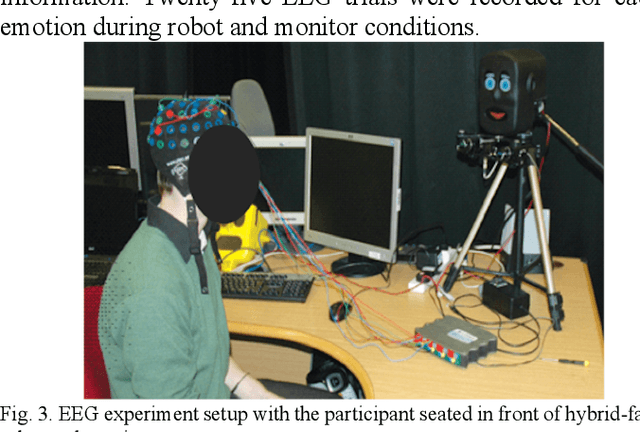

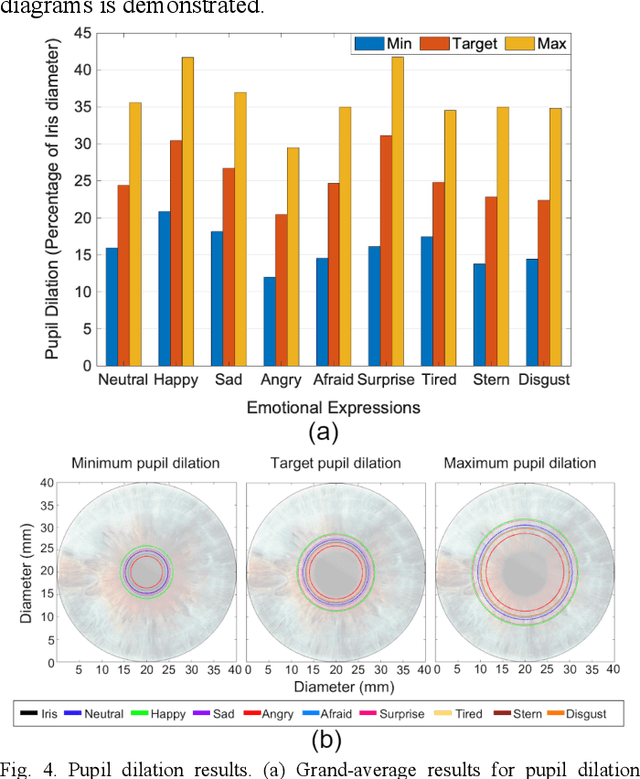

Abstract:We introduce the conceptual formulation, design, fabrication, control and commercial translation with IoT connection of a hybrid-face social robot, and validate human emotional response to its affective interactions. The hybrid-face robot integrates a 3D printed faceplate and a digital display to simplify conveyance of complex facial movements while providing the impression of three-dimensional depth for natural interaction. We map the space of potential emotions of the robot to specific facial feature parameters and characterise the recognisability of the humanoid hybrid-face robot's archetypal facial expressions. We introduce pupil dilation as an additional degree of freedom for conveyance of emotive states. Human interaction experiments demonstrate the ability to effectively convey emotion from the hybrid-robot face to human observers, both by mapping their neurophysiological electroencephalography (EEG) response to perceived emotional information and through interviews. Results show that the main hybrid-face robotic expressions can be discriminated with recognition rates above 80% and evoke a human emotive response similar to that of actual human faces, as measured by the face-specific N170 event-related potentials in EEG. The hybrid-face robot concept has been modified, implemented, and released in the commercial IoT robotic platform Miko (My Companion), an affective robot with facial and conversational features currently in use for human-robot interaction in children by Emotix Inc. We demonstrate that human EEG responses to Miko emotions are comparable to neurophysiological responses for actual human facial recognition. Finally, interviews show above 90% expression recognition rates in our commercial robot. We conclude that simplified hybrid-face abstraction conveys emotions effectively and enhances human-robot interaction.

Model Predictive Control for Human-Centred Lower Limb Robotic Assistance

Nov 10, 2020

Abstract:Loss of mobility or balance resulting from neural trauma is a critical consideration in public health. Robotic exoskeletons hold great potential for rehabilitation and assisted movement, yet optimal assist-as-needed (AAN) control remains unresolved given pathological variance among patients. We introduce a model predictive control (MPC) architecture for lower limb exoskeletons centred around a fuzzy logic algorithm (FLA) identifying modes of assistance based on human involvement. Assistance modes are: 1) passive for human relaxed and robot dominant, 2) active-assist for human cooperation with the task, and 3) safety in the case of human resistance to the robot. Human torque is estimated from electromyography (EMG) signals prior to joint motions, enabling advanced prediction of torque by the MPC and selection of assistance mode by the FLA. The controller is demonstrated in hardware with three subjects on a 1-DOF knee exoskeleton tracking a sinusoidal trajectory with the human relaxed, assistive, and resistive. Experimental results show quick and appropriate transfers among the assistance modes and satisfactory assistive performance in each mode. Results illustrate an objective approach to lower limb robotic assistance through on-the-fly transition between modes of movement, providing a new level of human-robot synergy for mobility assist and rehabilitation.
