Rasoul Mohammadi Nasiri

Error-Aware Spatial Ensembles for Video Frame Interpolation

Jul 25, 2022

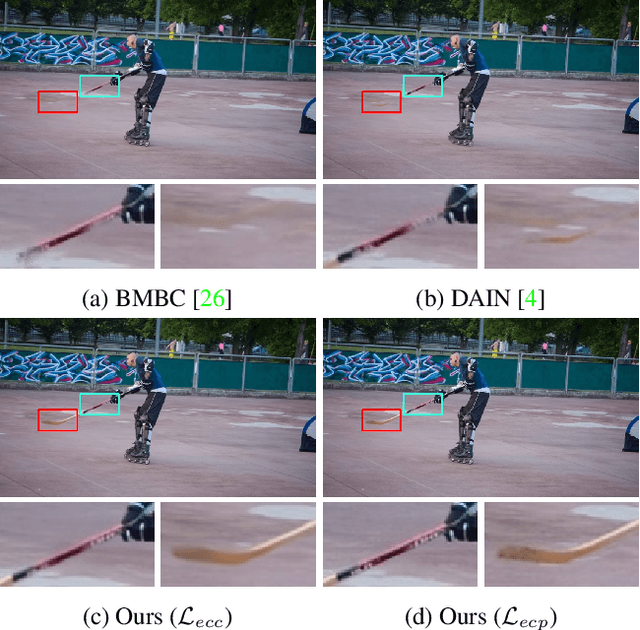

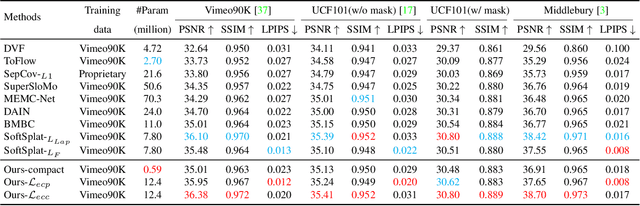

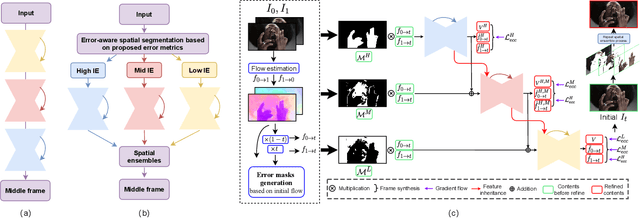

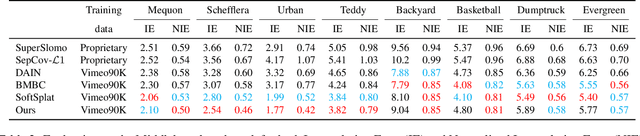

Abstract: Video frame interpolation (VFI) algorithms have improved considerably in recent years due to unprecedented progress in both data-driven algorithms and their implementations. Recent research has introduced advanced motion estimation or novel warping methods as the means to address challenging VFI scenarios. However, none of the published VFI works considers the spatially non-uniform characteristics of the interpolation error (IE). This work introduces such a solution. By closely examining the correlation between optical flow and IE, the paper proposes novel error prediction metrics that partition the middle frame into distinct regions corresponding to different IE levels. Building upon this IE-driven segmentation, and through the use of novel error-controlled loss functions, it introduces an ensemble of spatially adaptive interpolation units that progressively processes and integrates the segmented regions. This spatial ensemble results in an effective and computationally attractive VFI solution. Extensive experimentation on popular video interpolation benchmarks indicates that the proposed solution outperforms the current state-of-the-art (SOTA) in applications of current interest.
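The abstract does not spell out the error prediction metrics, so the following is only a minimal sketch of the general idea of partitioning a frame into regions by expected error level. It assumes, purely for illustration, that optical-flow magnitude serves as the error proxy; the function name `partition_by_flow_magnitude` and the threshold values are hypothetical and are not taken from the paper.

```python
import math

def partition_by_flow_magnitude(flow, thresholds):
    """Label each pixel with a region index based on optical-flow magnitude.

    flow: H x W grid (list of rows) of (dx, dy) displacement tuples.
    thresholds: ascending magnitude cut-offs (hypothetical values, an
        assumption of this sketch rather than the paper's metric).
    Returns an H x W grid of integer labels in 0..len(thresholds),
    where a higher label means larger motion.
    """
    labels = []
    for row in flow:
        label_row = []
        for dx, dy in row:
            magnitude = math.hypot(dx, dy)
            # The label is the number of thresholds the magnitude reaches.
            label_row.append(sum(magnitude >= t for t in thresholds))
        labels.append(label_row)
    return labels

# Toy 2 x 2 flow field: static, moderate, near-static, and fast pixels.
flow = [
    [(0.0, 0.0), (3.0, 4.0)],
    [(0.5, 0.5), (12.0, 5.0)],
]
labels = partition_by_flow_magnitude(flow, thresholds=[1.0, 10.0])
print(labels)
```

In a spatial ensemble of the kind the abstract describes, each label could then be routed to a different interpolation unit; how the paper actually assigns and merges regions is not stated here.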

All at Once: Temporally Adaptive Multi-Frame Interpolation with Advanced Motion Modeling

Jul 23, 2020

Abstract: Recent advances in high refresh rate displays, as well as the increased interest in high-rate slow motion and frame up-conversion, fuel the demand for efficient and cost-effective multi-frame video interpolation solutions. In that regard, inserting multiple frames between consecutive video frames is of paramount importance for the consumer electronics industry. State-of-the-art methods are iterative solutions that interpolate one frame at a time. They introduce temporal inconsistencies and clearly noticeable visual artifacts. Departing from the state-of-the-art, this work introduces a true multi-frame interpolator. It utilizes a pyramidal style network in the temporal domain to complete the multi-frame interpolation task in one shot. A novel flow estimation procedure using a relaxed loss function and an advanced, cubic-based motion model are also used to further boost interpolation accuracy when complex motion segments are encountered. Results on the Adobe240 dataset show that the proposed method generates visually pleasing, temporally consistent frames and outperforms the current best off-the-shelf method by 1.57 dB in PSNR with a model that is 8 times smaller and 7.7 times faster. The proposed method can be easily extended to interpolate a large number of new frames while remaining efficient because of the one-shot mechanism.
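To put the reported PSNR gain in context, here is a small sketch of the standard PSNR definition, together with the mean-squared-error ratio implied by a given gain in decibels. The helper names and the 8-bit peak value of 255 are assumptions of this sketch, not details from the paper.

```python
import math

def mse(reference, estimate):
    """Mean squared error between two equal-length pixel sequences."""
    if len(reference) != len(estimate):
        raise ValueError("inputs must have the same length")
    return sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)

def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB; peak=255 assumes 8-bit pixels."""
    err = mse(reference, estimate)
    if err == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(peak ** 2 / err)

def mse_ratio_for_gain(gain_db):
    """Factor by which MSE shrinks for a PSNR gain of gain_db decibels."""
    return 10.0 ** (gain_db / 10.0)

reference = [10, 20, 30, 40]
estimate = [12, 18, 33, 39]
print(psnr(reference, estimate))
print(mse_ratio_for_gain(1.57))
```

Because PSNR is logarithmic in MSE, a 1.57 dB gain at a fixed peak value corresponds to an MSE that is about 30% lower.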
