Ramin Bashizade

Accelerating Markov Random Field Inference with Uncertainty Quantification

Aug 02, 2021

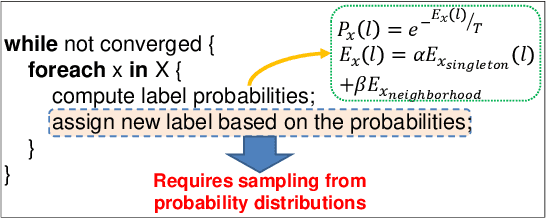

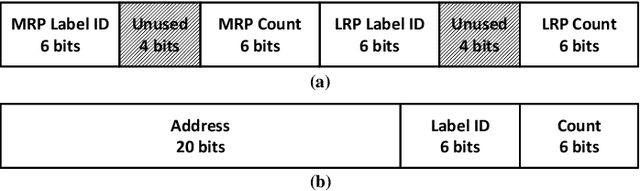

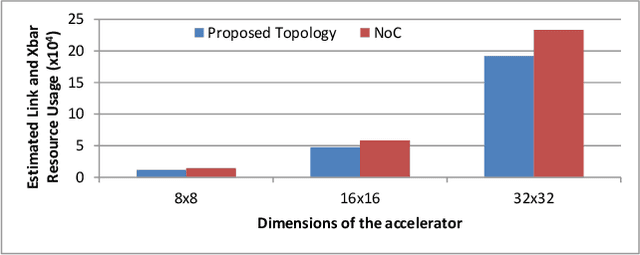

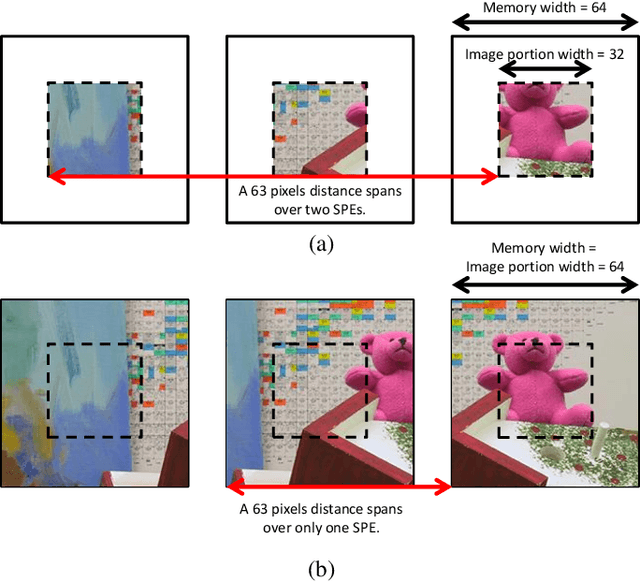

Abstract: Statistical machine learning has widespread application in various domains. These methods include probabilistic algorithms, such as Markov Chain Monte Carlo (MCMC), which rely on generating random numbers from probability distributions. These algorithms are computationally expensive on conventional processors, yet their statistical properties, namely interpretability and uncertainty quantification (UQ), make them an attractive alternative to deep learning. Hardware specialization can therefore be adopted to address the shortcomings of conventional processors in running these applications. In this paper, we propose a high-throughput accelerator for inference on Markov Random Fields (MRFs), a powerful model for representing a wide range of applications, using MCMC with Gibbs sampling. We propose a tiled architecture that takes advantage of near-memory computing and of memory optimizations tailored to the semantics of MRFs. Additionally, we propose a novel hybrid on-chip/off-chip memory system and logging scheme to efficiently support UQ. This memory system design is not specific to MRF models; it is applicable to other applications that use probabilistic algorithms, and it dramatically reduces off-chip memory bandwidth requirements. We implemented an FPGA prototype of our proposed architecture using high-level synthesis tools and achieved a 146 MHz clock frequency for an accelerator with 32 function units on an Intel Arria 10 FPGA. Compared to prior work on FPGAs, our accelerator achieves a 26X speedup. Furthermore, our proposed memory system and logging scheme to support UQ reduce off-chip bandwidth by 71% for two applications. ASIC analysis in 15 nm shows that our design with 2048 function units running at 3 GHz outperforms GPU implementations of motion estimation and stereo vision on an Nvidia RTX 2080 Ti by 120X-210X, while occupying only 7.7% of the area.
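For readers unfamiliar with the workload, the following is a minimal software sketch of Gibbs sampling on a grid MRF. It is not the accelerator's design: the binary Ising-style model, the function name `gibbs_mrf`, and the parameters `beta` (neighbour coupling) and `eta` (data term) are assumptions chosen for illustration. The per-pixel marginals it returns are one simple form of the uncertainty information that a UQ-capable system would need to log.

```python
import math
import random


def gibbs_mrf(obs, beta=1.0, eta=1.5, sweeps=200, burn_in=50, seed=0):
    """Gibbs sampling on a binary (+1/-1) grid MRF with 4-neighbour coupling.

    Assumed energy: E(x) = -beta * sum_{i~j} x_i x_j - eta * sum_i x_i * obs_i.
    Returns, per pixel, the fraction of post-burn-in samples equal to +1,
    which estimates the marginal P(x_i = +1 | obs).
    """
    rng = random.Random(seed)
    h, w = len(obs), len(obs[0])
    x = [row[:] for row in obs]           # start the chain at the observation
    counts = [[0] * w for _ in range(h)]  # how often each pixel was +1

    for sweep in range(sweeps):
        for i in range(h):
            for j in range(w):
                # Sum of the current labels of the 4-connected neighbours.
                nb = 0
                if i > 0:
                    nb += x[i - 1][j]
                if i < h - 1:
                    nb += x[i + 1][j]
                if j > 0:
                    nb += x[i][j - 1]
                if j < w - 1:
                    nb += x[i][j + 1]
                # Log-odds of x_ij = +1 versus -1 given everything else.
                logit = 2.0 * (beta * nb + eta * obs[i][j])
                p_plus = 1.0 / (1.0 + math.exp(-logit))
                x[i][j] = 1 if rng.random() < p_plus else -1
        if sweep >= burn_in:
            for i in range(h):
                for j in range(w):
                    if x[i][j] == 1:
                        counts[i][j] += 1

    kept = sweeps - burn_in
    return [[c / kept for c in row] for row in counts]


# Usage: a 5x5 image of +1 with one corrupted pixel in the centre.
noisy = [[1] * 5 for _ in range(5)]
noisy[2][2] = -1
marginals = gibbs_mrf(noisy)
```

Each pixel update depends only on its four neighbours, which is the locality property that makes this kind of model a candidate for tiled, near-memory hardware.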

Beyond Application End-Point Results: Quantifying Statistical Robustness of MCMC Accelerators

Mar 05, 2020

Abstract: Statistical machine learning often uses probabilistic algorithms, such as Markov Chain Monte Carlo (MCMC), to solve a wide range of problems. Probabilistic computations, often considered too slow on conventional processors, can be accelerated with specialized hardware by exploiting parallelism and optimizing the design using various approximation techniques. Current methodologies for evaluating the correctness of probabilistic accelerators are often incomplete, mostly focusing only on end-point result quality ("accuracy"). It is important for hardware designers and domain experts to look beyond end-point "accuracy" and be aware of the impact of hardware optimizations on other statistical properties. This work takes a first step towards defining metrics and a methodology for quantitatively evaluating the correctness of probabilistic accelerators beyond end-point result quality. We propose three pillars of statistical robustness: 1) sampling quality, 2) convergence diagnostic, and 3) goodness of fit. We apply our framework to a representative MCMC accelerator and surface design issues that cannot be exposed using only application end-point result quality. Applying the framework to guide design space exploration shows that statistical robustness comparable to floating-point software can be achieved by slightly increasing the bit representation, without requiring floating-point hardware.
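As an illustration of the second pillar, here is a minimal sketch of one widely used convergence diagnostic, the Gelman-Rubin statistic (R-hat). The abstract does not say which diagnostic the framework uses, so this choice is an assumption, as are the function name `gelman_rubin` and the synthetic chains.

```python
import random
import statistics


def gelman_rubin(chains):
    """Gelman-Rubin R-hat for equal-length chains of a scalar quantity.

    Values close to 1 suggest the chains agree; values well above 1 suggest
    they have not converged to the same distribution.
    """
    n = len(chains[0])
    chain_means = [statistics.fmean(c) for c in chains]
    # W: average within-chain variance.
    within = statistics.fmean([statistics.variance(c) for c in chains])
    # B: between-chain variance, scaled by the chain length.
    between = n * statistics.variance(chain_means)
    # Pooled estimate of the target variance.
    pooled = (n - 1) / n * within + between / n
    return (pooled / within) ** 0.5


rng = random.Random(0)
# Four chains drawn from the same distribution: R-hat should be near 1.
mixed = [[rng.gauss(0.0, 1.0) for _ in range(500)] for _ in range(4)]
# Four chains stuck around two different means: R-hat should be large.
stuck = [[rng.gauss(mu, 1.0) for _ in range(500)] for mu in (0.0, 0.0, 5.0, 5.0)]

rhat_mixed = gelman_rubin(mixed)
rhat_stuck = gelman_rubin(stuck)
```

A check of this kind can flag chains that disagree with one another even when an application's end-point result looks acceptable.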
