Rahul Chatterjee

Compact: Approximating Complex Activation Functions for Secure Computation

Sep 09, 2023

Abstract: Secure multi-party computation (MPC) techniques can be used to provide data privacy when users query deep neural network (DNN) models hosted on a public cloud. State-of-the-art MPC techniques can be directly leveraged for DNN models that use simple activation functions (AFs) such as ReLU. However, DNN model architectures designed for cutting-edge applications often use complex and highly non-linear AFs. Designing efficient MPC techniques for such complex AFs is an open problem. Towards this, we propose Compact, which produces piece-wise polynomial approximations of complex AFs to enable their efficient use with state-of-the-art MPC techniques. Compact neither requires nor imposes any restriction on model training and results in near-identical model accuracy. We extensively evaluate Compact on four different machine-learning tasks with DNN architectures that use the popular complex AFs SiLU, GeLU, and Mish. Our experimental results show that Compact incurs negligible accuracy loss compared to DNN-specific approaches for handling complex non-linear AFs. We also incorporate Compact in two state-of-the-art MPC libraries for privacy-preserving inference and demonstrate that Compact provides 2x-5x speedup in computation compared to the state-of-the-art approximation approach for non-linear functions, while providing similar or better accuracy for DNN models with a large number of hidden layers.
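To make the idea of a piece-wise polynomial approximation concrete, here is a minimal sketch that fits one low-degree polynomial per interval to SiLU and measures the worst-case error. It is not the Compact procedure itself, and it does not model MPC: the interval range, the number of pieces, the polynomial degree, and the helper names `fit_piecewise` and `eval_piecewise` are all assumptions chosen for illustration.

```python
import numpy as np

def silu(x):
    """SiLU activation: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))

def fit_piecewise(f, lo, hi, pieces, degree, samples=200):
    """Fit one least-squares polynomial per equal-width interval of [lo, hi]."""
    edges = np.linspace(lo, hi, pieces + 1)
    coeffs = []
    for a, b in zip(edges[:-1], edges[1:]):
        xs = np.linspace(a, b, samples)
        coeffs.append(np.polyfit(xs, f(xs), degree))
    return edges, coeffs

def eval_piecewise(x, edges, coeffs):
    """Evaluate the approximation; outside [lo, hi] use SiLU's asymptotes."""
    x = np.asarray(x, dtype=float)
    # Assumed fallback: 0 far to the left, identity far to the right.
    out = np.where(x < edges[0], 0.0, x)
    idx = np.clip(np.searchsorted(edges, x, side="right") - 1, 0, len(coeffs) - 1)
    inside = (x >= edges[0]) & (x <= edges[-1])
    for i, c in enumerate(coeffs):
        mask = inside & (idx == i)
        out = np.where(mask, np.polyval(c, x), out)
    return out

# Hypothetical settings: 8 cubic pieces on [-8, 8].
edges, coeffs = fit_piecewise(silu, -8.0, 8.0, pieces=8, degree=3)
grid = np.linspace(-10.0, 10.0, 2001)
max_err = np.max(np.abs(eval_piecewise(grid, edges, coeffs) - silu(grid)))
print(f"max abs error on [-10, 10]: {max_err:.5f}")
```

The sketch only needs interval selection plus polynomial evaluation, which is the general shape of computation the abstract says can be used efficiently with existing MPC techniques. How Compact chooses the pieces and degrees is not described in the abstract.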

Invisible Perturbations: Physical Adversarial Examples Exploiting the Rolling Shutter Effect

Nov 30, 2020

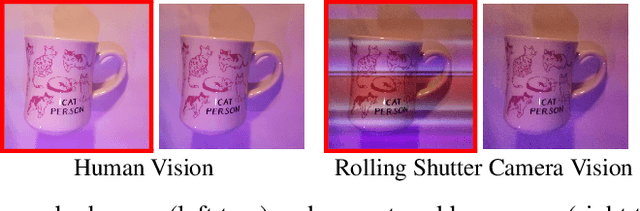

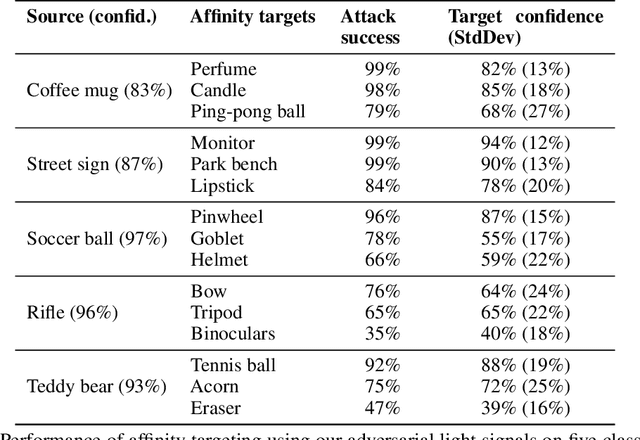

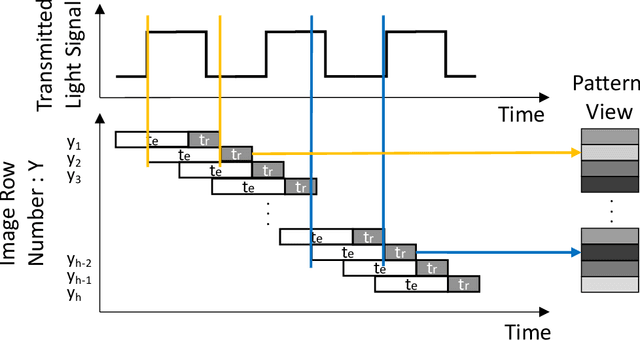

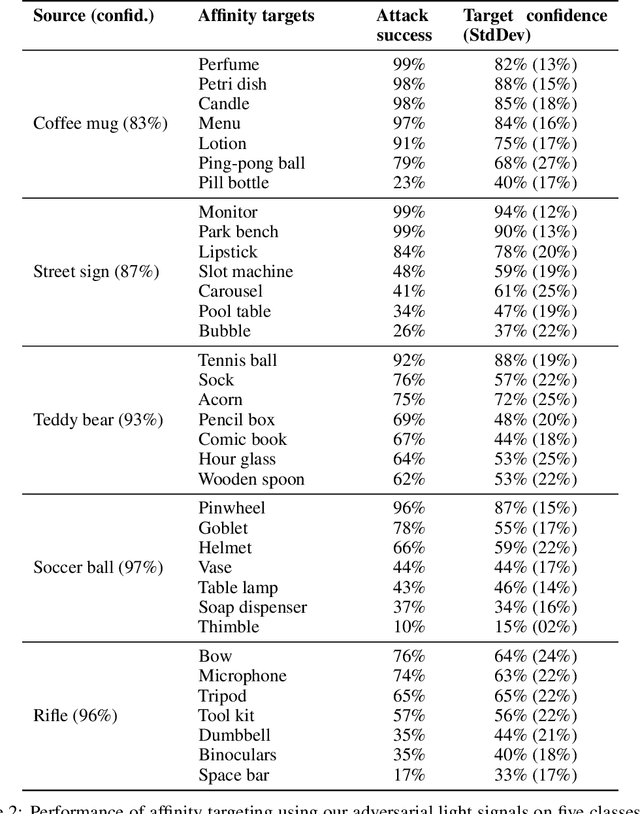

Abstract: Physical adversarial examples for camera-based computer vision have so far been achieved through visible artifacts: a sticker on a Stop sign, colorful borders around eyeglasses, or a 3D-printed object with a colorful texture. An implicit assumption here is that the perturbations must be visible so that a camera can sense them. By contrast, we contribute a procedure to generate, for the first time, physical adversarial examples that are invisible to human eyes. Rather than modifying the victim object with visible artifacts, we modify the light that illuminates the object. We demonstrate how an attacker can craft a modulated light signal that adversarially illuminates a scene and causes targeted misclassifications on a state-of-the-art ImageNet deep learning model. Concretely, we exploit the radiometric rolling shutter effect in commodity cameras to create precise striping patterns that appear on images. To human eyes, the object simply appears illuminated, but the camera creates an image with stripes that cause ML models to output the attacker-desired classification. We conduct a range of simulation and physical experiments with LEDs, demonstrating targeted attack rates up to 84%.
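The striping mechanism can be illustrated with a toy simulation. The sketch below assumes a simplified rolling-shutter model in which each image row starts its exposure slightly later than the previous one, so a light source that switches on and off quickly contributes a different amount of light to each row. The row readout time, exposure time, modulation frequency, and the function names are hypothetical values picked for illustration. This is not the paper's attack procedure, which additionally optimizes the light signal to induce a chosen misclassification.

```python
import numpy as np

def row_gains(height, row_time, exposure, freq, duty=0.5, steps=64):
    """Fraction of each row's exposure window during which the light is on.

    Assumed model: row i starts exposing at i * row_time and integrates
    light for `exposure` seconds; the light is a square wave at `freq` Hz.
    """
    starts = np.arange(height) * row_time
    offsets = np.linspace(0.0, exposure, steps, endpoint=False)
    t = starts[:, None] + offsets[None, :]      # sample times per row
    light_on = ((t * freq) % 1.0) < duty        # square-wave light signal
    return light_on.mean(axis=1)

def apply_rolling_shutter(image, gains):
    """Scale every row of an H x W x C image by its per-row light gain."""
    return image * gains[:, None, None]

# Hypothetical camera and light parameters.
image = np.full((480, 640, 3), 0.8)             # uniformly lit gray scene
gains = row_gains(480, row_time=30e-6, exposure=100e-6, freq=1000.0)
striped = apply_rolling_shutter(image, gains)
print("row gain range:", gains.min(), gains.max())
```

With these assumed numbers the light completes a cycle roughly every 33 rows, so the output shows horizontal bands even though a 1 kHz flicker is far too fast for a human observer to notice.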
