Rafia Rahim

Distilling Stereo Networks for Performant and Efficient Leaner Networks

Mar 24, 2025

Abstract: Knowledge distillation has been popular in vision for tasks like classification and segmentation; however, little work has been done on distilling state-of-the-art stereo matching methods despite their range of applications. One reason is the inherent complexity of these networks, where a typical network is composed of multiple two- and three-dimensional modules. In this work, we systematically combine the insights from state-of-the-art stereo methods with general knowledge-distillation techniques to develop a joint framework for stereo network distillation with competitive results and faster inference. Moreover, we show, via a detailed empirical analysis, that distilling knowledge from a stereo network requires careful design of the complete distillation pipeline, from the backbone to the right selection of distillation points and corresponding loss functions. This results in student networks that are not only leaner and faster but also give excellent performance. For instance, our student network, while performing better than performance-oriented methods like PSMNet [1], CFNet [2], and LEAStereo [3] on the benchmark SceneFlow dataset, is 8x, 5x, and 8x faster, respectively. Furthermore, compared to speed-oriented methods with inference times under 100 ms, our student networks perform better than all the tested methods. In addition, our student network also shows better generalization capabilities when tested on unseen datasets like ETH3D and Middlebury.
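As a rough illustration of what selecting "distillation points and corresponding loss functions" can involve, the sketch below adds a feature-mimicking term at named points to a supervised disparity loss. The function names, the L1/MSE choices, the point name `cost_volume`, and the weight `alpha` are illustrative assumptions, not the losses or points used in the paper.

```python
def l1_loss(pred, target):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def mse_loss(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def distillation_loss(student_disp, gt_disp, student_feats, teacher_feats, alpha=0.5):
    """Supervised disparity loss plus a feature-mimicking term.

    student_feats / teacher_feats map a (hypothetical) distillation-point
    name to a flattened feature vector of matching length.
    """
    task = l1_loss(student_disp, gt_disp)
    mimic = sum(mse_loss(student_feats[k], teacher_feats[k]) for k in teacher_feats)
    return task + alpha * mimic


# Toy usage with a single assumed distillation point.
loss = distillation_loss(
    student_disp=[1.0, 2.0],
    gt_disp=[1.5, 2.0],
    student_feats={"cost_volume": [0.0, 1.0]},
    teacher_feats={"cost_volume": [0.0, 2.0]},
)
print(loss)
```

In a real pipeline the feature vectors would be tensors taken from matching layers of the teacher and student, and the student features may need a projection layer when channel counts differ.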

LeanStereo: A Leaner Backbone based Stereo Network

Mar 24, 2025

Abstract: Recently, stereo matching methods based on end-to-end deep networks have gained popularity, mainly because of their performance. However, this improvement in performance comes at the cost of increased computational and memory bandwidth requirements, thus necessitating specialized hardware (GPUs); even then, these methods have large inference times compared to classical methods. This limits their applicability in real-world settings, where high-accuracy stereo methods with reasonable inference time are desired. To this end, we propose a fast end-to-end stereo matching method. The majority of this speedup comes from integrating a leaner backbone. To recover the performance lost because of the leaner backbone, we propose to use a cost volume based on learned attention weights, combined with a LogL1 loss for stereo matching. Using the LogL1 loss not only improves the overall performance of the proposed network but also leads to faster convergence. We do a detailed empirical evaluation of different design choices and show that our method requires 4x fewer operations and is also about 9 to 14x faster than state-of-the-art methods like ACVNet [1], LEAStereo [2], and CFNet [3] while giving comparable performance.

* 8 pages, 4 figures
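The abstract does not define the LogL1 loss. The sketch below assumes one plausible form, the mean of `log(1 + |d - d_gt|)` over pixels, purely to show how a logarithm dampens large disparity errors relative to plain L1. Both the formula and the function names are assumptions, not the paper's definition.

```python
import math


def l1_loss(pred, target):
    """Plain mean absolute disparity error."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def log_l1_loss(pred, target):
    """Assumed LogL1 form: mean of log(1 + |pred - target|)."""
    return sum(math.log1p(abs(p - t)) for p, t in zip(pred, target)) / len(pred)


# One small error and one large outlier error.
pred = [10.0, 50.0]
gt = [10.5, 20.0]
print(l1_loss(pred, gt), log_l1_loss(pred, gt))
```

Under this assumed form, the outlier contributes far less to the loss than it does under L1, while small errors are weighted almost linearly.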

Separable Convolutions for Optimizing 3D Stereo Networks

Aug 23, 2021

Abstract: Deep learning based 3D stereo networks give superior performance compared to 2D networks and conventional stereo methods. However, this improvement in performance comes at the cost of increased computational complexity, making these networks impractical for real-world applications. Specifically, these networks use 3D convolutions as the major workhorse to refine and regress disparities. In this work, we first show that these 3D convolutions consume up to 94% of overall network operations and act as a major bottleneck. Next, we propose a set of "plug-&-run" separable convolutions to reduce the number of parameters and operations. When integrated with existing state-of-the-art stereo networks, these convolutions lead to up to a 7x reduction in the number of operations and up to a 3.5x reduction in parameters without compromising performance. In fact, these convolutions improve performance in the majority of cases.
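To make the source of the savings concrete, the sketch below counts weights for a standard 3D convolution and for a depthwise-plus-pointwise factorization. This is the common depthwise-separable scheme, assumed here for illustration; the abstract does not specify which separable variants the paper proposes. Biases are ignored.

```python
def conv3d_params(c_in, c_out, k=3):
    """Weights in a standard k x k x k 3D convolution (no bias)."""
    return k ** 3 * c_in * c_out


def separable_conv3d_params(c_in, c_out, k=3):
    """Weights in a depthwise k x k x k conv followed by a 1x1x1 pointwise conv."""
    depthwise = k ** 3 * c_in      # one k^3 filter per input channel
    pointwise = c_in * c_out       # 1x1x1 channel mixing
    return depthwise + pointwise


def reduction_factor(c_in, c_out, k=3):
    """How many times fewer weights the separable layer uses."""
    return conv3d_params(c_in, c_out, k) / separable_conv3d_params(c_in, c_out, k)


print(conv3d_params(32, 32), separable_conv3d_params(32, 32))
print(reduction_factor(32, 32))
```

For a 32-to-32-channel layer with 3x3x3 kernels this gives 27648 versus 1888 weights. With stride 1 and matching output size, multiply-accumulate counts scale by the same factor. These per-layer figures are not directly comparable to the whole-network reductions quoted in the abstract, which also include layers that are left unchanged.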

MobileStereoNet: Towards Lightweight Deep Networks for Stereo Matching

Aug 22, 2021

Abstract: Recent methods in stereo matching have continuously improved accuracy using deep models. This gain, however, comes with a large increase in computation cost, such that the network may not fit even on a moderate GPU. This becomes a problem when the model needs to be deployed on resource-limited devices. To address this, we propose two light models for stereo vision with reduced complexity and without sacrificing accuracy. Depending on the dimension of the cost volume, we design a 2D and a 3D model with encoder-decoders built from 2D and 3D convolutions, respectively. To this end, we leverage 2D MobileNet blocks and extend them to 3D for stereo vision applications. In addition, a new cost volume is proposed to boost the accuracy of the 2D model, making it perform close to 3D networks. Experiments show that the proposed 2D/3D networks effectively reduce the computational expense (27%/95% and 72%/38% fewer parameters/operations in 2D and 3D models, respectively) while upholding the accuracy. Our code is available at https://github.com/cogsys-tuebingen/mobilestereonet.

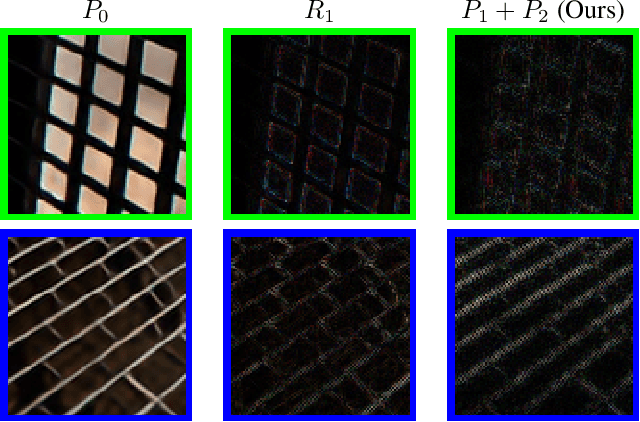

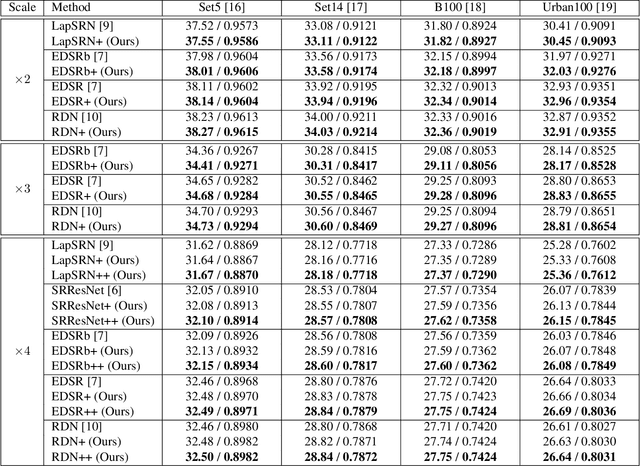

Improving Super-Resolution Methods via Incremental Residual Learning

Aug 21, 2018

Abstract: Recently, deep Convolutional Neural Networks (CNNs) have shown promising performance in accurately reconstructing a high resolution (HR) image from its low resolution (LR) counterpart. However, recent state-of-the-art methods operate primarily on the LR image for memory efficiency, and we show that this comes at the cost of performance. Furthermore, because the spatial dimensions of the input and output of such networks do not match, it is not possible to learn residuals in image space; we show that learning residuals in image space leads to performance enhancement. To this end, we propose a novel Incremental Residual Learning (IRL) framework to solve the above-mentioned issues. In IRL, a set of branches, i.e., arbitrary image-to-image networks, are trained sequentially, where each branch takes spatially upsampled higher dimensional feature maps as input and predicts the residuals of all previous branches combined. We plug recent state-of-the-art methods into the IRL framework as base networks and demonstrate consistent performance enhancement through extensive experiments on public benchmark datasets, setting a new state of the art for super-resolution. Compared to the base networks, our method incurs no extra memory overhead, as only one branch is trained at a time. Furthermore, because our method is trained to learn residuals, the complete set of branches is trained in only 20% of the time required by the base network.
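The sequential scheme can be pictured with the bookkeeping below: each branch's training target is whatever the base prediction and all earlier branches still leave unexplained. This is a toy sketch on flat lists of pixel values; the function name and the fixed branch outputs are illustrative assumptions, not the paper's implementation.

```python
def incremental_residual_targets(hr, base, branch_outputs):
    """Return the residual target seen by each branch and the final estimate.

    hr:             ground-truth high-resolution pixels
    base:           initial estimate (e.g. the base network's output)
    branch_outputs: what each branch ends up predicting, in training order
    """
    current = list(base)
    targets = []
    for out in branch_outputs:
        # The branch is trained to predict what is still missing.
        targets.append([h - c for h, c in zip(hr, current)])
        # Its prediction is then added to the running estimate.
        current = [c + o for c, o in zip(current, out)]
    return targets, current


targets, estimate = incremental_residual_targets(
    hr=[10.0, 20.0],
    base=[8.0, 18.0],
    branch_outputs=[[1.0, 1.0], [0.5, 0.5]],
)
print(targets, estimate)
```

Because earlier branches are already fixed when a later one is fitted, only one branch needs to be held in training at a time, which matches the memory argument in the abstract.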

End-to-end Trained CNN Encode-Decoder Networks for Image Steganography

Nov 20, 2017

Abstract: All existing image steganography methods use manually crafted features to hide binary payloads in cover images. This leads to small payload capacity and image distortion. Here we propose a convolutional neural network based encoder-decoder architecture for embedding images as payload. To this end, we make the following three major contributions: (i) we propose a deep learning based generic encoder-decoder architecture for image steganography; (ii) we introduce a new loss function that ensures joint end-to-end training of the encoder-decoder networks; (iii) we perform an extensive empirical evaluation of the proposed architecture on a range of challenging publicly available datasets (MNIST, CIFAR10, PASCAL-VOC12, ImageNet, LFW) and report state-of-the-art payload capacity at high PSNR and SSIM values.
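For readers unfamiliar with the PSNR metric mentioned above, a minimal sketch of the standard definition follows, applied to a cover image and its stego version as flat lists of 8-bit pixel values. The function and variable names are illustrative; the abstract does not describe the paper's evaluation code.

```python
import math


def psnr(reference, distorted, max_val=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val^2 / MSE)."""
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


cover = [100, 100, 100, 100]
stego = [101, 99, 101, 99]   # every pixel off by one intensity level
print(psnr(cover, stego))
```

A higher PSNR means the stego image is closer to the cover image, so a high value at large payload capacity indicates that the embedding introduces little visible distortion.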
