Rachitesh Kumar

Strategically-Robust Learning Algorithms for Bidding in First-Price Auctions

Feb 12, 2024

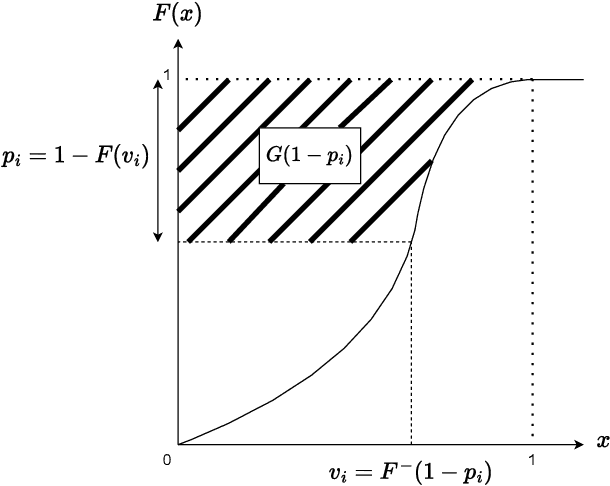

Abstract: Learning to bid in repeated first-price auctions is a fundamental problem at the interface of game theory and machine learning, which has seen a recent surge in interest due to the transition of display advertising to first-price auctions. In this work, we propose a novel concave formulation for pure-strategy bidding in first-price auctions, and use it to analyze natural Gradient-Ascent-based algorithms for this problem. Importantly, our analysis goes beyond regret, which was the typical focus of past work, and also accounts for the strategic backdrop of online-advertising markets where bidding algorithms are deployed -- we prove that our algorithms cannot be exploited by a strategic seller and that they incentivize truth-telling for the buyer. Concretely, we show that our algorithms achieve $O(\sqrt{T})$ regret when the highest competing bids are generated adversarially, and show that no online algorithm can do better. We further prove that the regret improves to $O(\log T)$ when the competition is stationary and stochastic. Moving beyond regret, we show that a strategic seller cannot exploit our algorithms to extract more revenue on average than is possible under the optimal mechanism, i.e., the seller cannot do much better than posting the monopoly reserve price in each auction. Finally, we prove that our algorithm is also incentive compatible -- it is a (nearly) dominant strategy for the buyer to report her values truthfully to the algorithm as a whole.

Robust Budget Pacing with a Single Sample

Feb 03, 2023

Abstract: Major Internet advertising platforms offer budget pacing tools as a standard service for advertisers to manage their ad campaigns. Given the inherent non-stationarity of an advertiser's value and of competing advertisers' values over time, a commonly used approach is to learn a target expenditure plan that specifies a target spend as a function of time, and then run a controller that tracks this plan. This raises the question: how many historical samples are required to learn a good expenditure plan? We study this question by considering an advertiser repeatedly participating in $T$ second-price auctions, where the tuple of her value and the highest competing bid is drawn from an unknown time-varying distribution. The advertiser seeks to maximize her total utility subject to her budget constraint. Prior work has shown the sufficiency of $T\log T$ samples per distribution to achieve the optimal $O(\sqrt{T})$-regret. We dramatically improve on this state of the art and show that just one sample per distribution is enough to achieve the near-optimal $\tilde O(\sqrt{T})$-regret, while still being robust to noise in the sampling distributions.
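To make the "controller that tracks a target expenditure plan" idea concrete, here is a minimal sketch of a generic dual-gradient pacing controller in second-price auctions. It is not the algorithm from the paper: the function name `pace_budget`, the bid-shading rule `value / (1 + multiplier)`, the fixed `step_size`, and the assumption that the plan is supplied as a per-round list `target_plan` are all illustrative choices.

```python
def pace_budget(values, competing_bids, target_plan, budget, step_size):
    """Track a per-round target spend with a dual multiplier (illustrative sketch).

    values, competing_bids, target_plan: equal-length sequences of floats.
    Returns (total utility, total spend).
    """
    multiplier = 0.0      # dual variable for the budget constraint (hypothetical name)
    remaining = budget
    utility = 0.0
    for value, competing, target in zip(values, competing_bids, target_plan):
        # Shade the bid by the multiplier and never bid more than the remaining budget.
        bid = min(value / (1.0 + multiplier), remaining)
        spend = 0.0
        if bid > competing:
            # Second-price rule: the winner pays the highest competing bid.
            spend = competing
            utility += value - competing
            remaining -= spend
        # Raise the multiplier after overspending the target, lower it otherwise.
        multiplier = max(0.0, multiplier + step_size * (spend - target))
    return utility, budget - remaining
```

Because the bid is capped at the remaining budget and a win requires the bid to exceed the competing bid, total spend in this sketch can never exceed `budget`.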

Online Resource Allocation under Horizon Uncertainty

Jun 27, 2022

Abstract: We study stochastic online resource allocation: a decision maker needs to allocate limited resources to stochastically-generated sequentially-arriving requests in order to maximize reward. Motivated by practice, we consider a data-driven setting in which requests are drawn independently from a distribution that is unknown to the decision maker. Online resource allocation and its special cases have been studied extensively in the past, but these previous results crucially and universally rely on a practically-untenable assumption: the total number of requests (the horizon) is known to the decision maker in advance. In many applications, such as revenue management and online advertising, the number of requests can vary widely because of fluctuations in demand or user traffic intensity. In this work, we develop online algorithms that are robust to horizon uncertainty. In sharp contrast to the known-horizon setting, we show that no algorithm can achieve a constant asymptotic competitive ratio that is independent of the horizon uncertainty. We then introduce a novel algorithm that combines dual mirror descent with a carefully-chosen target consumption sequence and prove that it achieves a bounded competitive ratio. Our algorithm is near-optimal in the sense that its competitive ratio attains the optimal rate of growth when the horizon uncertainty grows large.

Single-Leg Revenue Management with Advice

Feb 18, 2022

Abstract: Single-leg revenue management is a foundational problem of revenue management that has been particularly impactful in the airline and hotel industries: given $n$ units of a resource, e.g. flight seats, and a stream of sequentially-arriving customers segmented by fares, what is the optimal online policy for allocating the resource? Previous work focused on designing algorithms when forecasts are available, which are not robust to inaccuracies in the forecast, or online algorithms with worst-case performance guarantees, which can be too conservative in practice. In this work, we look at the single-leg revenue management problem through the lens of the algorithms-with-advice framework, which attempts to optimally incorporate advice/predictions about the future into online algorithms. In particular, we characterize the Pareto frontier that captures the tradeoff between consistency (performance when advice is accurate) and competitiveness (performance when advice is inaccurate) for every advice. Moreover, we provide an online algorithm that always achieves performance on this Pareto frontier. We also study the class of protection level policies, which is the most widely-deployed technique for single-leg revenue management: we provide an algorithm to incorporate advice into protection levels that optimally trades off consistency and competitiveness. Moreover, we empirically evaluate the performance of these algorithms on synthetic data. We find that our algorithm for protection level policies performs remarkably well on most instances, even if it is not guaranteed to be on the Pareto frontier in theory.
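For readers unfamiliar with protection level policies, the following is a minimal sketch of the classical mechanism: a request at a given fare is accepted only if the remaining capacity exceeds the number of units reserved for higher fares. It does not implement the advice-augmented algorithm from the paper. The function name `run_protection_levels` and the `protected` mapping (fare to units reserved for strictly higher fares) are hypothetical, and the protection levels are assumed to be given rather than computed.

```python
def run_protection_levels(arrivals, capacity, protected):
    """Apply a fixed protection level policy to a stream of fare requests.

    arrivals: sequence of fares, one unit requested per arrival.
    capacity: number of units available at the start.
    protected: dict mapping each fare to the units reserved for higher fares
               (0 for the highest fare). Assumed given, not optimized here.
    Returns (total revenue, list of accepted fares).
    """
    remaining = capacity
    revenue = 0.0
    accepted = []
    for fare in arrivals:
        # Accept only if selling this unit leaves the reserved units untouched.
        if remaining > protected[fare]:
            remaining -= 1
            revenue += fare
            accepted.append(fare)
    return revenue, accepted


# Example: 3 seats, one seat protected from the low fare of 100.
revenue, accepted = run_protection_levels(
    arrivals=[100, 100, 100, 300],
    capacity=3,
    protected={300: 0, 100: 1},
)
```

In this example the third low-fare request is rejected because only the protected seat remains, and that seat is later sold at the high fare.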
